Bias detection in computer vision (CV) aims to find and eliminate unfair biases that can lead to inaccurate or discriminatory outputs from computer vision systems.

Computer vision has achieved remarkable results, especially in recent years, and now matches or outperforms humans on some tasks. However, CV systems depend heavily on the data they are trained on and can learn to amplify the bias within that data. It has therefore become critically important to identify and mitigate bias.

This article explores the key types of bias in computer vision, the techniques used to detect and mitigate them, and the essential tools and best practices for building fairer CV systems. Let’s get started.

Bias Detection in Computer Vision: A Guide to Types and Origins

Artificial intelligence (AI) bias detection generally refers to detecting systematic errors or prejudices in AI models that amplify societal biases, leading to unfair or discriminatory outcomes. While bias in AI systems is a well-established research area, bias in computer vision has not received as much attention.

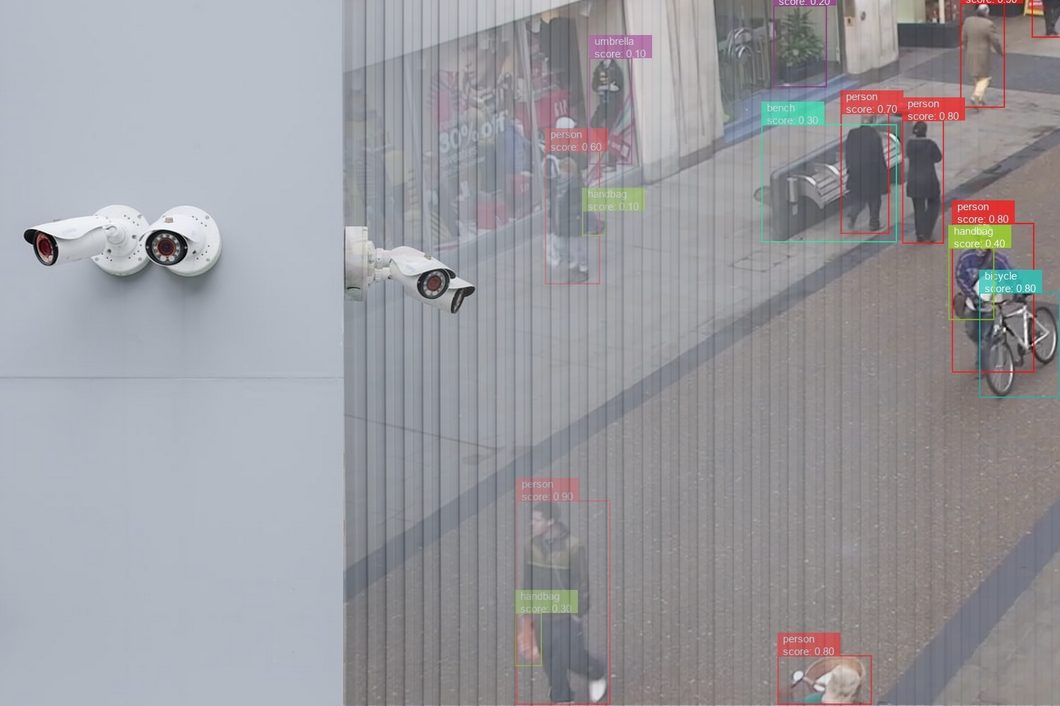

This is concerning given the vast amount of visual data used in machine learning today and the heavy reliance of modern deep learning techniques on this data for tasks like object detection and image classification. Biases in computer vision data can manifest in concerning ways, leading to potential discrimination in real-world applications like targeted advertising or law enforcement.

Understanding the types of bias that can corrupt CV models is the first step toward bias detection and mitigation. Note that the categorization of visual dataset bias can vary between sources.

This section lists the most common bias types in visual datasets for computer vision tasks, using the framework outlined here.

Selection Bias

Selection bias (also called sample bias) occurs when the way images are chosen for a dataset introduces imbalances that don’t reflect the real world. This means the dataset may over- or underrepresent certain groups or situations, leading to a potentially unfair model.

Certain kinds of images are more likely to be selected when collecting large-scale benchmark datasets, because these datasets depend on images gathered from readily available online sources with existing societal biases, or on automated scraping and filtering methods.

This makes it crucial to know how to detect sample bias within these datasets to ensure fairer models. Here are a few examples:

- Caltech101: Car pictures are mostly taken from the side.

- ImageNet: Car images skew toward racing cars.

While such imbalances may seem inconsequential, selection bias becomes much more critical when applied to images of humans.

Under-representation of diverse groups can lead to models that misclassify or misidentify individuals based on protected characteristics like gender or ethnicity, with real-world consequences.

Studies have found that the error rate for dark-skinned individuals can be 18 times higher than that for light-skinned individuals in some commercial gender classification algorithms.
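To make this kind of disparity concrete, the sketch below computes per-group error rates and their ratio from evaluation records. The records, group names, and counts are made up for illustration (chosen so the ratio comes out near 18) and are not taken from any study; `error_rate_by_group` is a hypothetical helper.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: error_rate} from (group, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

# Hypothetical evaluation records: (skin-tone group, true gender, predicted gender).
records = (
    [("lighter", "F", "F")] * 98 + [("lighter", "F", "M")] * 2
    + [("darker", "F", "F")] * 64 + [("darker", "F", "M")] * 36
)

rates = error_rate_by_group(records)
disparity_ratio = rates["darker"] / rates["lighter"]
print(rates, round(disparity_ratio, 2))
```

Reporting the ratio alongside the raw rates is a design choice: a ratio alone hides whether the absolute error is 0.2% or 20%.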

Facial recognition is one of the areas affected by sampling bias, because error rates can differ depending on the data the algorithm was trained on. Such technology therefore requires much more care, especially in high-impact applications like law enforcement. However, it is worth noting that although class imbalance has a significant impact, it does not explain every disparity in the performance of machine learning algorithms.

Another example is autonomous driving systems, since it is very challenging to collect a dataset that covers every possible scene and situation a car might face.

Framing Bias

Framing bias refers to how images are captured, composed, and edited in a visual dataset, which influences what a computer vision model learns. This bias encompasses the impact of visual elements like angle, lighting, and cropping, and of technical choices such as augmentation during image collection. Importantly, framing bias differs from selection bias: it concerns how subjects are depicted rather than which subjects are included.

One example of framing bias is capture bias. Research indicates that overweight individuals can be depicted very differently in images, with headless pictures occurring far more frequently than for individuals who are not overweight.

These kinds of images often find their way into large datasets used to train CV systems, such as image search engines.

Human decisions are also influenced by how things are framed, which is why framing is a widely used marketing strategy.

For example, a customer will choose a bottle of milk labeled 80% fat-free over one labeled 20% fat, even though they convey the same thing.

Framing bias in image search can lead to results that perpetuate harmful stereotypes, even without explicit search terms. For example, a search for a general occupation like “construction worker” might return results with gender imbalances in representation. Regardless of whether the algorithm itself is biased or simply reflects existing biases, the result amplifies negative representations. This underscores the importance of bias detection in CV models.

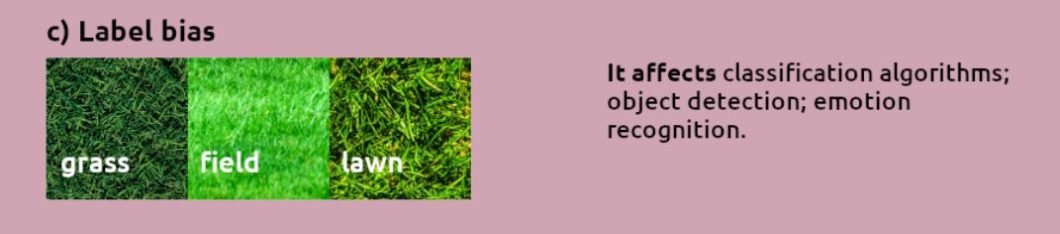

Label Bias

Labeled data is essential for supervised learning, and the quality of those labels is crucial for any machine learning model, especially in computer vision. Labeling errors and biases can be quite common in today’s datasets because of their complexity and volume, which makes detecting bias within them challenging.

We can define label bias as the difference between the labels assigned to images and their ground truth. This includes errors or inconsistencies in how visual data is categorized. It can happen when labels don’t reflect the true content of the image, or when the label categories themselves are vague or misleading.

This becomes particularly problematic with human-related images. For example, label bias can include negative set bias, where labels fail to represent the full diversity of a category, such as a binary feature that divides people into white and non-white/people of color.

To address challenges like racial bias, using specific visual attributes or measurable properties (like skin reflectance) is often more accurate than subjective categories like race. A classification algorithm trained on biased labels will likely reinforce those biases when used on new data. This highlights the importance of bias detection early in the lifecycle of visual data.
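The definition of label bias given above (assigned labels versus ground truth) can be checked directly when a trusted reference labeling exists for a sample of images. The sketch below is a minimal illustration under that assumption; the groups, labels, and the `disagreement_by_group` helper are hypothetical.

```python
from collections import defaultdict

def disagreement_by_group(items):
    """Return {group: share of items whose assigned label differs from the reference}."""
    totals = defaultdict(int)
    mismatches = defaultdict(int)
    for group, assigned, reference in items:
        totals[group] += 1
        mismatches[group] += assigned != reference  # True counts as 1
    return {g: mismatches[g] / totals[g] for g in totals}

# Hypothetical audit sample: (group, assigned label, reference label).
items = [
    ("group_a", "doctor", "doctor"),
    ("group_a", "nurse", "nurse"),
    ("group_a", "doctor", "doctor"),
    ("group_a", "nurse", "nurse"),
    ("group_b", "nurse", "doctor"),
    ("group_b", "nurse", "nurse"),
    ("group_b", "nurse", "doctor"),
    ("group_b", "doctor", "doctor"),
]

label_disagreement = disagreement_by_group(items)
print(label_disagreement)
```

A large gap between groups suggests the labeling errors are systematic rather than random noise.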

Visual Data Life Cycle

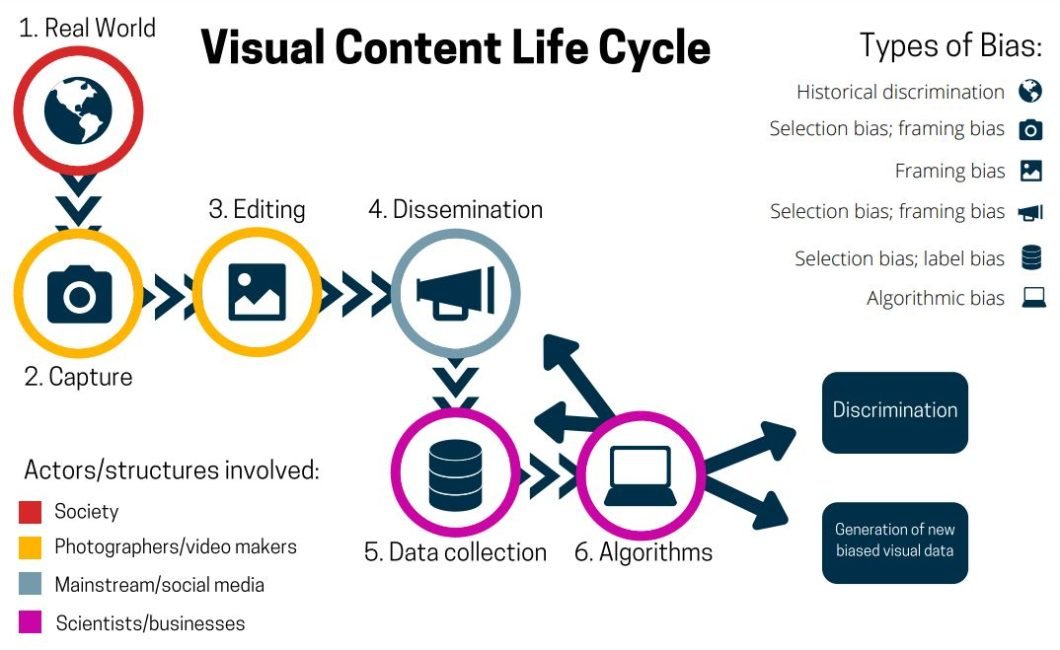

Understanding how to detect bias at its source is crucial. The lifecycle of visual content offers a helpful framework for this. It shows that bias can be introduced or amplified at multiple stages.

The lifecycle shows the potential for biases like capture bias (camera angles influencing perception). Other biases can also occur during the lifecycle of visual content, according to the processes shown in the illustration. These include availability bias (using easily available data) and automation bias (automating the labeling and/or collection process).

This guide covers the major types, as other biases are usually subcategories of them. These biases often interact and overlap because different kinds of bias can co-occur, making bias detection even more important.

Bias Detection Techniques in Computer Vision

Detecting bias in visual datasets is a critical step toward building fair and trustworthy CV systems. Researchers have developed a range of techniques to uncover these biases and support the creation of more equitable models. Let’s explore some key approaches.

Reduction to Tabular Data

This category of methods focuses on the attributes and labels associated with images. By extracting this information and representing it in a tabular format, researchers can apply well-established bias detection methods developed for tabular datasets.

The features for this tabular representation can be extracted directly from images using image recognition and detection tools, taken from existing metadata like captions, or a combination of both. Further analysis of the extracted tabular data reveals different ways to assess potential bias.

Common approaches can be roughly categorized into:

- Parity-based methods (measures of equality)

- Information theory (analyzing redundancy)

- Others

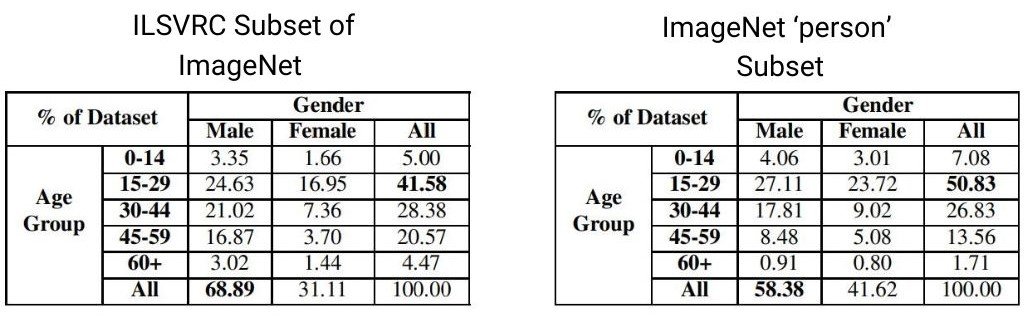

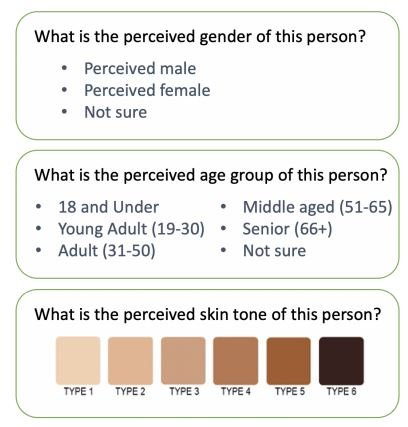

One way to assess dataset bias is through parity-based methods, which use recognition models to assign labels like age and gender and then examine how those labels are distributed across different groups within the visual data.
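As a rough illustration of a parity check on tabular data, the sketch below compares how often a target label appears in each group and reports the gap between the highest and lowest rate. The rows, group names, and helper functions are hypothetical; real audits would use attributes extracted from the dataset itself.

```python
from collections import Counter

def label_rate_by_group(rows, target_label):
    """Return {group: share of that group's rows carrying target_label}."""
    totals = Counter(group for group, _ in rows)
    hits = Counter(group for group, label in rows if label == target_label)
    return {group: hits[group] / totals[group] for group in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())

# Hypothetical (group, label) rows extracted from image annotations.
rows = (
    [("female", "nurse")] * 30 + [("female", "doctor")] * 10
    + [("male", "nurse")] * 10 + [("male", "doctor")] * 30
)

doctor_rates = label_rate_by_group(rows, "doctor")
gap = parity_gap(doctor_rates)
print(doctor_rates, gap)
```

A gap near zero means the label is assigned at similar rates across groups; what counts as an acceptable gap depends on the application.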

Here are some of the statistical results for ImageNet subsets using a parity-based approach.

Detecting bias using information theory methods is also quite popular, especially in facial recognition datasets. Researchers use these techniques to analyze fairness and create more balanced datasets. Other reduction-to-tabular methods exist, and research continues to explore new and improved techniques for bias detection in tabular data.
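One information-theoretic quantity that fits this setting is the mutual information between a protected attribute and a label: it is zero when the two are independent and grows as the label becomes predictable from the attribute. The sketch below is one possible way to compute it from (attribute, label) pairs; the data is invented and the choice of mutual information is an assumption, not necessarily the measure used in the studies mentioned above.

```python
import math
from collections import Counter

def mutual_information(pairs):
    """Mutual information I(A; Y) in bits between attribute A and label Y."""
    n = len(pairs)
    joint = Counter(pairs)
    attr = Counter(a for a, _ in pairs)
    label = Counter(y for _, y in pairs)
    mi = 0.0
    for (a, y), count in joint.items():
        # p(a, y) / (p(a) * p(y)) simplifies to count * n / (count_a * count_y)
        mi += (count / n) * math.log2(count * n / (attr[a] * label[y]))
    return mi

# Hypothetical data: the label is independent of the attribute...
independent = [("a", 0), ("a", 1), ("b", 0), ("b", 1)] * 5
# ...versus fully determined by it.
dependent = [("a", 0)] * 10 + [("b", 1)] * 10

mi_independent = mutual_information(independent)
mi_dependent = mutual_information(dependent)
print(mi_independent, mi_dependent)
```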

Biased Image Representations

While reducing image data to tabular data can be valuable, analyzing the image representations themselves sometimes offers unique insights into bias. These methods focus on lower-dimensional representations of images, which reveal how a machine learning model might “see” and group them. They rely on analyzing distances and geometric relations between images in a lower-dimensional space to detect bias.

Methods in this category include distance-based and other methods. To use them, researchers study how pre-trained models represent images in a lower-dimensional space and calculate distances between these representations to detect bias within visual datasets.

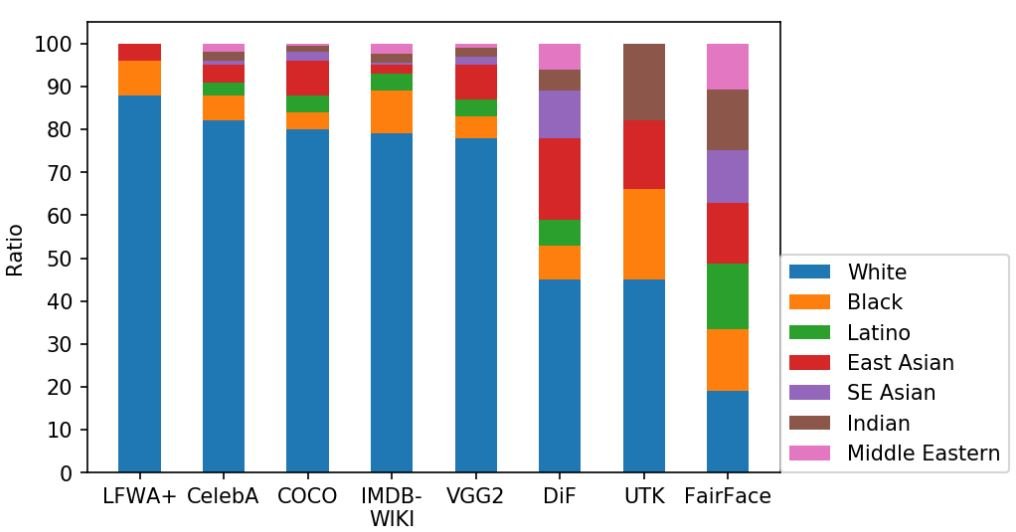

The graph below shows the distribution of races within popular face datasets using image representation methods:

Distance-based methods can also reveal biases that mirror human stereotypes. Researchers have analyzed how models represent different concepts (like “career” or “family”) in lower-dimensional spaces. By measuring the similarity between these representations, they can detect potentially harmful associations (e.g., if “career” representations are closer to images of men than to images of women).
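The association check described above can be sketched with cosine similarity. The three-dimensional vectors below are hand-written stand-ins for embeddings that would normally come from a pre-trained model, and `association_gap` is a simplified, hypothetical score in the spirit of embedding association tests rather than a published metric.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_similarity(concept, embeddings):
    """Average cosine similarity between a concept vector and a set of embeddings."""
    return sum(cosine(concept, e) for e in embeddings) / len(embeddings)

def association_gap(concept, group_a, group_b):
    """Positive values mean the concept sits closer to group_a than to group_b."""
    return mean_similarity(concept, group_a) - mean_similarity(concept, group_b)

# Hypothetical embeddings (real ones would come from a pre-trained model).
career = [1.0, 0.0, 0.2]
images_of_men = [[0.9, 0.1, 0.1], [0.8, 0.2, 0.3]]
images_of_women = [[0.2, 0.9, 0.1], [0.1, 0.8, 0.3]]

career_gap = association_gap(career, images_of_men, images_of_women)
print(round(career_gap, 3))
```

In practice the gap would be compared against a baseline, for example by shuffling group membership, to judge whether it is larger than chance.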

Other methods in this category manipulate latent space vectors using generative models like GANs as a bias detection tool. Researchers modify specific latent representations (e.g., hair, gender) to observe the model’s response. These manipulations can sometimes reveal unintended correlations, likely due to existing biases in the dataset.

Cross-dataset Bias Detection

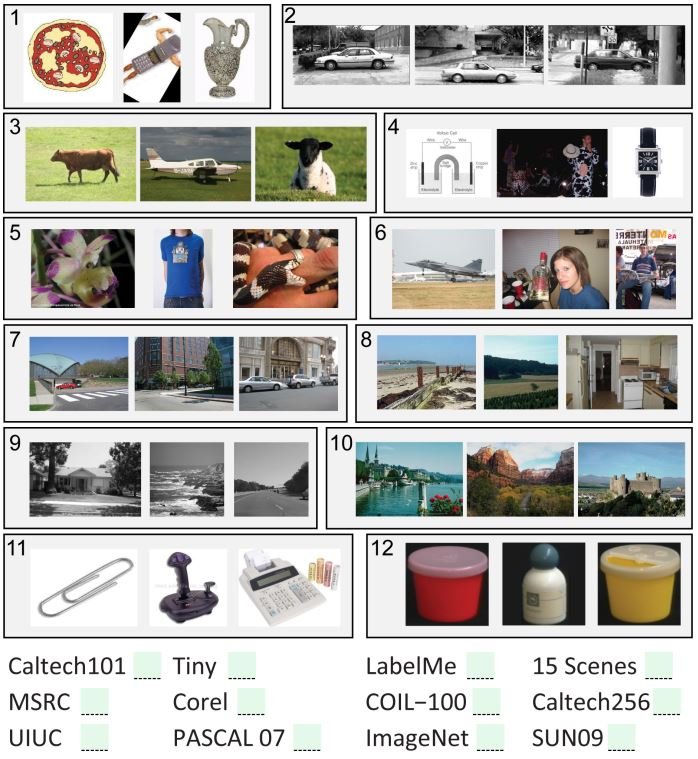

Cross-dataset bias detection methods compare different datasets, searching for “signatures” that reveal biases. The concept of a “signature” comes from the fact that experienced researchers can often identify which benchmark dataset an image comes from with good accuracy.

These signatures (biases) are distinctive patterns or characteristics within a dataset that usually affect a model’s ability to generalize well to new, unseen data. Cross-dataset generalization is one approach used in this category. It tests how well a model generalizes to a representative subset of data it was not trained on.

Researchers have proposed a metric that scores a model’s performance on new data against its performance on its native data: the lower the score, the more bias in the model’s native data. A popular related test, called “Name the Dataset”, involves training a linear SVM classifier to detect the source dataset of an image.

The higher the classifier’s accuracy, the more distinct and potentially biased the datasets are. Here’s what this test looks like:

This task proved surprisingly easy for humans working in object and scene recognition. In other efforts, researchers used CNN feature descriptors and SVM binary classifiers to help detect bias in visual datasets.
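The logic of the “Name the Dataset” test can be sketched without any external libraries. To stay dependency-free, the example below swaps the linear SVM for a nearest-centroid classifier and uses synthetic two-dimensional features (think of them as average brightness and contrast); the dataset names, feature values, and helper functions are all assumptions made for illustration.

```python
import random

def make_features(rng, center, n, spread=0.1):
    """Draw n hypothetical 2-D feature vectors around a dataset-specific center."""
    return [[rng.gauss(center, spread), rng.gauss(center, spread)] for _ in range(n)]

def centroid(points):
    """Component-wise mean of a list of vectors."""
    return [sum(p[i] for p in points) / len(points) for i in range(len(points[0]))]

def sq_dist(p, q):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def name_the_dataset_accuracy(datasets, n_train):
    """Fit one centroid per dataset on the first n_train items, test on the rest."""
    centroids = {name: centroid(pts[:n_train]) for name, pts in datasets.items()}
    correct = total = 0
    for name, pts in datasets.items():
        for p in pts[n_train:]:
            guess = min(centroids, key=lambda c: sq_dist(p, centroids[c]))
            correct += guess == name
            total += 1
    return correct / total

rng = random.Random(0)
# Two datasets with different "signatures" versus two drawn from the same distribution.
distinct = {"dataset_a": make_features(rng, 0.3, 200),
            "dataset_b": make_features(rng, 0.6, 200)}
similar = {"dataset_a": make_features(rng, 0.5, 200),
           "dataset_b": make_features(rng, 0.5, 200)}

distinct_acc = name_the_dataset_accuracy(distinct, n_train=100)
similar_acc = name_the_dataset_accuracy(similar, n_train=100)
print(distinct_acc, similar_acc)
```

Accuracy well above chance (0.5 for two datasets) indicates that the datasets carry a recognizable signature, while accuracy near chance suggests they are hard to tell apart on these features.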

Other Methods

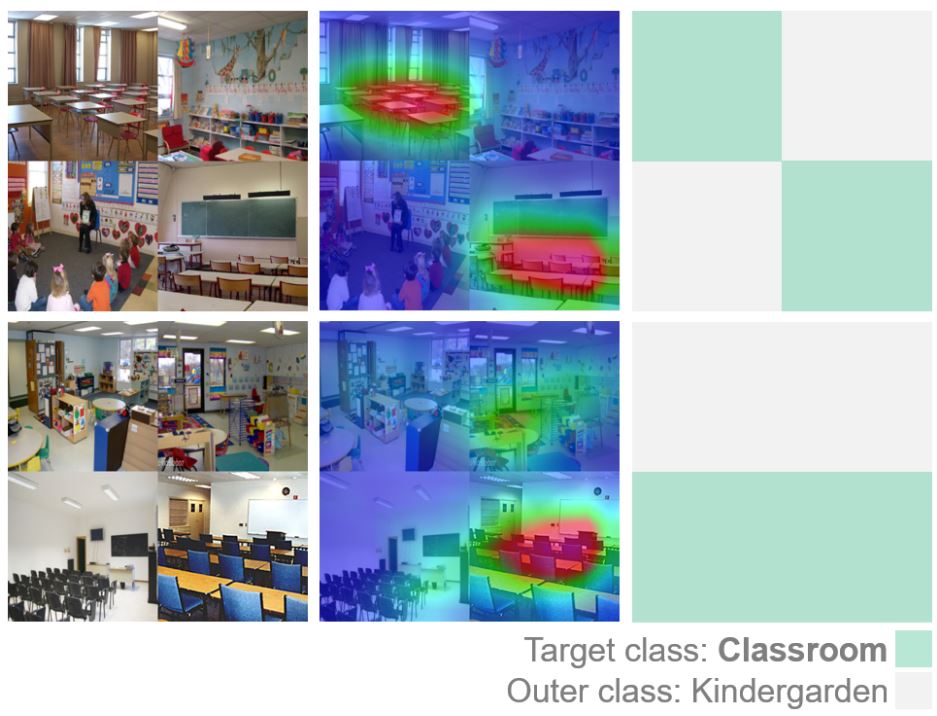

Some methods don’t fall under any of the categories mentioned so far. In this subsection, we’ll explore explainable AI (XAI) as one advancement that has helped bias detection.

Deep learning models are black-box methods by nature, and although these models have been the most successful in CV tasks, their explainability is still poorly assessed. Explainable AI improves the transparency of these models, making them more trustworthy.

XAI offers several techniques to identify potential biases within deep learning models:

- Saliency Maps: These highlight the regions in an image that are most influential in the model’s decision. A focus on irrelevant elements might flag potential bias.

- Feature Importance: Identifying which attributes (e.g., colors, shapes) the model prioritizes can uncover reliance on biased attributes.

- Decision Trees/Rule-Based Systems: Some XAI methods create decision trees or rule-based systems that mimic a model’s logic, making it more transparent and exposing bias in its reasoning.
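Occlusion sensitivity is one simple way to build a saliency map: mask one patch at a time and record how much the model’s score drops. The sketch below applies it to a toy model that, by construction, looks only at the top-left corner of the image and ignores the object in the bottom-right; both the model and the 4x4 image are hypothetical.

```python
def toy_model(image):
    """Hypothetical classifier score that depends only on the top-left 2x2 corner."""
    corner = [image[r][c] for r in range(2) for c in range(2)]
    return sum(corner) / len(corner)

def occlusion_saliency(image, model, patch=2, fill=0.0):
    """Score drop caused by masking each patch; larger drop means more influence."""
    base = model(image)
    height, width = len(image), len(image[0])
    saliency = []
    for r0 in range(0, height, patch):
        row = []
        for c0 in range(0, width, patch):
            occluded = [list(r) for r in image]  # copy so the input stays intact
            for r in range(r0, min(r0 + patch, height)):
                for c in range(c0, min(c0 + patch, width)):
                    occluded[r][c] = fill
            row.append(base - model(occluded))
        saliency.append(row)
    return saliency

# The "object" is the bright block in the bottom-right; the top-left is background.
image = [
    [0.9, 0.9, 0.1, 0.1],
    [0.9, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.8, 0.8],
    [0.1, 0.1, 0.8, 0.8],
]

saliency = occlusion_saliency(image, toy_model)
print(saliency)
```

Here all the saliency lands on the background corner and none on the object, which is the kind of pattern that would flag a model relying on an irrelevant cue.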

Detecting bias is the first step toward addressing it. In the next section, let’s explore specific tools, techniques, and best practices developed by researchers and practitioners as actionable steps for mitigation.

Bias Detection in Computer Vision: A Guide to Mitigation

Building on the bias detection techniques explored above, researchers analyze popular benchmark datasets to detect the types of bias present in them and inform the creation of fairer datasets.

Since dataset bias leads to algorithmic bias, mitigation strategies usually focus on best practices for dataset creation and sampling. This approach enables a bias-aware visual data collection process that minimizes bias from the foundation of CV models.

Informed by researcher-led case studies, this section outlines actionable mitigation strategies divided into three focus areas:

- Dataset Creation

- Collection Process

- Broader Considerations

Dataset Creation

- Strive for Balanced Representation: Combat selection bias by including diverse examples in terms of gender, skin tone, age, and other protected attributes. Oversampling under-represented groups or carefully adjusting dataset composition can promote this balance. E.g., a dataset containing only young adults could be balanced by adding images of seniors.

- Critically Consider Labels: Be mindful of how labels can introduce bias, and consider more refined labeling approaches when possible. Imposing overly simplistic categories, such as coarse racial categories, can itself be a form of bias. E.g., instead of “Asian”, include more specific regional or cultural identifiers if relevant.

- Crowdsourcing Challenges: Crowdsourced annotations are often inconsistent, and individual annotators may bring their own biases. If using crowdsourced annotations, make sure to implement quality control mechanisms. E.g., provide annotators with clear guidelines and training on potential biases.
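The oversampling idea from the first item above can be sketched as random duplication of samples from smaller groups until every group matches the largest one. The sample records, the `age_group` field, and the `oversample_to_balance` helper are hypothetical.

```python
import random
from collections import Counter, defaultdict

def oversample_to_balance(samples, key, seed=0):
    """Duplicate randomly chosen samples so every group reaches the largest group's size."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for sample in samples:
        by_group[key(sample)].append(sample)
    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        # choices() samples with replacement; k is 0 for the largest group.
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced

# Hypothetical image records with an age-group attribute.
samples = (
    [{"file": f"young_{i}.jpg", "age_group": "young_adult"} for i in range(8)]
    + [{"file": f"senior_{i}.jpg", "age_group": "senior"} for i in range(2)]
)

balanced = oversample_to_balance(samples, key=lambda s: s["age_group"])
counts = Counter(s["age_group"] for s in balanced)
print(counts)
```

Duplicating images balances the counts but adds no new visual variety, so collecting additional images of the under-represented group remains preferable where possible.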

Collection Process

- Represent Diverse Environments: To avoid framing bias, make sure to capture diversity in lighting, camera angles, backgrounds, and subject representation. Introducing synthetic data can add variety to the settings of the images. This helps avoid overfitting models to specific contexts or lighting conditions and allows for an adequate sample size. E.g., photos taken both indoors and outdoors.

- Be Mindful of Exclusion: Consider the potential impact of removing certain object classes on model performance. This can also affect negative examples: removing general object classes (“people,” “beds”) can skew the balance.

Broader Considerations

- Expand Geographic Scope: Geographic bias is one type of sample bias. It exists in a range of datasets that are US-centric or Europe-centric, so it is important to include images from diverse regions. E.g., collect images from countries across multiple continents.

- Acknowledge Identity Complexity: Binary gender labels sometimes fail to reflect gender identity, so different approaches are required. More inclusive representation in datasets can help.

Checklist

Finally, consider using the checklist below for bias-aware visual data collection, from the paper “A Survey on Bias in Visual Datasets.”

| General | Selection Bias | Framing Bias | Label Bias |
|---|---|---|---|
| What are the purposes the data is collected for? | Do we need balanced data or statistically representative data? | Are there any spurious correlations that can contribute to framing different subjects in different ways? | If the labelling process relies on machines: have their biases been taken into account? |
| Are there uses of the data that should be discouraged because of possible biases? | Are the negative sets representative enough? | Are there any biases due to the way images/videos are captured? | If the labelling process relies on human annotators: is there an adequate and diverse pool of annotators? Have their possible biases been taken into account? |
| What kinds of bias can be inserted by the way the collection process is designed? | Is there any group of subjects that is systematically excluded from the data? | Did the capture induce some behavior in the subjects? (e.g., smiling when photographed) | If the labelling process relies on crowdsourcing: are there any biases due to the workers’ access to crowdsourcing platforms? |
| | Do the data come from or depict a specific geographical area? | Are there any images that can possibly convey different messages depending on the viewer? | Can we use fuzzy labels? (e.g., race or gender) |
| | Does the selection of the subjects create any spurious associations? | Are subjects in a certain group depicted in a particular context more than others? | Can we operationalise any unobservable theoretical constructs/use proxy variables? |
| | Will the data remain representative for a long time? | Do the data agree with harmful stereotypes? | |

In any of the mentioned focus areas, you can use adversarial learning techniques or fairness-enhancing AI algorithms. Explainable AI (XAI) techniques, mentioned in the bias detection section and used by researchers, can also help.

Adversarial learning techniques train models to resist bias by training them on examples that highlight these biases. Also, be mindful of trade-offs, as mitigating one bias can sometimes introduce others.

Conclusion

This article provides a foundation for understanding bias detection in computer vision, covering bias types, detection methods, and mitigation strategies.

As noted in the previous sections, bias is pervasive throughout the visual data lifecycle. Further research should explore richer representations of visual data, the relationship between bias and latent space geometry, and bias detection in video. Reducing bias requires more equitable data collection practices and a heightened awareness of biases within these datasets.
