
Recent headlines, such as an AI suggesting people should eat rocks or the creation of ‘Miss AI,’ the first beauty contest with AI-generated contestants, have reignited debates about the responsible development and deployment of AI. The former is likely a flaw to be resolved, while the latter reveals human nature’s flaws in valuing a specific beauty standard. In a time of repeated warnings of AI-led doom (the latest personal warning from an AI researcher pegging the probability at 70%!), these are what rise to the top of the current list of worries, and neither suggests more than business as usual.

There have, of course, been egregious examples of harm from AI tools, such as deepfakes used for financial scams or portraying innocents in nude images. However, these deepfakes are created at the direction of nefarious humans and not led by AI. In addition, there are worries that the application of AI could eliminate a significant number of jobs, although so far this has yet to materialize.

In fact, there is a long list of potential risks from AI technology, including that it is being weaponized, encodes societal biases, can lead to privacy violations and that we remain challenged in being able to explain how it works. However, there is no evidence yet that AI on its own is out to harm or kill us.

Nevertheless, this lack of evidence did not stop 13 current and former employees of leading AI providers from issuing a whistleblowing letter warning that the technology poses grave risks to humanity, including significant death. The whistleblowers include experts who have worked closely with cutting-edge AI systems, adding weight to their concerns. We have heard this before, including from AI researcher Eliezer Yudkowsky, who worries that ChatGPT points towards a near future when AI “gets to smarter-than-human intelligence” and kills everyone.

Even so, as Casey Newton pointed out about the letter in Platformer: “Anyone looking for jaw-dropping allegations from the whistleblowers will likely leave disappointed.” He noted this might be because said whistleblowers are forbidden by their employers to blow the whistle. Or it could be that there is scant evidence beyond sci-fi narratives to support the worries. We just don’t know.

Getting smarter all the time

What we do know is that “frontier” generative AI models continue to get smarter, as measured by standardized testing benchmarks. However, it is possible these results are skewed by “overfitting,” when a model performs well on training data but poorly on new, unseen data. In one example, claims of 90th-percentile performance on the Uniform Bar Exam were shown to be overinflated.

Even so, due to dramatic gains in capabilities over the last several years from scaling these models with more parameters trained on larger datasets, it is largely accepted that this growth path will lead to even smarter models in the next year or two.

What’s more, many leading AI researchers, including Geoffrey Hinton (often called an ‘AI godfather’ for his pioneering work in neural networks), believe artificial general intelligence (AGI) could be achieved within five years. AGI is thought to be an AI system that can match or exceed human-level intelligence across most cognitive tasks and domains, and the point at which the existential worries could be realized. Hinton’s viewpoint is significant, not only because he has been instrumental in building the technology powering gen AI, but because, until recently, he thought the possibility of AGI was decades into the future.

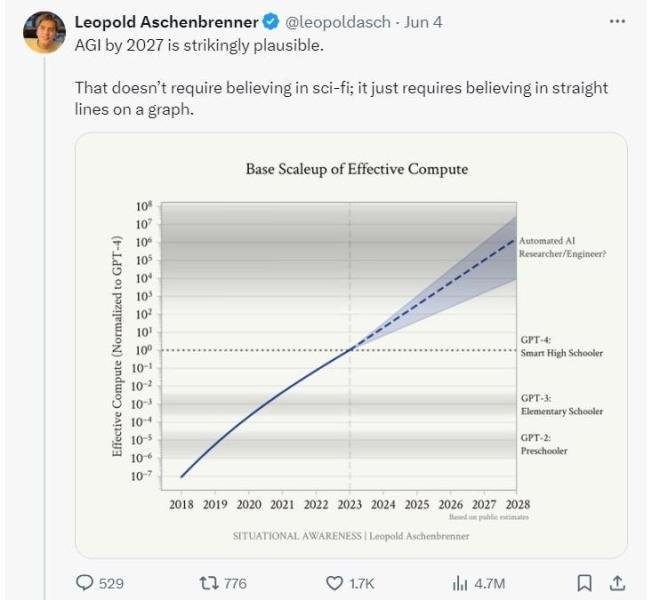

Leopold Aschenbrenner, a former OpenAI researcher on the superalignment team who was fired for allegedly leaking information, recently published a chart showing that AGI is achievable by 2027. This conclusion assumes that progress will continue in a straight line, up and to the right. If correct, this adds credence to claims AGI could be achieved in five years or less.

Another AI winter?

Not everyone agrees, though, that gen AI will achieve these heights. It seems likely that the next generation of tools (GPT-5 from OpenAI and the next iteration of Claude and Gemini) will make impressive gains. That said, similar progress beyond the next generation is not guaranteed. If technological advances level out, worries about existential threats to humanity could be moot.

AI influencer Gary Marcus has long questioned the scalability of these models. He now speculates that instead of witnessing early signs of AGI, we are seeing early signs of a new “AI Winter.” Historically, AI has experienced several “winters,” such as the periods in the 1970s and late 1980s when interest and funding in AI research dramatically declined due to unmet expectations. This phenomenon typically arises after a period of heightened expectations and hype surrounding AI’s potential, which ultimately leads to disillusionment and criticism when the technology fails to deliver on overly ambitious promises.

It remains to be seen if such disillusionment is underway, but it is possible. Marcus points to a recent story reported by Pitchbook that states: “Even with AI, what goes up must eventually come down. For two consecutive quarters, generative AI dealmaking at the earliest stages has declined, dropping 76% from its peak in Q3 2023 as cautious investors sit back and reassess following the initial flurry of capital into the space.”

This decline in the number and size of investment deals may mean that existing companies will become cash starved before substantial revenues appear, forcing them to scale back or cease operation, and it could limit the number of new companies and new ideas entering the marketplace. It is unlikely, however, that this would have any impact on the largest firms developing frontier AI models.

Adding to this trend is a Fast Company story that claims there is “little evidence that the [AI] technology is broadly unleashing enough new productivity to push up company earnings or lift stock prices.” Consequently, the article opines that the threat of a new AI Winter may dominate the AI conversation in the latter half of 2024.

Full speed ahead

Nevertheless, the prevailing wisdom might be best captured by Gartner when they state: “Similar to the introduction of the internet, the printing press or even electricity, AI is having an impact on society. It is just about to transform society as a whole. The age of AI has arrived. Advancement in AI cannot be stopped or even slowed down.”

The comparison of AI to the printing press and electricity underscores the transformative potential many believe AI holds, driving continued investment and development. This viewpoint also explains why so many are all-in on AI. Ethan Mollick, a professor at Wharton Business School, said recently on a Tech at Work podcast from Harvard Business Review that work teams should bring gen AI into everything they do, right now.

In his One Useful Thing blog, Mollick points to recent evidence showing how far advanced gen AI models have become. For example: “If you debate with an AI, they are 87% more likely to persuade you to their assigned viewpoint than if you debate with an average human.” He also cited a study that showed an AI model outperforming humans at providing emotional support. Specifically, the research focused on the skill of reframing negative situations to reduce negative emotions, also known as cognitive reappraisal. The bot outperformed humans on three of the four examined metrics.

The horns of a dilemma

The underlying question behind this conversation is whether AI will solve some of our greatest challenges or if it will ultimately destroy humanity. Most likely, there will be a blend of magical gains and regrettable harm emanating from advanced AI. The simple answer is that nobody knows.

Perhaps in keeping with the broader zeitgeist, never has the promise of technological progress been so polarized. Even tech billionaires, presumably those with more insight than everyone else, are divided. Figures like Elon Musk and Mark Zuckerberg have publicly clashed over AI’s potential risks and benefits. What is clear is that the doomsday debate is not going away, nor is it close to resolution.

My own probability of doom, “P(doom),” remains low. I took the position a year ago that my P(doom) is ~5% and I stand by that. While the worries are legitimate, I find recent developments on the AI safety front encouraging.

Most notably, Anthropic has made progress on explaining how LLMs work. Researchers there have recently been able to look inside Claude 3 and identify which combinations of its artificial neurons evoke specific concepts, or “features.” As Steven Levy noted in Wired, “Work like this has potentially huge implications for AI safety: If you can figure out where danger lurks inside an LLM, you are presumably better equipped to stop it.”

Ultimately, the future of AI remains uncertain, poised between unprecedented opportunity and significant risk. Informed dialogue, ethical development and proactive oversight are crucial to ensuring AI benefits society. The dreams of many for a world of abundance and leisure could be realized, or they could turn into a nightmarish hellscape. Responsible AI development with clear ethical principles, rigorous safety testing, human oversight and robust control measures is essential to navigate this rapidly evolving landscape.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.
