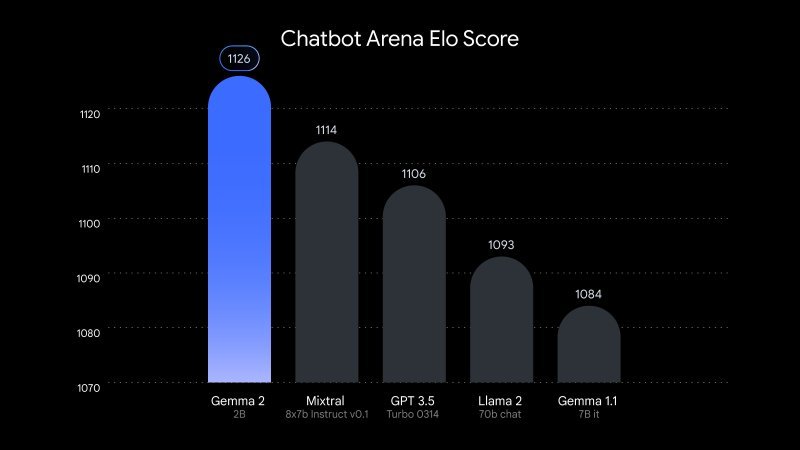

Google has just unveiled Gemma 2 2B, a compact yet powerful artificial intelligence model that rivals industry leaders despite its significantly smaller size. The new language model, containing just 2.6 billion parameters, performs on par with or better than much larger counterparts, including OpenAI's GPT-3.5 and Mistral AI's Mixtral 8x7B.

Announced on Google's Developer Blog, Gemma 2 2B marks a significant step toward more accessible, deployable AI systems. Its small footprint makes it particularly well suited to on-device applications, which could give it a major impact on mobile AI and edge computing.
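To make "small footprint" concrete, here is a rough back-of-the-envelope estimate of how much memory the weights alone would occupy at a few common numeric precisions. It is a sketch under stated assumptions: the bytes-per-parameter values are generic sizes for each precision, not figures Google has published for Gemma 2 2B, and real on-device use also needs memory for activations, the KV cache, and the runtime itself.

```python
# Rough estimate of weight-only memory for a 2.6-billion-parameter model.
# Assumption: bytes per parameter depend only on numeric precision; this
# ignores activations, KV cache, quantization overhead, and runtime memory.

PARAMS = 2.6e9  # parameter count reported for Gemma 2 2B

# Generic storage sizes per parameter (assumed, not Gemma-specific).
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>8}: {weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

Under these assumptions the weights shrink from about 10.4 GB at float32 to about 1.3 GB at 4-bit precision, a range that puts a model of this size within reach of laptops and many phones.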

The little AI that could: Punching above its weight class

Independent testing by LMSYS, an AI research organization, saw Gemma 2 2B achieve a score of 1130 in its Chatbot Arena evaluation. That result places it slightly ahead of GPT-3.5-Turbo-0613 (1117) and Mixtral-8x7B (1114), models with an order of magnitude more parameters.
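Arena scores are easier to interpret as head-to-head odds. The sketch below assumes the scores follow the standard Elo convention (a logistic curve in base 10 with a 400-point scale), which is the scale LMSYS uses to report its leaderboard; the helper name `expected_win_rate` is illustrative, not part of any LMSYS tool.

```python
# Convert an Elo-style rating gap into an expected head-to-head win rate.
# Assumption: ratings use the standard Elo scale (base 10, 400-point spread).


def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Arena scores as reported by LMSYS.
SCORES = {
    "Gemma 2 2B": 1130,
    "GPT-3.5-Turbo-0613": 1117,
    "Mixtral-8x7B": 1114,
}

if __name__ == "__main__":
    gemma = SCORES["Gemma 2 2B"]
    for name in ("GPT-3.5-Turbo-0613", "Mixtral-8x7B"):
        p = expected_win_rate(gemma, SCORES[name])
        print(f"Gemma 2 2B vs {name}: {p:.1%} expected win rate")
```

Under this reading, a 13- to 16-point lead corresponds to winning roughly 52 percent of pairwise comparisons, which is why "slightly ahead" is the right description.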

The model's capabilities extend beyond mere size efficiency. Google reports that Gemma 2 2B scores 56.1 on the MMLU (Massive Multitask Language Understanding) benchmark and 36.6 on MBPP (Mostly Basic Python Programming), significant improvements over its predecessor.

This achievement challenges the prevailing wisdom in AI development that larger models inherently perform better. Gemma 2 2B's success suggests that sophisticated training techniques, efficient architectures, and high-quality datasets can compensate for raw parameter count. The result could have far-reaching implications for the field, potentially shifting focus from the race for ever-larger models to the refinement of smaller, more efficient ones.

Distilling giants: The art of AI compression

The development of Gemma 2 2B also highlights the growing importance of model compression and distillation techniques. By distilling knowledge from larger models into smaller ones, researchers can create more accessible AI tools without a significant loss in performance. This approach not only reduces computational requirements but also helps address concerns about the environmental impact of training and running large AI models.
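The core idea of distillation fits in a few lines. The sketch below implements the classic soft-target loss: the student is penalized by the KL divergence between the teacher's and its own temperature-softened output distributions. This is a generic textbook formulation, not Google's training recipe for Gemma 2 2B; the temperature value, function names, and example logits are illustrative assumptions.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max logit for stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,  # illustrative value, not a Gemma setting
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across
    temperatures, following the common soft-target formulation.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical logits over a 3-token vocabulary
    close_student = [3.8, 1.1, 0.4]
    far_student = [0.5, 1.0, 4.0]
    print(distillation_loss(teacher, close_student))  # small loss
    print(distillation_loss(teacher, far_student))  # much larger loss
```

The intuition is that matching the teacher's full probability distribution, rather than only its top answer, lets a small student absorb more of what the larger model has learned.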

Google trained Gemma 2 2B on a massive dataset of 2 trillion tokens using its advanced TPU v5e hardware. The model's multilingual capabilities broaden its potential for global applications.

This release aligns with a growing industry trend toward more efficient AI models. As concerns grow about the environmental impact and accessibility of large language models, tech companies are focusing on smaller, more efficient systems that can run on consumer-grade hardware.

Open source revolution: Democratizing AI for all

By making Gemma 2 2B open source, Google reaffirms its commitment to transparency and collaborative development in AI. Researchers and developers can access the model on Hugging Face, including through a Gradio demo, with implementations available for various frameworks, including PyTorch and TensorFlow.

While the long-term impact of this release remains to be seen, Gemma 2 2B clearly represents a significant step toward democratizing AI technology. As companies continue to push the boundaries of what smaller models can do, we may be entering a new era of AI development, one in which advanced capabilities are no longer the exclusive domain of resource-intensive supercomputers.

Source link