In 2024, the Royal Swedish Academy of Sciences awarded the Nobel Prize in Physics to John J. Hopfield and Geoffrey E. Hinton, who are considered pioneers for their work in artificial intelligence (AI). Physics is a fascinating field that has always been intertwined with groundbreaking discoveries that change our understanding of the universe and improve our technology. John Hopfield is a physicist with contributions to machine learning and AI. Geoffrey Hinton, often considered the godfather of AI, is the computer scientist whom we can thank for the current advancements in AI.

Both John Hopfield and Geoffrey Hinton conducted foundational research on artificial neural networks (ANNs). The Nobel Prize recognizes their research that enabled machine learning with ANNs, which allowed machines to learn in new ways previously thought exclusive to humans. In this comprehensive overview, we will delve into the groundbreaking research of Hopfield and Hinton, exploring the key concepts of their research that have shaped modern AI and earned them the prestigious Nobel Prize.

About us: Viso Suite is end-to-end computer vision infrastructure for enterprises. In a unified interface, businesses can streamline the production, deployment, and scaling of intelligent, vision-based applications. To start implementing computer vision for business solutions, book a demo of Viso Suite with our team of experts.

Overview of Artificial Neural Networks (ANNs): The Foundation of Modern AI

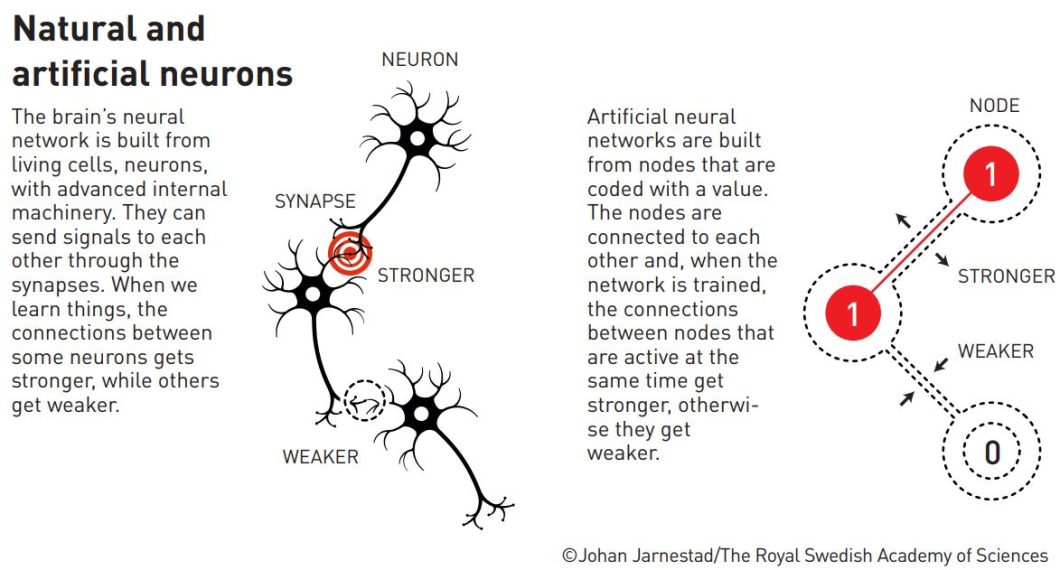

John Hopfield and Geoffrey Hinton made foundational discoveries and inventions that enabled machine learning with Artificial Neural Networks (ANNs), which make up the building blocks of modern AI. Mathematics, computer science, biology, and physics form the roots of machine learning and neural networks. For example, the biological neurons in the brain inspired ANNs. Essentially, ANNs are large collections of “neurons”, or nodes, connected by “synapses”, or weighted couplings. Researchers train them to perform certain tasks rather than asking them to execute a predetermined set of instructions. This structure is also similar to spin models in statistical physics, which are used in theories of magnetism and alloys.

Research on neural networks and machine learning has existed ever since the invention of the computer. ANNs are made of nodes, layers, connections, and weights: each layer consists of many nodes, the nodes are linked by connections, and each connection carries a weight. Data goes in, and the weights of the connections change according to mathematical models. In the ANN field, researchers explored two architectures for systems of interconnected nodes:

- Recurrent Neural Networks (RNNs)

- Feedforward neural networks

RNNs are a type of neural network that takes in sequential data, like a time series, to make sequential predictions, and they are known for their “memory”. RNNs are useful for a wide range of tasks like weather prediction, stock price prediction, or nowadays deep learning tasks like language translation, natural language processing (NLP), sentiment analysis, and image captioning. Feedforward neural networks, on the other hand, are more traditional one-way networks, where data flows in a single direction (forward). This is the opposite of RNNs, which have loops. Now that we understand ANNs, let’s dive into John Hopfield and Geoffrey Hinton’s research individually.
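To make the difference between the two architectures concrete, here is a minimal sketch in plain Python. The function names (`dense_layer`, `feedforward`, `recurrent`), the `tanh` activation, and all weight values are assumptions chosen for illustration, not taken from any particular library or from the laureates’ work.

```python
import math


def dense_layer(weights, biases, inputs):
    """Weighted sum of the inputs for each node, followed by tanh."""
    return [math.tanh(b + sum(w * x for w, x in zip(row, inputs)))
            for row, b in zip(weights, biases)]


def feedforward(layers, inputs):
    """Data flows one way: each layer's output feeds the next layer."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(weights, biases, activations)
    return activations


def recurrent(input_weights, state_weights, biases, sequence):
    """The state loops back in, so earlier inputs influence later steps."""
    state = [0.0] * len(biases)
    for inputs in sequence:
        state = [
            math.tanh(
                b
                + sum(w * x for w, x in zip(in_row, inputs))
                + sum(w * s for w, s in zip(st_row, state))
            )
            for in_row, st_row, b in zip(input_weights, state_weights, biases)
        ]
    return state


# Hypothetical weights, chosen only for illustration.
layers = [
    ([[0.5, -0.2], [0.1, 0.4]], [0.0, 0.1]),  # 2 inputs -> 2 hidden nodes
    ([[0.3, -0.7]], [0.0]),                   # 2 hidden nodes -> 1 output
]
prediction = feedforward(layers, [1.0, 0.5])

input_weights = [[1.0]]
state_weights = [[0.5]]
biases = [0.0]
state_a = recurrent(input_weights, state_weights, biases, [[1.0], [0.0]])
state_b = recurrent(input_weights, state_weights, biases, [[0.0], [1.0]])
```

Feeding the same two values in a different order leaves the recurrent network in a different final state, which is the “memory” described above. The feedforward network has no such state and simply maps each input to an output.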

Hopfield’s Contribution: Recurrent Networks and Associative Memory

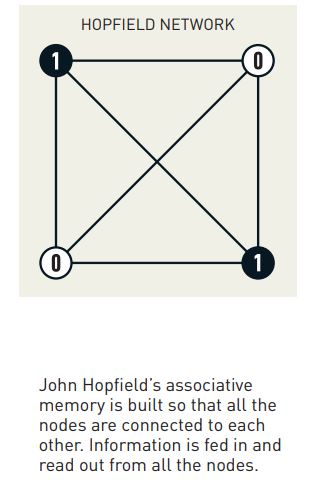

John J. Hopfield, a physicist working in biological physics, published a dynamical model in 1982 for an associative memory based on a simple recurrent neural network. This simple memory-based RNN structure was new and was influenced by his background in physics, in areas such as domains in magnetic systems and vortices in fluid flow. Recurrent networks have loops that allow information to persist and influence future computations, just like a chain of whispers where each person’s whisper affects the next.

Hopfield’s most significant contribution was the development of the Hopfield network model. Let’s look at that next.

The Hopfield Network Model

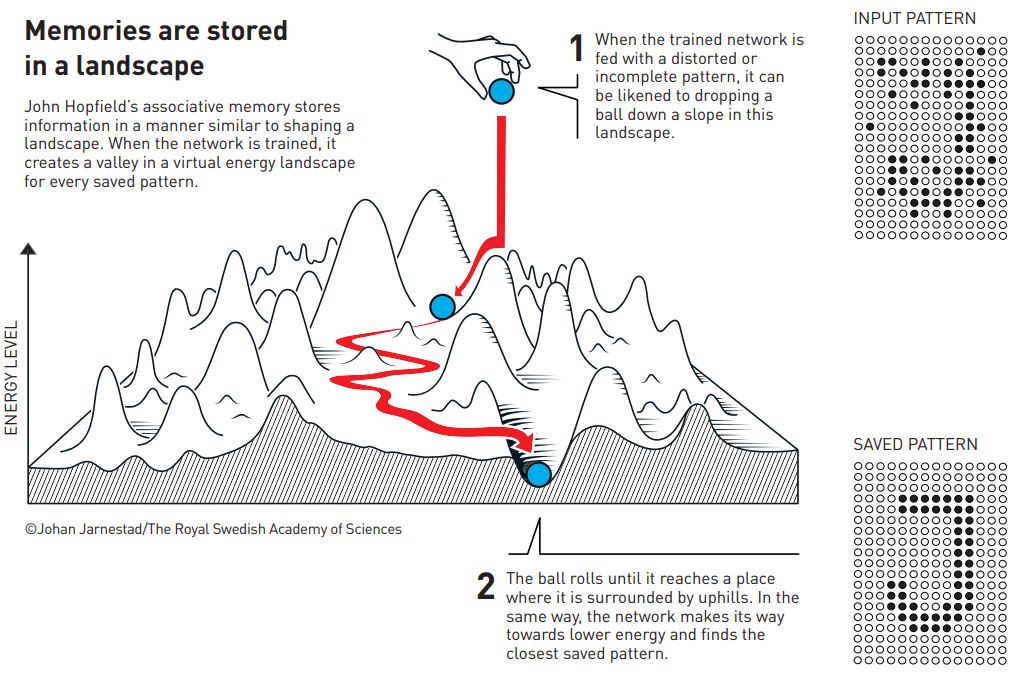

Hopfield’s network model is an associative memory based on a simple recurrent neural network. As discussed, an RNN consists of connected nodes, but the model Hopfield developed had a unique feature called an “energy function”, which represents the memory of the network. Imagine this energy function as a landscape with hills and valleys. The network’s state is like a ball rolling on this landscape, and it naturally wants to settle in the lowest points, the valleys, which represent stable states. These stable states are like stored memories in the network.

The term “associative memory” means that the network can map an input pattern to the right stable state, even when the input is distorted. It’s like recognizing a song from just a few notes. Even if you give the network a partial or noisy input, it can still retrieve the complete memory, like filling in the missing pieces of a puzzle. This ability to recall complete patterns from incomplete information makes the Hopfield network a significant contribution to the world of machine learning.
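The energy landscape and the recall of a distorted pattern can be illustrated with a small sketch. It assumes nodes that take the values +1 or -1 and the commonly used Hebbian storage rule. The function names and the two stored patterns are made up for this example.

```python
def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def energy(weights, state):
    """Energy E = -1/2 * sum over i, j of w_ij * s_i * s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, max_sweeps=10):
    """Update nodes one at a time until none changes (a stable state)."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two toy "memories", invented for illustration.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train_hebbian(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # flip one element to distort the pattern

recalled = recall(weights, noisy)
```

Starting from the distorted input, the updates move the state downhill in energy until it settles in the valley that corresponds to the first stored pattern. Comparing `energy(weights, noisy)` with `energy(weights, recalled)` shows the drop.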

Applications of Hopfield Networks

The Hopfield network has influenced research across computer science to this day. Researchers found applications in various areas, particularly in pattern recognition and optimization problems. Hopfield networks can recognize images, even when they are distorted or incomplete. They are also useful for search problems where you need to find the best solution among many possibilities, like finding the shortest route. The Hopfield network has been applied to well-known computer science problems like the traveling salesman problem, and its associative memory has been used for tasks like image reconstruction.

Hopfield’s work laid the foundation for further advancements in neural networks, especially in deep learning. His research inspired many others to explore the potential of neural networks, including Geoffrey Hinton, who took these ideas to new heights with his work on deep learning and generative AI. Next, let’s dive into Hinton’s research and see why he’s called the godfather of AI.

Hinton’s Contribution: Deep Learning and Generative AI

Geoffrey Hinton is a pioneer in AI whose research led to the current advancements in artificial neural networks. His research changed our perspective on how machines can learn and paved the way for modern AI applications that are transforming industries. Hinton explored the potential of several types of artificial neural networks and made significant contributions to various architectures and training methods, which we’ll discuss in this section.

Hinton’s Work on Various ANN Architectures

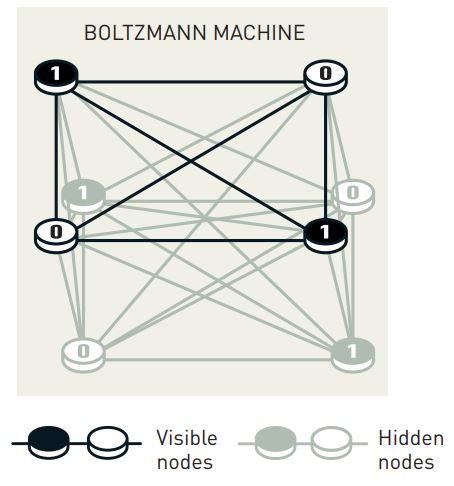

In 1983–1985, Geoffrey Hinton, together with Terrence Sejnowski and other coworkers, developed an extension of Hopfield’s model called the Boltzmann machine. This is a stochastic recurrent neural network, but unlike the Hopfield model, the Boltzmann machine is a generative model. The Boltzmann machine is one of the earliest approaches to deep learning. It is a type of ANN that uses a stochastic (random) approach to learn the underlying structure of data. Its nodes are either visible (representing the input data) or hidden (capturing internal representations). Imagine it as a network of connected switches, each randomly flipping between “on” and “off” states.

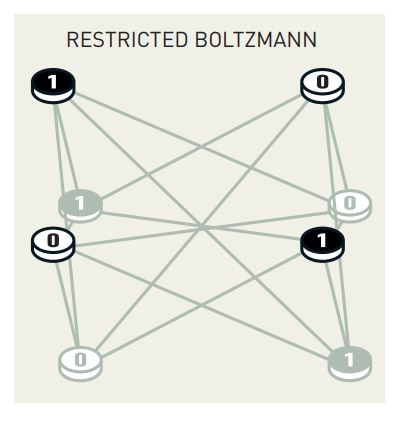

The Boltzmann machine shares a core concept with the Hopfield model: it aims to find a state of minimum energy, which corresponds to the best representation of the input data. This network architecture, unique at the time, allowed it to learn internal representations and even generate new samples from the learned data. However, training Boltzmann machines can be quite computationally expensive. So, Hinton and his colleagues created a simplified version called the Restricted Boltzmann Machine (RBM). The RBM is a slimmed-down version with fewer weights, making it easier to train while still being a versatile tool.

In a restricted Boltzmann machine, there are no connections between nodes in the same layer. This proved particularly powerful when Hinton later showed how to stack RBMs to create multi-layered networks capable of learning complex patterns. Researchers frequently use the machines in a chain, one after the other. After training the first restricted Boltzmann machine, the content of its hidden nodes is used to train the next machine, and so on.

Backpropagation: Training AI Effectively

In 1986, David Rumelhart, Hinton, and Ronald Williams demonstrated a key advancement: how architectures with multiple hidden layers could be trained for classification using the backpropagation algorithm. This algorithm is like a feedback mechanism for neural networks. Its objective is to minimize the mean square deviation between the output of the network and the training data, using gradient descent.

In simple terms, backpropagation allows the network to learn from its mistakes: it adjusts the weights of the connections based on the errors the network makes, which improves performance over time. Moreover, Hinton’s work on backpropagation remains essential for the efficient training of deep neural networks to this day.
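The idea of minimizing the mean square deviation by gradient descent can be sketched for a tiny network with one hidden layer. This is a simplified illustration under stated assumptions, not the exact formulation from the 1986 paper: the sigmoid activation, the network size, the starting weights, the learning rate, and the toy dataset (the logical OR function) are all chosen for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(params, x):
    """One hidden layer of sigmoid nodes feeding one sigmoid output node."""
    w_hidden, b_hidden, w_out, b_out = params
    hidden = [sigmoid(b + sum(w * xi for w, xi in zip(row, x)))
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(b_out + sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def mean_squared_error(params, data):
    return sum((forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def train_step(params, data, learning_rate):
    """One full-batch gradient descent step using backpropagation."""
    w_hidden, b_hidden, w_out, b_out = params
    n_hidden, n_inputs = len(w_hidden), len(w_hidden[0])
    grad_w_hidden = [[0.0] * n_inputs for _ in range(n_hidden)]
    grad_b_hidden = [0.0] * n_hidden
    grad_w_out = [0.0] * n_hidden
    grad_b_out = 0.0
    for x, target in data:
        hidden, output = forward(params, x)
        # Error signal at the output node: derivative of the squared
        # error with respect to the output node's weighted input.
        delta_out = 2.0 * (output - target) * output * (1.0 - output)
        grad_b_out += delta_out
        for j in range(n_hidden):
            grad_w_out[j] += delta_out * hidden[j]
            # Propagate the error backwards through the output weight.
            delta_hidden = delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
            grad_b_hidden[j] += delta_hidden
            for i in range(n_inputs):
                grad_w_hidden[j][i] += delta_hidden * x[i]
    scale = learning_rate / len(data)
    return (
        [[w - scale * g for w, g in zip(w_row, g_row)]
         for w_row, g_row in zip(w_hidden, grad_w_hidden)],
        [b - scale * g for b, g in zip(b_hidden, grad_b_hidden)],
        [w - scale * g for w, g in zip(w_out, grad_w_out)],
        b_out - scale * grad_b_out,
    )


# Toy dataset (logical OR) and hypothetical starting weights.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params = ([[0.5, -0.4], [0.3, 0.8]], [0.0, 0.0], [0.7, -0.6], 0.0)

losses = [mean_squared_error(params, data)]
for _ in range(500):
    params = train_step(params, data, learning_rate=0.5)
    losses.append(mean_squared_error(params, data))
```

Comparing `losses[0]` with `losses[-1]` shows the error shrinking as the weights are adjusted. The `delta_hidden` line is the “back” in backpropagation: the output error is passed backwards through the output weights to work out how each hidden node should change.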

Towards Deep Learning and Generative AI

The breakthroughs that Hinton made with his team were soon followed by successful applications in AI, including pattern recognition in images, languages, and scientific data. One of those advancements was Convolutional Neural Networks (CNNs), which were trained by backpropagation. Another successful example of that time was the long short-term memory (LSTM) method created by Sepp Hochreiter and Jürgen Schmidhuber. This is a recurrent network for processing sequential data, as in speech and language, and it can be mapped to a multilayered network by unfolding in time. However, it remained a challenge to train deep multilayered networks with many connections between consecutive layers.

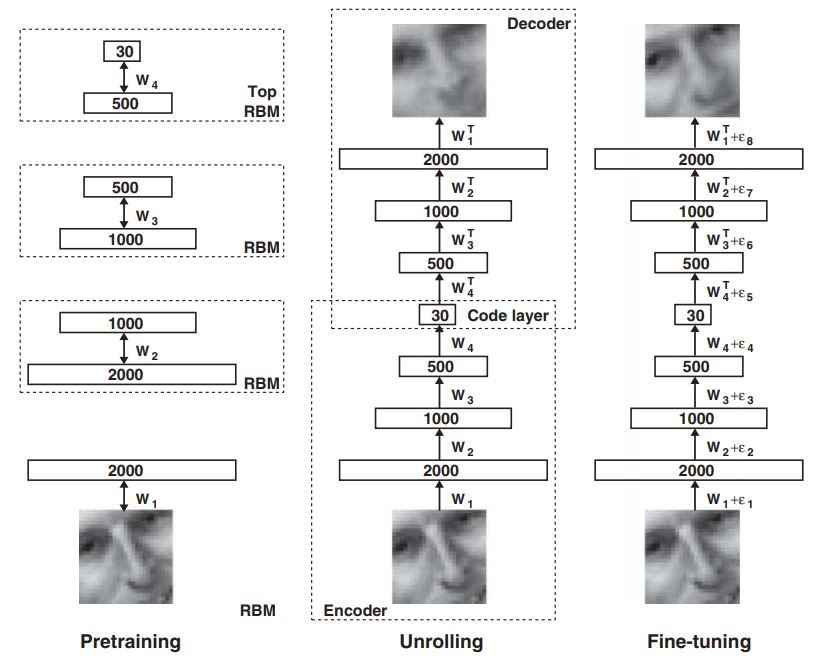

Hinton was the leading figure in creating the solution, and an important tool was the restricted Boltzmann machine (RBM). For RBMs, Hinton created an efficient approximate learning algorithm, called contrastive divergence, which was much faster than the learning algorithm for the full Boltzmann machine. Hinton and his collaborators then developed a pre-training procedure for multilayer networks, in which the layers are trained one by one using an RBM. An early application of this approach was an autoencoder network for dimensionality reduction.
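As a rough illustration of contrastive divergence, the sketch below runs one-step updates (often written CD-1) on a tiny RBM with binary nodes. It is a simplified sketch under stated assumptions, not Hinton’s exact procedure: the function names, layer sizes, learning rate, number of passes, and the two training vectors are all invented for this example. Note that the weight matrix only links visible nodes to hidden nodes, which reflects the restriction that nodes in the same layer are not connected.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(weights, hidden_bias, visible):
    """Probability that each hidden node is "on", given the visible nodes."""
    return [sigmoid(b + sum(w * v for w, v in zip(row, visible)))
            for row, b in zip(weights, hidden_bias)]


def visible_probs(weights, visible_bias, hidden):
    """Probability that each visible node is "on", given the hidden nodes."""
    return [sigmoid(visible_bias[i]
                    + sum(weights[j][i] * hidden[j] for j in range(len(hidden))))
            for i in range(len(visible_bias))]


def sample(probs, rng):
    """Flip each switch on with its given probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(weights, visible_bias, hidden_bias, v0, rng, learning_rate=0.1):
    """In-place CD-1 update for one binary training vector v0."""
    h0_probs = hidden_probs(weights, hidden_bias, v0)
    h0 = sample(h0_probs, rng)
    # One reconstruction step instead of running the chain to equilibrium.
    v1 = sample(visible_probs(weights, visible_bias, h0), rng)
    h1_probs = hidden_probs(weights, hidden_bias, v1)
    for j in range(len(hidden_bias)):
        for i in range(len(visible_bias)):
            weights[j][i] += learning_rate * (
                h0_probs[j] * v0[i] - h1_probs[j] * v1[i])
        hidden_bias[j] += learning_rate * (h0_probs[j] - h1_probs[j])
    for i in range(len(visible_bias)):
        visible_bias[i] += learning_rate * (v0[i] - v1[i])


rng = random.Random(0)
data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # toy training vectors

# weights[j][i] links hidden node j to visible node i.
weights = [[rng.uniform(-0.1, 0.1) for _ in range(4)] for _ in range(2)]
visible_bias = [0.0] * 4
hidden_bias = [0.0] * 2

for _ in range(50):
    for v in data:
        cd1_update(weights, visible_bias, hidden_bias, v, rng)

hidden_sample = sample(hidden_probs(weights, hidden_bias, data[0]), rng)
reconstruction = visible_probs(weights, visible_bias, hidden_sample)
```

The speed-up comes from the single reconstruction step: rather than sampling until the network reaches equilibrium, the update compares statistics from the data with statistics from one round of reconstruction.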

Following pre-training, it became possible to perform a global fine-tuning of the parameters using the backpropagation algorithm. The pre-training with RBMs identified structures in data, like corners in images, without using labeled training data. Once these structures had been found, labeling them by backpropagation turned out to be a relatively simple task. By linking layers pre-trained in this way, Hinton was able to successfully implement examples of deep and dense networks, a great achievement for deep learning. Now, let’s move on to explore the impact of Hinton and Hopfield’s research and the future implications of their work.

The Impact of Hopfield and Hinton’s Research

The groundbreaking research of Hopfield and Hinton has had a deep impact on the field of AI. Their work advanced the theoretical foundations of neural networks and led to the capabilities that AI has today. Image recognition, for example, has been greatly enhanced by their work, allowing for tasks like detecting objects, faces, and even emotions. Natural language processing (NLP) is another such area. Thanks to their contributions, we now have models that can understand and generate human-like text, enabling popular applications like the GPTs.

The list of everyday applications based on ANNs is long, and these networks are behind almost everything we do with computers. However, their research also has a broader impact on scientific discovery. In fields like physics, chemistry, and biology, researchers use AI to simulate experiments and design new drugs and materials. In astrophysics and astronomy, ANNs have also become a standard data analysis tool, and researchers recently used them to obtain a neutrino image of the Milky Way.

Decision support in health care is also a well-established application for ANNs. A recent prospective randomized study of mammographic screening images showed a clear benefit of using machine learning to improve the detection of breast cancer. Motion correction for magnetic resonance imaging (MRI) scans is another example.

The Future Implications of the Nobel Prize in Physics 2024

The future implications of John J. Hopfield and Geoffrey E. Hinton’s research are vast. Hopfield’s research on recurrent networks and associative memory laid the foundations, and Hinton’s further exploration of deep learning and generative AI has led to the development of powerful AI systems. As AI continues to evolve, we can expect even more groundbreaking research and transformative applications. Their work has laid the foundation for a future where AI can help solve the world’s most pressing challenges. The 2024 Nobel Prize in Physics is a testament to their remarkable achievements and their lasting impact on AI. However, it is important to keep in mind that as we continue to develop and deploy AI systems, we must use them ethically and responsibly to benefit us and the planet.

FAQs

Q1. What are artificial neural networks (ANNs)?

The biological neural networks in the human brain inspired the architecture of ANNs. They consist of connected nodes organized in layers, with weighted connections between them. Learning occurs by adjusting these weights based on the network’s training data.

Q2. What is deep learning?

Deep learning is a subfield of machine learning that uses ANNs with multiple hidden layers to learn complex patterns and representations from input data.

Q3. What is generative AI?

Generative AI refers to AI systems that can generate new content. These systems learn the patterns and structures of the input data and then use this knowledge to create new and original content.

Q4. What is the significance of Hopfield and Hinton’s research?

Hopfield and Hinton’s research has been foundational in the development of modern AI. Their work led to the practical AI applications we have today.