In the ongoing effort to make AI more like humans, OpenAI’s GPT models have continually pushed the boundaries. GPT-4 is now able to accept prompts of both text and images.

Multimodality in generative AI denotes a model’s capability to produce varied outputs like text, images, or audio based on the input. These models, trained on specific data, learn underlying patterns to generate similar new data, enriching AI applications.

Recent Strides in Multimodal AI

A recent notable leap in this field is the integration of DALL-E 3 into ChatGPT, a significant upgrade in OpenAI’s text-to-image technology. This combination allows for a smoother interaction in which ChatGPT helps craft precise prompts for DALL-E 3, turning user ideas into vivid AI-generated art. So, while users can interact with DALL-E 3 directly, having ChatGPT in the mix makes the process of creating AI art much more user-friendly.

Check out more on DALL-E 3 and its integration with ChatGPT here. This collaboration not only showcases the advancement in multimodal AI but also makes AI art creation a breeze for users.

Google Health, on the other hand, introduced Med-PaLM M in June this year. It’s a multimodal generative model adept at encoding and interpreting diverse biomedical data. This was achieved by fine-tuning PaLM-E, a language model, to cater to medical domains using an open-source benchmark, MultiMedBench. This benchmark consists of over 1 million samples across 7 biomedical data types and 14 tasks like medical question-answering and radiology report generation.

Various industries are adopting innovative multimodal AI tools to fuel business expansion, streamline operations, and elevate customer engagement. Progress in voice, video, and text AI capabilities is propelling multimodal AI’s growth.

Enterprises seek multimodal AI applications capable of overhauling business models and processes, opening growth avenues across the generative AI ecosystem, from data tools to emerging AI applications.

After GPT-4’s launch in March, some users observed a decline in its response quality over time, a concern echoed by notable developers and on OpenAI’s forums. OpenAI initially dismissed the concern, but a later study confirmed the issue. It found that GPT-4’s accuracy on one task, identifying prime numbers, dropped from 97.6% to 2.4% between March and June, indicating a decline in answer quality with subsequent model updates.

ChatGPT (Blue) & Artificial Intelligence (Red) Google Search Trend

The hype around OpenAI’s ChatGPT is back. It now comes with a vision feature, GPT-4V, that lets users have GPT-4 analyze images they provide. This is the newest feature to be opened up to users.

Adding image analysis to large language models (LLMs) like GPT-4 is seen by some as a big step forward in AI research and development. This kind of multimodal LLM opens up new possibilities, taking language models beyond text to offer new interfaces and solve new kinds of tasks, creating fresh experiences for users.

The training of GPT-4V was completed in 2022, with early access rolled out in March 2023. The visual feature in GPT-4V is powered by GPT-4 technology, and the training process remained the same. Initially, the model was trained to predict the next word in a text using a massive dataset of both text and images from various sources, including the internet.

Later, it was fine-tuned with additional data, using a method named reinforcement learning from human feedback (RLHF), to generate outputs that humans preferred.
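
The pretraining step described above, predicting the next word, is usually written as the standard autoregressive language-modeling loss. The formula below is the textbook form for a text sequence $x_1, \dots, x_T$ with model parameters $\theta$. OpenAI has not published GPT-4V’s exact objective, so treat this as an assumption about its general shape rather than the actual training recipe.

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_{\theta}\left(x_t \mid x_1, \dots, x_{t-1}\right)
```

Minimizing this loss pushes the model to assign high probability to each observed next token given the tokens that precede it.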

GPT-4 Vision Mechanics

GPT-4’s vision-language capabilities are impressive, but the methods underlying them remain largely undisclosed.

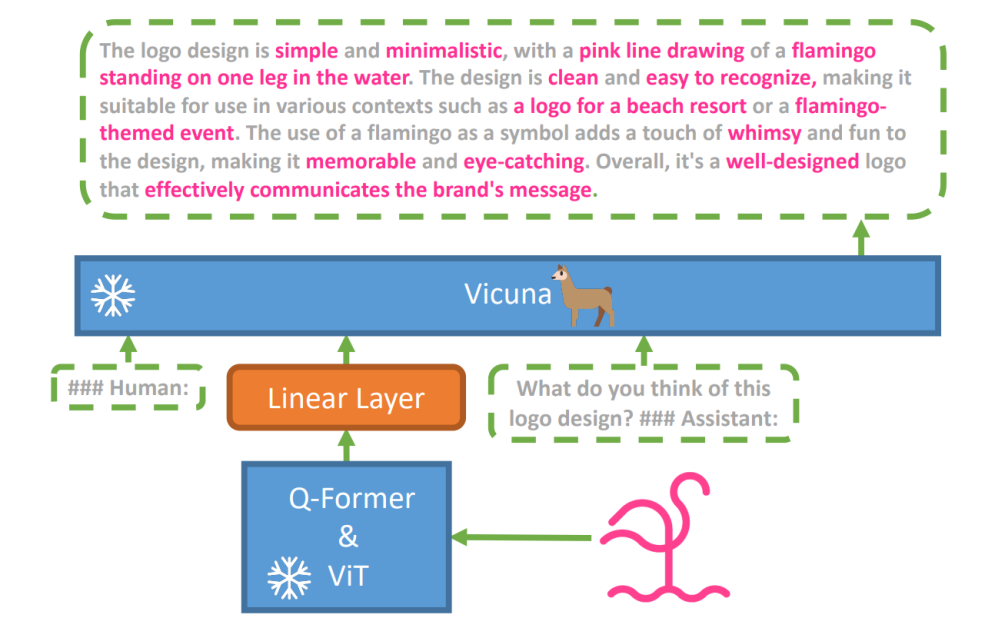

One hypothesis is that these capabilities stem from pairing visual input with an advanced LLM. To explore this hypothesis, a new vision-language model, MiniGPT-4, was introduced, using an advanced LLM named Vicuna. This model uses a vision encoder with pre-trained components for visual perception, aligning the encoded visual features with the Vicuna language model through a single projection layer. The architecture of MiniGPT-4 is simple yet effective, with a focus on aligning visual and language features to improve visual conversation capabilities.

MiniGPT-4’s architecture includes a vision encoder with pre-trained ViT and Q-Former, a single linear projection layer, and an advanced Vicuna large language model.
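
To make the role of the single linear projection layer concrete, here is a minimal pure-Python sketch. It is not MiniGPT-4’s code: the dimensions, the random weights, and the helper names (`make_projection`, `project`, `build_llm_input`) are all hypothetical, and placing the visual tokens ahead of the text embeddings is a simplifying assumption. It only illustrates the idea that visual tokens are mapped into the language model’s embedding width and then consumed alongside text embeddings.

```python
import random

# Toy sizes chosen for illustration only (not MiniGPT-4's real dimensions).
NUM_VISUAL_TOKENS = 3  # tokens produced by the vision encoder (ViT + Q-Former)
VISION_DIM = 4         # width of each visual token
LLM_DIM = 6            # width of the language model's token embeddings


def make_projection(in_dim, out_dim, seed=0):
    """Create weights and bias for one linear layer, the only bridge between modalities."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias


def project(visual_tokens, weights, bias):
    """Map each visual token from VISION_DIM to LLM_DIM using y = W x + b."""
    return [
        [sum(w * x for w, x in zip(row, token)) + b for row, b in zip(weights, bias)]
        for token in visual_tokens
    ]


def build_llm_input(projected_tokens, text_embeddings):
    """Place the projected visual tokens ahead of the text embeddings (a simplification)."""
    return projected_tokens + text_embeddings


# Stand-ins for real encoder output and real text embeddings.
visual_tokens = [[0.5] * VISION_DIM for _ in range(NUM_VISUAL_TOKENS)]
text_embeddings = [[0.0] * LLM_DIM for _ in range(2)]

weights, bias = make_projection(VISION_DIM, LLM_DIM)
projected = project(visual_tokens, weights, bias)
llm_input = build_llm_input(projected, text_embeddings)

print(len(llm_input), len(llm_input[0]))  # 5 6
```

After projection, every visual token has the same width as a text embedding, which is what lets the language model treat both kinds of input as one sequence.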

The trend of using autoregressive language models in vision-language tasks has also grown, capitalizing on cross-modal transfer to share knowledge between language and multimodal domains.

MiniGPT-4 bridges the visual and language domains by aligning visual information from a pre-trained vision encoder with an advanced LLM. The model uses Vicuna as the language decoder and follows a two-stage training approach. Initially, it’s trained on a large dataset of image-text pairs to grasp vision-language knowledge, followed by fine-tuning on a smaller, high-quality dataset to enhance generation reliability and usability.

To improve the naturalness and usability of generated language in MiniGPT-4, researchers developed a two-stage alignment process, addressing the lack of adequate vision-language alignment datasets. They curated a specialized dataset for this purpose.

Initially, the model generated detailed descriptions of input images, with the level of detail enhanced by a conversational prompt aligned with the Vicuna language model’s format. This stage aimed at generating more comprehensive image descriptions.

Initial Image Description Prompt:

###Human: <Img><ImageFeature></Img>Describe this image in detail. Give as many details as possible. Say everything you see. ###Assistant:

For data post-processing, any inconsistencies or errors in the generated descriptions were corrected using ChatGPT, followed by manual verification to ensure high quality.

Second-Stage Fine-tuning Prompt:

###Human: <Img><ImageFeature></Img><Instruction>###Assistant:
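
The two prompt templates above can be assembled with a few lines of string formatting. The sketch below is illustrative only: the function and constant names are hypothetical, and `<ImageFeature>` is kept as a literal placeholder, on the assumption that the real pipeline swaps in projected visual features at that position.

```python
# Literal placeholder marking where the image features go in the prompt.
IMAGE_SLOT = "<Img><ImageFeature></Img>"

# Instruction text used in the initial image description prompt.
DESCRIBE_INSTRUCTION = (
    "Describe this image in detail. Give as many details as possible. "
    "Say everything you see."
)


def description_prompt():
    """Build the initial image description prompt."""
    return f"###Human: {IMAGE_SLOT}{DESCRIBE_INSTRUCTION} ###Assistant:"


def instruction_prompt(instruction):
    """Build the second-stage fine-tuning prompt for an arbitrary instruction."""
    return f"###Human: {IMAGE_SLOT}{instruction}###Assistant:"


print(description_prompt())
print(instruction_prompt("What is unusual about this image?"))
```

Both prompts share the same `###Human:` / `###Assistant:` framing, so the only thing that changes between stages is the instruction text that follows the image slot.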

This exploration opens a window into understanding the mechanics of multimodal generative AI like GPT-4, shedding light on how vision and language modalities can be effectively integrated to generate coherent and contextually rich outputs.

Exploring GPT-4 Vision

Determining Image Origins with ChatGPT

GPT-4 Vision enhances ChatGPT’s ability to analyze images and pinpoint their geographical origins. This feature shifts user interactions from text alone to a mix of text and visuals, making it a handy tool for those curious about different places through image data.

Asking ChatGPT where a Landmark Image is taken

Complex Math Concepts

GPT-4 Vision excels at delving into complex mathematical ideas by analyzing graphical or handwritten expressions. This feature acts as a useful tool for individuals looking to solve intricate mathematical problems, making GPT-4 Vision a notable aid in educational and academic fields.

Asking ChatGPT to understand a complex math concept

Converting Handwritten Input to LaTeX Codes

One of GPT-4V’s remarkable abilities is its capability to translate handwritten inputs into LaTeX code. This feature is a boon for researchers, academics, and students who often need to convert handwritten mathematical expressions or other technical information into a digital format. The transformation from handwriting to LaTeX expands the horizon of document digitization and simplifies the technical writing process.
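
As a purely illustrative case (not taken from the screenshot in this article), suppose a handwritten note contains the quadratic formula. A LaTeX transcription of that expression would look something like this:

```latex
\[
x = \frac{-b \pm \sqrt{b^{2} - 4ac}}{2a}
\]
```

Output of this kind can be pasted straight into a LaTeX document, though it is still worth checking it against the original handwriting.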

GPT-4V’s ability to convert handwritten input into LaTeX codes

Extracting Table Details

GPT-4V shows skill in extracting details from tables and answering related questions, a vital asset in data analysis. Users can use GPT-4V to sift through tables, gather key insights, and resolve data-driven questions, making it a robust tool for data analysts and other professionals.

GPT-4V deciphering table details and responding to related queries

Comprehending Visual Pointing

The unique ability of GPT-4V to comprehend visual pointing adds a new dimension to user interaction. By understanding visual cues, GPT-4V can respond to queries with a higher contextual understanding.

GPT-4V showcases the distinct ability to comprehend visual pointing

Building Simple Mock-Up Websites using a drawing

Motivated by this tweet, I attempted to create a mock-up for the unite.ai website.

While the outcome didn’t quite match my initial vision, here’s the result I achieved.

ChatGPT Vision based output HTML Frontend

Limitations & Flaws of GPT-4V(ision)

To analyze GPT-4V, the OpenAI team carried out qualitative and quantitative assessments. Qualitative ones included internal tests and external expert reviews, while quantitative ones measured model refusals and accuracy in various scenarios such as identifying harmful content, demographic recognition, privacy concerns, geolocation, cybersecurity, and multimodal jailbreaks.

Still, the model is not perfect.

The paper highlights limitations of GPT-4V, like incorrect inferences and missing text or characters in images. It may hallucinate or invent facts. Notably, it’s not suited for identifying dangerous substances in images, often misidentifying them.

In medical imaging, GPT-4V can provide inconsistent responses and lacks awareness of standard practices, leading to potential misdiagnoses.

Unreliable performance for medical purposes (Source)

It also fails to grasp the nuances of certain hate symbols and may generate inappropriate content based on the visual inputs. OpenAI advises against using GPT-4V for critical interpretations, especially in medical or sensitive contexts.

The arrival of GPT-4 Vision (GPT-4V) brings along a bunch of cool possibilities and new hurdles to jump over. Before rolling it out, a lot of effort went into making sure risks, especially when it comes to pictures of people, were well looked into and reduced. It’s impressive to see how GPT-4V has stepped up, showing a lot of promise in tricky areas like medicine and science.

Now, there are some big questions on the table. For instance, should these models be able to identify famous folks from photos? Should they guess a person’s gender, race, or feelings from a picture? And should there be special tweaks to help visually impaired individuals? These questions open up a can of worms about privacy, fairness, and how AI should fit into our lives, which is something everyone should have a say in.