Google DeepMind quietly revealed a significant advancement in its artificial intelligence (AI) research on Tuesday, presenting a new autoregressive model aimed at improving the understanding of long video inputs.

The new model, named “Mirasol3B,” demonstrates a groundbreaking approach to multimodal learning, processing audio, video, and text data in a more integrated and efficient manner.

According to Isaac Noble, a software engineer at Google Research, and Anelia Angelova, a research scientist at Google DeepMind, who co-wrote a lengthy blog post about their research, the challenge of building multimodal models lies in the heterogeneity of the modalities.

“Some of the modalities might be well synchronized in time (e.g., audio, video) but not aligned with text,” they explain. “Furthermore, the large volume of data in video and audio signals is much larger than that in text, so when combining them in multimodal models, video and audio often cannot be fully consumed and need to be disproportionately compressed. This problem is exacerbated for longer video inputs.”

A new approach to multimodal learning

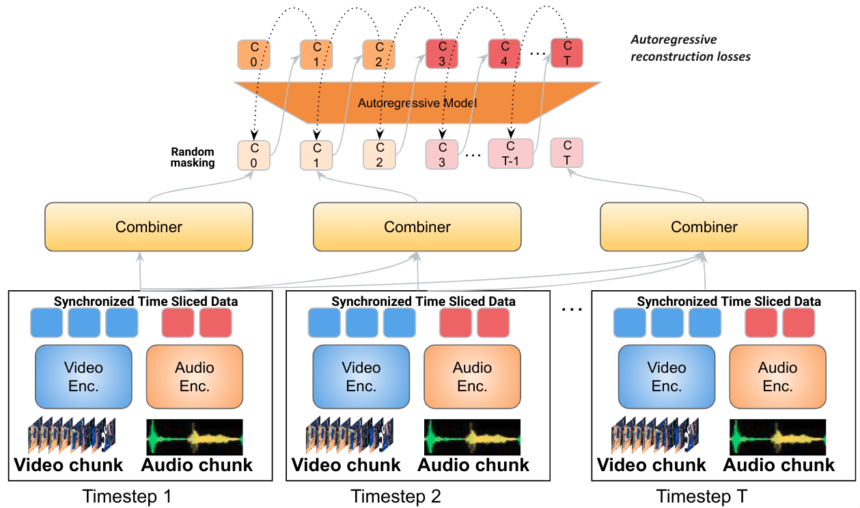

In response to this complexity, Google’s Mirasol3B model decouples multimodal modeling into separate, focused autoregressive models, processing inputs according to the characteristics of the modalities.

“Our model consists of an autoregressive component for the time-synchronized modalities (audio and video) and a separate autoregressive component for modalities that are not necessarily time-aligned but are still sequential, e.g., text inputs, such as a title or description,” Noble and Angelova explain.
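The decoupling described in that quote can be pictured with a toy sketch. This is not Google’s code (none has been released); every function name, the fixed 0.5 mixing weight, and the use of plain averages in place of learned networks are assumptions made purely for illustration. It only shows the shape of the idea: time-aligned audio and video are chunked and summarized step by step, and a separate sequential pass over the text is conditioned on those summaries.

```python
from typing import List, Sequence


def mean(values: Sequence[float]) -> float:
    """Average of a sequence; 0.0 for an empty one so short inputs do not crash."""
    return sum(values) / len(values) if values else 0.0


def split_into_chunks(seq: Sequence[float], num_chunks: int) -> List[Sequence[float]]:
    """Cut a sequence into num_chunks consecutive pieces of (roughly) equal length."""
    size = -(-len(seq) // num_chunks)  # ceiling division
    return [seq[i * size:(i + 1) * size] for i in range(num_chunks)]


def audio_video_component(video: Sequence[float], audio: Sequence[float],
                          num_chunks: int) -> List[float]:
    """Hypothetical stand-in for the time-synchronized component.

    Video and audio are split into the same number of time chunks, each pair
    is compressed to a single number, and each summary also depends on the
    previous one (the autoregressive part).
    """
    latents: List[float] = []
    state = 0.0
    for v, a in zip(split_into_chunks(video, num_chunks),
                    split_into_chunks(audio, num_chunks)):
        combined = (mean(v) + mean(a)) / 2   # toy joint audio-video feature
        state = 0.5 * state + 0.5 * combined  # condition on earlier chunks
        latents.append(state)
    return latents


def text_component(tokens: Sequence[str], av_latents: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for the text component.

    Text is processed in order and is not aligned to the chunks in time;
    every step simply sees a summary of all audio-video latents.
    """
    context = mean(av_latents)
    outputs: List[float] = []
    running = 0.0
    for token in tokens:
        running += len(token)  # toy "state" carried across text steps
        outputs.append(running + context)
    return outputs


if __name__ == "__main__":
    video = [1.0] * 8    # 8 toy "frames"
    audio = [3.0] * 16   # 16 toy audio samples covering the same time span
    latents = audio_video_component(video, audio, num_chunks=4)
    print(latents)
    print(text_component(["a", "title"], latents))
```

Note that the audio-video side emits one summary per chunk regardless of how many frames or samples the chunk holds, which is the kind of compression the researchers describe for long inputs.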

The announcement comes at a time when the tech industry is striving to harness the power of AI to analyze and understand vast amounts of data across different formats. Google’s Mirasol3B represents a significant step forward in this endeavor, opening up new possibilities for applications such as video question answering and long video quality assurance.

Potential applications for YouTube

One possible application that Google might explore is YouTube, which is the world’s largest online video platform and one of the company’s main sources of revenue.

The model could theoretically be used to enhance the user experience and engagement by providing more multimodal features and functionalities, such as generating captions and summaries for videos, answering questions and providing feedback, creating personalized recommendations and advertisements, and enabling users to create and edit their own videos using multimodal inputs and outputs.

For example, the model could generate captions and summaries for videos based on both the visual and audio content, and allow users to search and filter videos by keywords, topics, or sentiments. This could improve the accessibility and discoverability of the videos, and help users find the content they are looking for more easily and quickly.

The model could also theoretically be used to answer questions and provide feedback for users based on the video content, such as explaining the meaning of a term, providing additional information or resources, or suggesting related videos or playlists.

The announcement has generated a lot of interest and excitement in the artificial intelligence community, as well as some skepticism and criticism. Some experts have praised the model for its versatility and scalability, and expressed their hopes for its potential applications in various domains.

For instance, Leo Tronchon, an ML research engineer at Hugging Face, tweeted: “Very interesting to see models like Mirasol incorporating more modalities. There aren’t many strong models in the open using both audio and video yet. It would be really useful to have it on [Hugging Face].”

Gautam Sharda, a computer science student at the University of Iowa, tweeted: “Seems like there’s no code, model weights, training data, or even an API. Why not? I’d love to see them actually release something beyond just a research paper.”

A significant milestone for the future of AI

The announcement marks a significant milestone in the field of artificial intelligence and machine learning, and demonstrates Google’s ambition and leadership in developing cutting-edge technologies that can enhance and transform human lives.

However, it also poses both a challenge and an opportunity for the researchers, developers, regulators, and users of AI, who need to ensure that the model and its applications are aligned with the ethical, social, and environmental values and standards of society.

As the world becomes more multimodal and interconnected, it is essential to foster a culture of collaboration, innovation, and responsibility among the stakeholders and the public, and to create a more inclusive and diverse AI ecosystem that can benefit everyone.