Artificial Neural Networks (ANNs) have been shown to be state-of-the-art in many cases of supervised learning, but programming an ANN manually can be a challenging task. As a result, frameworks such as TensorFlow and PyTorch have been created to simplify the creation, serving, and scaling of deep learning models.

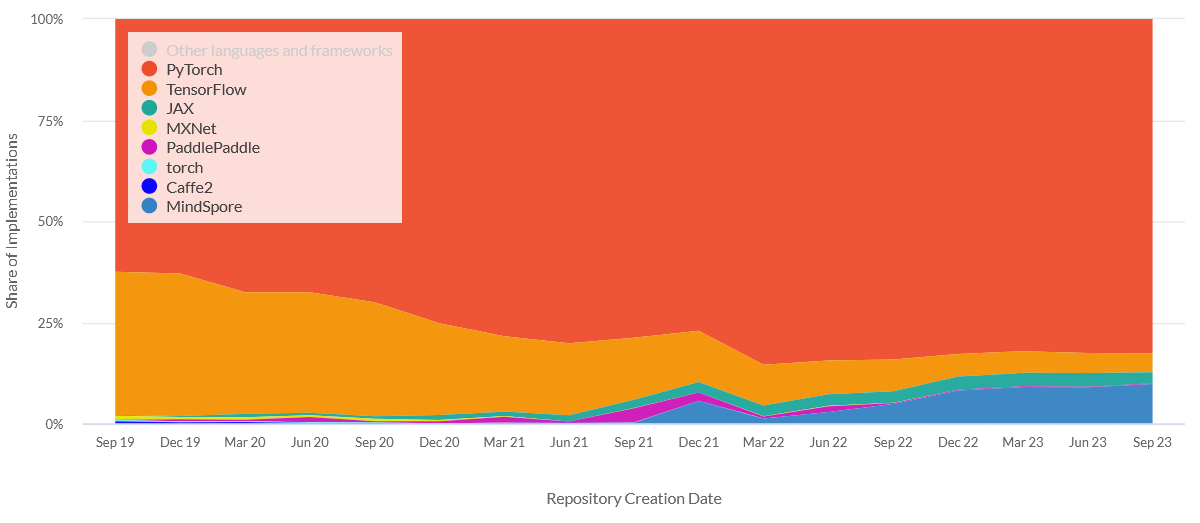

With the increased interest in deep learning in recent years, there has been an explosion of machine learning tools. Deep learning frameworks such as PyTorch, TensorFlow, Keras, Chainer, and others have been introduced and developed at a rapid pace. These frameworks provide neural network units, cost functions, and optimizers to assemble and train neural network models.

Using artificial neural networks is an important approach for drawing inferences and making predictions when analyzing large and complex data sets. TensorFlow and PyTorch are two widely used machine learning frameworks that support artificial neural network models.

This article describes the effectiveness of, and differences between, these two frameworks. It draws on recent studies comparing their training time, memory usage, and ease of use. In particular, you will learn about:

- Characteristics of PyTorch vs. TensorFlow

- Performance, Accuracy, Training, and Ease of Use

- Main Differences Between PyTorch and TensorFlow

- Complete Comparison Table

Key Characteristics of TensorFlow and PyTorch

TensorFlow Overview

TensorFlow is a very popular end-to-end open-source platform for machine learning. It was originally developed by researchers and engineers working on the Google Brain team before it was open-sourced.

The TensorFlow software library replaced Google’s DistBelief framework and runs on almost all available execution platforms (CPU, GPU, TPU, mobile, etc.). The framework provides a math library that includes basic arithmetic operators and trigonometric functions.

TensorFlow is currently used by various international companies, such as Google, Uber, and Microsoft, as well as by a wide range of universities.

Keras is the high-level API of the TensorFlow platform. It provides an approachable, efficient interface for solving machine learning (ML) problems, with a focus on modern deep learning models. The TensorFlow Lite implementation is specifically designed for edge-based machine learning. TF Lite is optimized to run various lightweight algorithms on resource-constrained edge devices, such as smartphones, microcontrollers, and other chips.

TensorFlow Serving provides a high-performance and flexible system for deploying machine learning models in production settings. One of the easiest ways to get started with TensorFlow Serving is with Docker. For enterprise applications using TensorFlow, check out the computer vision platform Viso Suite, which automates the end-to-end infrastructure around serving a TensorFlow model at scale.
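Here is what serving looks like from the client side. This is a minimal sketch that assumes a model exported under the hypothetical name `my_model` is already being served by the official `tensorflow/serving` Docker image on its default REST port, 8501. The sketch only builds a request for TensorFlow Serving’s REST predict endpoint (`/v1/models/<name>:predict`, with inputs under the `"instances"` key). It does not send anything, so it runs without a server.

```python
import json
import urllib.request

# Assumed server setup (placeholder path; the directory holds a SavedModel
# in a numbered version subdirectory such as 1/):
#   docker run -p 8501:8501 -v /path/to/my_model:/models/my_model \
#       -e MODEL_NAME=my_model -t tensorflow/serving


def build_predict_request(model_name, instances, host="localhost", port=8501, version=None):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    # Endpoint shape: /v1/models/<name>[/versions/<n>]:predict
    path = f"/v1/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"
    url = f"http://{host}:{port}{path}:predict"
    # "Row" format: a list of input instances under the "instances" key
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


req = build_predict_request("my_model", [[1.0, 2.0, 5.0]])
print(req.full_url)      # http://localhost:8501/v1/models/my_model:predict
print(req.get_method())  # POST
# With a server running, the request could be sent like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["predictions"])
```

Building the request separately from sending it keeps the URL and payload format easy to inspect and test without a running server.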

TensorFlow Advantages

- Support and library management: TensorFlow is backed by Google and has frequent releases with new features. It is widely used in production environments.

- Open-sourced: TensorFlow is an open-source platform that is very popular and available to a broad range of users.

- Data visualization: TensorFlow provides a tool called TensorBoard to visualize data graphically. It also allows easy debugging of nodes, reduces the effort of reading through the entire code, and makes it easier to inspect the neural network as a whole.

- Keras compatibility: TensorFlow is compatible with Keras, which lets its users code some high-level functionality and adds system-specific functionality to TensorFlow (pipelining, estimators, etc.).

- Highly scalable: TensorFlow can be deployed on virtually every kind of machine, which allows its users to develop almost any kind of system.

- Compatibility: TensorFlow is compatible with many languages, such as C++, JavaScript, Python, C#, Ruby, and Swift. This allows users to work in an environment they are comfortable with.

- Architectural support: TensorFlow is used as a hardware acceleration library due to the parallelism of its work models. It uses different distribution strategies on GPU and CPU systems. TensorFlow can also run on Google’s own accelerator architecture, the TPU (Tensor Processing Unit), which can perform computations faster than GPUs and CPUs. Therefore, models built using TPUs can be easily deployed on a cloud at a lower cost and executed faster. Note, however, that the first-generation TPU was designed only for executing models (inference), not for training them; later TPU generations support training as well.

TensorFlow Disadvantages

- Benchmark tests: Computation speed is where TensorFlow lags behind its competitors. It is also less user-friendly than other frameworks.

- Dependency: Although TensorFlow reduces the length of code and makes it easier for users to access, it adds a level of complexity to its use. All code must be executed on a supported platform, which increases the dependencies required for execution.

- Symbolic loops: TensorFlow lags behind in providing symbolic loops for indefinite (variable-length) sequences. It supports definite (fixed-length) sequences, which keeps it usable, and for this reason it is often described as a low-level API.

- GPU Support: Originally, TensorFlow supported only NVIDIA GPUs and only Python for GPU programming. This is a drawback given the rise of other languages in deep learning.

TensorFlow Distribution Strategies (`tf.distribute.Strategy`) is a TensorFlow API for distributing training across multiple GPUs, multiple machines, or TPUs. Using this API, you can distribute your existing models and training code with minimal code changes.
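A synchronous strategy such as `tf.distribute.MirroredStrategy` works roughly as follows:

- It keeps one model copy per device.
- It gives each copy a slice of the batch.
- It averages the gradients with an all-reduce.
- Every replica then applies the same update.

The framework-free sketch below imitates that loop for a one-parameter linear model. The replicas are simulated sequentially in one process, and all function names are hypothetical, not part of the TensorFlow API.

```python
def grad_mse(w, xs, ys):
    # Gradient of mean((w*x - y)^2) with respect to w, over one shard
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values):
    # Stand-in for the collective that averages gradients across replicas
    return sum(values) / len(values)


def data_parallel_step(w, xs, ys, num_replicas, lr):
    # Split the global batch into equal shards, one per (simulated) replica
    if len(xs) % num_replicas:
        raise ValueError("batch size must be divisible by num_replicas")
    shard = len(xs) // num_replicas
    grads = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_replicas)
    ]
    # Every replica applies the same averaged update, so copies stay in sync
    return w - lr * all_reduce_mean(grads)


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated with w = 2
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, xs, ys, num_replicas=2, lr=0.05)
print(round(w, 4))  # 2.0
```

With equal-sized shards, the averaged per-replica gradient equals the full-batch gradient, so the result matches single-device training.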

PyTorch Overview

PyTorch was first released in 2016. Before PyTorch, deep learning frameworks usually focused on either speed or usability, but not both. PyTorch has become a popular tool in the deep learning research community by combining a focus on usability with careful performance considerations. It provides an imperative and Pythonic programming style that supports code as a model and makes debugging easy. It is also consistent with other popular scientific computing libraries, while remaining efficient and supporting hardware accelerators such as GPUs.

The open-source deep learning framework is a Python library that performs immediate execution of dynamic tensor computations with automatic differentiation and GPU acceleration. It does so while maintaining performance comparable to the fastest current deep learning libraries. Today, most of its core is written in C++, which is one of the main reasons PyTorch achieves much lower overhead than other frameworks. PyTorch currently appears best suited for drastically shortening the design, training, and testing cycle for new neural networks built for specific purposes. As a result, it has become very popular in the research community.

PyTorch 2.0 marks a major advancement in the PyTorch framework. It offers enhanced performance while maintaining backward compatibility and the Python-centric approach that has been key to its widespread adoption in the AI/ML community.

For mobile deployment, PyTorch provides experimental end-to-end workflow support from Python to iOS and Android platforms, including API extensions for mobile ML integration and preprocessing tasks. PyTorch is well suited to natural language processing (NLP) tasks that power intelligent language applications using deep learning. Additionally, PyTorch offers native support for the ONNX (Open Neural Network Exchange) format, allowing seamless model export and compatibility with ONNX-compatible platforms and tools.

Several popular deep learning software and research-oriented projects are built on top of PyTorch, including Tesla Autopilot and Uber’s Pyro.
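“Dynamic tensor computations with automatic differentiation” describes a define-by-run design. The computation graph is recorded as ordinary Python code executes, and gradients are obtained by walking that record backwards. The following pure-Python sketch shows the idea for scalars only. The `Value` class is a hypothetical teaching aid, not PyTorch’s implementation, which works on tensors in C++.

```python
class Value:
    """A scalar that records the operations applied to it (define-by-run)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # set by the operation that created it

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        # Topologically sort the graph that was recorded while the code ran
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()


def f(x, n):
    # Ordinary Python control flow decides the graph's shape at runtime
    y = x
    for _ in range(n - 1):
        y = y * x
    return y + 1


x = Value(3.0)
out = f(x, n=3)  # x**3 + 1
out.backward()
print(out.data, x.grad)  # 28.0 27.0
```

In PyTorch itself, the same computation would start from `torch.tensor(3.0, requires_grad=True)`, call `.backward()` on the result, and read the gradient from `x.grad`.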

PyTorch Advantages

- PyTorch is based on Python: PyTorch is Python-centric, or “pythonic”. It is designed for deep integration with Python code, rather than being an interface to a library written in some other language. Python is one of the most popular languages among data scientists, and also one of the most popular languages for building machine learning models and for ML research.

- Easier to learn: Because its syntax follows conventional Python, PyTorch is comparatively easier to learn than other deep learning frameworks.

- Debugging: PyTorch can be debugged using one of the many widely available Python debugging tools (for example, Python’s pdb and ipdb tools).

- Dynamic computational graphs: PyTorch supports dynamic computational graphs, which means the network’s behavior can be changed programmatically at runtime. This makes optimizing the model much easier. It gives PyTorch a major advantage over other machine learning frameworks, which treat neural networks as static objects.

- Data parallelism: PyTorch’s data parallelism feature allows it to distribute computational work among multiple CPU or GPU cores. Although this kind of parallelism is possible in other machine learning tools, it is much easier in PyTorch.

- Community: PyTorch has a very active community and forums (discuss.pytorch.org). Its documentation (pytorch.org) is well organized and helpful for beginners; it is kept up to date with PyTorch releases and offers a set of tutorials. PyTorch is very simple to use, which also means that the learning curve for developers is relatively short.

- Distributed Training: PyTorch offers native support for asynchronous execution of collective operations and peer-to-peer communication, accessible from both Python and C++.

PyTorch Disadvantages

- Lacks model serving in production: While this may change in the future, other frameworks have been more widely used for real production work, even as PyTorch becomes increasingly popular in the research community. As a result, its production-focused documentation and developer community are smaller than those of other frameworks.

- Limited monitoring and visualization interfaces: TensorFlow comes with a highly capable visualization tool for building the model graph (TensorBoard), and PyTorch does not have anything like this yet. Developers can instead use one of the many existing Python data visualization tools or connect externally to TensorBoard.

- Not as extensive as TensorFlow: PyTorch is not an end-to-end machine learning development tool. Developing actual applications requires converting the PyTorch code into another framework, such as Caffe2, to deploy applications to servers, workstations, and mobile devices.

Comparing PyTorch vs. TensorFlow

1.) Performance Comparison

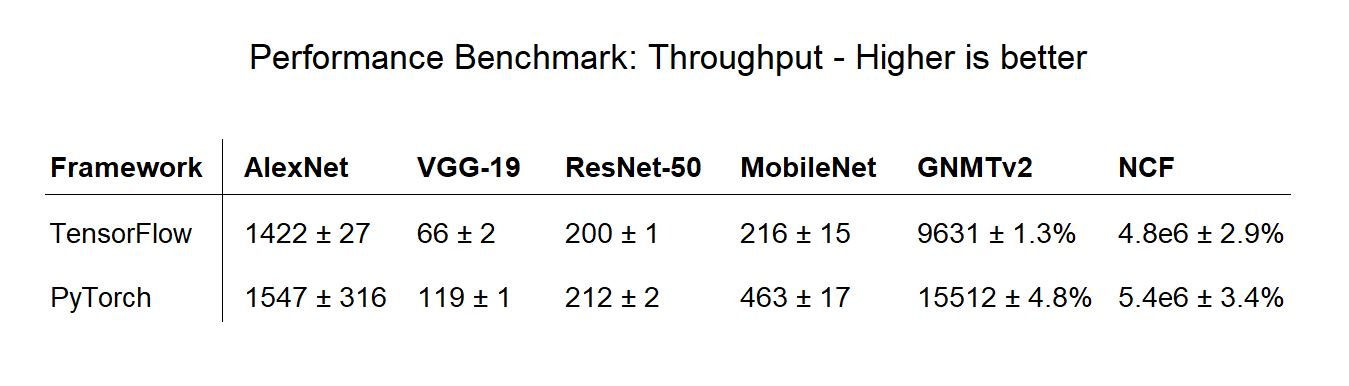

The following performance benchmark aims to give an overall comparison of PyTorch’s single-machine eager-mode performance against the popular graph-based deep learning framework TensorFlow.

The table shows the training speed of the two frameworks using 32-bit floats. Throughput is measured in:

- images per second for the AlexNet, VGG-19, ResNet-50, and MobileNet models
- tokens per second for the GNMTv2 model
- samples per second for the NCF model

In this benchmark, PyTorch’s throughput is comparable to or better than TensorFlow’s. The two are close largely because both tools offload most of the computation to the same versions of the cuDNN and cuBLAS libraries.
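Throughput figures like these are usually obtained in three steps:

1. Run a few warm-up iterations.
2. Time a fixed number of training steps.
3. Divide the number of processed samples by the elapsed time.

The helper below is a minimal, framework-agnostic sketch of that procedure. `dummy_step` is a hypothetical placeholder for a real forward/backward/update pass, and the batch size of 64 is arbitrary.

```python
import time


def measure_throughput(step, batch_size, warmup=3, iters=10):
    """Return samples processed per second by a training-step callable."""
    # Warm-up iterations exclude one-off costs such as lazy initialization
    for _ in range(warmup):
        step()
    start = time.perf_counter()
    for _ in range(iters):
        step()
    elapsed = time.perf_counter() - start
    return batch_size * iters / elapsed


def dummy_step():
    # Hypothetical stand-in for one forward/backward/update pass
    sum(i * i for i in range(10_000))


rate = measure_throughput(dummy_step, batch_size=64)
print(f"{rate:.0f} samples/sec")
```

With GPU frameworks, kernels run asynchronously. A real benchmark must therefore wait for the device to finish before reading the clock (for example, with `torch.cuda.synchronize()` in PyTorch); otherwise the measured rate is inflated.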

2.) Accuracy

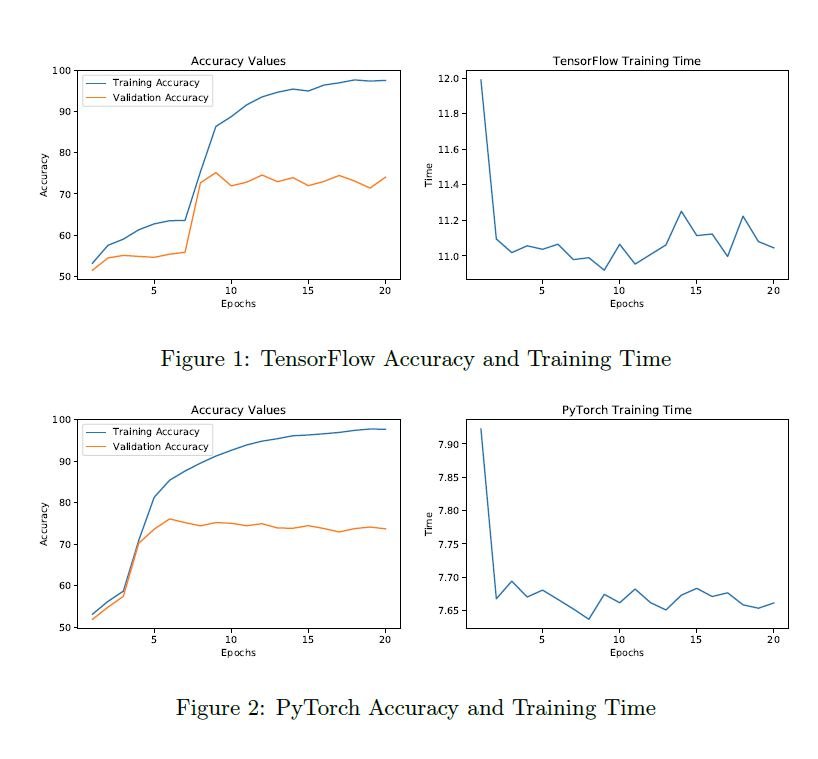

The TensorFlow Accuracy and PyTorch Accuracy graphs (shown in the figure above) show how similar the accuracies of the two frameworks are. For both models, the training accuracy increases steadily as the models start to memorize the data they are trained on.

The validation accuracy indicates how well the model is actually learning during training. In both frameworks, the validation accuracy averaged about 78% after 20 epochs. Hence, both frameworks implement the neural network accurately and produce the same results given the same model and training data set.
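The gap between training and validation accuracy is what separates memorization from learning. The toy sketch below uses hypothetical data and models, unrelated to the study’s experiments. A model that merely memorizes its training examples scores perfectly on them but only at chance on unseen inputs. A model that has captured the underlying rule does well on both.

```python
def accuracy(predict, examples):
    # Fraction of (input, label) pairs the model labels correctly
    correct = sum(1 for x, y in examples if predict(x) == y)
    return correct / len(examples)


train = [(1, "odd"), (2, "even"), (3, "odd"), (4, "even")]
val = [(5, "odd"), (6, "even"), (7, "odd"), (8, "even")]

# Hypothetical "model" that only memorizes the training set
lookup = dict(train)
memorizer = lambda x: lookup.get(x, "odd")

# Hypothetical model that captured the underlying rule
rule = lambda x: "even" if x % 2 == 0 else "odd"

print(accuracy(memorizer, train), accuracy(memorizer, val))  # 1.0 0.5
print(accuracy(rule, train), accuracy(rule, val))            # 1.0 1.0
```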

3.) Training Time and Memory Usage

The figure above shows the training times of TensorFlow and PyTorch. TensorFlow’s training time is significantly higher: an average of 11.19 seconds, vs. an average of 7.67 seconds for PyTorch.

The absolute training times on Google Colaboratory vary significantly from day to day, but the relative durations of TensorFlow and PyTorch remain consistent.

Memory usage during training was significantly lower for TensorFlow (1.7 GB of RAM) than for PyTorch (3.5 GB of RAM). However, both models showed little variance in memory usage during training. Both also used more memory during the initial loading of the data: 4.8 GB for TensorFlow vs. 5 GB for PyTorch.
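The short calculation below turns “significantly” into numbers by deriving relative differences from the averages reported above. It uses only those reported figures; the percentages are simple arithmetic, not additional measurements.

```python
# Averages reported above (seconds per training run, GB of RAM)
tf_time, pt_time = 11.19, 7.67
tf_mem_train, pt_mem_train = 1.7, 3.5
tf_mem_load, pt_mem_load = 4.8, 5.0


def pct_more(a, b):
    # How much larger a is than b, as a percentage of b
    return (a - b) / b * 100


print(f"TensorFlow training time: {pct_more(tf_time, pt_time):.1f}% longer")             # 45.9% longer
print(f"PyTorch training memory: {pct_more(pt_mem_train, tf_mem_train):.1f}% more")      # 105.9% more
print(f"PyTorch data-loading memory: {pct_more(pt_mem_load, tf_mem_load):.1f}% more")   # 4.2% more
```

In other words, TensorFlow took roughly 1.5 times as long per run, while PyTorch used roughly twice as much RAM during training. Peak memory while loading the data was nearly the same for both frameworks.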

4.) Ease of Use

PyTorch’s more object-oriented style made implementing the model less time-consuming. Specifying the data handling was also more straightforward in PyTorch than in TensorFlow.

On the other hand, TensorFlow has a slightly steeper learning curve because the neural network structure is implemented at a lower level. In return, this low-level approach allows the neural network to be customized more extensively, enabling more specialized features.

Moreover, the very high-level Keras library runs on top of TensorFlow. As a teaching tool, Keras can therefore be used to teach basic concepts. TensorFlow can then deepen the understanding of those concepts, because it requires laying out more of the structure explicitly.

Differences Between PyTorch and TensorFlow – Summary

The answer to the question “which is better, PyTorch or TensorFlow?” depends on the use case and application, but there are several important aspects to consider:

In general, TensorFlow and PyTorch implementations show equal accuracy. However, TensorFlow’s training time is significantly higher, while its memory usage is lower.

PyTorch allows quicker prototyping than TensorFlow, but TensorFlow may be the better option if the neural network needs custom features.

In graph mode (the default in TensorFlow 1.x), TensorFlow treats the neural network as a static object: if you want to change the behavior of your model, you have to start from scratch. With PyTorch, the neural network can be tweaked on the fly at runtime, making it easier to optimize the model.
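The contrast can be shown with a toy, pure-Python sketch:

- In the define-and-run style, a fixed list of operations is assembled into a runnable graph, so changing the model means rebuilding it.
- In the define-by-run style, the model is just code, and control flow can change it on every call.

`build_graph` and the operations are hypothetical. Real graph frameworks also optimize and compile the graph, which this sketch does not attempt.

```python
# Define-and-run: describe the graph first, then execute it many times.
def build_graph(ops):
    # ops: list of (name, function) pairs fixed at build time
    def run(x):
        for _, fn in ops:
            x = fn(x)
        return x
    return run


static_model = build_graph([("scale", lambda x: 2 * x), ("shift", lambda x: x + 1)])
print(static_model(3))  # 7
# Changing the behavior means building a new graph:
static_model = build_graph([("scale", lambda x: 2 * x), ("square", lambda x: x * x)])
print(static_model(3))  # 36


# Define-by-run: the "graph" is whatever Python code runs on each call,
# so ordinary control flow can change it per input.
def dynamic_model(x, use_square):
    x = 2 * x
    return x * x if use_square else x + 1


print(dynamic_model(3, use_square=False), dynamic_model(3, use_square=True))  # 7 36
```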

Another major difference lies in how developers go about debugging. Effective debugging with TensorFlow requires a special debugger tool that lets you examine how the network nodes perform their calculations at each step. PyTorch can be debugged using one of the many widely available Python debugging tools.

Both PyTorch and TensorFlow provide ways to speed up model development and reduce the amount of boilerplate code. However, the core difference between them is that PyTorch is more “pythonic” and based on an object-oriented approach, while TensorFlow offers more options to choose from, generally resulting in higher flexibility. For many developers familiar with Python, this is an important reason to prefer PyTorch over TensorFlow.

Comparison Table

| Feature | PyTorch | TensorFlow |
|---|---|---|
| Ease of Use | More Pythonic syntax and easier to debug | Steeper learning curve, requires more boilerplate code |
| Dynamic Computation Graph | Easier to modify the computation graph at runtime | Static computation graph requires recompilation for modifications |
| GPU Support | Multi-GPU support is easier to set up and use | Multi-GPU support is more complex and requires more setup; there is a dedicated TF API (`tf.distribute`) |
| Community Support | Newer community compared to TensorFlow, growing very fast | Large and active community with extensive resources |
| Ecosystem | Fewer libraries and tools compared to TensorFlow | Extensive library of pre-built models and tools |
| Debugging | Easier to debug due to Pythonic syntax and dynamic computation graph | Debugging can be more challenging due to the static computation graph |
| Research | Often used for research due to its flexibility and ease of use | Often used for production applications due to its speed and scalability |
| Math Library | Uses its own tensor library (`torch`, built on the ATen C++ library) for tensor manipulation and numerical computations, with NumPy interoperability | Uses its own math library for both tensor manipulation and numerical computations |
| Keras Integration | No native Keras integration | Native Keras integration, which simplifies model building and training |

What’s Next?

If you enjoyed reading this article and want to learn more about artificial intelligence, machine learning, and deep learning, we recommend reading: