There have been many advances in vision-language models (VLMs) that can match natural language queries to objects in a visual scene. Researchers are now experimenting with how these models can be applied to robotics systems, which still lag in generalizing their abilities.

A new paper by researchers at Meta AI and New York University introduces an open-knowledge-based framework that brings pre-trained machine learning (ML) models together to create a robotics system that can perform tasks in unseen environments. Called OK-Robot, the framework combines VLMs with movement-planning and object-manipulation models to perform pick-and-drop operations without training.

Robotic systems are usually designed to be deployed in previously seen environments and are poor at generalizing their capabilities beyond the locations where they’ve been trained. This limitation is especially problematic in settings where data is scarce, such as unstructured homes.

There have been impressive advances in the individual components needed for robotics systems. VLMs are good at matching language prompts to visual objects. At the same time, robotic skills for navigation and grasping have progressed considerably. However, robotic systems that combine modern vision models with robot-specific primitives still perform poorly.

“Making progress on this problem requires a careful and nuanced framework that both integrates VLMs and robotics primitives, while being flexible enough to incorporate newer models as they are developed by the VLM and robotics community,” the researchers write in their paper.

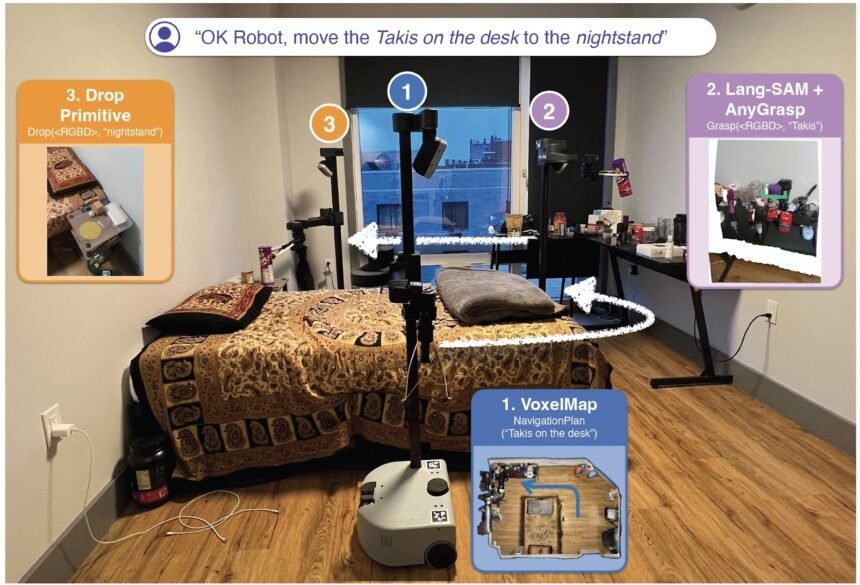

OK-Robot modules (source: arXiv)

OK-Robot combines state-of-the-art VLMs with powerful robotics primitives to perform pick-and-drop tasks in unseen environments. The models used in the system are trained on large, publicly available datasets.

The system has three primary subsystems: an open-vocabulary object navigation module, an RGB-D grasping module and a dropping heuristic system. When placed in a new home, OK-Robot requires a manual scan of the interior, which can be captured with an iPhone app that takes a sequence of RGB-D images as the user moves around the building. The system uses the images, together with the camera pose and position for each one, to create a 3D environment map.
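As a rough illustration of how posed RGB-D frames can be turned into a 3D map, the sketch below back-projects depth pixels through a pinhole camera model and moves them into a shared world frame. It is a minimal example under stated assumptions, not OK-Robot's actual mapping code: the function names, the pinhole intrinsics `(fx, fy, cx, cy)`, and the rotation-plus-translation pose format are all hypothetical choices made for this example.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject(u: int, v: int, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Point:
    """Map pixel (u, v) with metric depth to a 3D point in the camera frame.

    Assumes an ideal pinhole camera with no lens distortion.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def to_world(p_cam: Point,
             rotation: Sequence[Sequence[float]],
             translation: Sequence[float]) -> Point:
    """Apply a camera-to-world pose: p_world = R * p_cam + t."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def frame_to_points(depth_image: Sequence[Sequence[float]],
                    intrinsics: Tuple[float, float, float, float],
                    rotation: Sequence[Sequence[float]],
                    translation: Sequence[float]) -> List[Point]:
    """Convert one depth frame into world-frame points, skipping invalid depth."""
    fx, fy, cx, cy = intrinsics
    points: List[Point] = []
    for v, row in enumerate(depth_image):
        for u, depth in enumerate(row):
            if depth <= 0:  # zero or negative depth means "no reading"
                continue
            p_cam = backproject(u, v, depth, fx, fy, cx, cy)
            points.append(to_world(p_cam, rotation, translation))
    return points
```

Accumulating the output of `frame_to_points` over every frame in the scan would give a point cloud of the home, which is one common starting point for an environment map.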

The system processes each image with a vision transformer (ViT) model to extract information about objects. The object and environment information are brought together to create a semantic object memory module.

Given a natural language query for picking an object, the memory module computes the embedding of the prompt and matches it with the object that has the closest semantic representation. OK-Robot then uses navigation algorithms to find the best path to the object's location in a way that leaves the robot room to manipulate the object without causing collisions.
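The matching step can be pictured as a nearest-neighbor search over embeddings. The sketch below is a simplified illustration, not the paper's implementation: the `ObjectMemory` class, its methods, and the use of cosine similarity are assumptions made for this example, and the embeddings are taken as given rather than produced by a real vision-language encoder.

```python
import math
from typing import List, Optional, Sequence, Tuple

Position = Tuple[float, float, float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class ObjectMemory:
    """Hypothetical store of (label, embedding, 3D position) for scanned objects."""

    def __init__(self) -> None:
        self._entries: List[Tuple[str, Tuple[float, ...], Position]] = []

    def add(self, label: str, embedding: Sequence[float], position: Position) -> None:
        self._entries.append((label, tuple(embedding), position))

    def query(self, query_embedding: Sequence[float]) -> Optional[Tuple[str, Position, float]]:
        """Return (label, position, score) of the most similar stored object."""
        best: Optional[Tuple[str, Position, float]] = None
        for label, embedding, position in self._entries:
            score = cosine_similarity(query_embedding, embedding)
            if best is None or score > best[2]:
                best = (label, position, score)
        return best
```

In a full system, the text prompt and the stored image regions would be embedded into the same space by a vision-language model, and the position returned by `query` would become the navigation target.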

Finally, the robot uses an RGB-D camera, an object segmentation model and a pre-trained grasp model to pick up the object. The system uses a similar process to reach the destination and drop the object. This enables the robot to find the most suitable grasp for each object and to handle destination spots that might not be flat.
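One plausible way to combine a segmentation mask with a grasp model's proposals is to discard any grasp that does not land on the target object and keep the highest-scoring one that remains. The sketch below assumes that strategy for illustration only; the `GraspCandidate` type, the pixel-based mask lookup, and the scoring rule are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class GraspCandidate:
    """Hypothetical grasp proposal: image pixel of the grasp point plus a quality score."""
    u: int  # column in the image
    v: int  # row in the image
    score: float


def select_grasp(candidates: Sequence[GraspCandidate],
                 mask: Sequence[Sequence[bool]]) -> Optional[GraspCandidate]:
    """Keep only grasps whose point lies on the segmented object, then pick the best.

    `mask[v][u]` is True where the segmentation model marked the target object.
    Returns None when no candidate falls on the object.
    """
    on_object: List[GraspCandidate] = [
        g for g in candidates
        if 0 <= g.v < len(mask)
        and 0 <= g.u < len(mask[g.v])
        and mask[g.v][g.u]
    ]
    if not on_object:
        return None
    return max(on_object, key=lambda g: g.score)
```

Filtering before ranking matters here: a grasp model scores graspability in general, so the top proposal in a cluttered scene may belong to a neighboring object rather than the one the user asked for.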

“From arriving into a completely novel environment to start operating autonomously in it, our system takes under 10 minutes on average to complete the first pick-and-drop task,” the researchers write.

The researchers tested OK-Robot in 10 homes and ran 171 pick-and-drop experiments to evaluate how it performs in novel environments. OK-Robot succeeded in completing full pick-and-drops in 58% of cases. Notably, this is a zero-shot algorithm, which means the models used in the system were not specifically trained for such environments. The researchers also found that by improving the queries, decluttering the space, and excluding adversarial objects, the success rate increases to above 82%.

OK-Robot is not perfect. It sometimes fails to match the natural language prompt with the right object. Its grasping model fails on some objects, and the robot hardware has limitations. More importantly, its object memory module is frozen after the environment is scanned. Therefore, the robot can’t dynamically adapt to changes in the objects and their arrangement.

Nonetheless, the OK-Robot project has some very important findings. First, it shows that current open-vocabulary vision-language models are very good at identifying arbitrary objects in the real world and can be used to navigate to them in a zero-shot manner. Second, the findings show that special-purpose robot models pre-trained on large amounts of data can be applied out of the box to approach open-vocabulary grasping in unseen environments. Finally, it shows that with the right tooling and configuration, pre-trained models can be combined to perform zero-shot tasks with no training. OK-Robot could be the beginning of a field of research with plenty of room for improvement.