Over the previous few years, diffusion fashions have achieved huge success and recognition for picture and video technology duties. Video diffusion fashions, particularly, have been gaining vital consideration because of their means to supply movies with excessive coherence in addition to constancy. These fashions generate high-quality movies by using an iterative denoising course of of their structure that regularly transforms high-dimensional Gaussian noise into actual knowledge.

Steady Diffusion is likely one of the most consultant fashions for picture generative duties, counting on a Variational AutoEncoder (VAE) to map between the actual picture and the down-sampled latent options. This permits the mannequin to scale back generative prices, whereas the cross-attention mechanism in its structure facilitates text-conditioned picture technology. Extra just lately, the Steady Diffusion framework has constructed the inspiration for a number of plug-and-play adapters to realize extra progressive and efficient picture or video technology. Nevertheless, the iterative generative course of employed by a majority of video diffusion fashions makes the picture technology course of time-consuming and relatively expensive, limiting its purposes.

On this article, we’ll speak about AnimateLCM, a customized diffusion mannequin with adapters aimed toward producing high-fidelity movies with minimal steps and computational prices. The AnimateLCM framework is impressed by the Consistency Mannequin, which accelerates sampling with minimal steps by distilling pre-trained picture diffusion fashions. Moreover, the profitable extension of the Consistency Mannequin, the Latent Consistency Mannequin (LCM), facilitates conditional picture technology. As an alternative of conducting consistency studying straight on the uncooked video dataset, the AnimateLCM framework proposes utilizing a decoupled consistency studying technique. This technique decouples the distillation of movement technology priors and picture technology priors, permitting the mannequin to boost the visible high quality of the generated content material and enhance coaching effectivity concurrently. Moreover, the AnimateLCM mannequin proposes coaching adapters from scratch or adapting present adapters to its distilled video consistency mannequin. This facilitates the mixture of plug-and-play adapters within the household of steady diffusion fashions to realize totally different features with out harming the pattern pace.

This text goals to cowl the AnimateLCM framework in depth. We discover the mechanism, the methodology, and the structure of the framework, together with its comparability with state-of-the-art picture and video technology frameworks. So, let’s get began.

Diffusion fashions have been the go to framework for picture technology and video technology duties owing to their effectivity and capabilities on generative duties. A majority of diffusion fashions depend on an iterative denoising course of for picture technology that transforms a excessive dimensional Gaussian noise into actual knowledge regularly. Though the strategy delivers considerably passable outcomes, the iterative course of and the variety of iterating samples slows the technology course of and likewise provides to the computational necessities of diffusion fashions which can be a lot slower than different generative frameworks like GAN or Generative Adversarial Networks. Prior to now few years, Consistency Fashions or CMs have been proposed as an alternative choice to iterative diffusion fashions to hurry up the technology course of whereas conserving the computational necessities fixed.

The spotlight of consistency fashions is that they be taught consistency mappings that keep self-consistency of trajectories launched by the pre-trained diffusion fashions. The educational technique of Consistency Fashions permits it to generate high-quality photos with minimal steps, and likewise eliminates the necessity for computation-intensive iterations. Moreover, the Latent Consistency Mannequin or LCM constructed on prime of the steady diffusion framework might be built-in into the net consumer interface with the prevailing adapters to realize a number of extra functionalities like actual time picture to picture translation. Compared, though the prevailing video diffusion fashions ship acceptable outcomes, progress remains to be to be made within the video pattern acceleration area, and is of nice significance owing to the excessive video technology computational prices.

That leads us to AnimateLCM, a excessive constancy video technology framework that wants a minimal variety of steps for the video technology duties. Following the Latent Consistency Mannequin, AnimateLCM framework treats the reverse diffusion course of as fixing CFG or Classifier Free Steering augmented likelihood movement, and trains the mannequin to foretell the answer of such likelihood flows straight within the latent area. Nevertheless, as a substitute of conducting consistency studying on uncooked video knowledge straight that requires excessive coaching and computational assets, and infrequently results in poor high quality, the AnimateLCM framework proposes a decoupled constant studying technique that decouples the consistency distillation of movement technology and picture technology priors.

The AnimateLCM framework first conducts the consistency distillation to adapt the picture base diffusion mannequin into the picture consistency mannequin, after which conducts 3D inflation to each the picture consistency and picture diffusion fashions to accommodate 3D options. Ultimately, the AnimateLCM framework obtains the video consistency mannequin by conducting consistency distillation on video knowledge. Moreover, to alleviate potential function corruption because of the diffusion course of, the AnimateLCM framework additionally proposes to make use of an initialization technique. Because the AnimateLCM framework is constructed on prime of the Steady Diffusion framework, it will possibly exchange the spatial weights of its skilled video consistency mannequin with the publicly out there customized picture diffusion weights to realize progressive technology outcomes.

Moreover, to coach particular adapters from scratch or to go well with publicly out there adapters higher, the AnimateLCM framework proposes an efficient acceleration technique for the adapters that don’t require coaching the precise instructor fashions.

The contributions of the AnimateLCM framework might be very effectively summarized as: The proposed AnimateLCM framework goals to realize top quality, quick, and excessive constancy video technology, and to realize this, the AnimateLCM framework proposes a decoupled distillation technique the decouples the movement and picture technology priors leading to higher technology high quality, and enhanced coaching effectivity.

InstantID : Methodology and Structure

At its core, the InstantID framework attracts heavy inspiration from diffusion fashions and sampling pace methods. Diffusion fashions, often known as score-based generative fashions have demonstrated exceptional picture generative capabilities. Below the steerage of rating course, the iterative sampling technique applied by diffusion fashions denoise the noise-corrupted knowledge regularly. The effectivity of diffusion fashions is likely one of the main explanation why they’re employed by a majority of video diffusion fashions by coaching on added temporal layers. Then again, sampling pace and sampling acceleration methods assist deal with the sluggish technology speeds in diffusion fashions. Distillation primarily based acceleration methodology tunes the unique diffusion weights with a refined structure or scheduler to boost the technology pace.

Shifting alongside, the InstantID framework is constructed on prime of the steady diffusion mannequin that permits InstantID to use related notions. The mannequin treats the discrete ahead diffusion course of as continuous-time Variance Preserving SDE. Moreover, the steady diffusion mannequin is an extension of DDPM or Denoising Diffusion Probabilistic Mannequin, through which the coaching knowledge level is perturbed regularly by the discrete Markov chain with a perturbation kennel permitting the distribution of noisy knowledge at totally different time step to observe the distribution.

To attain high-fidelity video technology with a minimal variety of steps, the AnimateLCM framework tames the steady diffusion-based video fashions to observe the self-consistency property. The general coaching construction of the AnimateLCM framework consists of a decoupled consistency studying technique for instructor free adaptation and efficient consistency studying.

Transition from Diffusion Fashions to Consistency Fashions

The AnimateLCM framework introduces its personal adaptation of the Steady Diffusion Mannequin or DM to the Consistency Mannequin or CM following the design of the Latent Consistency Mannequin or LCM. It’s value noting that though the steady diffusion fashions sometimes predict the noise added to the samples, they’re important sigma-diffusion fashions. It’s in distinction with consistency fashions that purpose to foretell the answer to the PF-ODE trajectory straight. Moreover, in steady diffusion fashions with sure parameters, it’s important for the mannequin to make use of a classifier-free steerage technique to generate top quality photos. The AnimateLCM framework nevertheless, employs a classifier-free steerage augmented ODE solver to pattern the adjoining pairs in the identical trajectories, leading to higher effectivity and enhanced high quality. Moreover, present fashions have indicated that the technology high quality and coaching effectivity is influenced closely by the variety of discrete factors within the trajectory. Smaller variety of discrete factors accelerates the coaching course of whereas the next variety of discrete factors leads to much less bias throughout coaching.

Decoupled Consistency Studying

For the method of consistency distillation, builders have noticed that the information used for coaching closely influences the standard of the ultimate technology of the consistency fashions. Nevertheless, the main subject with publicly out there datasets at present is that always encompass watermark knowledge, or its of low high quality, and may comprise overly transient or ambiguous captions. Moreover, coaching the mannequin straight on large-resolution movies is computationally costly, and time consuming, making it a non-feasible choice for a majority of researchers.

Given the provision of filtered top quality datasets, the AnimateLCM framework proposes to decouple the distillation of the movement priors and picture technology priors. To be extra particular, the AnimateLCM framework first distills the steady diffusion fashions into picture consistency fashions with filtered high-quality picture textual content datasets with higher decision. The framework then trains the sunshine LoRA weights on the layers of the steady diffusion mannequin, thus freezing the weights of the steady diffusion mannequin. As soon as the mannequin tunes the LoRA weights, it really works as a flexible acceleration module, and it has demonstrated its compatibility with different customized fashions within the steady diffusion communities. For inference, the AnimateLCM framework merges the weights of the LoRA with the unique weights with out corrupting the inference pace. After the AnimateLCM framework beneficial properties the consistency mannequin on the degree of picture technology, it freezes the weights of the steady diffusion mannequin and LoRA weights on it. Moreover, the mannequin inflates the 2D convolution kernels to the pseudo-3D kernels to coach the consistency fashions for video technology. The mannequin additionally provides temporal layers with zero initialization and a block degree residual connection. The general setup helps in assuring that the output of the mannequin won’t be influenced when it’s skilled for the primary time. The AnimateLCM framework below the steerage of open sourced video diffusion fashions trains the temporal layers prolonged from the steady diffusion fashions.

It is essential to acknowledge that whereas spatial LoRA weights are designed to expedite the sampling course of with out taking temporal modeling under consideration, and temporal modules are developed by way of customary diffusion methods, their direct integration tends to deprave the illustration on the onset of coaching. This presents vital challenges in successfully and effectively merging them with minimal battle. By way of empirical analysis, the AnimateLCM framework has recognized a profitable initialization strategy that not solely makes use of the consistency priors from spatial LoRA weights but additionally mitigates the opposed results of their direct mixture.

On the onset of consistency coaching, pre-trained spatial LoRA weights are built-in solely into the web consistency mannequin, sparing the goal consistency mannequin from insertion. This technique ensures that the goal mannequin, serving as the tutorial information for the web mannequin, doesn’t generate defective predictions that would detrimentally have an effect on the web mannequin’s studying course of. All through the coaching interval, the LoRA weights are progressively included into the goal consistency mannequin through an exponential shifting common (EMA) course of, reaching the optimum weight stability after a number of iterations.

Trainer Free Adaptation

Steady Diffusion fashions and plug and play adapters usually go hand in hand. Nevertheless, it has been noticed that regardless that the plug and play adapters work to some extent, they have a tendency to lose management in particulars even when a majority of those adapters are skilled with picture diffusion fashions. To counter this subject, the AnimateLCM framework opts for instructor free adaptation, a easy but efficient technique that both accommodates the prevailing adapters for higher compatibility or trains the adapters from the bottom up or. The strategy permits the AnimateLCM framework to realize the controllable video technology and image-to-video technology with a minimal variety of steps with out requiring instructor fashions.

AnimateLCM: Experiments and Outcomes

The AnimateLCM framework employs a Steady Diffusion v1-5 as the bottom mannequin, and implements the DDIM ODE solver for coaching functions. The framework additionally applies the Steady Diffusion v1-5 with open sourced movement weights because the instructor video diffusion mannequin with the experiments being carried out on the WebVid2M dataset with none extra or augmented knowledge. Moreover, the framework employs the TikTok dataset with BLIP-captioned transient textual prompts for controllable video technology.

Qualitative Outcomes

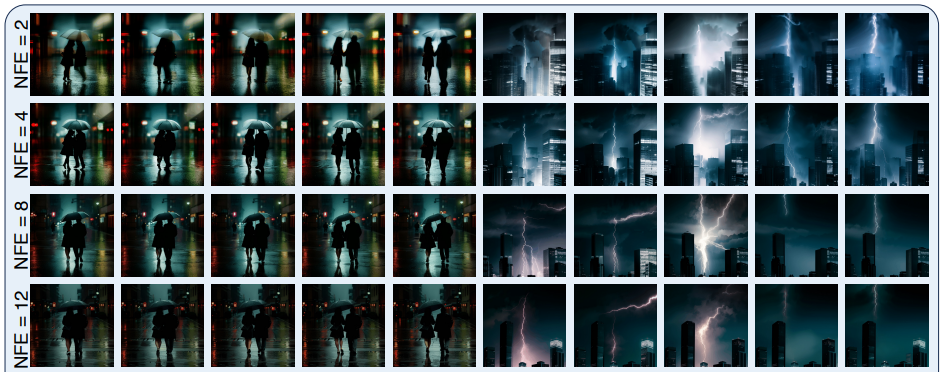

The next determine demonstrates outcomes of the four-step technology methodology applied by the AnimateLCM framework in text-to-video technology, image-to-video technology, and controllable video technology.

As it may be noticed, the outcomes delivered by every of them are passable with the generated outcomes demonstrating the power of the AnimateLCM framework to observe the consistency property even with various inference steps, sustaining comparable movement and magnificence.

Quantitative Outcomes

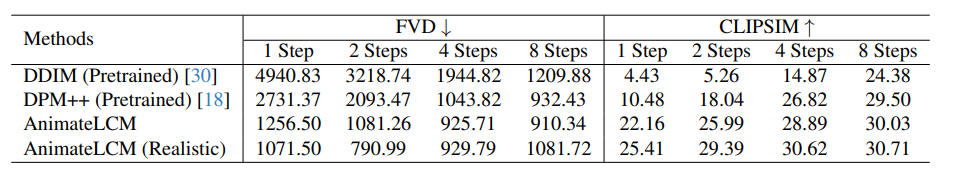

The next determine illustrates the quantitative outcomes and comparability of the AnimateLCM framework with state-of-the-art DDIM and DPM++ strategies.

As it may be noticed, the AnimateLCM framework outperforms the prevailing strategies by a major margin particularly within the low step regime starting from 1 to 4 steps. Moreover, the AnimateLCM metrics displayed on this comparability are evaluated with out utilizing the CFG or classifier free steerage that permits the framework to avoid wasting practically 50% of the inference time and inference peak reminiscence price. Moreover, to additional validate its efficiency, the spatial weights throughout the AnimateLCM framework are changed with a publicly out there customized reasonable mannequin that strikes stability between constancy and variety, that helps in boosting the efficiency additional.

Closing Ideas

On this article, we have now talked about AnimateLCM, a customized diffusion mannequin with adapters that goals to generate high-fidelity movies with minimal steps and computational prices. The AnimateLCM framework is impressed by the Consistency Mannequin that accelerates the sampling with minimal steps by distilling pre-trained picture diffusion fashions, and the profitable extension of the Consistency Mannequin, the Latent Consistency Mannequin or LCM that facilitates conditional picture technology. As an alternative of conducting consistency studying on the uncooked video dataset straight, the AnimateLCM framework proposes to make use of a decoupled consistency studying technique that decouples the distillation of movement technology priors and picture technology priors, permitting the mannequin to boost the visible high quality of the generated content material, and enhance the coaching effectivity concurrently.