Autoencoders are a powerful tool used in machine learning for feature extraction, data compression, and image reconstruction. These neural networks have made significant contributions to computer vision, natural language processing, and anomaly detection, among other fields. An autoencoder model can automatically learn complex features from input data. This has made autoencoders a popular method for improving the accuracy of classification and prediction tasks.

In this article, we will explore the fundamentals of autoencoders and their diverse applications in the field of machine learning.

- The basics of autoencoders, including the types and architectures.

- How autoencoders are used, with real-world examples.

- The different applications of autoencoders in computer vision.

About us: Viso.ai powers the leading end-to-end Computer Vision Platform Viso Suite. Our solution enables organizations to rapidly build and scale computer vision applications.

What is an Autoencoder?

Explanation and Definition of Autoencoders

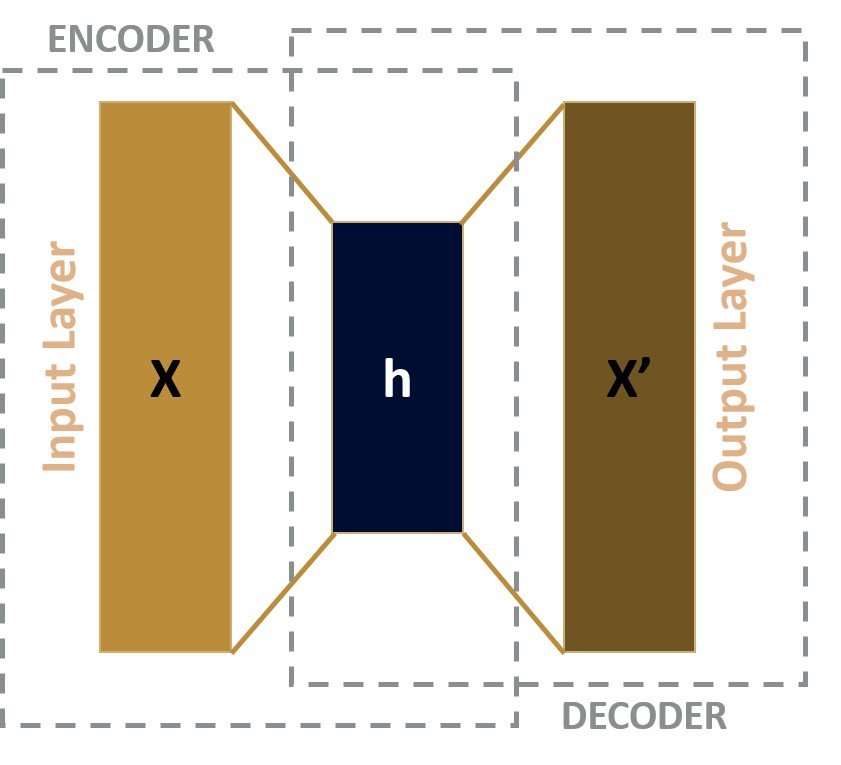

Autoencoders are neural networks that learn to compress and reconstruct input data, such as images, using a hidden layer of neurons. An autoencoder model consists of two parts: an encoder and a decoder.

The encoder takes the input data and compresses it into a lower-dimensional representation called the latent space. The decoder then reconstructs the input data from the latent space representation. In an optimal scenario, the autoencoder comes as close to perfect reconstruction as possible.
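To make the encoder and decoder roles concrete, here is a minimal NumPy sketch of a single forward pass. The layer sizes, the ReLU and sigmoid activations, and the random (untrained) weights are all assumptions chosen for illustration, not a reference architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: a flattened 28x28 image compressed to 32 values.
input_dim, latent_dim = 784, 32

# Randomly initialized (untrained) weights; training would adjust these.
W_enc = rng.normal(0.0, 0.01, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(0.0, 0.01, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)


def encode(x):
    """Compress inputs of shape (batch, input_dim) to (batch, latent_dim)."""
    return np.maximum(0.0, x @ W_enc + b_enc)  # ReLU activation


def decode(z):
    """Map latent codes back to input space, with values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_dec + b_dec)))  # sigmoid activation


x = rng.random((4, input_dim))  # four fake "images" with values in [0, 1)
z = encode(x)                   # latent space representation
x_hat = decode(z)               # reconstruction
print(z.shape, x_hat.shape)     # (4, 32) (4, 784)
```

With untrained weights the reconstruction is meaningless; the point is only the shape of the data as it passes through the bottleneck.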

Loss Function and Reconstruction Loss

Loss functions play a critical role in training autoencoders and determining their performance. The most commonly used loss function for autoencoders is the reconstruction loss. It measures the difference between the model input and output.

The reconstruction error is calculated using various loss functions, such as mean squared error, binary cross-entropy, or categorical cross-entropy. The method used depends on the type of data being reconstructed.
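As a rough illustration, two of the loss functions named above can be written in a few lines of NumPy. The function names and the toy arrays are made up for this sketch; deep learning libraries ship their own implementations.

```python
import numpy as np


def mse_loss(x, x_hat):
    """Mean squared error, a common choice for real-valued inputs."""
    return float(np.mean((x - x_hat) ** 2))


def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Binary cross-entropy, often used when inputs lie in [0, 1]."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat)))


x = np.array([[0.0, 1.0, 1.0, 0.0]])     # original input
good = np.array([[0.1, 0.9, 0.8, 0.2]])  # close reconstruction
bad = np.array([[0.9, 0.1, 0.3, 0.7]])   # poor reconstruction

print("MSE:", mse_loss(x, good), "vs", mse_loss(x, bad))
print("BCE:", binary_cross_entropy(x, good), "vs", binary_cross_entropy(x, bad))
```

In both cases the closer reconstruction receives the lower loss, which is the signal the training procedure uses.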

The reconstruction loss is then used to update the weights of the network during backpropagation to minimize the difference between the input and the output. The goal is to achieve a low reconstruction loss. A low loss indicates that the model can effectively capture the salient features of the input data and reconstruct it accurately.
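The weight update can be sketched with a deliberately tiny linear autoencoder trained by plain gradient descent. Everything here is an assumption made for illustration: the synthetic data, the layer sizes, the learning rate, and the hand-derived gradients that a framework would normally compute through automatic differentiation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 samples in 8 dimensions that mostly vary along 2 directions.
X = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))
X += 0.01 * rng.normal(size=X.shape)

n_samples, input_dim = X.shape
latent_dim = 2          # hypothetical bottleneck size
learning_rate = 0.01    # hypothetical step size

W_enc = 0.1 * rng.normal(size=(input_dim, latent_dim))
W_dec = 0.1 * rng.normal(size=(latent_dim, input_dim))

losses = []
for step in range(2000):
    # Forward pass: encode, decode, and measure the reconstruction error.
    Z = X @ W_enc
    X_hat = Z @ W_dec
    error = X_hat - X
    losses.append(float(np.mean(error ** 2)))

    # Backward pass: gradients of the mean squared error w.r.t. the weights.
    grad_out = 2.0 * error / error.size
    grad_W_dec = Z.T @ grad_out
    grad_W_enc = X.T @ (grad_out @ W_dec.T)

    # Gradient descent update.
    W_dec -= learning_rate * grad_W_dec
    W_enc -= learning_rate * grad_W_enc

print(f"loss at start: {losses[0]:.4f}, loss at end: {losses[-1]:.4f}")
```

Real autoencoders add non-linear activations, mini-batches, and an optimizer such as Adam, but the loop structure (forward pass, loss, gradients, update) stays the same.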

Dimensionality Reduction

Dimensionality reduction is the process of reducing the number of dimensions in the encoded representation of the input data. Autoencoders learn to perform dimensionality reduction by training the encoder network to map the input data to a lower-dimensional latent space. The decoder network is trained jointly to reconstruct the original input data from the latent space representation.

The size of the latent space is typically much smaller than the size of the input data, allowing for efficient storage and computation of the data. Through dimensionality reduction, autoencoders can also help to remove noise and irrelevant features. This is useful for improving the performance of downstream tasks such as data classification or clustering.
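The trade-off between latent size and reconstruction quality can be illustrated without training a network at all. The sketch below uses principal component analysis (PCA) as a stand-in, since a purely linear autoencoder trained with mean squared error learns the same subspace as PCA. The toy data and the latent sizes are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 300 samples in 10 dimensions that mostly vary along 3 directions.
X = rng.normal(size=(300, 3)) @ rng.normal(size=(3, 10))
X += 0.05 * rng.normal(size=X.shape)

mean = X.mean(axis=0)
# Rows of Vt are the principal directions, ordered by explained variance.
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)


def encode(x, k):
    """Project centered data onto the first k principal directions."""
    return (x - mean) @ Vt[:k].T


def decode(z):
    """Map k-dimensional codes back to the original 10 dimensions."""
    k = z.shape[1]
    return z @ Vt[:k] + mean


errors = {}
for k in (1, 2, 3, 10):
    x_hat = decode(encode(X, k))
    errors[k] = float(np.mean((X - x_hat) ** 2))
    print(f"latent size {k:2d}: reconstruction MSE = {errors[k]:.5f}")
```

Once the latent size reaches the number of directions that carry real signal (three in this toy data), most of the remaining error is the added noise, which is the sense in which a small latent space can discard noise and irrelevant features.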

The Most Popular Autoencoder Models

There are several types of autoencoder models, each with its own approach to learning these compressed representations:

- Autoencoding models: These are the simplest type of autoencoder model. They learn to encode input data into a lower-dimensional representation. Then, they decode this representation back into the original input.

- Contractive autoencoder: This type of autoencoder model is designed to learn a compressed representation of the input data while being resistant to small perturbations in the input. This is achieved by adding a regularization term to the training objective. This term penalizes large changes in the learned representation in response to small changes in the input.

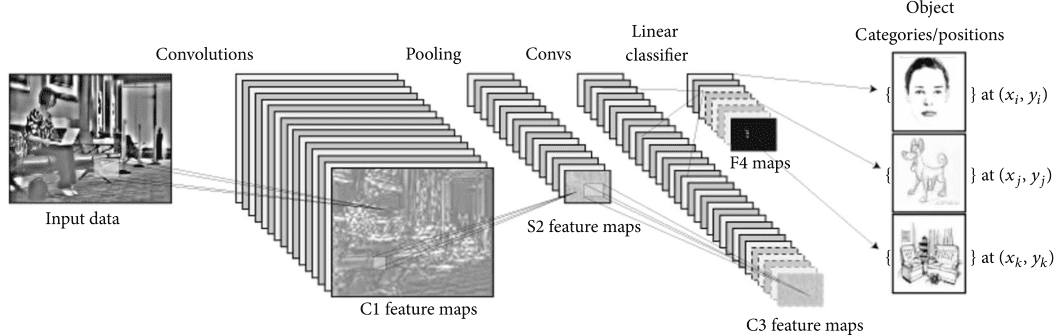

- Convolutional autoencoder (CAE): A Convolutional Autoencoder (CAE) is a type of neural network that uses convolutional layers for encoding and decoding of images. This autoencoder type aims to learn a compressed representation of an image by minimizing the reconstruction error between the input and output of the network. Such models are commonly used for image generation tasks, image denoising, compression, and image reconstruction.

- Sparse autoencoder: A sparse autoencoder is similar to a regular autoencoder, but with an added constraint on the encoding process. In a sparse autoencoder, the encoder network is trained to produce sparse encoding vectors, which have many zero values. This forces the network to identify only the most important features of the input data.

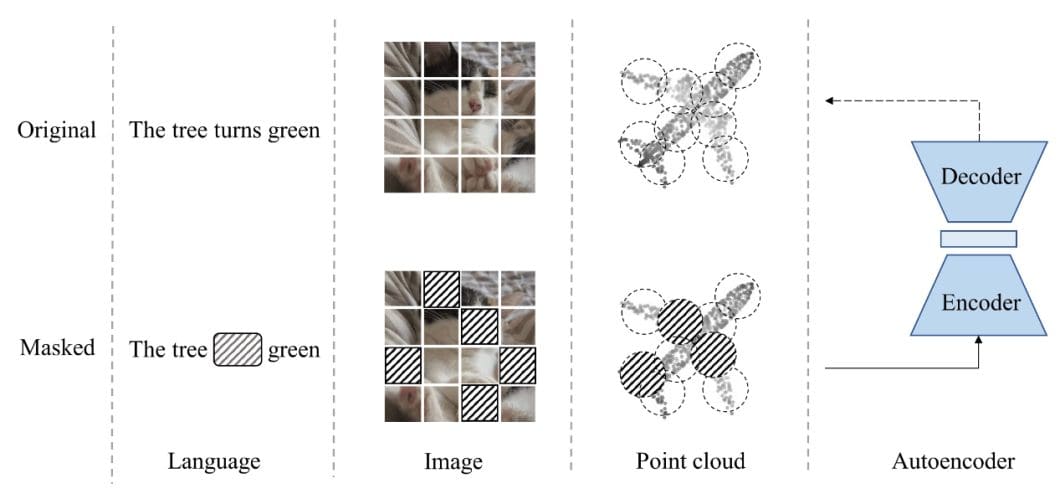

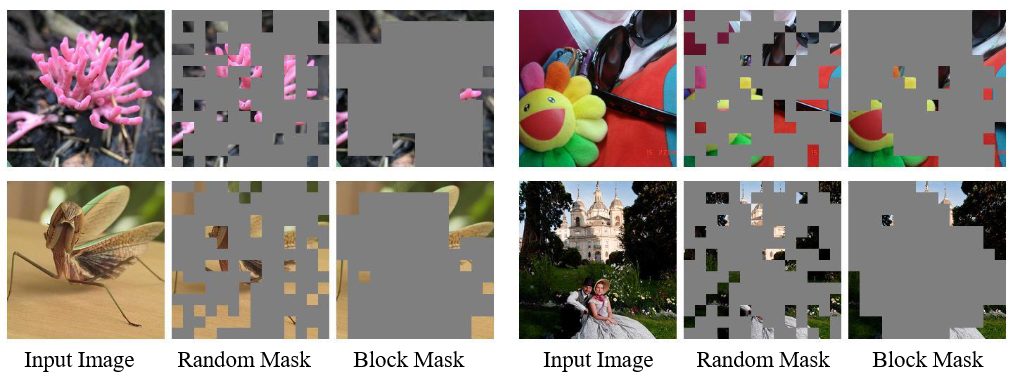

- Denoising autoencoder: This type of autoencoder is designed to learn to reconstruct an input from a corrupted version of the input. The corrupted input is created by adding noise to the original input, and the network is trained to remove the noise and reconstruct the original input. For example, BART is a popular denoising autoencoder for pretraining sequence-to-sequence models. The model was trained by corrupting text with an arbitrary noising function and learning a model to reconstruct the original text. It is very effective for natural language generation, translation, and comprehension tasks.

- Variational autoencoders (VAE): Variational autoencoders are a type of generative model that learns a probabilistic representation of the input data. A VAE model is trained to learn a mapping from the input data to a probability distribution in a lower-dimensional latent space, and then to generate new samples from this distribution. VAEs are commonly used in image and text generation tasks.

- Video Autoencoder: Video autoencoders have been introduced for learning representations in a self-supervised manner. For example, a model was developed that learns representations of 3D structure and camera pose from a sequence of video frames (see Pose Estimation). Such a Video Autoencoder can be trained directly using a pixel reconstruction loss, without any ground truth 3D or camera pose annotations. This autoencoder type can be used for camera pose estimation and video generation by motion following.

- Masked Autoencoders (MAE): A masked autoencoder is a simple autoencoding approach that reconstructs the original signal given its partial observation. One MAE variant applies masked autoencoders to point cloud self-supervised learning and is named Point-MAE. This approach has shown great effectiveness and high generalization capability on various tasks, including object classification, few-shot learning, and part segmentation. In particular, Point-MAE is reported to outperform the other self-supervised learning methods it was compared against.
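Several of the variants above differ from a plain autoencoder mainly in how the input is corrupted or how the loss is extended. The helpers below sketch three of these ideas in NumPy. The function names, the default noise level, mask ratio and penalty weight, and the element-wise masking (real masked autoencoders usually hide whole patches) are simplifying assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)


def add_gaussian_noise(x, std=0.1):
    """Denoising setup: corrupt the input; the clean x remains the target."""
    return np.clip(x + rng.normal(0.0, std, size=x.shape), 0.0, 1.0)


def random_mask(x, mask_ratio=0.75):
    """Masked setup: hide a random subset of positions by setting them to 0."""
    keep = rng.random(x.shape) >= mask_ratio
    return x * keep, keep


def l1_sparsity_penalty(z, weight=1e-3):
    """Sparse setup: extra loss term that pushes latent activations to zero."""
    return weight * float(np.mean(np.abs(z)))


x = rng.random((2, 16))            # two toy inputs with values in [0, 1)
x_noisy = add_gaussian_noise(x)    # input for a denoising autoencoder
x_masked, keep = random_mask(x)    # input for a masked autoencoder
z = rng.normal(size=(2, 4))        # stand-in latent codes
print(x_noisy.shape, keep.mean(), l1_sparsity_penalty(z))
```

In each case the network still reconstructs the original `x`; only the input it sees, or the loss it minimizes, changes.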

How Autoencoders Work in Computer Vision

Autoencoder models are commonly used for image processing tasks in computer vision. In this use case, the input is an image and the output is a reconstructed image. The model learns to encode the image into a compressed representation. Then, the model decodes this representation to generate a new image that is as close as possible to the original input.

Input and output are two important components of an autoencoder model. The input to an autoencoder is the data that we want to encode and decode. The output is the reconstructed data that the model produces after encoding and decoding the input.

The main objective of an autoencoder is to reconstruct the input as accurately as possible. This is achieved by feeding the input data through a series of layers (including hidden layers) that encode and decode the input. The model then compares the reconstructed output to the original input and adjusts its parameters to minimize the difference between them.

In addition to reconstructing the input, autoencoder models also learn a compressed representation of the input data. This compressed representation is created by the bottleneck layer of the model, which has fewer neurons than the input and output layers. By learning this compressed representation, the model can capture the most important features of the input data in a lower-dimensional space.

Step-by-step process of autoencoders

Autoencoders extract features from images in a step-by-step process as follows:

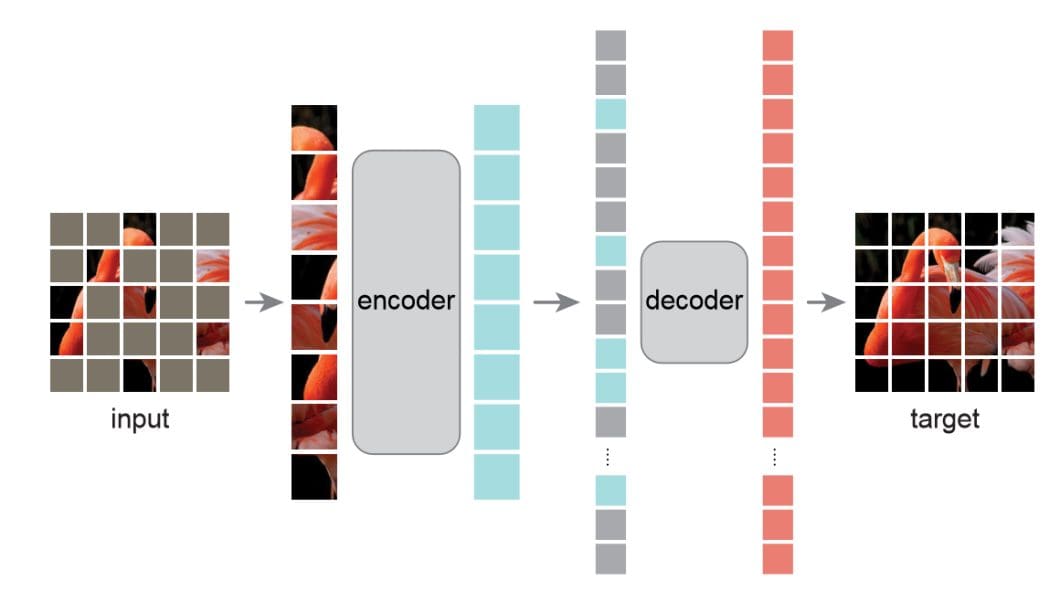

- Input Image: The autoencoder takes an image as input, which is typically represented as a matrix of pixel values. The input image can be of any size, but it is typically normalized to improve the performance of the autoencoder.

- Encoding: The autoencoder compresses the input image into a lower-dimensional representation, known as the latent space, using the encoder. The encoder is a series of convolutional layers that extract different levels of features from the input image. Each layer applies a set of filters to its input and outputs a feature map that highlights specific patterns and structures in the image.

- Latent Representation: The output of the encoder is a compressed representation of the input image in the latent space. This latent representation captures the most important features of the input image and typically has fewer dimensions than the input image.

- Decoding: The autoencoder reconstructs the input image from the latent representation using the decoder. The decoder is a set of multiple deconvolutional layers that gradually increase the size of the feature maps until the final output is the same size as the input image. Each layer applies a set of filters that up-sample the feature maps, resulting in a reconstructed image.

- Output Image: The output of the decoder is a reconstructed image that is similar to the input image. However, the reconstructed image is not identical to the input image, because the latent representation retains only the most important features of the input image.

By compressing and reconstructing input images, autoencoders extract the most important features of the images in the latent space. These features can then be used for tasks such as image classification, object detection, and image retrieval.
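How the feature maps shrink in the encoder and grow back in the decoder can be traced with the standard output-size formulas for convolution and transposed convolution (without dilation). The 28x28 input and the kernel, stride, and padding values below are assumptions picked so the sizes round-trip cleanly.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolutional layer."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel, stride=1, padding=0, output_padding=0):
    """Spatial output size of a transposed convolutional (deconvolutional) layer."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


sizes = [28]  # hypothetical 28x28 input image

# Encoder: two stride-2 convolutions halve the feature map each time.
for _ in range(2):
    sizes.append(conv_out(sizes[-1], kernel=3, stride=2, padding=1))

# Decoder: two stride-2 transposed convolutions double it again.
for _ in range(2):
    sizes.append(deconv_out(sizes[-1], kernel=3, stride=2, padding=1, output_padding=1))

print(sizes)  # [28, 14, 7, 14, 28]
```

The 7x7 feature map in the middle is the spatial bottleneck; the number of channels at that point determines how much information the latent representation can hold.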

Limitations and Benefits of Autoencoders for Computer Vision

Traditional feature extraction methods require manually designing feature descriptors that capture important patterns and structures in images. These feature descriptors are then used to train machine learning models for tasks such as image classification and object detection.

However, designing feature descriptors manually can be a time-consuming and error-prone process that may not capture all the important features in an image.

Advantages of Autoencoders

Advantages of autoencoders over traditional feature extraction methods include:

- First, autoencoders learn features automatically from the input data, making them more effective at capturing complex patterns and structures in images (pattern recognition). This is particularly useful when dealing with large and complex datasets where manually designing feature descriptors may not be practical or even possible.

- Second, autoencoders are suitable for learning more robust features. Other feature extraction methods often rely on handcrafted features that may not generalize well to new data. Autoencoders, on the other hand, learn features directly from the dataset at hand, which can result in features that generalize better to new data from the same domain.

- Finally, autoencoders are able to learn more complex and abstract features that may not be possible with traditional feature extraction methods. For example, autoencoders can learn features that capture the overall structure of an image, such as the presence of certain objects or the overall layout of the scene. These types of features may be difficult to capture using traditional feature extraction methods, which typically rely on low-level features such as edges and textures.

Disadvantages of Autoencoders

Disadvantages of autoencoders include the following limitations:

- One major limitation is that autoencoders can be computationally expensive (see cost of computer vision), particularly when dealing with large datasets and complex models.

- Additionally, autoencoders may be prone to overfitting, where the model learns to capture noise or other artifacts in the training data that do not generalize well to new data.

Real-world Applications of Autoencoders

The following table lists tasks addressed with autoencoders in the current research literature, along with the number of papers and their share:

| Task | Description | Papers | Share |
|---|---|---|---|
| Anomaly Detection | Identifying data points that deviate from the norm | 39 | 6.24% |
| Image Denoising | Removing noise from corrupted data | 27 | 4.32% |
| Time Series | Analyzing and predicting sequential data | 21 | 3.36% |
| Self-Supervised Learning | Learning representations from unlabeled data | 21 | 3.36% |
| Semantic Segmentation | Segmenting an image into meaningful parts | 16 | 2.56% |
| Disentanglement | Separating underlying factors of variation | 14 | 2.24% |
| Image Generation | Generating new images from learned distributions | 14 | 2.24% |
| Unsupervised Anomaly Detection | Identifying anomalies without labeled data | 12 | 1.92% |
| Image Classification | Assigning an input image to a predefined class | 10 | 1.60% |

Autoencoder Computer Vision Applications

Autoencoders have been used in various computer vision applications, including image denoising, image compression, image retrieval, and image generation. For example, in medical imaging, autoencoders have been used to improve the quality of MRI images by removing noise and artifacts.

Other problems that can be solved with autoencoders include facial recognition, anomaly detection, and feature detection. Visual anomaly detection is important in many applications, such as AI diagnosis assistance in healthcare and quality assurance in industrial manufacturing.
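A common way to use an autoencoder for anomaly detection is to train it only on normal data and flag inputs that it reconstructs poorly. The sketch below shows that idea on synthetic data. To keep it short and runnable, a PCA projection stands in for a trained autoencoder, and the data, the 99th-percentile threshold, and all names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

basis = rng.normal(size=(2, 10))  # "normal" data lives near a 2D subspace


def make_normal(n):
    """Generate n normal samples: 2D structure plus a little noise."""
    return rng.normal(size=(n, 2)) @ basis + 0.05 * rng.normal(size=(n, 10))


# Fit the stand-in model (PCA with 2 components) on normal data only.
train = make_normal(500)
mean = train.mean(axis=0)
_, _, Vt = np.linalg.svd(train - mean, full_matrices=False)
components = Vt[:2]


def reconstruct(x):
    """Encode to 2 dimensions and decode back to 10."""
    return (x - mean) @ components.T @ components + mean


def anomaly_score(x):
    """Per-sample reconstruction error (mean squared error)."""
    return np.mean((x - reconstruct(x)) ** 2, axis=1)


# Hypothetical rule: flag anything above the 99th percentile of training scores.
threshold = np.percentile(anomaly_score(train), 99)

normal_test = make_normal(100)
anomalies = 3.0 * rng.normal(size=(20, 10))  # samples without the 2D structure

normal_flags = anomaly_score(normal_test) > threshold
anomaly_flags = anomaly_score(anomalies) > threshold
print("flagged normal:", normal_flags.sum(), "flagged anomalies:", anomaly_flags.sum())
```

With images, the same recipe applies per image or per pixel region: the reconstruction error highlights where the input deviates from what the model saw during training.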

In computer vision, autoencoders are also widely used for unsupervised feature learning, which can help improve the accuracy of supervised learning models. For more, read our article about supervised vs. unsupervised learning.

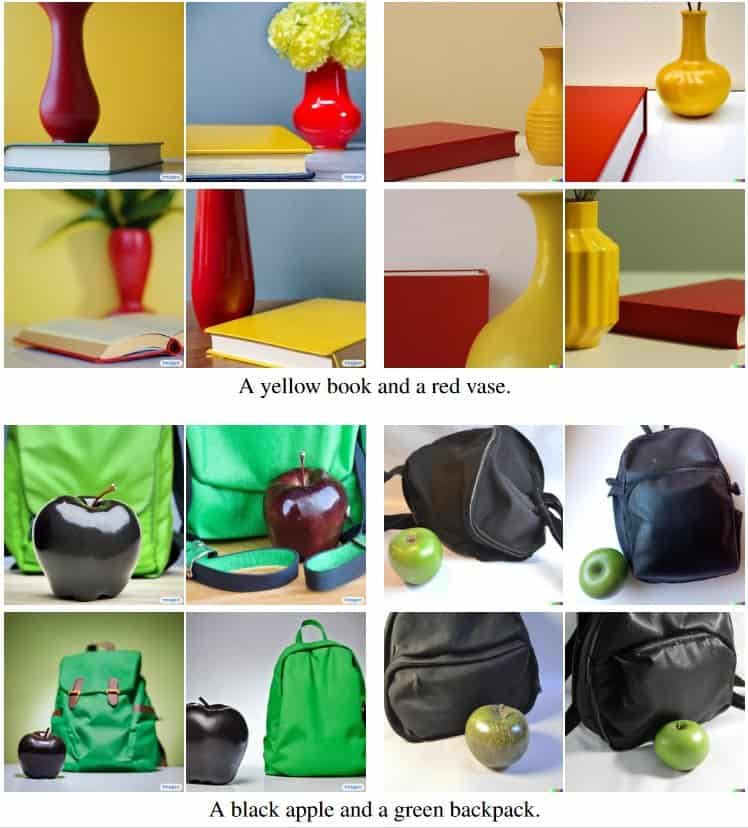

Image Generation with Autoencoders

Variational autoencoders, in particular, have been used for image generation tasks, such as generating realistic images of faces or landscapes. By sampling from the latent space, variational autoencoders can produce an effectively unlimited number of new images that are similar to the training data.

For example, the popular generative machine learning model DALL-E uses a variational autoencoder for AI image generation. It consists of two parts: an autoencoder and a transformer. The discrete autoencoder learns to accurately represent images in a compressed latent space, and the transformer learns the correlations between language and the discrete image representation.

Future and Outlook

Autoencoders have tremendous potential in computer vision, and ongoing research is exploring ways to overcome their limitations. For example, regularization techniques such as dropout and batch normalization can help prevent overfitting.

Additionally, advancements in AI hardware, such as the development of specialized hardware for neural networks, can help improve the scalability of autoencoder models.

In computer vision research, teams are constantly developing new methods to reduce overfitting, increase efficiency, improve interpretability, enhance data augmentation, and extend autoencoders’ capabilities to more complex tasks.

Conclusion

In conclusion, autoencoders are a versatile and powerful tool in machine learning, with diverse applications in computer vision. They can automatically learn complex features from input data and extract useful information through dimensionality reduction.

While autoencoders have limitations such as computational expense and potential overfitting, they offer significant benefits over traditional feature extraction methods. Ongoing research is exploring ways to improve autoencoder models, including new regularization techniques and hardware advancements.

Autoencoders have tremendous potential for future development, and their capabilities in computer vision are expected to keep expanding.
