Chatbots are AI agents that simulate human conversation with users. They handle all kinds of tasks and are now more common than ever across websites. The generative capabilities of Large Language Models (LLMs) have made chatbots more advanced and capable than ever, so many businesses now want their own chatbot to answer FAQs or address customer concerns.

This growing interest means more developers need to learn how to build, set up, and use chatbots. In this hands-on guide, we focus on building chatbots with LangChain, a popular framework for this kind of development. We cover chatbots, LLMs, and LangChain, and then build your first chatbot. Let's get started.

About us: Viso Suite is the world's only end-to-end Computer Vision Platform. The solution helps global organizations develop, deploy, and scale all computer vision applications in one place. Get a demo for your organization.

Understanding Chatbots and Large Language Models (LLMs)

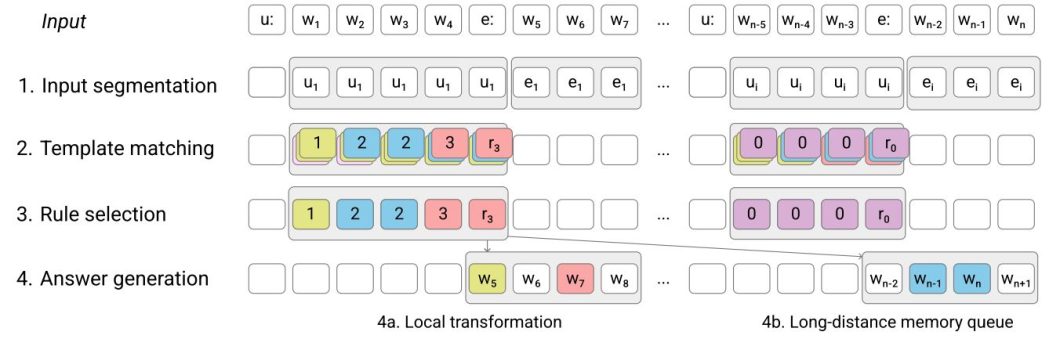

In recent years, the capabilities of Artificial Intelligence (AI) have developed impressively, yet chatbots are a long-standing idea in AI. Early approaches used rule-based and pattern-recognition systems to simulate human conversation. A rule-based system follows premade scripts written by a programmer: the chatbot uses if-else statements to select the most suitable answer from the script. Going a step further, researchers used pattern recognition to let the program insert words from the user's message into premade sentence templates. However, this approach has many limitations, and as AI research deepened, chatbots evolved to use generative models such as LLMs.
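To make the template idea concrete, here is a minimal plain-Python sketch in the spirit of early pattern-matching chatbots. The patterns, reply templates, and the `respond` function are illustrative assumptions rather than rules from any specific historical system: a regular expression captures part of the user's sentence and drops it into a premade reply.

```python
import re

# Each rule pairs a regex with a reply template; {0} is filled with the
# text captured from the user's message. These rules are illustrative only.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+) is broken", re.IGNORECASE), "What happened to your {0}?"),
]

FALLBACK = "Please tell me more."


def respond(message: str) -> str:
    """Return the first template whose pattern matches, filled with the user's words."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(match.group(1))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I need a new password"))  # Why do you need a new password?
    print(respond("Hello there"))            # Please tell me more.
```

The limitation the paragraph mentions is visible here: anything outside the hand-written patterns falls through to a generic reply.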

What are Large Language Models (LLMs)?

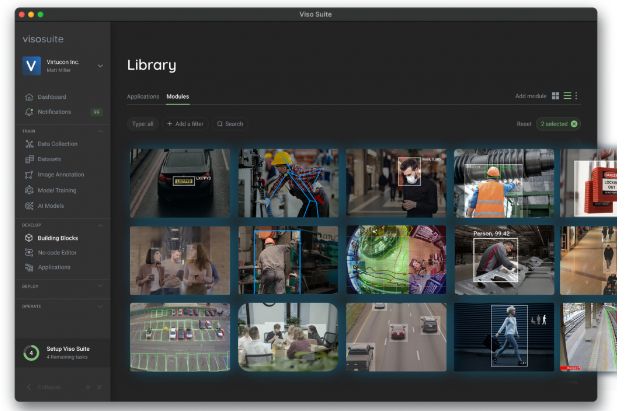

Large Language Models (LLMs) are a popular type of generative AI model that uses Natural Language Processing (NLP) to understand and generate human language. Language understanding and generation is a long-standing research topic, and two developments stand out. The first was predictive modeling with statistical models such as Artificial Neural Networks (ANNs). The second was the Transformer architecture, which made popular LLMs like ChatGPT possible and, with them, far better chatbots.

The Transformer architecture makes it possible to efficiently pre-train very large language models on huge amounts of data using Graphics Processing Units (GPUs). This has enabled chatbots and LLM systems that can take actions, such as Microsoft's Copilot, which is powered by GPT-4. These systems can perform multi-step reasoning, make decisions, and act on them, which is why LLMs are becoming the basic building block of Large Action Models (LAMs). Building systems around LLMs lets developers create all sorts of AI-powered chatbots. Let's explore the different types of chatbots and how they work.

Types of Chatbots

While LLMs have expanded what chatbots can do, not all chatbots are created equal. Building your own may involve fine-tuning an LLM for your needs, but design choices vary, and chatbots fall into several types.

Rule-Based Chatbots

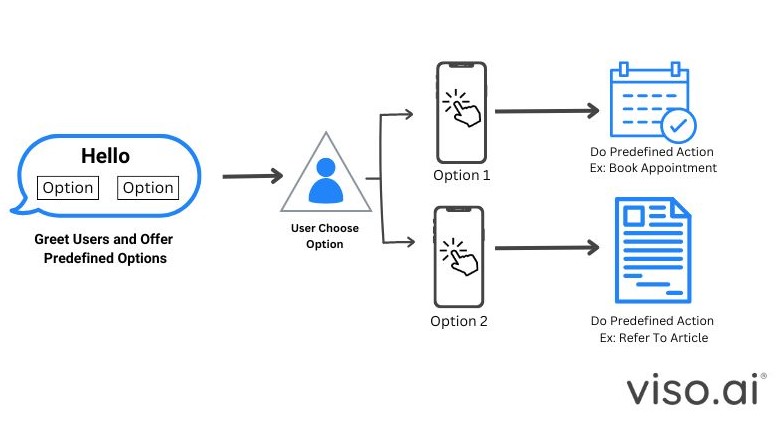

Rule-based chatbots rely on a predefined set of answers and a prompt flow structured as a tree. The user's message is matched to the right intent using if-else rules, and the intent is then mapped to a response. Matching user statements to intents usually requires an elaborate rule system and regular expressions.
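The intent-matching flow described above can be sketched in a few lines of plain Python. The intents, regular expressions, and response wording below are hypothetical examples for a hosting-support bot; a production rule system would need many more patterns.

```python
import re

# Hypothetical intents for a web hosting support bot. Order matters:
# the first matching rule wins, just like an if/elif chain.
OUTAGE = re.compile(r"\b(down|offline|not loading)\b", re.IGNORECASE)
PRICING = re.compile(r"\b(price|pricing|cost|plan)s?\b", re.IGNORECASE)
GREETING = re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE)

# Predefined answer for each intent (placeholder wording).
RESPONSES = {
    "outage": "Sorry about that! I will raise a ticket with our support team.",
    "pricing": "You can compare all of our plans on the pricing page.",
    "greeting": "Hello! How can I help you today?",
    "fallback": "Sorry, I didn't understand. Could you rephrase that?",
}


def classify(message: str) -> str:
    """Map a user message to an intent with plain if/elif rules."""
    if OUTAGE.search(message):
        return "outage"
    elif PRICING.search(message):
        return "pricing"
    elif GREETING.search(message):
        return "greeting"
    return "fallback"


def reply(message: str) -> str:
    """Look up the predefined response for the detected intent."""
    return RESPONSES[classify(message)]
```

Checking outages before greetings means "Hi, my website is down" is routed to the outage answer; reordering the rules would change the bot's behavior, which is one reason these systems become hard to maintain.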

Rule-based and AI Chatbots

A more sophisticated design wraps a Transformer-based LLM inside a rule-based system, combining the scripted approach of early chatbots like ELIZA with the flexibility of modern language models.

When someone sends a message, the system does a few things. First, it checks whether the message matches any predefined rules. It also passes the entire conversation history to the LLM as context, so the generated response stays relevant and coherent with the overall flow of the conversation. Combining rule-based efficiency with LLM flexibility makes for a more natural and dynamic conversational experience.
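One common way to arrange this hybrid is "rules first, LLM as fallback". The sketch below assumes that arrangement; `generate` is a hypothetical callable standing in for any LLM (it takes a prompt string and returns text), and the two rules and their canned answers are illustrative only.

```python
import re
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (role, text) pairs

# Rules that can be answered instantly without calling the model (illustrative).
RULES = [
    (re.compile(r"\b(opening hours|business hours)\b", re.I),
     "Our support team is available 24/7."),
    (re.compile(r"\b(human|agent|representative)\b", re.I),
     "Connecting you to a human agent now."),
]


def hybrid_reply(message: str, history: History, generate: Callable[[str], str]) -> str:
    """Answer from a rule if one matches, otherwise ask the LLM with full history."""
    history.append(("USER", message))
    for pattern, canned in RULES:
        if pattern.search(message):
            answer = canned
            break
    else:
        # No rule matched: give the model the whole conversation as context.
        prompt = "\n".join(f"{role}: {text}" for role, text in history) + "\nASSISTANT:"
        answer = generate(prompt).strip()
    history.append(("ASSISTANT", answer))
    return answer
```

Rule hits skip the model call entirely, which is where the efficiency comes from, while both paths record their answers in the shared history so later LLM calls see the whole conversation.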

Other Categorizations

- Goal-based: These chatbots accomplish set goals through a short conversation with the customer, for example answering questions, raising tickets, or solving problems.

- Knowledge-based: The chatbot has a knowledge source to draw on and discuss. It is either already trained on this data or relies on open-domain data sources to answer from.

- Service-based: The chatbot provides a specific service to a company or a client. These chatbots are usually rule-based and deliver a service rather than a conversation; for example, a client could order a meal from a restaurant.

Building a Chatbot with LangChain

Conversation and dialogue are a major focus of Natural Language Processing (NLP), as part of the broader goal of improving human-computer interaction. Interest in conversational systems has grown steadily, and tooling has evolved quickly to make them easier to build, integrate, and use. As a result, building LLM-based chatbots and apps keeps getting easier.

LangChain is a leading framework for integrating language models, letting us create chatbots, virtual assistants, LLM-powered applications, and more. Let's first go over some important LangChain concepts and components.

LangChain Explained

LangChain is an open-source framework (available for Python and JavaScript) that provides tools and abstractions to simplify building LLM applications. It lets developers create more interactive and complex chatbots by chaining together different components, and we can add memory, agents, and rule-based systems around a chatbot. A few main LangChain components will help us build chatbots efficiently.

- Chains: Chains are how components are connected in LangChain. A chain is a sequence of components executed one after another, such as a prompt template, a language model, and an output parser.

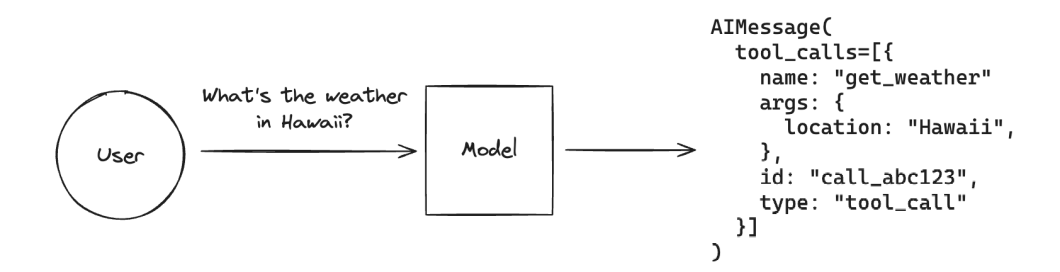

- Agents: Agents let chatbots make decisions and take actions based on the context of the conversation. The LLM determines the best course of action and calls tools or APIs accordingly. An agent can even call other models, making the system multimodal.

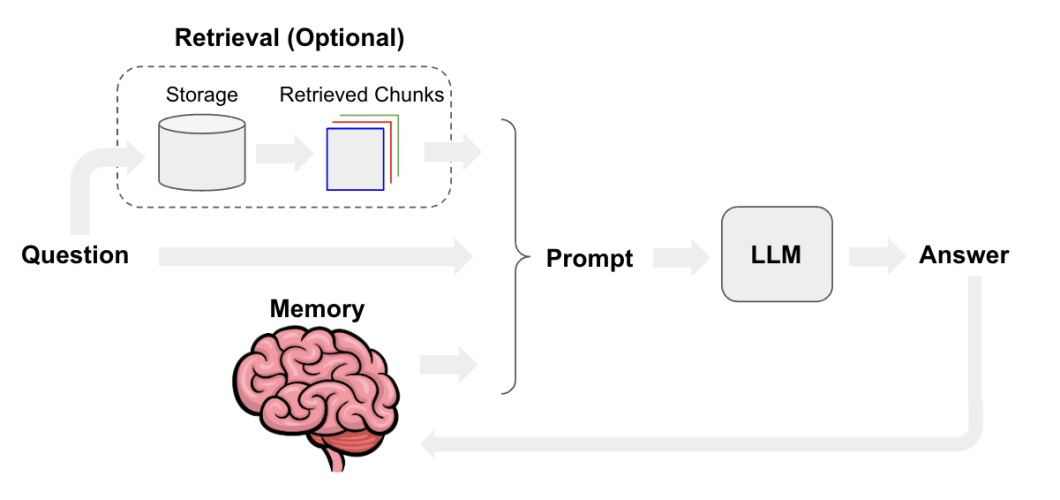

- Memory: Memory lets chatbots retain information from previous interactions and user inputs, giving the conversation context and continuity.
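The agent idea from the list above can be illustrated with a single decide-then-act step in plain Python. This is not LangChain's agent API: `decide` is a hypothetical stand-in for an LLM prompted to answer with JSON such as `{"tool": "check_status", "input": "example.com"}`, and the two tools are made-up placeholders for real API calls.

```python
import json
from typing import Callable, Dict


# Hypothetical tools; real ones would call monitoring or ticketing APIs.
def check_status(domain: str) -> str:
    return f"{domain} is reachable"


def open_ticket(summary: str) -> str:
    return f"Ticket opened: {summary}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "check_status": check_status,
    "open_ticket": open_ticket,
}


def run_agent_step(decide: Callable[[str], str], user_message: str) -> str:
    """Ask the model which tool to use, then execute that tool."""
    raw = decide(user_message)
    try:
        action = json.loads(raw)
    except json.JSONDecodeError:
        return raw  # non-JSON output is treated as a direct answer
    if not isinstance(action, dict):
        return raw
    tool = TOOLS.get(action.get("tool"))
    if tool is None:
        return f"Unknown tool: {action.get('tool')}"
    return tool(action.get("input", ""))
```

Real agents usually loop, feeding each tool result back to the model until it produces a final answer; this sketch stops after one step to keep the decision-and-dispatch pattern visible.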

Together, chains, agents, and memory make LangChain a great framework for chatbot development. Chains make components reusable, agents enable decision-making and the use of external tools, and memory brings context and personalization to a conversation. Next, let's create a chatbot with LangChain.

Creating Your First LangChain Chatbot

LangChain's components make chatbot development easier. Chatbots commonly use retrieval-augmented generation (RAG) to better answer domain-specific questions; for example, you can connect your inventory database or website to the chatbot as a knowledge source. More advanced features like chat history and memory can also be implemented with LangChain. Let's get started and see how to create a real-time chatbot application.
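The core RAG idea (find relevant text, then put it into the prompt) can be sketched without any framework. This minimal version assumes simple word overlap as the relevance score, whereas real systems typically use embeddings and a vector store; the documents and the `CONTEXT:` section in the prompt are hypothetical.

```python
import re
from typing import List, Set

# A tiny hypothetical knowledge base, e.g. snippets from your website or FAQ.
DOCUMENTS = [
    "Our Basic plan includes 10 GB of storage and one website.",
    "To reset your password, open Account Settings and choose Reset Password.",
    "Scheduled maintenance happens every Sunday between 02:00 and 04:00 UTC.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 1) -> List[str]:
    """Return up to k documents sharing the most words with the question."""
    q = tokenize(question)
    scored = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    # Drop documents with no overlap at all.
    return [d for d in scored[:k] if q & tokenize(d)]


def build_rag_prompt(question: str, docs: List[str], k: int = 1) -> str:
    """Insert the retrieved context into a SYSTEM/USER/ASSISTANT-style prompt."""
    context = "\n".join(retrieve(question, docs, k))
    return (
        "SYSTEM: Answer using only the context below.\n"
        f"CONTEXT:\n{context}\n"
        f"USER: {question}\n"
        "ASSISTANT:"
    )
```

The resulting prompt string is what would be sent to the LLM, so the model answers from your own data instead of only from what it learned in training.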

For this guide, we'll build a basic chatbot with Llama 2 as the LLM. Llama is a family of open-weight LLMs from Meta with strong performance, and quantized versions of it can be downloaded and run locally on modern CPUs/GPUs.

Step 1: Choosing the LLM

First, we need to download an open LLM such as Llama from a Hugging Face repository. These quantized models can run locally on modern CPUs/GPUs, and the ".gguf" format makes them easy to load. Alternatively, you could download the ".bin" model from the official Llama website or use an open-source LLM hosted on Hugging Face. For this guide, we'll download a GGUF file and run it locally.

- Go to the Llama 2 7B Chat – GGUF repository on Hugging Face.

- Open the "Files and versions" tab.

- Download the GGUF file you want from the list. Read through the repository first to understand the different versions on offer.
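Before loading a multi-gigabyte download, it can be worth a quick sanity check. GGUF files begin with the four ASCII bytes `GGUF`, so a sketch like the one below (the `looks_like_gguf` helper is our own, not part of any library) can catch a common mistake such as saving an HTML page instead of the model file.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # every GGUF file starts with these four bytes


def looks_like_gguf(path) -> bool:
    """Return True if the file exists and starts with the GGUF magic bytes."""
    p = Path(path)
    if not p.is_file():
        return False
    with p.open("rb") as f:
        return f.read(4) == GGUF_MAGIC


if __name__ == "__main__":
    # Replace with the file you downloaded.
    print(looks_like_gguf("dolphin-llama2-7b.Q4_K_M.gguf"))
```

This only checks the header, not the whole file, so a truncated download can still pass; comparing the file size with the one listed on the repository page is a useful complement.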

Step 2: Installation and Setup

Once the model is downloaded, place it in the folder where we'll build our Python LangChain code. Then create a Python file in the same folder, where we'll import the libraries we need. Open your favorite code editor (we'll be using Visual Studio Code), and first run this command in your terminal to install the required packages.

```bash
pip install langchain llama-cpp-python langchain_community langchain_core
```
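After running the install command, a small check like the following can confirm that everything is importable. It uses only the standard library; note that the import names differ slightly from the pip names (for example, llama-cpp-python is imported as `llama_cpp`).

```python
import importlib.util

# Import names for the packages installed with pip.
REQUIRED = ("langchain", "langchain_community", "langchain_core", "llama_cpp")


def missing_packages(names=REQUIRED):
    """Return the import names that Python cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("All set!" if not missing else f"Missing: {', '.join(missing)}")
```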

Next, import the libraries we need:

```python
from langchain_community.llms import LlamaCpp
from langchain_core.prompts import PromptTemplate
from langchain_core.output_parsers import StrOutputParser
```

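Note that the first import can be changed to "from langchain_community.llms import HuggingFaceHub" if you are using a model hosted on Hugging Face. (Newer LangChain releases deprecate HuggingFaceHub in favor of HuggingFaceEndpoint from the langchain-huggingface package.)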

Now we're ready to load and prepare our components, starting with the LLM.

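```python
llm = LlamaCpp(
    model_path="/Native LLM/dolphin-llama2-7b.Q4_K_M.gguf",  # path to your downloaded GGUF file
    n_gpu_layers=40,  # number of model layers offloaded to the GPU (0 = CPU only)
    n_batch=512,      # prompt tokens processed per batch
    max_tokens=100,   # maximum number of tokens to generate
    verbose=False,    # set to True for detailed logging when debugging
)
```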

Here we load the model and give it a few essential parameters. n_gpu_layers sets how many of the model's layers are offloaded to the GPU; the rest run on the CPU, so a lower value shifts more work to the CPU (GPU offloading also requires a llama-cpp-python build with GPU support). Llama 2 7B has 32 layers, so a value of 40 offloads all of them. n_batch sets how many prompt tokens the model processes at once. max_tokens caps the length of the generated answer. Finally, verbose controls whether we get detailed debugging information.
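If you switch between a GPU machine and a CPU-only laptop, it can help to build these keyword arguments in one place. The helper below is our own sketch: the keys are the LlamaCpp parameters used above plus n_ctx (the context window, which must hold both the prompt and the generated answer), and the default values are assumptions to tune rather than recommendations from this guide. In llama-cpp-python, n_gpu_layers=-1 offloads every layer.

```python
def llama_cpp_kwargs(model_path: str, use_gpu: bool,
                     max_tokens: int = 256, n_ctx: int = 2048) -> dict:
    """Build keyword arguments for langchain_community.llms.LlamaCpp (illustrative defaults)."""
    if max_tokens >= n_ctx:
        # The prompt also has to fit in the context window.
        raise ValueError("max_tokens must leave room for the prompt inside n_ctx")
    return {
        "model_path": model_path,
        "n_gpu_layers": -1 if use_gpu else 0,  # -1 = offload all layers, 0 = CPU only
        "n_batch": 512,                        # prompt tokens processed per batch
        "n_ctx": n_ctx,                        # context window (prompt + answer)
        "max_tokens": max_tokens,              # upper bound on generated tokens
        "verbose": False,
    }


# Usage (requires llama-cpp-python and the downloaded model file):
# llm = LlamaCpp(**llama_cpp_kwargs("dolphin-llama2-7b.Q4_K_M.gguf", use_gpu=True))
```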

Step 3: Prompt Template and Chain

We'll use two more components: a prompt template and a chain. The prompt template defines the text sent to the model, and it is also where techniques like RAG and memory inject extra context. Here we'll keep it simple: the template gives the chatbot some context, passes it the question, and asks it to answer. The chain then connects the prompt template to the model.

```python
# Prompt format used by the Dolphin Llama 2 model: SYSTEM, USER, ASSISTANT roles.
template = """SYSTEM: {system_message}
USER: {question}
ASSISTANT:
"""

# The placeholder names must match the keys passed to chain.invoke() later.
prompt = PromptTemplate(template=template, input_variables=["question", "system_message"])

system_message = """You are a customer service representative. You work for a web hosting company. Your job is to understand the user's query and respond.
If it's a technical problem, respond with something like "I will raise a ticket to help." Make sure you only respond to the given question."""
```

Here we create our prompt template, which tells the model how to act, what to expect, and how to format its output. The template's structure depends on the model. We're using the Dolphin Llama 2 7B model, whose prompt format uses three roles: SYSTEM, USER, and ASSISTANT. The system prompt tells the chatbot what to do, the user prompt holds what the user asks or says, and the assistant section is where the chatbot writes its answer. (If you downloaded the Llama 2 7B Chat file instead, adapt the template to that model's own [INST]-based format.) We then turn this into a LangChain prompt template with the PromptTemplate class, passing it two parameters: the template we defined and the names of its input variables.
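To see exactly what the model receives, the sketch below fills the template's placeholders with Python's `str.format`. This should match what PromptTemplate produces for this template, since its default template format is f-string style; the shortened system message and the sample question are illustrative.

```python
template = """SYSTEM: {system_message}
USER: {question}
ASSISTANT:
"""

# Shortened system message for illustration.
system_message = "You are a customer service representative for a web hosting company."

# Fill the placeholders the same way an f-string-style template does.
rendered = template.format(
    system_message=system_message,
    question="My website has been down all day",
)
print(rendered)
```

Because placeholders use braces, any literal `{` or `}` you want in the template text must be doubled (`{{` and `}}`), or formatting will fail.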

Now, let's connect everything with a chain.

```python
output_parser = StrOutputParser()
chain = prompt | llm | output_parser
```

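Here we did two things. First, we defined an output parser: StrOutputParser returns the model's output as a plain string (with chat models, it extracts the message text). Then we built the chain by linking the components with the "|" operator, part of the LangChain Expression Language (LCEL), which works with any LangChain Runnable: each component's output becomes the next component's input. Next, let's run the chatbot and test it.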
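To build intuition for how "|" composes steps, here is a toy plain-Python imitation. It is not LangChain's implementation (LangChain's Runnables overload "|" in a similar spirit, with far more features such as batching, streaming, and async); the `Step` class and the fake model are hypothetical stand-ins for the prompt, LLM, and parser.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a LangChain Runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose: run self first, then feed its output into `other`.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Hypothetical components mirroring prompt | llm | output_parser.
prompt = Step(lambda d: f"USER: {d['question']}\nASSISTANT:")
fake_llm = Step(lambda text: {"text": f"(model reply to: {text.splitlines()[0]})"})
parser = Step(lambda out: out["text"].strip())

chain = prompt | fake_llm | parser
print(chain.invoke({"question": "Is my site down?"}))
# (model reply to: USER: Is my site down?)
```

The dictionary goes into the first step and a plain string comes out of the last, which is the same data flow as the real chain above.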

Step 4: Inference and Testing

Now we can use the chain we just made. We'll run it in a simple while loop on every input we give.

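```python
print("Hi, how can we help?")

while True:
    question = input("> ")
    # Type "exit" or "quit" to end the session.
    if question.strip().lower() in {"exit", "quit"}:
        break
    answer = chain.invoke({"question": question, "system_message": system_message})
    print(answer, "\n")
```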

This while loop does the following:

- Gets input from the user.

- Passes the input to the chain and runs it with the invoke method, which takes a dictionary mapping each template variable name to its value.

- Prints the answer for the user.

We also added a simple print statement saying "Hi, how can we help?" before the loop as a welcome note to the user. Now let's look at some example input and output.

```text
Hi, how can we help?
> Hi My website has been down all day
I'm sorry to hear that your website has been experiencing issues. I understand that this is causing some inconvenience. To better assist you and resolve this problem, I will raise a ticket with our support team to look into the issue and work on getting your website back up and running as soon as possible. Please provide more information about the error or issue you are encountering, such as any error messages or steps that you have already taken, and I will make sure to include this in the
```

The chatbot responds well to the query and follows the system instructions in the template. However, the reply is cut off mid-sentence because of the max_tokens limit we set. We could adjust how the output is displayed, and use techniques like summarization, memory, and RAG to enhance the chatbot's functionality.

```text
> Thanks, I need this resolved asap please
I understand your concern and will make sure to prioritize your request. Please provide more details about the issue you are experiencing, so that I can better assist you in resolving it as soon as possible.
```
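The first reply in this session was cut off by max_tokens. One simple display-side adjustment is to drop the trailing fragment, as in the sketch below. The `trim_to_last_sentence` helper is our own, and it treats any ".", "!", or "?" followed by whitespace or the end of the text as a sentence end, so abbreviations like "e.g." can confuse it.

```python
import re


def trim_to_last_sentence(text: str) -> str:
    """Drop a trailing fragment left when generation stops at max_tokens."""
    text = text.strip()
    # Sentence-ending punctuation followed by whitespace or the end of the text.
    matches = list(re.finditer(r"[.!?](?=\s|$)", text))
    if not matches:
        return text  # no complete sentence found; keep the text as-is
    return text[: matches[-1].end()]
```

In the chain above, this could be appended as one more step, for example `prompt | llm | output_parser | RunnableLambda(trim_to_last_sentence)` with RunnableLambda imported from langchain_core.runnables. Raising max_tokens is the other obvious fix, at the cost of longer generation time.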

The bot can keep chatting as long as the loop is running, but it has no access to previous messages because we haven't added a memory component. Memory is a more advanced topic; if you're interested, we recommend diving into the LangChain tutorials and implementing it. Now that we've explored how to build chatbots with LangChain, let's look at what the future holds for chatbots.
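As a starting point before reaching for LangChain's own memory utilities (whose APIs have changed across versions, so check the current docs), the core idea can be sketched in plain Python: keep recent exchanges and replay them in the prompt. The `ChatMemory` class and `chat_turn` function are our own; `generate` is any callable that takes a prompt string and returns text. With the model from this guide, `llm.invoke` should fit, since LangChain LLM objects accept a prompt string and return a string.

```python
from collections import deque
from typing import Callable, Deque, Tuple


class ChatMemory:
    """Keep the last `max_turns` (user, assistant) exchanges."""

    def __init__(self, max_turns: int = 5):
        # deque with maxlen silently drops the oldest turn when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_prompt(self, system_message: str, question: str) -> str:
        """Render history plus the new question in SYSTEM/USER/ASSISTANT format."""
        lines = [f"SYSTEM: {system_message}"]
        for user, assistant in self.turns:
            lines.append(f"USER: {user}")
            lines.append(f"ASSISTANT: {assistant}")
        lines.append(f"USER: {question}")
        lines.append("ASSISTANT:")
        return "\n".join(lines)


def chat_turn(memory: ChatMemory, generate: Callable[[str], str],
              system_message: str, question: str) -> str:
    """Ask the model with the conversation so far, then remember the exchange."""
    answer = generate(memory.as_prompt(system_message, question)).strip()
    memory.add(question, answer)
    return answer
```

Capping the number of stored turns keeps the prompt inside the model's context window; summarizing older turns instead of dropping them is a common refinement.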

Chatbots: The Road Ahead

LLMs and chatbots have developed substantially in recent years. Every day, developers and researchers seem to push the boundaries further, opening up new ways for us to use and interact with Artificial Intelligence. Building chatbot-based apps and websites has become easier than ever, which can improve the user experience for any business. Still, we can feel the difference between talking with a chatbot and talking with a human, so researchers keep working to make these interactions smoother and the models feel as natural as possible.

Going forward, we can expect AI chatbots almost everywhere: customer support, restaurants, online services, real estate, banking, and nearly every other field or business. These chatbots also pave the way for better conversational agents; combining the models with advanced speech algorithms makes conversations smoother and more natural.

While the future of chatbots seems limitless, all this progress has a serious side: ethics. We should acknowledge issues like privacy breaches, the spread of misinformation, and AI biases that can creep into conversations. Raising awareness among developers and users will help ensure these chatbots play fair and stay honest.


FAQs

Q1. What is LangChain?

A. A framework for building LLM-powered applications, such as chatbots, by chaining together different components.

Q2. How do LLMs enhance chatbots?

A. LLMs enable chatbots to understand and generate human-like text, leading to more natural and engaging conversations.

Q3. What are the different types of chatbots?

A. The main types of chatbots are:

- Rule-based (predefined rules)

- AI-powered (using LLMs)

- Task-oriented (specific tasks)

- Conversational (open-ended)

Q4. Why use LangChain to develop chatbots?

A. LangChain offers a modular approach that lets developers build more sophisticated chatbot systems by connecting various components and using their preferred LLM. This flexibility is key to creating highly customized and interactive chatbot experiences. LangChain also provides features that would otherwise be hard to build, such as memory, agents, and retrieval.