Imagine searching for similar items based on deeper insights instead of just keywords. That is what vector databases and similarity search help with. Vector databases enable vector similarity search, which uses the distance between vectors to find the data points closest to a search query.

However, similarity search in high-dimensional data can be slow and resource-intensive. Enter quantization techniques! They play an important role in optimizing data storage and accelerating data retrieval in vector databases.

This article explores various quantization techniques, their types, and real-world use cases.

What Is Quantization and How Does It Work?

Quantization is the process of converting continuous data into discrete data points. Especially when you’re dealing with billion-scale parameters, quantization is essential for managing and processing the data. In vector databases, quantization transforms high-dimensional data into a compressed space while preserving important features and vector distances.

Quantization significantly reduces memory bottlenecks and improves storage efficiency.

The process of quantization includes three key steps:

1. Compressing High-Dimensional Vectors

In quantization, we use techniques like codebook generation, feature engineering, and encoding. These techniques compress high-dimensional vector embeddings into a low-dimensional subspace. In other words, the vector is split into numerous subvectors. Vector embeddings are numerical representations of audio, images, videos, text, or signal data, enabling easier processing.

2. Mapping to Discrete Values

This step involves mapping the low-dimensional subvectors to discrete values. The mapping further reduces the number of bits of each subvector.

3. Compressed Vector Storage

Finally, the mapped discrete values of the subvectors are stored in the database in place of the original vector. Because the compressed data represents the same information in fewer bits, storage is optimized.

Benefits of Quantization for Vector Databases

Quantization offers a range of benefits, resulting in improved computation and a reduced memory footprint.

1. Efficient Scalable Vector Search

Quantization optimizes vector search by reducing the cost of each comparison. Therefore, vector search requires fewer resources, improving its overall efficiency.

2. Memory Optimization

Quantized vectors allow you to store more data within the same space. Furthermore, data indexing and search are also optimized.
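To make the memory saving concrete, here is a back-of-the-envelope calculation. The collection size (one million vectors) and the dimensionality (768) are illustrative assumptions, not figures from this article, and the estimate ignores index and metadata overhead.

```python
# Illustrative assumptions: 1 million vectors with 768 dimensions each.
NUM_VECTORS = 1_000_000
DIMENSIONS = 768


def storage_bytes(bits_per_component: int) -> int:
    """Raw vector storage in bytes, ignoring index and metadata overhead."""
    return NUM_VECTORS * DIMENSIONS * bits_per_component // 8


float32_bytes = storage_bytes(32)  # original float32 embeddings
int8_bytes = storage_bytes(8)      # 8-bit integers per component
binary_bytes = storage_bytes(1)    # one bit per component

for name, size in [("float32", float32_bytes), ("int8", int8_bytes), ("binary", binary_bytes)]:
    print(f"{name:>8}: {size / 1e6:,.0f} MB, {float32_bytes // size}x compression")
```

Under these assumptions, the raw vectors shrink from about 3,072 MB to 768 MB at 8 bits per component and to 96 MB at 1 bit per component.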

3. Speed

With efficient storage and retrieval comes faster computation. Reduced dimensions allow faster processing, including data manipulation, querying, and predictions.

Some popular vector databases like Qdrant, Pinecone, and Milvus offer various quantization techniques with different use cases.

Use Cases

The ability of quantization to reduce data size while preserving significant information makes it a valuable asset.

Let’s dive deeper into a few of its applications.

1. Image and Video Processing

Image and video data have a broader range of parameters, significantly increasing computational complexity and memory footprint. Quantization compresses the data without losing important details, enabling efficient storage and processing. This speeds up searches for images and videos.

2. Machine Learning Model Compression

Training AI models on large data sets is an intensive task. Quantization helps by reducing model size and complexity without compromising the model’s efficiency.

3. Signal Processing

Signal data represents continuous data points like GPS or surveillance footage. Quantization maps the data into discrete values, allowing faster storage and analysis. Furthermore, efficient storage and analysis speed up search operations, enabling faster signal comparison.

Different Quantization Techniques

While quantization allows seamless handling of billion-scale parameters, it risks irreversible information loss. However, finding the right balance between acceptable information loss and compression improves efficiency.

Each quantization technique comes with pros and cons. Before you choose, you should understand your compression requirements, as well as the strengths and limitations of each technique.

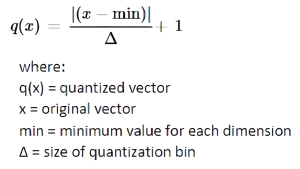

1. Binary Quantization

Binary quantization is a method that converts every component of a vector embedding into 0 or 1. If a value is greater than 0, it is mapped to 1; otherwise it is mapped to 0. It therefore converts high-dimensional data into a significantly more compact representation, allowing faster similarity search.

Formula

The formula is:

Binary quantization formula. Image by author.
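Written out from the rule described above, with $x_i$ as the $i$-th component of the original vector and $b_i$ as its quantized bit (both symbol names are chosen here for illustration), the mapping is:

$$
b_i =
\begin{cases}
1, & x_i > 0 \\
0, & \text{otherwise}
\end{cases}
$$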

Here’s an example of how binary quantization works on a vector.

Graphical illustration of binary quantization. Image by author.
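The same idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used by any particular vector database: the sample vectors are made up, the function names are hypothetical, and comparing codes with Hamming distance is an assumption about how binary codes are typically compared.

```python
import numpy as np


def binary_quantize(vectors: np.ndarray) -> np.ndarray:
    """Map each component to 1 if it is greater than 0, else 0, and pack 8 bits per byte."""
    bits = (vectors > 0).astype(np.uint8)
    return np.packbits(bits, axis=-1)


def hamming_distance(a: np.ndarray, b: np.ndarray) -> int:
    """Count the bits that differ between two packed binary codes (assumed metric)."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())


# Made-up sample vectors for illustration.
vector = np.array([0.8, -0.3, 0.1, -1.2, 0.0, 2.4, -0.7, 0.5], dtype=np.float32)
other = np.array([0.6, 0.2, -0.4, -0.9, 0.3, 1.1, -0.2, 0.9], dtype=np.float32)

code = binary_quantize(vector)
print(np.unpackbits(code))                             # [1 0 1 0 0 1 0 1]
print(vector.nbytes, "bytes ->", code.nbytes, "byte")  # 32 bytes -> 1 byte
print(hamming_distance(code, binary_quantize(other)))  # 3
```

Eight 32-bit floats collapse into a single byte here, which is where the 32x memory reduction comes from.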

Strengths

- Fastest search, surpassing both scalar and product quantization techniques.

- Reduces memory footprint by a factor of 32.

Limitations

- Higher ratio of information loss.

- Vector components require a mean approximately equal to zero.

- Poor performance on low-dimensional data due to higher information loss.

- Rescoring is required for the best results.

Vector databases like Qdrant and Weaviate offer binary quantization.
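The rescoring step listed under the limitations can be sketched as a two-stage search: a cheap pass over the binary codes selects more candidates than needed, and the original vectors then re-rank only those candidates. The sketch below uses synthetic data, and the `top_k` and `oversample` parameter names are hypothetical; real databases expose their own settings for this.

```python
import numpy as np

rng = np.random.default_rng(0)
vectors = rng.standard_normal((1000, 64)).astype(np.float32)  # synthetic, zero-mean data
query = rng.standard_normal(64).astype(np.float32)

codes = np.packbits(vectors > 0, axis=1)  # binary codes, 8 bytes per vector
query_code = np.packbits(query > 0)


def search_with_rescoring(top_k: int = 5, oversample: int = 4) -> np.ndarray:
    # Stage 1: cheap Hamming-distance search over the binary codes.
    hamming = np.unpackbits(codes ^ query_code, axis=1).sum(axis=1)
    candidates = np.argsort(hamming)[: top_k * oversample]
    # Stage 2: rescore only the candidates with the original float vectors.
    exact = np.linalg.norm(vectors[candidates] - query, axis=1)
    return candidates[np.argsort(exact)[:top_k]]


print(search_with_rescoring())
```

The design trade-off is that the original vectors must still be kept somewhere (for example on disk), so rescoring recovers accuracy at the cost of extra storage and a second lookup.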

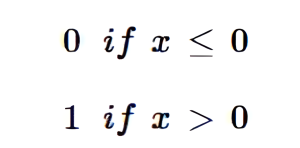

2. Scalar Quantization

Scalar quantization converts floating-point or decimal numbers into integers. This starts with identifying a minimum and maximum value for each dimension. The identified range is then divided into several bins. Finally, each value in each dimension is assigned to a bin.

The level of precision or detail in quantized vectors depends on the number of bins. More bins result in higher accuracy by capturing finer details. Therefore, the accuracy of vector search also depends on the number of bins.

Formula

The formula is:

Scalar quantization formula. Image by author.
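One common way to write the binning described above is shown here. It is a sketch under stated assumptions rather than a reproduction of the figure: $x$ is a value in a given dimension, $x_{\min}$ and $x_{\max}$ are that dimension’s minimum and maximum, $B$ is the number of bins, and $q$ is the resulting integer bin index (all symbol names are chosen for illustration).

$$
q = \operatorname{round}\!\left( \frac{x - x_{\min}}{x_{\max} - x_{\min}} \cdot (B - 1) \right), \qquad q \in \{0, 1, \dots, B - 1\}
$$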

Here’s an example of how scalar quantization works on a vector.

Graphical illustration of scalar quantization. Image by author.
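A minimal NumPy sketch of the min/max binning follows. The sample values and function names are made up for illustration, and storing codes as `uint8` assumes at most 256 bins; it is not the implementation of any specific database.

```python
import numpy as np


def scalar_quantize(vectors: np.ndarray, num_bins: int = 256):
    """Quantize each dimension to integer bin indices in [0, num_bins - 1]."""
    lo = vectors.min(axis=0)             # per-dimension minimum
    hi = vectors.max(axis=0)             # per-dimension maximum
    scale = (hi - lo) / (num_bins - 1)   # width of one bin per dimension
    scale[scale == 0] = 1.0              # avoid division by zero for constant dimensions
    codes = np.round((vectors - lo) / scale).astype(np.uint8)  # assumes num_bins <= 256
    return codes, lo, scale


def dequantize(codes: np.ndarray, lo: np.ndarray, scale: np.ndarray) -> np.ndarray:
    """Approximate reconstruction; the rounding error is not recoverable."""
    return codes.astype(np.float32) * scale + lo


# Made-up sample data: three vectors with three dimensions each.
vectors = np.array([[0.12, -1.50, 3.3],
                    [0.90,  0.25, 2.1],
                    [0.47,  1.75, 0.9]], dtype=np.float32)

codes, lo, scale = scalar_quantize(vectors)
restored = dequantize(codes, lo, scale)
print(codes)
print(np.abs(vectors - restored).max())  # reconstruction error, at most about half a bin width
```

The `dequantize` step shows why the process is only partially reversible: values come back close to the originals, but the rounding error within each bin is lost.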

Strengths

- Significant memory optimization.

- Small information loss.

- Partially reversible process.

- Fast compression.

- Efficient scalable search due to small information loss.

Limitations

- A slight decrease in search quality.

- Low-dimensional vectors are more susceptible to information loss, as each data point carries important information.

Vector databases such as Qdrant and Milvus offer scalar quantization.

3. Product Quantization

Product quantization divides the vectors into subvectors. For each section, the center points, or centroids, are calculated using clustering algorithms. Each subvector is then represented by its closest centroid.

Similarity search in product quantization works by dividing the query vector into the same number of subvectors. Then, a list of similar results is created in ascending order of distance from each subvector’s centroid to each query subvector. Since the vector search process compares the distance from query subvectors to the centroids of the quantized vector, the search results are less accurate. However, product quantization speeds up the similarity search process, and higher accuracy can be achieved by increasing the number of subvectors.

Formula

Finding centroids is an iterative process. It recalculates the Euclidean distance between each data point and its centroid until convergence. The formula for Euclidean distance in n-dimensional space is:

Product quantization formula. Image by author.
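For two points $p$ and $q$ in $n$-dimensional space (symbol names chosen here for illustration), the standard Euclidean distance is:

$$
d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}
$$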

Here’s an example of how product quantization works on a vector.

Graphical illustration of product quantization. Image by author.
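The training, encoding, and search steps described above can be sketched with NumPy. This is a simplified illustration on synthetic data, assuming a plain k-means loop with a fixed number of iterations and made-up parameters (4 subvectors, 16 centroids each); all function names are hypothetical and production libraries are considerably more optimized.

```python
import numpy as np


def kmeans(data: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Plain k-means with a fixed iteration count; returns k centroids."""
    rng = np.random.default_rng(seed)
    centroids = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iters):
        # Assign every point to its nearest centroid (Euclidean distance).
        dists = np.linalg.norm(data[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = data[labels == j]
            if len(members):  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return centroids


def train_codebooks(vectors, num_subvectors, num_centroids):
    """Build one codebook (set of centroids) per subvector position."""
    chunks = np.split(vectors, num_subvectors, axis=1)
    return [kmeans(chunk, num_centroids) for chunk in chunks]


def encode(vectors, codebooks):
    """Replace each subvector with the index of its closest centroid."""
    chunks = np.split(vectors, len(codebooks), axis=1)
    codes = [
        np.linalg.norm(chunk[:, None, :] - book[None, :, :], axis=2).argmin(axis=1)
        for chunk, book in zip(chunks, codebooks)
    ]
    return np.stack(codes, axis=1).astype(np.uint8)


def search(query, codes, codebooks, top_k=3):
    """Rank stored vectors by summed squared distance from query subvectors to centroids."""
    q_chunks = np.split(query, len(codebooks))
    # Distance table: one row per subvector position, one column per centroid.
    table = np.stack([((book - q) ** 2).sum(axis=1) for q, book in zip(q_chunks, codebooks)])
    approx = table[np.arange(len(codebooks)), codes].sum(axis=1)
    return np.argsort(approx)[:top_k]  # ascending order of approximate distance


rng = np.random.default_rng(42)
vectors = rng.standard_normal((500, 16)).astype(np.float32)  # synthetic data
codebooks = train_codebooks(vectors, num_subvectors=4, num_centroids=16)
codes = encode(vectors, codebooks)

print(codes.shape, codes.dtype)  # (500, 4) uint8
print(search(vectors[0], codes, codebooks))
```

In this toy setup each 16-dimensional float32 vector (64 bytes) is stored as 4 one-byte centroid indices, and the query is compared only against centroids, which is why results are approximate.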

Strengths

- Highest compression ratio.

- Better storage efficiency than other techniques.

Limitations

- Not suitable for low-dimensional vectors.

- Resource-intensive compression.

Vector databases like Qdrant and Weaviate offer product quantization.

Choosing the Right Quantization Method

Each quantization method has its pros and cons. Choosing the right method depends on factors that include, but are not limited to:

- Data dimension

- Compression-accuracy tradeoff

- Efficiency requirements

- Resource constraints

Consider the comparison chart below to better understand which quantization technique suits your use case. This chart highlights accuracy, speed, and compression factors for each quantization method.

Image by Qdrant

From storage optimization to faster search, quantization mitigates the challenges of storing billion-scale parameters. However, understanding requirements and tradeoffs beforehand is crucial for successful implementation.

For more information on the latest trends and technology, visit Unite AI.
