Deepfakes are changing the way we see videos and images: we can no longer take pictures or video footage at face value. Advanced AI techniques now enable the generation of highly realistic images, making it increasingly difficult, or even impossible, to distinguish between what's real and what's digitally created.

In the following, we explain what deepfakes are and how to identify them, and we discuss the impact of AI-generated images and videos.

What are Deepfakes?

Deepfakes are a form of synthetic media in which a person in an existing image or video is replaced or manipulated using advanced artificial intelligence techniques. The primary goal is to create alterations or fabrications that are virtually indistinguishable from authentic content.

This sophisticated technology relies on deep learning algorithms, particularly generative adversarial networks (GANs), to blend and manipulate visual and auditory elements, resulting in highly convincing and often deceptive multimedia content.

Technical advances and the release of powerful tools for creating deepfakes are affecting many domains. This raises concerns about misinformation and privacy, along with the need for strong detection and authentication mechanisms.

History and Rise of Deepfake Technology

The concept emerged from academic research in the early 2010s that focused on facial recognition and computer vision. In 2014, the introduction of Generative Adversarial Networks (GANs) marked a major advance in the field. This breakthrough in deep learning enabled more sophisticated and realistic image manipulation.

Advances in AI algorithms and machine learning, together with the growing availability of data and computing power, are fueling the rapid evolution of deepfakes. Early deepfakes required significant skill and computing resources, which meant that only a small group of highly specialized people could create them.

However, the technology is becoming increasingly accessible, with user-friendly deepfake creation tools enabling wider use. This democratization of deepfake technology has led to an explosion of both creative and malicious uses. Today, it is a topic of significant public interest and concern, especially around political election cycles.

The Role of AI and Machine Learning

AI and machine learning, particularly GANs, are the primary technologies for creating deepfakes. A GAN consists of two models: a generator and a discriminator. The generator creates images or videos, while the discriminator evaluates whether they are real or fake.

Through iterative training, the generator continually improves its output to fool the discriminator, while the discriminator gets better at spotting fakes. This adversarial loop continues until the system produces highly realistic and convincing deepfakes.
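To make the adversarial loop concrete, the minimal sketch below computes the two losses that drive it, using the standard binary cross-entropy formulation of a GAN together with the widely used "non-saturating" generator loss. It assumes the discriminator already outputs probabilities (values between 0 and 1). The function names are illustrative; this is not the training code of any particular deepfake tool, which would compute these losses on network outputs inside a deep learning framework.

```python
import math

EPS = 1e-12  # guards against log(0) for probabilities of exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities D(x) the discriminator assigns to real images (ideally near 1).
    d_fake: probabilities D(G(z)) it assigns to generated images (ideally near 0).
    """
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator calls fakes real."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily separates real from fake.
print(discriminator_loss([0.9], [0.1]), generator_loss([0.1]))
# At the theoretical equilibrium the discriminator can only guess (0.5 everywhere).
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
```

With a confident discriminator (D(x) = 0.9 on a real image, D(G(z)) = 0.1 on a fake), the discriminator loss is about 0.21 while the generator loss is about 2.30, so the gradient pressure falls on the generator. At equilibrium the losses become 2 ln 2 ≈ 1.386 and ln 2 ≈ 0.693: the generated images have become indistinguishable from real ones as far as this discriminator can tell.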

Another important aspect is the training data. To create a convincing deepfake, the AI requires a large dataset of images or videos of the target person. The quality and variety of this data strongly influence the realism of the output.

AI models and deep learning algorithms analyze this data, learning intricate details about a person's facial features, expressions, and movements. The AI then attempts to replicate these aspects in other contexts or onto another person's face.

While the technology holds immense potential in areas like entertainment, journalism, and social media, it also poses significant ethical, security, and privacy challenges. Understanding how it works and what it implies is essential in today's digital age.

Deepfakes in the Real World

Deepfakes are becoming harder to distinguish from reality while also becoming more commonplace. This is creating friction between proponents of their potential and those with fundamental ethical concerns about their use.

Entertainment and Media: Altering Narratives

Deepfakes are opening new avenues for creativity and storytelling. Filmmakers and content creators can use the likeness of people or characters without their physical presence.

AI makes it possible to insert actors into scenes in post-production or to resurrect deceased celebrities for new roles. However, this raises ethical questions about consent and artistic integrity, and it blurs the line between reality and fiction, potentially misleading viewers.

Filmmakers are using deepfake and related AI technology in mainstream content, including blockbuster films:

- Martin Scorsese's "The Irishman": An example of using AI-assisted visual effects to de-age actors.

- Rogue One: A Star Wars Story: Digital face replacement was used to recreate the late actor Peter Cushing's character, Grand Moff Wilhuff Tarkin.

- Roadrunner: In this documentary about Anthony Bourdain, audio deepfake technology (voice cloning) was used to synthesize his voice.

The growing use of AI generation contributed to the SAG-AFTRA (Screen Actors Guild-American Federation of Television and Radio Artists) strike. Actors raised concerns about losing control over their digital likenesses and being replaced by AI-generated performances.

Politics and Cybersecurity: Influencing Perceptions

In the political arena, deepfakes are a potent tool for misinformation, capable of distorting public opinion and undermining democratic processes at scale. They can produce alarmingly realistic videos of leaders or public figures, leading to false narratives and social discord.

A well-known example of political video manipulation was the altered video of Nancy Pelosi. It did not even require AI: a real clip of her was simply slowed down to make her appear impaired. There are also instances of audio deepfakes, such as the fraudulent use of a CEO's cloned voice in major corporate heists.

One of the most notable instances of an AI-generated political advertisement in the United States occurred when the Republican National Committee released a 30-second ad that was disclosed as being entirely generated by AI.

Business and Marketing: Emerging Uses

In business and marketing, deepfakes offer a novel way to engage customers and tailor content. However, they also pose significant risks to the authenticity of brand messaging. Misuse of the technology can lead to fake endorsements or misleading corporate communications, which can erode consumer trust and damage brand reputation.

Marketing campaigns are using deepfakes for more personalized and impactful advertising. Audio deepfakes extend these capabilities further, enabling synthetic celebrity voices for targeted advertising. One example is David Beckham's multilingual public service announcement, which used deepfake technology to make him appear to speak several languages.

The Dark Side of Deepfakes: Security and Privacy Concerns

The impact of deepfake technology on society is not limited to the media. It can also serve as a tool for cybercrime and for sowing public discord on a large scale.

Threats to National Security and Individual Identity

Deepfakes pose a significant threat to individual identity and national security.

From a national security perspective, deepfakes are a potential weapon in cyber warfare. Bad actors are already using them to create fake videos or audio recordings for malicious purposes such as blackmail, espionage, and spreading disinformation. Weaponized in this way, deepfakes can create false narratives, stir political unrest, or incite conflict between nations.

It's not hard to imagine organized campaigns using deepfake videos to sow public discord on social media. Artificially created footage has the potential to influence elections and heighten geopolitical tensions.

Personal Risks and Defamation Potential

For individuals, the broad accessibility of generative AI leads to a heightened risk of identity theft and personal defamation. One recent case highlights the potential impact of manipulated content: a Pennsylvania mother used deepfake videos to harass members of her daughter's cheerleading team. The videos caused significant personal and reputational harm to the victims, and the mother was found guilty of harassment in court.

Impact on Privacy and Public Trust

Deepfakes also severely affect privacy and erode public trust. As the authenticity of digital content becomes increasingly questionable, the credibility of media, political figures, and institutions is undermined. This mistrust pervades all aspects of digital life, from fake news to social media platforms.

An example is Chris Ume's social media account, where he posts deepfake videos that turn actor Miles Fisher into Tom Cruise. Although the videos are purely for entertainment, they are a stark reminder of how convincing hyper-realistic deepfakes have become. Such examples show the potential for deepfakes to sow widespread mistrust in digital content.

The growing sophistication of deepfakes, coupled with their potential for misuse, presents a critical challenge. It underscores the need for concerted efforts from technology developers, legislators, and the public to counter these risks.

How to detect Deepfakes

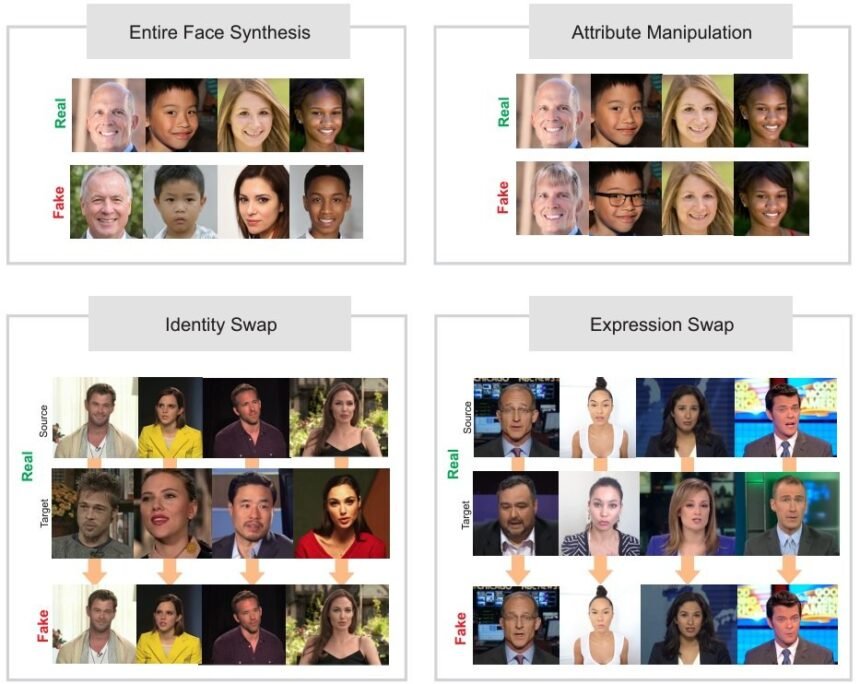

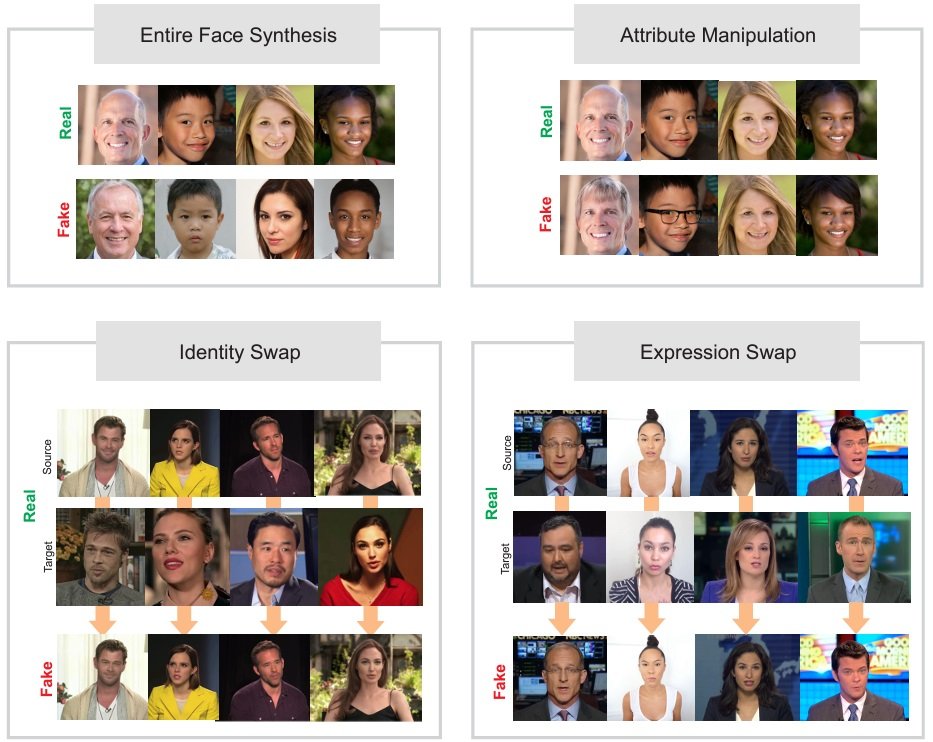

Deepfake detection has become an important research topic. An interesting research paper surveys deepfake detection methods in the field of digital face manipulation. The study details the various types of facial manipulation, deepfake techniques, public research databases, and benchmarks for detection tools. In the following, we highlight the most important findings:

Key findings and detection methods:

- Detection in Controlled Scenarios: The study shows that most current face manipulations are easy to detect under controlled conditions, that is, when deepfake detectors are tested under the same conditions in which they were trained. In this setting, manipulation detection achieves very low error rates.

- Generalization Challenges: Further research is still needed on how well fake detectors generalize to unseen conditions.

- Fusion Techniques: The study suggests that fusion at the feature or score level could provide better adaptation to different scenarios. This includes combining different sources of information such as steganalysis, deep learning features, RGB-depth, and infrared information.

- Multiple Frames and Additional Information Sources: Detection systems that use face weighting and consider multiple frames, as well as other information sources such as text, keystrokes, or the audio accompanying videos, could significantly improve detection capabilities.
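Score-level fusion and multi-frame analysis, as listed above, can be illustrated with a short sketch. It assumes each detector already outputs a probability that the content is fake; the per-frame `face_weight` (for example, a face detector's confidence), the detector names, and the fusion weights are all hypothetical. The survey does not prescribe this particular scheme; a weighted average is simply one of the most basic fusion rules, and in practice the weights would be tuned on held-out validation data.

```python
from dataclasses import dataclass


@dataclass
class FrameScore:
    fake_prob: float    # per-frame probability that the face is manipulated (0..1)
    face_weight: float  # hypothetical weight, e.g. face-detection confidence


def aggregate_frames(frames):
    """Face-weighted average of per-frame fake probabilities for one video."""
    total_weight = sum(f.face_weight for f in frames)
    if total_weight == 0:
        raise ValueError("no usable face detections")
    return sum(f.fake_prob * f.face_weight for f in frames) / total_weight


def fuse_scores(scores, weights):
    """Score-level fusion: weighted mean of scores from independent detectors."""
    if set(scores) != set(weights):
        raise ValueError("every detector needs exactly one weight")
    total = sum(weights.values())
    return sum(scores[name] * weights[name] for name in scores) / total


# A blurry frame (low face weight) barely moves the video-level score.
frames = [FrameScore(0.9, 1.0), FrameScore(0.2, 0.1), FrameScore(0.8, 0.9)]
video_score = aggregate_frames(frames)  # (0.9 + 0.02 + 0.72) / 2.0 = 0.82
combined = fuse_scores({"visual": video_score, "audio": 0.4},
                       {"visual": 0.7, "audio": 0.3})
print(video_score, combined)
```

Weighting frames by face quality keeps a single badly detected frame from dominating the verdict, and fusing a visual score with an audio score lets one modality compensate when the other detector faces conditions it was not trained on.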

Areas Highlighted for Improvement and Future Trends:

- Face Synthesis: Current manipulations based on GAN architectures, such as StyleGAN, produce very realistic images. However, most detectors can distinguish between real and fake images with high accuracy thanks to specific GAN fingerprints. Removing these fingerprints is still challenging: one approach is to add noise patterns, but the output must remain realistic.

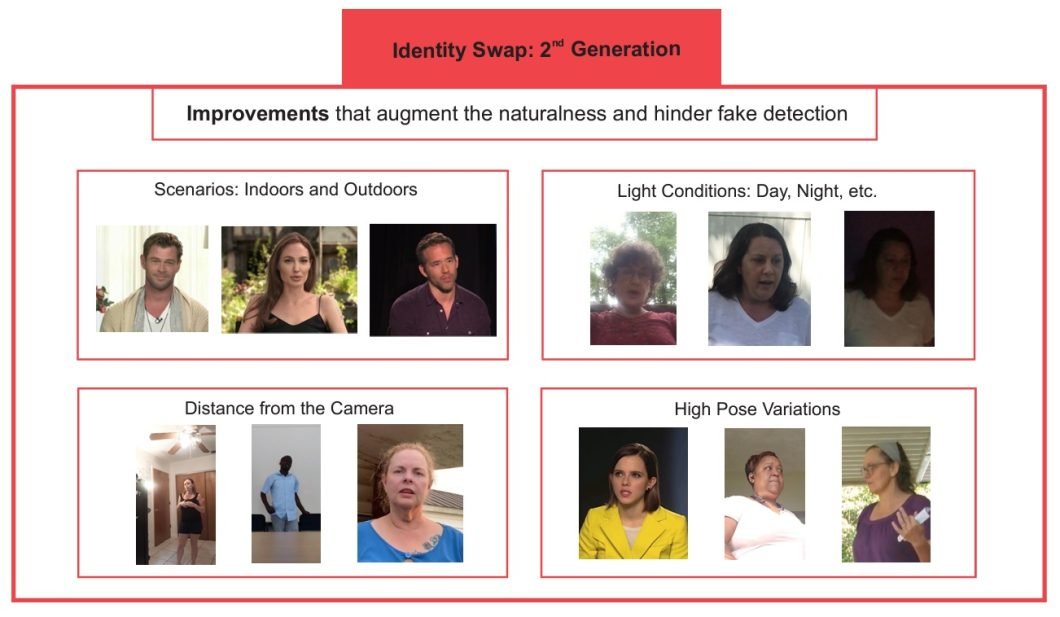

- Identity Swap: It is difficult to determine the best approach for identity swap detection because of factors such as training on specific databases and differing levels of compression. The study highlights the poor generalization of these approaches to unseen conditions. There is also a need to standardize metrics and experimental protocols.

- DeepFake Databases: On the latest DeepFake databases, such as DFDC and Celeb-DF, detection performance degrades considerably. In the case of Celeb-DF, AUC results fall below 60%. This indicates a need for improved detection systems, possibly driven by large-scale challenges and benchmarks.

- Attribute Manipulation: As with face synthesis, most attribute manipulations are based on GAN architectures. There is a scarcity of public research databases in this area and a lack of standard experimental protocols.

- Expression Swap: Research on facial expression swap detection has focused primarily on the FaceForensics++ database. The study encourages the creation and publication of more realistic databases built with recent techniques.
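The AUC figures mentioned above are easier to interpret with the metric written out. AUC (area under the ROC curve) equals the probability that a randomly chosen fake sample receives a higher "fake" score than a randomly chosen real one, so 1.0 is a perfect detector and 0.5 is a coin flip; an AUC below 60% is therefore not far from guessing. The sketch below computes it directly from that pairwise definition (equivalent to the Mann-Whitney U statistic). The labels and scores in the example are toy values for illustration only, not results from the study.

```python
def roc_auc(labels, scores):
    """ROC AUC from the pairwise (Mann-Whitney U) definition.

    labels: 1 for fake (positive class), 0 for real.
    scores: detector outputs, higher means "more likely fake".
    A tie between a fake and a real sample counts as half a correct ranking.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one real and one fake sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 1, 0, 0, 0]
# Toy scores on "seen" data: every fake outranks every real sample.
print(roc_auc(labels, [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]))
# Toy scores on "unseen" data: rankings overlap, so AUC drops toward 0.5.
print(roc_auc(labels, [0.6, 0.4, 0.3, 0.5, 0.35, 0.2]))
```

The first call returns 1.0 and the second 6/9 ≈ 0.67, mirroring the gap between controlled and unseen conditions described above. This quadratic loop is fine for illustration; for real evaluations a library routine such as scikit-learn's `roc_auc_score` is the usual choice.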

How to mitigate the risks of Deepfakes

Despite their potential to open new doors in content creation, deepfakes raise serious ethical concerns. To mitigate the risks, individuals and organizations must adopt proactive and informed strategies:

- Education and Awareness: Stay informed about the nature and capabilities of deepfake technology. Regular training sessions can help employees recognize potential threats.

- Implementing Verification Processes: Verify the source and authenticity of potentially manipulated media before sharing or acting on it. Use reverse image search tools and fact-checking websites.

- Investing in Detection Technology: Organizations, particularly those in media and communications, should invest in advanced deepfake detection software to scrutinize content. For example, there are commercial and open-source tools that can distinguish human voices from AI-generated ones.

- Legal Preparedness: Understand the legal implications and prepare policies to address potential misuse. This includes clear guidelines on intellectual property rights and privacy.
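One building block behind reverse image search is near-duplicate matching: if a suspicious image closely matches an earlier original, you can compare the two and trace the source. Commercial search engines do not publish their exact methods, so the sketch below uses a well-known simple technique, the average hash (aHash), as a stand-in. The image is represented as a 2D list of grayscale values (0 to 255) to keep the example dependency-free, and the function names are illustrative. Note that a matching hash only helps locate a likely original; it does not by itself prove that an image is or is not a deepfake.

```python
def average_hash(gray, hash_size=8):
    """Average hash (aHash) of a grayscale image given as a 2D list of 0..255 values.

    The image is shrunk to hash_size x hash_size cells by block averaging; each cell
    becomes a 1 bit if it is brighter than the mean of all cells, else a 0 bit.
    """
    h, w = len(gray), len(gray[0])
    if h < hash_size or w < hash_size:
        raise ValueError("image is smaller than the hash grid")
    cells = []
    for i in range(hash_size):
        r0, r1 = i * h // hash_size, (i + 1) * h // hash_size
        for j in range(hash_size):
            c0, c1 = j * w // hash_size, (j + 1) * w // hash_size
            block = [gray[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a, b):
    """Number of differing bits; small distances suggest near-duplicate images."""
    return bin(a ^ b).count("1")


# A 16x16 horizontal gradient, a slightly brightened copy, and a mirrored copy.
original = [[c * 16 for c in range(16)] for _ in range(16)]
brightened = [[min(255, v + 10) for v in row] for row in original]
mirrored = [row[::-1] for row in original]
print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(mirrored)))    # 64
```

Because aHash compares each cell with the image's own mean, global edits such as brightening leave the hash unchanged, while structural changes flip many bits. That robustness is why perceptual hashes, unlike cryptographic hashes, can match re-encoded or lightly edited copies of an image.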

Outlook and Future Challenges

At a global level, we expect much wider use of AI generation tools. Even today, a study by researchers at the Amazon Web Services (AWS) AI Lab found that a "shocking amount of the web" consists of low-quality content machine-translated by AI.

Collective action is essential to address these challenges:

- International Collaboration: Governments, tech companies, and NGOs must collaborate to standardize deepfake detection and establish universal ethical guidelines.

- Developing Robust Legal Frameworks: Comprehensive legal frameworks are needed that address the creation and distribution of deepfakes while respecting freedom of expression and innovation.

- Fostering Ethical AI Development: Encourage the ethical development of AI technologies, emphasizing transparency and accountability in the AI algorithms used for media.

The outlook for countering deepfakes hinges on balancing technological progress with ethical considerations. This is difficult, as innovation tends to outpace our ability to adapt to and regulate fields like AI and machine learning. Nevertheless, it is vital to ensure that innovations serve the greater good without compromising individual rights and social stability.