Artificial intelligence keeps evolving at an incredible pace, and DeepSeek-R1 is the latest model making headlines. But how does it stack up against OpenAI’s offerings?

In this article, we’ll explore what DeepSeek-R1 brings to the table, including its features, performance on key benchmarks, and real-world use cases, so you can decide if it’s right for your needs.

What Is DeepSeek-R1?

DeepSeek-R1 is a next-generation “reasoning-first” AI model that aims to advance beyond traditional language models by focusing on how it arrives at its conclusions.

Built using large-scale reinforcement learning (RL) techniques, DeepSeek-R1 and its predecessor, DeepSeek-R1-Zero, emphasize transparency, mathematical proficiency, and logical coherence.

The DeepSeek app recently surpassed OpenAI’s ChatGPT in the Apple App Store, claiming the top spot among free apps in the U.S. as of January 2025.

Key points to know:

- Open-Source Release: DeepSeek offers the main model (DeepSeek-R1) plus six distilled variants (ranging from 1.5B to 70B parameters) under the MIT license. This open approach has garnered significant interest among developers and researchers.

- Reinforcement Learning Focus: DeepSeek-R1’s reliance on RL (rather than purely supervised training) allows it to “discover” reasoning patterns more organically.

- Hybrid Training: After initial RL exploration, supervised fine-tuning data was added to address readability and language-mixing issues, improving overall clarity.

From R1-Zero to R1: How DeepSeek Evolved

DeepSeek-R1-Zero was the initial version, trained via large-scale reinforcement learning (RL) without supervised fine-tuning. This pure-RL approach helped the model discover powerful reasoning patterns like self-verification and reflection. However, it also introduced problems such as:

- Poor Readability: Outputs were often hard to parse.

- Language Mixing: Responses could blend multiple languages, reducing clarity.

- Infinite Loops: Without SFT safeguards, the model occasionally fell into repetitive answers.

DeepSeek-R1 fixes these issues by adding a supervised fine-tuning step before RL. The result: more coherent outputs and robust reasoning, comparable to OpenAI’s models on math, coding, and logic benchmarks.

Core Features & Architecture

- Mixture of Experts (MoE) Architecture: DeepSeek-R1 uses a large MoE setup with 671B total parameters, of which 37B are activated during inference. This design ensures only the relevant parts of the model are used for a given query, lowering costs and speeding up processing.

- Built-In Explainability: Unlike many “black box” AIs, DeepSeek-R1 includes step-by-step reasoning in its output. Users can trace how an answer was formed, which is crucial for use cases like scientific research, healthcare, or financial audits.

- Multi-Agent Learning: DeepSeek-R1 supports multi-agent interactions, allowing it to handle simulations, collaborative problem-solving, and tasks requiring multiple decision-making components.

- Cost-Effectiveness: DeepSeek cites a relatively low development cost (around $6 million) and emphasizes lower operational expenses, thanks to the MoE approach and efficient RL training.

- Ease of Integration: For developers, DeepSeek-R1 is compatible with popular frameworks like TensorFlow and PyTorch, alongside ready-to-use modules for rapid deployment.
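
To make the Mixture of Experts idea above more concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not DeepSeek's implementation: the four scalar "experts", the router scores, and the choice of k=2 are all made up for the example. Only the 671B and 37B figures come from the article.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts: each one is just a scalar function (hypothetical stand-ins).
experts = [lambda x, m=m: m * x for m in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, 0.3, 1.5]  # made-up router scores for one input

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)

# Share of parameters active during inference, using the figures quoted above.
active_fraction = 37 / 671
print(sorted(selected), f"{active_fraction:.1%} of parameters active")
```

The unselected experts are never called, which is where the compute savings come from: with the quoted figures, roughly one parameter in eighteen takes part in any single forward pass.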

How Does DeepSeek-R1 Compare to OpenAI’s Models?

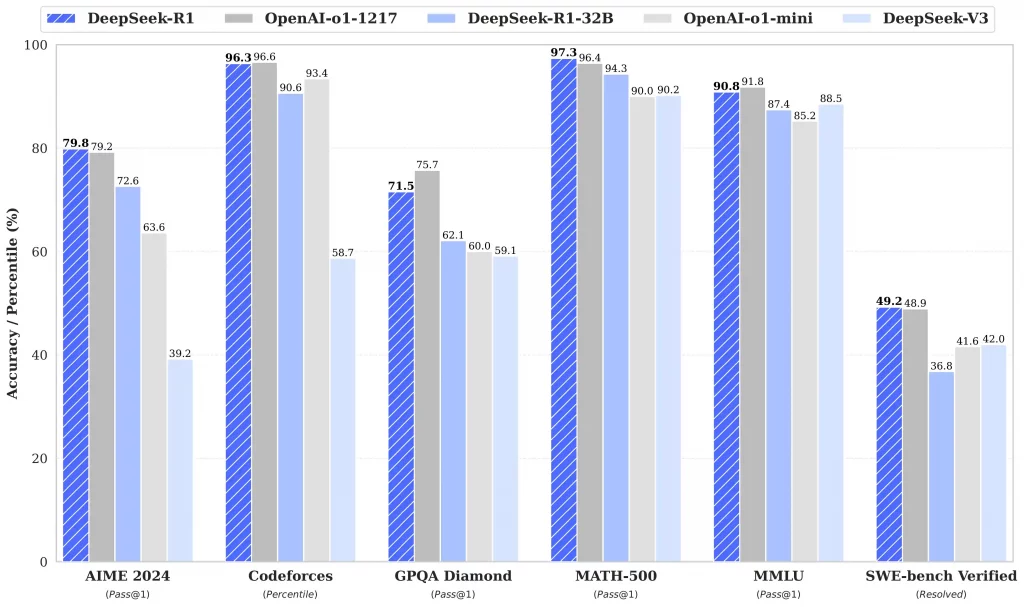

DeepSeek-R1 directly competes with OpenAI’s “o1” series (e.g., GPT-based models) across math, coding, and reasoning tasks. Here’s a snapshot of how they fare on important benchmarks, based on reported data:

| Benchmark | DeepSeek-R1 | OpenAI o1-1217 | Notes |
|---|---|---|---|
| AIME 2024 | 79.8% | 79.2% | Advanced math competition |
| MATH-500 | 97.3% | 96.4% | High-school math problems |
| Codeforces | 96.3% | 96.6% | Coding competition percentile |
| GPQA Diamond | 71.5% | 75.7% | Factual Q&A tasks |

- Where DeepSeek Shines: Mathematical reasoning and code generation, thanks to RL-driven chain-of-thought (CoT).

- Where OpenAI Has an Edge: General knowledge Q&A, plus slightly better coding scores on some sub-benchmarks.
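
The gaps behind these two takeaways are small on most rows, which is easier to see when computed directly. The snippet below is a minimal sketch that takes the reported scores from the table and prints the margin per benchmark in percentage points; the variable and function names are illustrative only.

```python
# Reported scores from the table above: (DeepSeek-R1, OpenAI o1-1217).
scores = {
    "AIME 2024": (79.8, 79.2),
    "MATH-500": (97.3, 96.4),
    "Codeforces": (96.3, 96.6),
    "GPQA Diamond": (71.5, 75.7),
}


def gaps(table):
    """Return DeepSeek-R1 minus o1-1217 per benchmark, in percentage points."""
    return {name: round(r1 - o1, 1) for name, (r1, o1) in table.items()}


margins = gaps(scores)
for name, margin in margins.items():
    leader = "DeepSeek-R1" if margin > 0 else "OpenAI o1-1217"
    print(f"{name}: {leader} leads by {abs(margin):.1f} points")
```

Three of the four margins are under one point, so GPQA Diamond is the only row in this table where the difference is clearly larger.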

Distilled Models: Qwen and Llama

DeepSeek goes beyond just the main R1 model. They distill the reasoning abilities into smaller, dense models based on both Qwen (1.5B to 32B) and Llama (8B & 70B). For instance:

- DeepSeek-R1-Distill-Qwen-7B: Achieves over 92% on MATH-500, outperforming many similarly sized models.

- DeepSeek-R1-Distill-Llama-70B: Hits 94.5% on MATH-500 and 57.5% on LiveCodeBench, close to some OpenAI models.

This modular approach means smaller organizations can tap high-level reasoning without needing massive GPU clusters.
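
To get a feel for why the distilled sizes matter for hardware, a back-of-the-envelope estimate of weight memory can help. The sketch below multiplies the nominal parameter count by bytes per parameter. It is a rough lower bound under stated assumptions: it ignores activations, the KV cache, and runtime overhead, treats the advertised sizes as exact, and the precision table is a generic assumption rather than anything DeepSeek publishes.

```python
# Bytes needed to store one parameter at common precisions (generic assumption).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal sizes, in billions of parameters, for the variants named in the article.
DISTILLED_SIZES = {
    "Distill-Qwen-1.5B": 1.5,
    "Distill-Qwen-7B": 7.0,
    "Distill-Qwen-32B": 32.0,
    "Distill-Llama-8B": 8.0,
    "Distill-Llama-70B": 70.0,
}


def weight_memory_gb(params_billions, precision="fp16"):
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


for name, size in DISTILLED_SIZES.items():
    fp16 = weight_memory_gb(size, "fp16")
    int4 = weight_memory_gb(size, "int4")
    print(f"{name}: ~{fp16:.0f} GB at fp16, ~{int4:.1f} GB at int4")
```

By this estimate the 7B variant needs on the order of 14 GB for its weights at fp16, which is within reach of a single high-memory GPU, while the 70B variant needs about ten times that.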

Practical Use Cases

- Advanced Math & Research

  - Universities and R&D labs can harness the step-by-step problem-solving abilities for complex proofs or engineering tasks.

- Coding & Debugging

  - Automate code translation (e.g., from C# in Unity to C++ in Unreal).

  - Assist with debugging by pinpointing logical errors and offering optimized solutions.

- Explainable AI in Regulated Industries

  - Finance, healthcare, and government require transparency. DeepSeek-R1’s chain-of-thought clarifies how it arrives at each conclusion.

- Multi-Agent Systems

  - Coordinate robotics, autonomous vehicles, or simulation tasks where multiple AI agents must interact seamlessly.

- Scalable Deployments & Edge Scenarios

  - Distilled models fit resource-limited environments.

  - Large MoE versions can handle enterprise-level volumes and long-context queries.
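
For the explainability use case above, an application usually needs to separate the reasoning trace from the final answer so each can be logged or reviewed on its own. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags, which is how the open-weight R1 models commonly format output; that convention is not stated in this article, so treat it as an assumption and adjust the pattern if your deployment differs. The sample text is invented.

```python
import re

# Assumed convention: reasoning appears inside one <think>...</think> block.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text):
    """Return (reasoning, answer); reasoning is empty if no block is found."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


# Invented sample output, for illustration only.
sample = "<think>17 is odd and has no divisor between 2 and 4.</think>\n17 is prime."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Storing the two parts separately lets an auditor read the trace without it being shown to end users by default.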

Access & Pricing

- Website & Chat: chat.deepseek.com (includes “DeepThink” mode for reasoning).

- API: platform.deepseek.com, OpenAI-compatible format.

- Pricing Model:

  - Free Chat: Daily cap of ~50 messages.

  - Paid API: Billed per 1M tokens, with varying rates for “deepseek-chat” and “deepseek-reasoner.”

  - Context Caching: Reduces token usage and total cost for repeated queries.
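
Because the API follows the OpenAI-compatible chat format and is billed per 1M tokens, both the request shape and the cost arithmetic are easy to sketch. The example below only builds the request and estimates a cost; it does not send anything. The endpoint URL is an assumption (check the platform documentation for the current value), `YOUR_API_KEY` is a placeholder, and the rates passed to `estimate_cost` are made-up numbers, not DeepSeek's actual prices.

```python
import json

# Assumed endpoint; confirm against the official platform documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(prompt, model="deepseek-reasoner", api_key="YOUR_API_KEY"):
    """Build the URL, headers, and JSON body for an OpenAI-style chat request."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return API_URL, headers, json.dumps(body)


def estimate_cost(input_tokens, output_tokens, input_rate, output_rate):
    """Cost in the currency of the rates, which are quoted per 1M tokens."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


url, headers, payload = build_request("Prove that the square root of 2 is irrational.")

# Placeholder rates for illustration only; substitute the published prices.
cost = estimate_cost(500_000, 250_000, input_rate=1.0, output_rate=2.0)
print(url)
print(payload)
print(f"Estimated cost: {cost:.2f}")
```

Switching between the two models named above is a matter of changing the `model` argument, and the same payload can be sent with any HTTP client or an OpenAI-compatible SDK pointed at the DeepSeek base URL.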

Future Prospects

- Ongoing Updates: DeepSeek plans to improve multi-agent coordination, reduce latency, and offer more industry-specific modules.

- Strategic Partnerships: Collaboration with cloud giants (AWS, Microsoft, Google Cloud) is under discussion to broaden deployment avenues.

- Growing Global Influence: Having briefly topped the U.S. App Store for free AI apps, DeepSeek is becoming a serious contender and may reshape how open-source AI is adopted worldwide.

Final Thoughts

DeepSeek-R1 represents a significant stride in open-source AI reasoning, with performance rivalling (and sometimes surpassing) OpenAI’s models on math and code tasks. Its reinforcement-learning-first approach, combined with supervised refinements, yields rich, traceable chain-of-thought outputs that appeal to regulated and research-focused fields. Meanwhile, distilled versions put advanced reasoning within reach of smaller teams.

For those interested in mastering these technologies and understanding their full potential, Great Learning’s AI and ML course offers a robust curriculum that blends academic knowledge with practical experience.
