Federated Studying, first launched by Google in 2016, is a distributed machine studying paradigm that enables Deep Studying fashions to be skilled in a decentralized setting on delicate information whereas sustaining privateness.

Deep Studying fashions are data-hungry, which implies the extra information, the higher the mannequin’s efficiency. Edge gadgets resembling cell phones, IoT sensors, and cameras maintain big quantities of information. This information may be invaluable for coaching Deep Studying (DL) fashions. Utilizing non-public information to coach a mannequin can result in privateness breaches. Cellphones retailer delicate info resembling pictures, and sensors detect and maintain invaluable info relating to the settings wherein folks place them.

About us: viso.ai gives Viso Suite, the world’s solely end-to-end Pc Imaginative and prescient Platform. The know-how allows world organizations to develop, deploy, and scale all laptop imaginative and prescient purposes in a single place. Get a demo.

Introduction to Federated Studying

In Pc Imaginative and prescient purposes, privateness is extraordinarily vital. For instance, footage taken on cell phones include the faces of individuals. If the fallacious folks get entry to this information, they might compromise the consumer’s identification.

One other instance is the information from surveillance purposes which might be put in to cater to visitors violations or detect if somebody isn’t sporting a masks. This will get much more delicate inside the well being division. The X-ray scans and different diagnoses include affected person diagnoses and a knowledge breach would violate affected person confidentiality. This can be a risk to the safety and privateness of residents, sufferers, and workers.

That is the place Federated Studying is available in; it permits DL fashions (particularly for Pc Imaginative and prescient) to be skilled on the information saved in edge gadgets with out compromising privateness.

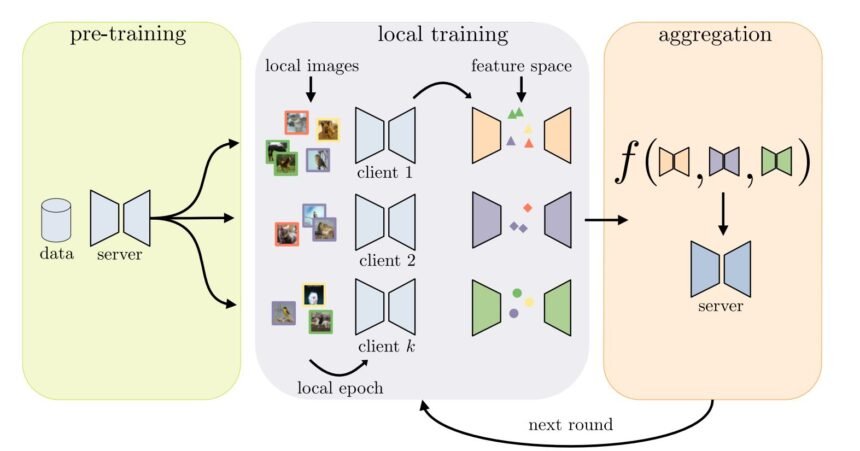

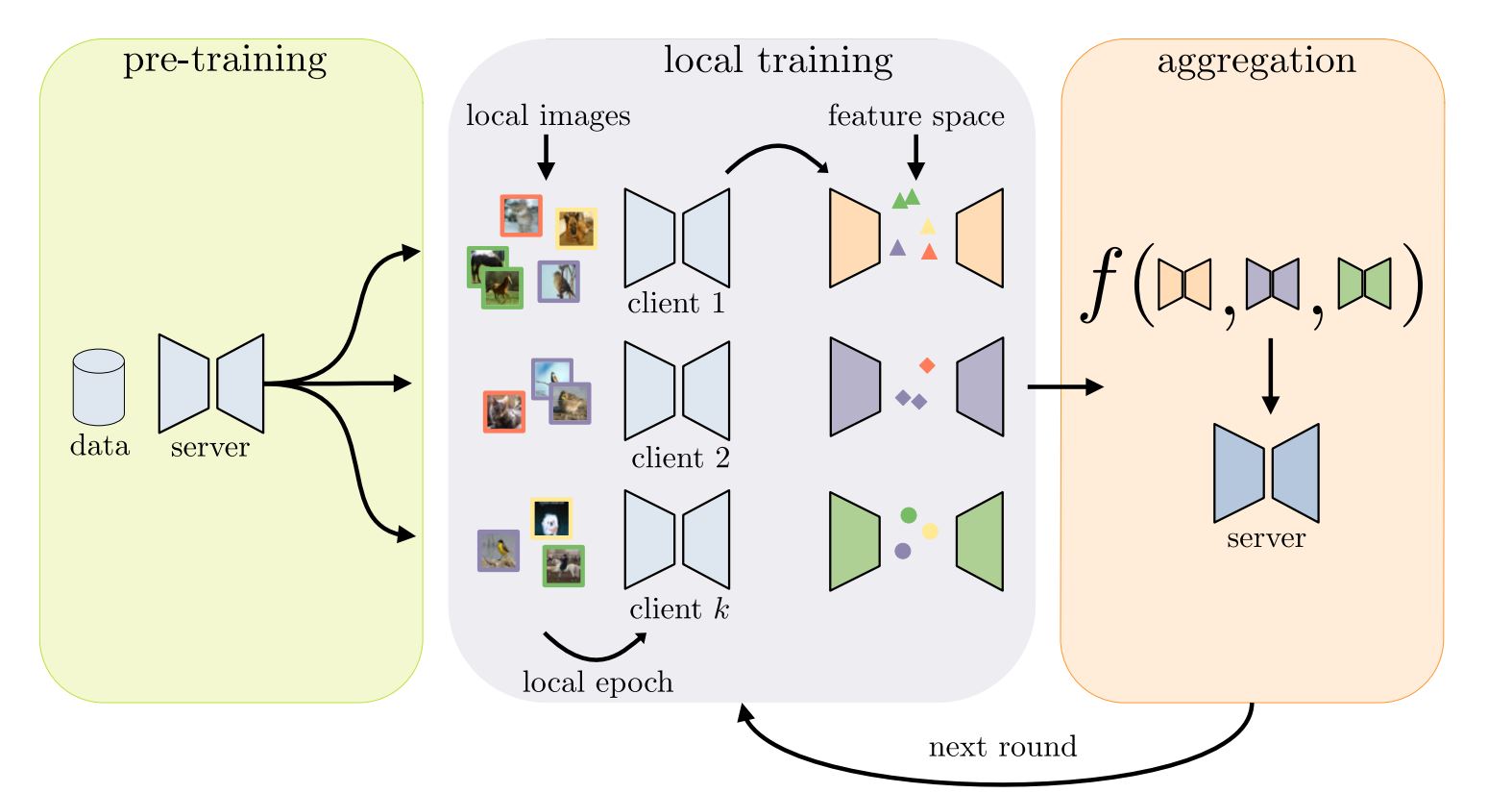

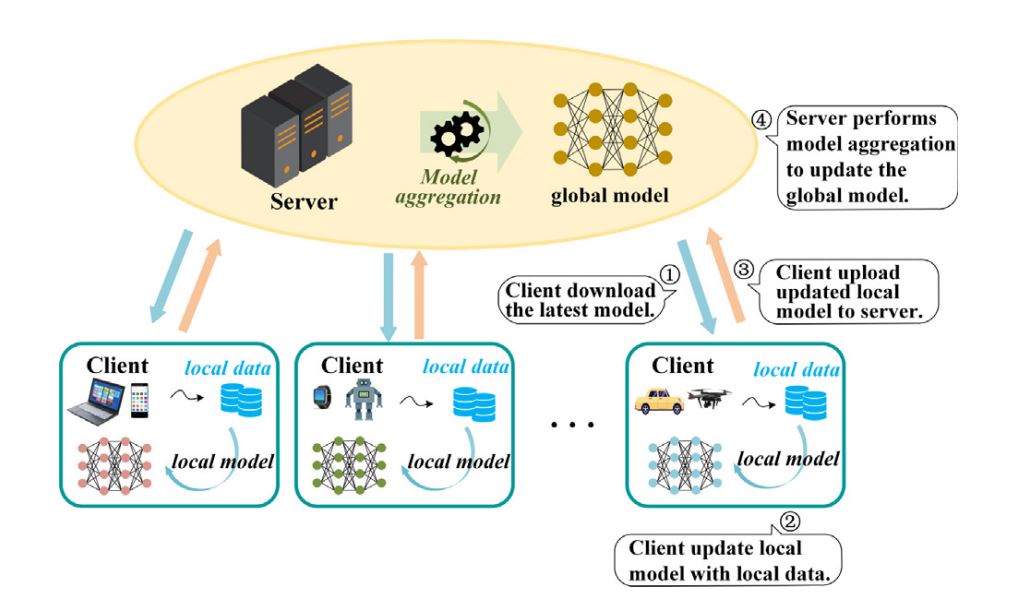

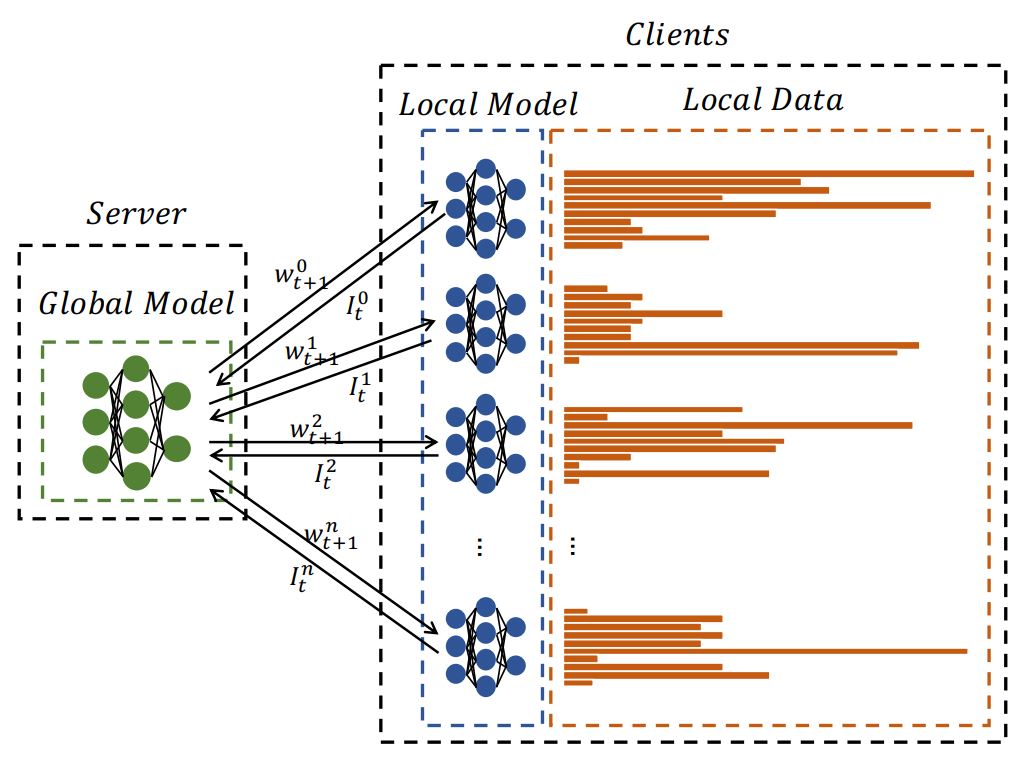

The concept behind Federated Studying (FL) is to ship native fashions to consumer’s gadgets, after which practice the native mannequin on the delicate information. As soon as the coaching is finished, it shares the native mannequin’s realized parameters (resembling weights, and gradients) with the server in an encrypted setting, and at last, the worldwide mannequin within the server merges the weights obtained from shopper gadgets into the worldwide mannequin.

On this weblog, we’ll dive deep into Federated Studying, the totally different methods used, and at last take a look at the challenges that should be solved.

What’s Federated Studying?

Federated Studying permits a number of decentralized gadgets or servers to collaboratively study a shared mannequin whereas retaining the consumer’s information domestically saved on every system. As an alternative of sending the consumer’s non-public information to a central server for coaching, every system trains the mannequin domestically utilizing its dataset after which sends the mannequin updates (gradients, weights, and so forth.) to a central server. Lastly, these updates (gradients and weights) are aggregated to a worldwide mannequin. This method is totally different from normal DL coaching.

That is fully totally different in comparison with a regular DL workflow. In normal DL approaches, we ship the information itself to central servers, and the mannequin will get skilled on the information. Nonetheless, this method has a number of limitations resembling communication overhead, and privateness dangers.

Furthermore, information privateness legal guidelines and regulatory our bodies resembling GDPR (The Common Information Safety Regulation) of the EU area and HIPPA (Well being Insurance coverage and Portability and Accountability Act) within the US limit centralized storage of delicate information, as there are very excessive possibilities of information theft.

Alternatively, in Federated Studying, particular person gadgets practice the fashions, so the information by no means leaves the consumer’s system. Through the sharing course of, the system encrypts gradients and weights earlier than transferring them to the worldwide mannequin. Consequently, FL complies with varied regulatory our bodies.

Furthermore, FL has the potential to revolutionize industries resembling Healthcare and Finance, the place the information may be very delicate and normal DL processes can’t be utilized. Consequently, these industries are lagging within the adoption of DL, as they’ll’t share the non-public info of customers. FL permits these industries to reap the benefits of developments made in AI applied sciences.

Evolution of Federated Studying

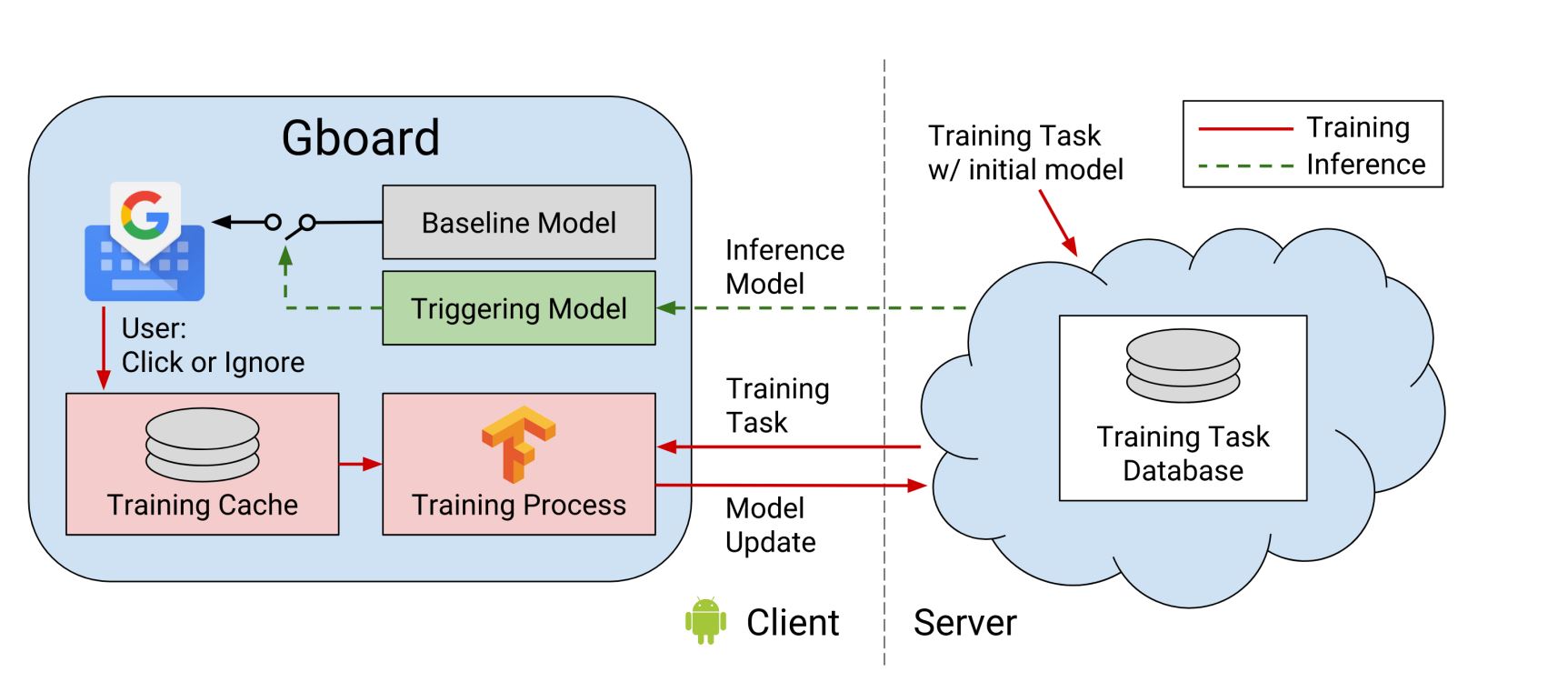

The time period “Federated Studying” was first launched by Google of their printed analysis paper in 2016, wherein they tried to handle the restrictions of the usual method of coaching DL fashions. On this paper, they mentioned the heavy utilization of shopper’s community bandwidth and privateness dangers. They launched the thought of sending native ML fashions to the shopper’s gadgets and solely sending the parameters realized to the servers.

In a while, they applied FL of their Gboard (Google’s keyboard). Right here they might solely ship the parameters realized when the telephone was linked to Wi-Fi and was charging.

Quick ahead to at present, we see varied corporations, organizations, and researchers displaying elevated curiosity in FL. They’ve developed a number of Federated Studying frameworks to make integrating and utilizing FL simpler.

How does Federated Studying work?

As we mentioned above, FL’s core precept is that the information by no means leaves the consumer’s system, and the system solely sends parameters resembling gradients and weights.

Here’s what the workflow appears to be like like in FL.

Federated Studying Workflow

- Information Gathering and Preprocessing

- Native information: Edge gadgets retailer non-public information that’s explicit to their setting.

- Information normalization and augmentation: The native Machine Studying mannequin in edge gadgets normalizes and performs varied information augmentation.

- Mannequin Coaching and Updates

- Native mannequin coaching: Every system trains its native mannequin utilizing its information.

- Communication and synchronization: After gadgets full the coaching for desired epochs, they share the mannequin parameters with a central server for aggregation. Researchers use a number of strategies, resembling encryption and different safety measures, to make sure protected sharing, which we’ll talk about shortly.

- Aggregation: Aggregating is likely one of the most vital steps in FL. To merge the weights obtained from particular person gadgets into the worldwide mannequin, researchers use totally different algorithms, FedAvg (weighted averaging primarily based on the scale and high quality of the native datasets) being the most well-liked algorithm.

- Distributing the International Mannequin: After updating and testing the worldwide mannequin for accuracy, researchers ship it again to all members for the subsequent spherical of native coaching.

- Mannequin Analysis and Deployment

- International mannequin validation: Earlier than finalizing the worldwide mannequin with the brand new weights, researchers take a number of precautions, because the weights obtained from gadgets can alter the mannequin’s efficiency, probably making it unusable. To handle this, they carry out validation checks and cross-validation checks to make sure the mannequin’s accuracy stays intact.

Federated Studying Averaging Algorithms

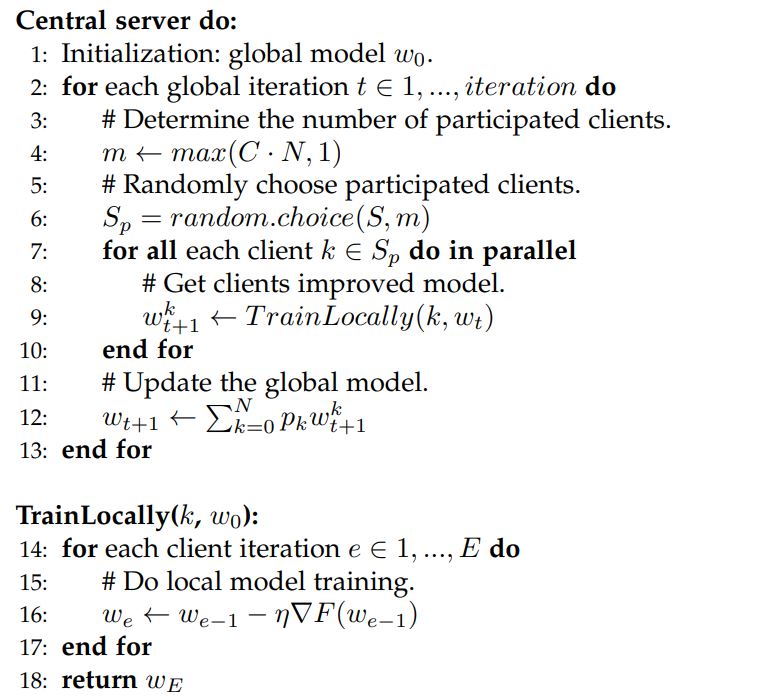

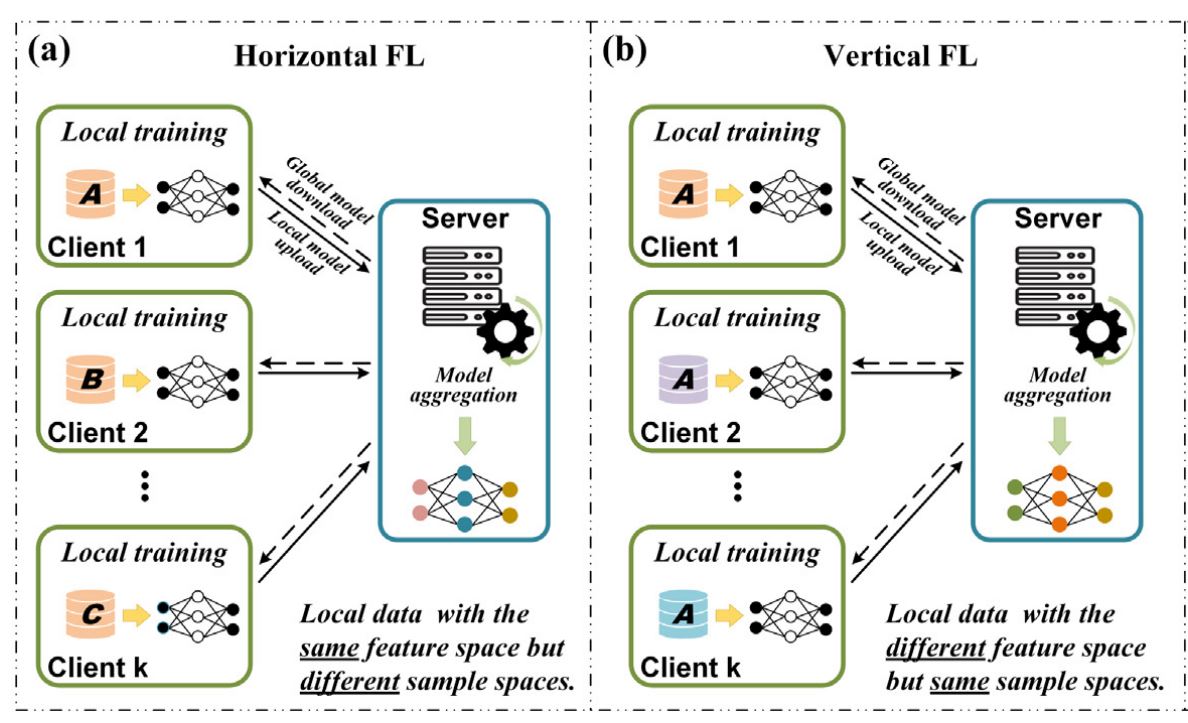

FedAvg

This is likely one of the earliest and mostly used strategies in FL for mannequin aggregation.

On this methodology, the system randomly chooses a bunch of purchasers at every spherical of coaching. Through the aggregation course of, it weighs the purchasers’ parameters primarily based on the proportion of every shopper’s information dimension and averages them to supply a worldwide mannequin.

One vital factor to notice right here is that not all purchasers are chosen, they’re randomly chosen. This helps with community overhead and shopper heterogeneity (as some purchasers could have bad-quality datasets or information from very particular environments).

FedProx

Researchers have launched a number of variations of FedAvg, and FedProx is one among them. They launched FedProx to sort out the issue of shopper heterogeneity and information distribution when coping with non-IID (non-Identically Independently Distributed information).

FedProx modifies the unique FedAvg by introducing a proximal time period into the shopper’s native mannequin. This time period makes certain that the native mannequin doesn’t drastically deviate from the worldwide mannequin by penalizing the mannequin if it diverges. The equation is as follows:

Lprox(θ)=Lnative(θ)+μ/2∥θ−θt∥2

Right here,

- Lnative(θ) is the native loss operate on the shopper’s information.

- θ is the native mannequin parameter.

- θt is the worldwide mannequin parameters on the present iteration.

- μ is a hyperparameter controlling the energy of the proximal time period.

- ∥θ−θt∥2 makes certain that the mannequin doesn’t deviate. If the mannequin does, the time period will increase quadratically, and because of this, the loss will increase considerably.

Community Buildings in Federated Studying

Major community buildings resembling centralized and decentralized buildings are utilized in Federated Studying. In a centralized FL system, a server is positioned on the middle, forming a star community construction. A number of purchasers connect with this central server for mannequin aggregation and synchronization.

In a decentralized FL system, there isn’t a central server. As an alternative, purchasers immediately talk with one another in a peer-to-peer (P2P) community, making a mesh construction. This design helps overcome the presence of untrusted servers and presents benefits resembling elevated resilience to community failures and communication delays.

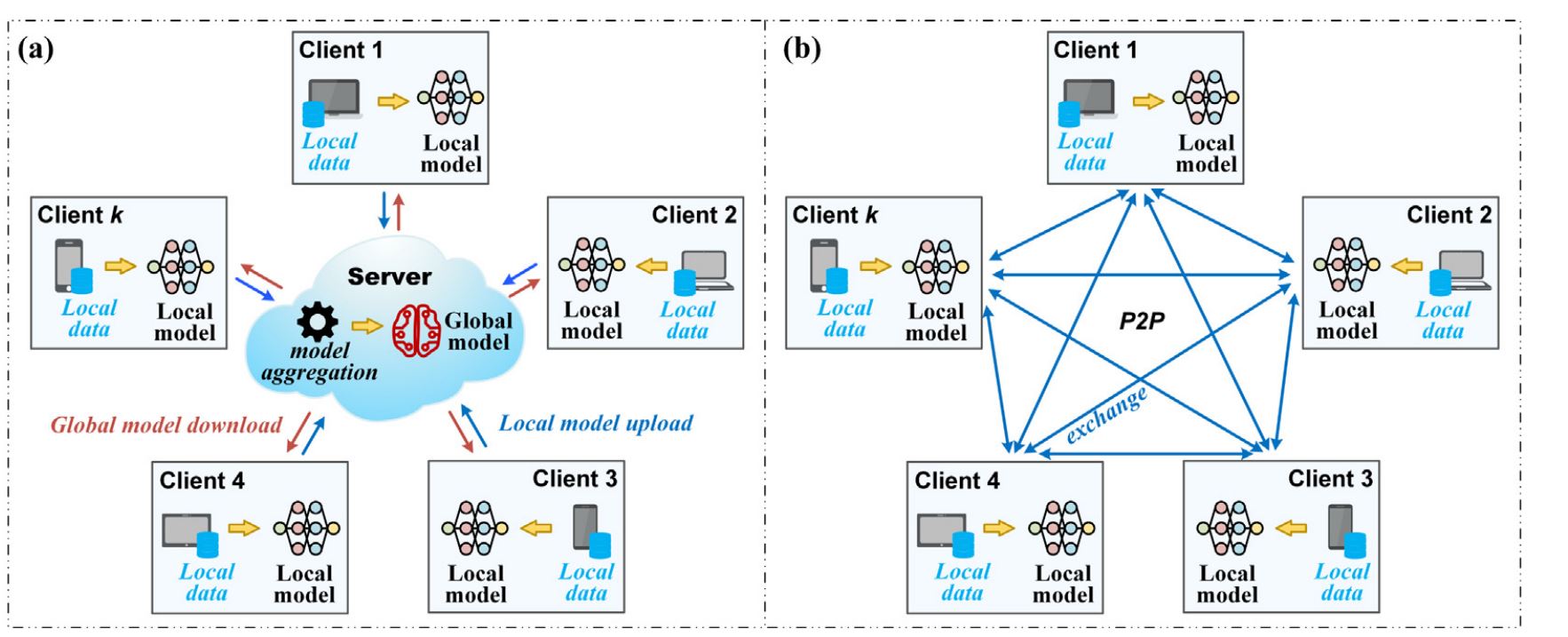

Information-based Federated Studying

Federated Studying may be divided into three classes primarily based on characteristic and pattern house distribution.

Function house right here means the attributes or traits (options) which might be used to explain information cases. For instance, every shopper in FL is likely to be utilizing information options like age, earnings, and placement. Regardless of variations in particular cases (consumer information), the characteristic house stays constant throughout purchasers.

Whereas, pattern house means, a set of doable information samples. For instance, a shopper has consumer information for customers inside a selected metropolis, whereas one other shopper has consumer information for customers in one other metropolis. Every set of consumer information represents a special pattern house, though the characteristic house (like age, earnings, and placement) is identical.

Classes of Federated Studying

We’ve got three classes in FL: horizontal FL, vertical FL, and federated switch studying.

- Horizontal Federated Studying: In Horizontal FL, purchasers share the identical characteristic house however have totally different pattern areas. Which means that totally different purchasers pattern information from totally different objects, resembling A, B, C, and so forth., however all the information share the identical traits, resembling age and earnings. For instance, researchers who carried out a examine to detect COVID-19 an infection used chest CT pictures as coaching information for every shopper. They sampled these pictures from folks of various ages and genders, making certain that every one the information had the identical characteristic house.

- Vertical Federated Studying: In Vertical FL, purchasers pattern information from the identical object (object A) however distribute totally different options (totally different age demographics) amongst themselves. Corporations or organizations that don’t compete, resembling e-commerce platforms and promoting corporations with totally different information traits, generally use this method to collaborate and practice a shared studying mannequin.

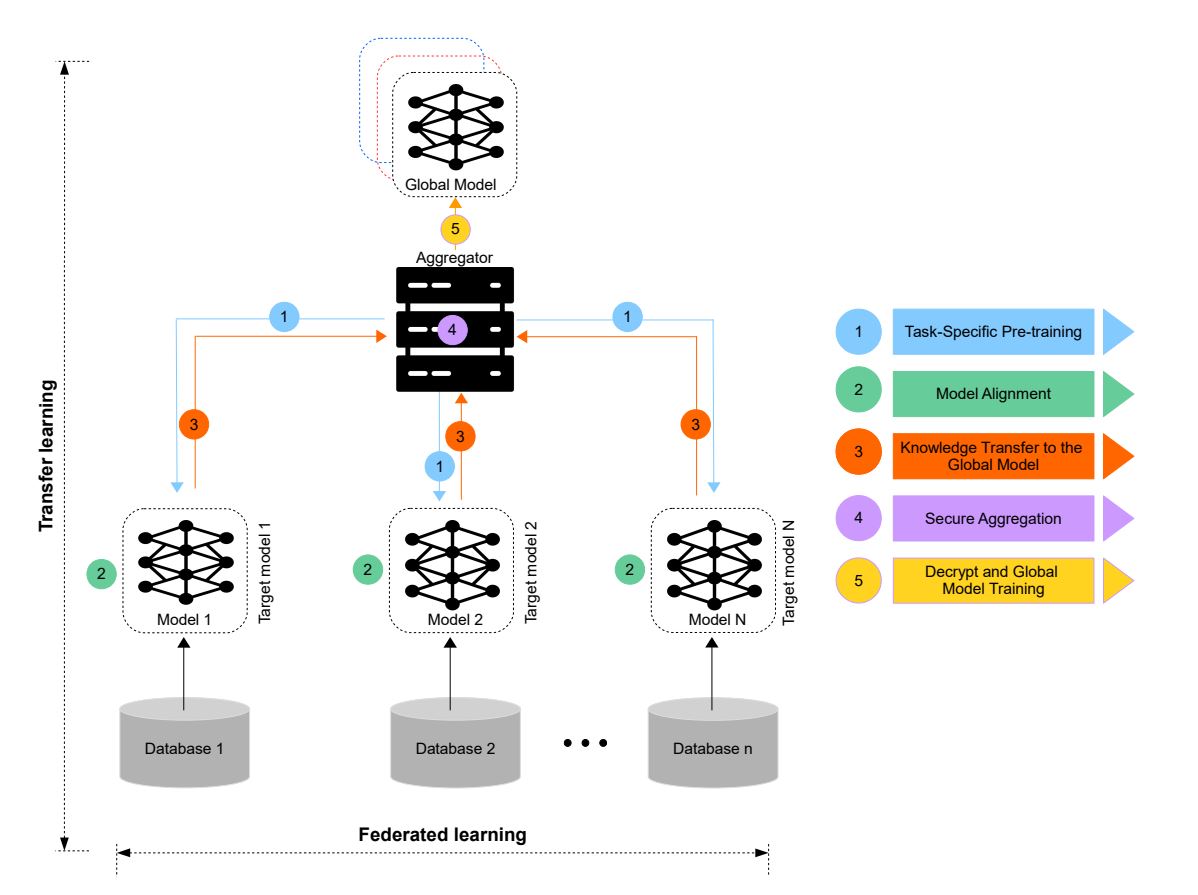

Horizontal and Vertical FL –source - Federated switch studying: Federated switch studying offers with information that’s from totally different members not solely in samples but in addition in characteristic areas. For instance, native information could come from totally different objects (objects A, B, C…) with totally different options (for instance totally different colours). These realized representations are then utilized to the pattern prediction job with solely one-sided options.

One frequent use case is in FedHealth, a framework for researching wearable healthcare utilizing federated switch studying. The method begins with a cloud server that develops a cloud mannequin utilizing a fundamental dataset. As soon as the server learns the fundamental dataset, it makes use of switch studying to coach the mannequin on the native shopper’s dataset.

Federated Switch Studying –source

Challenges of Federated Studying

Compared to normal DL coaching, FL comes up with a set of latest challenges. The purchasers often have totally different {hardware} capabilities, resembling computation energy, community connectivity, and sensors utilized.

Furthermore, challenges additionally come up in information used, as some purchasers have extra information than others, higher high quality information, or might need skewed datasets. These challenges may even break a mannequin, as a substitute of bettering it.

Communication Overhead

Communication overhead is a vital facet of FL environments, because it includes sharing mannequin parameters from a number of purchasers with servers for a number of rounds.

Though FL is best than sending datasets utilized in normal DL, community bandwidth and computation energy may be limiting. Some IoT gadgets could also be positioned in distant areas with restricted web connectivity. Moreover, the elevated participation of shopper gadgets additional introduces delays when synchronizing.

Researchers have proposed varied methods to beat communication overhead. One method compresses the information being transferred. One other technique is figuring out and excluding irrelevant fashions from aggregation, as this could decrease communication prices.

Heterogeneity of shopper information

This is likely one of the main challenges FL faces, as it might hinder convergence charges. To beat this problem, researchers make the most of a number of methods. For instance, they’ll use FedCor, an FL framework that employs a correlation-based shopper choice technique to enhance the convergence charge of FL.

This framework works by correlating loss and totally different purchasers utilizing a Gaussian course of (GP). This course of is used to pick purchasers in a means that considerably reduces the anticipated world loss in every spherical. The experiments carried out on this technique confirmed that FedCor can enhance convergence charges by 34% to 99% on FMNIST and 26% to 51% on CIFAR-10.

One other technique to beat the difficulty of sluggish nodes and irregular community connectivity is to make use of a reinforcement learning-based central server, which steadily weights the purchasers primarily based on the standard of their fashions and their responses. That is then used to group purchasers that obtain optimum efficiency.

Privateness and assaults

Though FL is designed to maintain information privateness in thoughts, there are nonetheless eventualities the place an FL system can lead to privateness breaches. Malicious actors can reverse-engineer the gradients and weights shared with the server to entry actual non-public info. Furthermore, the FL system can also be liable to adversary assaults, the place a malicious consumer may have an effect on the worldwide mannequin by sending poisoned native information that might cease the mannequin from converging and making it unusable.

Researchers can defend towards poisoning assaults by detecting malicious customers by anomaly detection of their native fashions. They analyze whether or not the characteristic distribution of malicious customers differs from the remainder of the customers within the community.

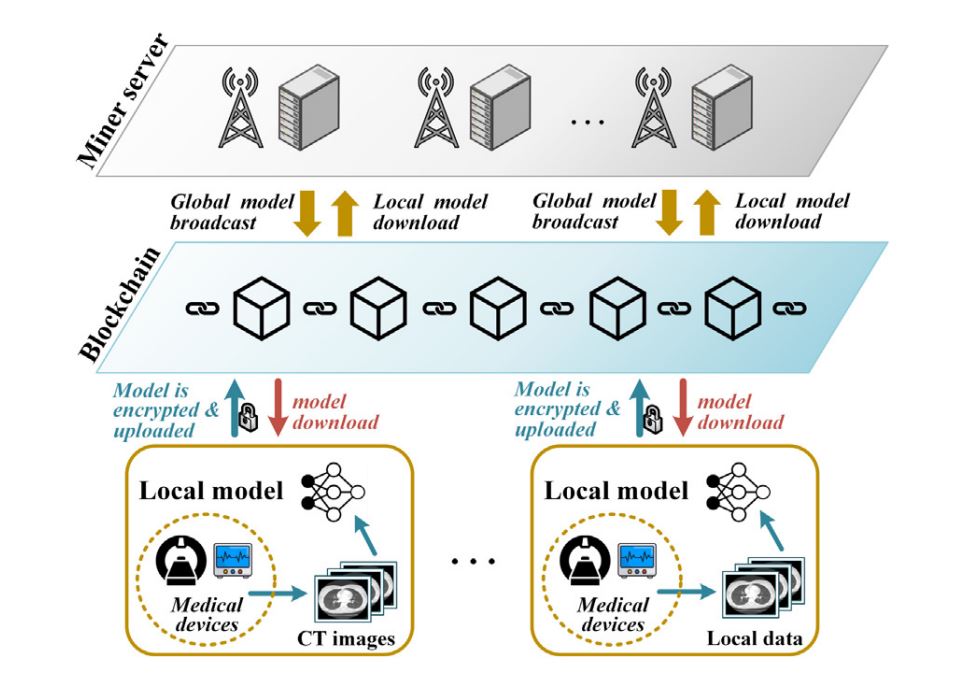

To handle the privateness dangers, researchers have proposed a blockchain-based FL system, formally often called FLchain.

- FLchain: On this, the cell gadgets ship their mannequin parameters to servers as transactions, and the server shops the native mannequin parameters on the blockchain, after validating them with different nodes. Lastly, the aggregation node verifies the domestically saved mannequin from the blockchain and aggregates them.

Blockchain-based Federated Studying – source

One other methodology to cut back the chance of privateness is differential privateness. On this, every shopper provides noise to its domestically skilled parameters earlier than importing it to the server. The noise added doesn’t alter the efficiency of the mannequin considerably, and on the similar time makes it tough to research the distinction between skilled parameters.

Researchers and builders extensively use cryptographic strategies resembling homomorphic encryption and secret sharing to protect privateness. For instance, they encrypt mannequin updates earlier than sharing them with the server for aggregation utilizing a public key that solely the nodes or a number of members of the community can entry. Devoted members should collaborate to decrypt the knowledge.

Implementing Federated Studying

On this part, we’ll undergo the code for implementing FL in TensorFlow. To get began, you want first to get the TensorFlow library for Federated Studying.

pip set up tensorflow-federated

After you have gotten the dependencies, we have to initialize the mode. The next code will create a sequential mannequin with a dense neural community.

import tensorflow as tf

def create_keras_model():

mannequin = tf.keras.fashions.Sequential([

tf.keras.layers.Input(shape=(784,)),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(10, activation='softmax')

])

return mannequin

Now, we have to present information for the mannequin. For this tutorial, we’ll use synthetically created information, however you possibly can attempt together with your dataset, or import the MINST handwriting dataset from TensorFlow.

import numpy as np

def create_federated_data(num_clients=10, num_samples=100):

federated_data = []

for _ in vary(num_clients):

x = np.random.rand(num_samples, 784).astype(np.float32)

y = np.random.randint(0, 10, dimension=(num_samples,)).astype(np.int32)

dataset = tf.information.Dataset.from_tensor_slices((x, y))

dataset = dataset.batch(10)

federated_data.append(dataset)

return federated_data

The next line of code will convert the Keras mannequin you created earlier to a TensorFlow Federated mannequin.

import tensorflow_federated as tff

def create_tff_model():

def model_fn():

keras_model = create_keras_model() #initialize keras mannequin first

return tff.studying.fashions.from_keras_model(

keras_model,

input_spec=(

tf.TensorSpec(form=[None, 784], dtype=tf.float32), # The enter options are anticipated to be tensors

tf.TensorSpec(form=[None], dtype=tf.int32)

),

loss=tf.keras.losses.SparseCategoricalCrossentropy(),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()]

)

return model_fn

The next code creates the Fedavg operate that we mentioned above within the weblog. Server and shopper studying charges are additionally outlined right here.

def federated_averaging_process():

return tff.studying.algorithms.build_weighted_fed_avg(

model_fn=create_tff_model(),

client_optimizer_fn=lambda: tf.keras.optimizers.Adam(learning_rate=0.01),

server_optimizer_fn=lambda: tf.keras.optimizers.Adam(learning_rate=1.0)

)

Right here, we outline the primary operate that runs the mannequin, by creating and invoking the capabilities we outlined above. Furthermore, as you possibly can see we’ve initialized a loop, that runs the FL course of 10 occasions.

def predominant():

federated_data = create_federated_data()

federated_averaging = federated_averaging_process()

state = federated_averaging.initialize()

for round_num in vary(1, 11): # Variety of rounds

state, metrics = federated_averaging.subsequent(state, federated_data)

print(f'Spherical {round_num}, Metrics: {metrics}')

if __name__ == '__main__':

predominant()

What’s subsequent for Federated Studying?

On this weblog, we seemed in-depth at coaching machine studying fashions utilizing Federated Studying (FL). We understood that the FL coaching course of works by sending mannequin parameters (mannequin weight and gradient) obtained by coaching the native mannequin on the consumer’s uncooked information after which sending these parameters to the central mannequin saved within the server. We then checked out how FL makes mannequin coaching safe and makes privateness a precedence. And the several types of FL programs that exist. We additionally checked out totally different aggregating algorithms which might be utilized in FL.

Lastly, we checked out a number of the limitations that exist inside the Federated Studying mannequin, and the way these may be solved. After which went by a code implementation on the MINST handwriting dataset.

In conclusion, FL gives a possibility for varied fields which have been skeptical about adopting DL because of the nature of their information, and issues relating to privateness of the information. FL is an ongoing course of and a number of other ongoing analysis is happening to make the system higher. Nonetheless, we are able to see that FL holds a shiny future up forward at making the lives of individuals higher with Synthetic Intelligence.

Often Requested Questions (FAQS)

Q1. How safe is federated studying towards cyber assaults?

A. The target of Federated Studying is to make sure the privateness and safety of customers’ information, nonetheless, it isn’t totally proof against cyber assaults. A number of analysis papers have been printed, discussing the opportunity of cyber-attacks on FL. A few of these assaults embody:

- Adversary Assault: That is the place the malicious individual tries to deduce the consumer’s info from the gradient or mannequin weight replace.

- Mannequin Poisoning: The attacker can ship corrupt mannequin weight to the worldwide mannequin, this would possibly critically lower the worldwide mannequin’s efficiency.

FL comes with varied precautionary steps to keep away from these assaults. Methods resembling safe multi-party-computation, holomorphic encryption, differential privateness, and strong weight aggregation strategies are used.

Q2. How does federated studying differ from conventional machine studying?

A. Federated studying differs from normal ML primarily based on:

Information Distribution: ML makes use of a central database that shops the information, and the information is fed to ML for coaching. In distinction, FL makes use of distributed coaching, the place the information is saved in a number of gadgets, the place a neighborhood ML calculates mannequin parameters and sends them to a worldwide mannequin for aggregation.

Q3. Can federated studying be used with real-time information?

A. Sure, FL can be utilized with real-time information. Furthermore, FL is continuously utilized in eventualities the place real-time information switch is required. Units resembling sensors, cameras, and IoT included with FL can compute gradients and loss periodically on the newly generated information and ship updates to the worldwide mannequin.

This autumn. What are some in style frameworks and instruments for federated studying?

A. There exist a number of frameworks that make it simple to arrange and run FL.

- TensorFlow Federated (TFF): An open-source framework by Google designed for machine studying and different computations on decentralized information.

- PySyft: This can be a Python library for safe and personal machine studying. It options FL, Differential Privateness, and Encrypted computations.

- Federated AI Know-how Enabler (FATE): That is an Industrial Grade Federated Studying Framework, constructed by Webank. It helps federated studying architectures and safe computation for any machine studying algorithm.

The put up Federated Studying: Balancing Information Privateness & AI Efficiency appeared first on viso.ai.