We've talked a lot about the capabilities and potential of Deep Learning Image Generation here on the Paperspace by DigitalOcean Blog. Not only are image generation tools fun and intuitive to use, but they are among the most widely democratized and distributed AI models available to the public. In fact, the only Deep Learning technology with a larger social footprint is Large Language Models.

For the last two years, Stable Diffusion, the first publicly distributed and functional image synthesis model, has completely dominated the scene. We've written about competitors like PixArt Alpha/Sigma and done research into others like AuraFlow, but, at the time of each release, nothing has set the tone like the Stable Diffusion models. Stable Diffusion 3 remains one of the best open source models out there, and many are still trying to emulate its success.

Last week, this paradigm changed with the release of FLUX from Black Forest Labs. FLUX represents a palpable step forward in image synthesis technologies in terms of prompt understanding, object recognition, vocabulary, writing capability, and much more. In this tutorial, we are going to discuss what little is available to the public about the two open-source FLUX models, FLUX.1 [schnell] and FLUX.1 [dev], before the release of any FLUX-related paper from the research team. Afterwards, we will show how to run FLUX on a Paperspace Core Machine powered by an NVIDIA H100 GPU.

The FLUX Model

FLUX was created by the Black Forest Labs team, which is comprised largely of former Stability AI staffers. The engineers on the team were directly responsible for the development and invention of both VQGAN and Latent Diffusion, in addition to the Stable Diffusion model suite.

Very little has been made public about the development of the FLUX models, but we do know the following:

This is most of what we know about the improvements to typical Latent Diffusion Modeling techniques they have added for FLUX.1. Fortunately, they are going to release an official tech report for us to read in the near future. In the meantime, they do provide a bit more qualitative and comparative information in the rest of their release statement.

Let's dig a bit deeper and discuss what information was made available in their official blog post:

The release of FLUX is meant to "define a new state-of-the-art in image detail, prompt adherence, style diversity and scene complexity for text-to-image synthesis" (Source). To better achieve this, they have released three versions of FLUX: Pro, Dev, and Schnell.

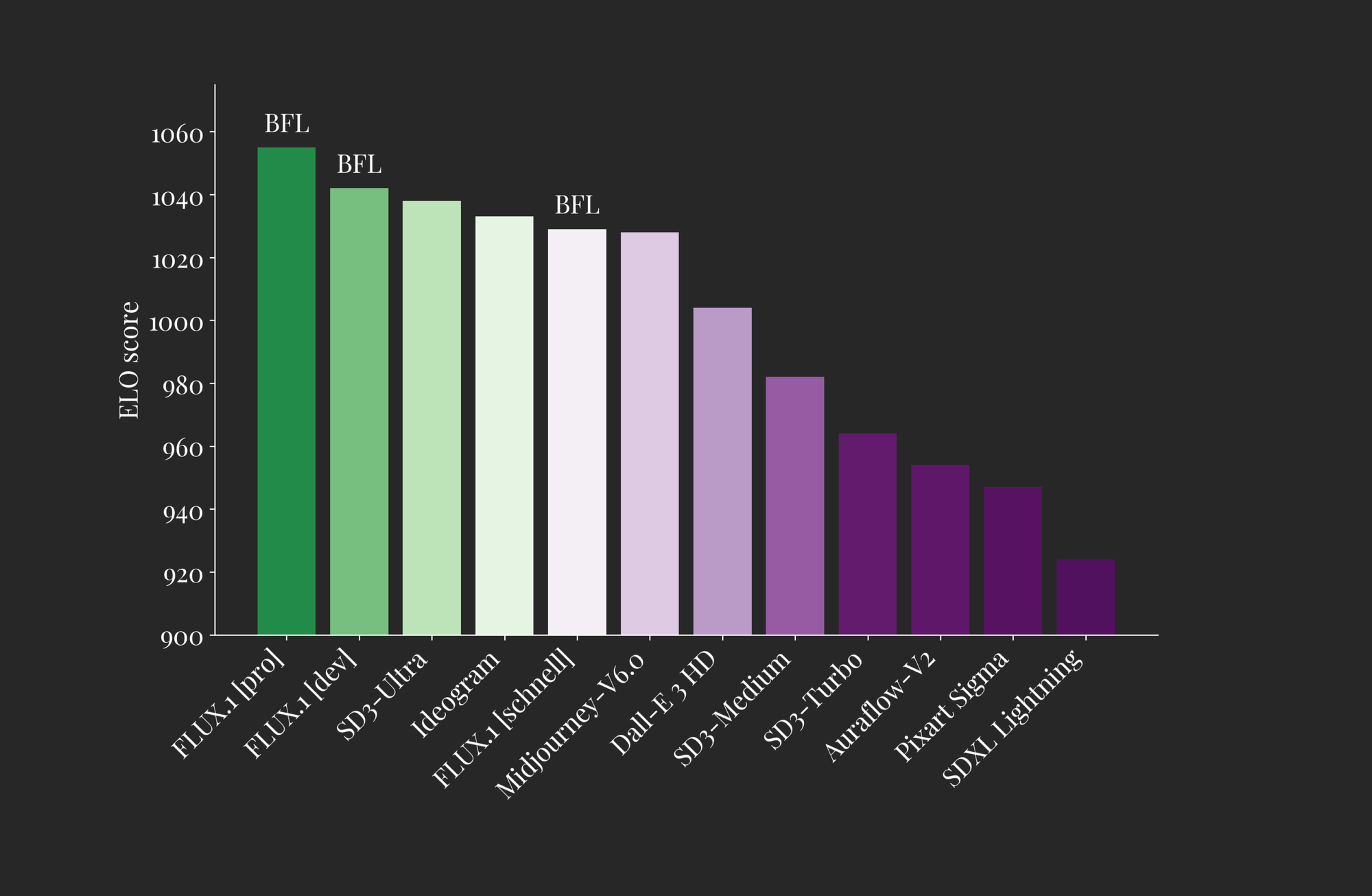

The first is only available via API, while the latter two are open-sourced to varying degrees. As we can see from the plot above, each of the FLUX models performs comparably to the top performing models available, both closed and open source, in terms of output quality (ELO Score). From this, we can infer that each of the FLUX models offers peak quality image generation, both in terms of understanding of the text input and potential scene complexity.

Let's look at the differences between these versions more closely:

- FLUX.1 [pro]: their best performing version of the model. It offers state-of-the-art image synthesis that outmatches even Stable Diffusion 3 Ultra and Ideogram in terms of prompt following, detail, quality, and output diversity. (Source)

- FLUX.1 [dev]: an "open-weight, guidance-distilled model for non-commercial applications" (Source). It was distilled directly from the FLUX.1 [pro] model, and offers nearly the same level of performance at image generation in a significantly more efficient package. This makes FLUX.1 [dev] the most powerful open source model available for image synthesis. FLUX.1 [dev] weights are available on HuggingFace, but remember that the license is restricted to non-commercial use only.

- FLUX.1 [schnell]: their fastest model, schnell is designed for local development and personal use. This model is capable of generating high quality images in as little as 4 steps, making it one of the fastest image generation models ever. Like dev, schnell is available on HuggingFace, and inference code can be found on GitHub.

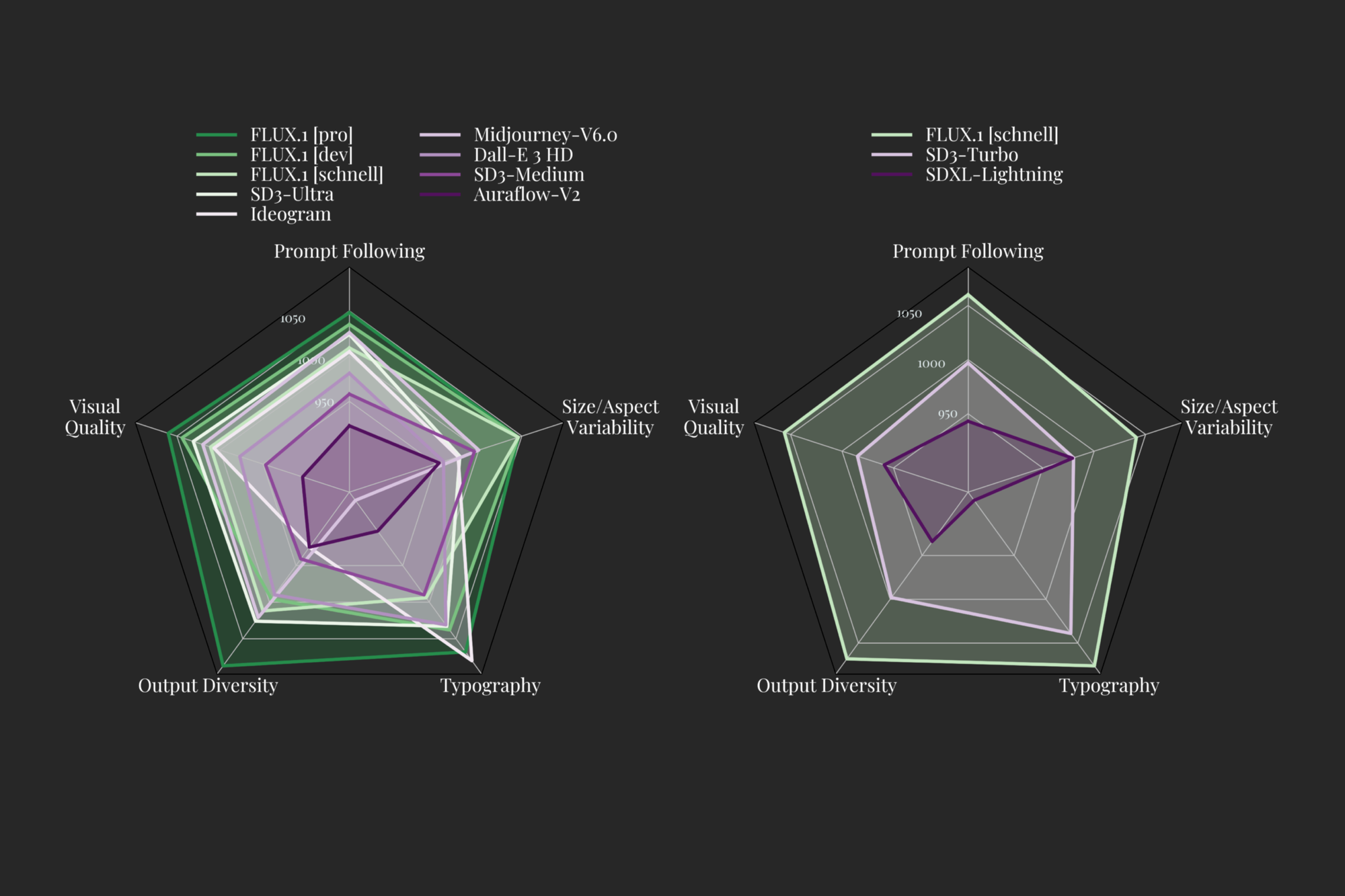

The researchers have identified five traits on which to measure Image Generation models more specifically, namely: Visual Quality, Prompt Following, Size/Aspect Variability, Typography, and Output Diversity. The above plot shows how each major Image Generation model compares, according to the Black Forest Labs team, in terms of their ELO Measure. They assert that the pro and dev versions of the model each outperform Ideogram, Stable Diffusion 3 Ultra, and MidJourney V6 in every category. Additionally, they show in the blog that the model is capable of a diverse range of resolutions and aspect ratios.

All together, the release blog paints a picture of an incredibly powerful image generation model. Now that we have seen their claims, let's run the Gradio demo they provide on a Paperspace Core H100 and see how the model holds up to them.

FLUX Demo

To run the FLUX demos for schnell and dev, we first need to create a Paperspace Core Machine. We recommend using an H100 or A100-80G GPU for this task, but an A6000 should also handle the models without issue. See the Paperspace Documentation for details on getting started with Core and setting up SSH.

Setup

Once our machine is created and we have successfully SSH'd into it from our local machine, we can navigate to the directory we would like to work in. We chose Downloads. From there, we can clone the official FLUX GitHub repository onto our Machine and move into the new directory.

cd Downloads

git clone https://github.com/black-forest-labs/flux

cd flux

Once the repository is cloned and we're inside, we can begin setting up the demo itself. First, we will create a new virtual environment and install all the requirements for FLUX to run.

python3.10 -m venv .venv

source .venv/bin/activate

pip install -e '.[all]'

This will take a few moments, but once it is completed, we are almost ready to run our demo. All that's left is to log in to HuggingFace and navigate to the FLUX dev page. There, we will need to agree to their licensing requirement if we want to access the model. Skip this step if you plan to only use schnell.

Next, go to the HuggingFace tokens page and create or refresh a Read token. We are going to take this and run

huggingface-cli login

in our terminal to give the access token to the HuggingFace cache. This will ensure that we can download our models when we run the demo in a moment.

Starting the Demo

To begin the demo, all we need to do now is execute the relevant Python script for whichever demo we want to run. Here are the examples:

## schnell demo

python demo_gr.py --name flux-schnell --device cuda

## dev demo

python demo_gr.py --name flux-dev --device cuda

We recommend starting with schnell, as the distilled model is much faster and more efficient to use. From our experience using it, dev requires a bit more fine-tuning and distillation, while schnell is able to take better advantage of the model's capabilities. More on this later.
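If we want to launch either demo from a script rather than typing the command by hand, the two invocations above can be wrapped in a small helper. This is only a sketch: `demo_command` and `KNOWN_DEMOS` are hypothetical names of our own, and the helper uses nothing beyond the `--name` and `--device` flags shown above.

```python
import sys

# The two demo names used in the commands above.
KNOWN_DEMOS = ("flux-schnell", "flux-dev")


def demo_command(name: str, device: str = "cuda") -> list[str]:
    """Build the argument list for launching demo_gr.py (hypothetical helper)."""
    if name not in KNOWN_DEMOS:
        raise ValueError(f"unknown demo {name!r}; expected one of {KNOWN_DEMOS}")
    # sys.executable keeps us on the interpreter of the active virtual environment.
    return [sys.executable, "demo_gr.py", "--name", name, "--device", device]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch is safe anywhere.
    print(" ".join(demo_command("flux-schnell")))
```

To actually start the demo, the returned list could be passed to `subprocess.run(..., check=True)` from inside the cloned `flux` directory with the virtual environment active.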

Once you run the code, the demo will begin spinning up. The models will be downloaded into your Machine's HuggingFace cache. This process may take around 5 minutes for each model download (schnell and dev). Once completed, click on the shared Gradio public link to get started. Alternatively, you can open it locally in your browser using the Core Machine desktop view.

Running the Demo

Real time generation of images at 1024×1024 on H100 using FLUX.1 [schnell]

The demo itself is very intuitive, courtesy of Gradio's incredibly easy-to-use interface. At the top left, we have our prompt entry field, where we can enter a text description of the image we want. Both FLUX models are very robust in terms of prompt handling, so we encourage you to try some wild combinations of terms.

For the dev model, there is an image to image option next. As far as we can tell, this capability is not very strong with FLUX. In our limited testing, it was not able to translate the image's objects from noise back into meaningful connections with the prompt.

Next, there is an optional toggle for Advanced Options. These allow us to adjust the height, width, and number of inference steps used for the output. On schnell, the guidance value is locked to 3.5, but this value can be adjusted when demoing dev. Finally, we can control the seed, which allows for reproduction of previously generated images.
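To keep track of these options between runs, it can help to store them in a small record. The sketch below is our own illustration, not code from the FLUX repository: `DemoSettings` and `normalize` are hypothetical names, and snapping the height and width to multiples of 16 is an assumption about what the model expects rather than something documented in the release post.

```python
import random
from dataclasses import dataclass, replace
from typing import Optional

# Guidance value the demo reports for schnell (see the paragraph above).
LOCKED_SCHNELL_GUIDANCE = 3.5


@dataclass(frozen=True)
class DemoSettings:
    """Hypothetical record of the Advanced Options shown in the demo."""
    model: str = "flux-schnell"
    width: int = 1024
    height: int = 1024
    num_steps: int = 4
    guidance: float = 3.5
    seed: Optional[int] = None


def normalize(settings: DemoSettings, multiple: int = 16) -> DemoSettings:
    """Return a copy with snapped dimensions, locked guidance, and a fixed seed."""
    def snap(value: int) -> int:
        # Assumption: round down to the nearest multiple of 16, never below 16.
        return max(multiple, multiple * (value // multiple))

    guidance = settings.guidance
    if settings.model == "flux-schnell":
        guidance = LOCKED_SCHNELL_GUIDANCE  # not adjustable on schnell

    # Pick a seed once and record it so the same image can be requested again.
    seed = settings.seed if settings.seed is not None else random.randrange(2**32)
    return replace(settings, width=snap(settings.width),
                   height=snap(settings.height), guidance=guidance, seed=seed)


if __name__ == "__main__":
    print(normalize(DemoSettings(width=1000, height=1030, guidance=7.0, seed=42)))
```

Saving the normalized record alongside each image makes it easy to re-enter the same seed and dimensions in the demo later.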

Once we have filled in each of these, we are able to generate a single image:

First impressions with FLUX

We have now had about a week to experiment with FLUX, and we are very impressed. It's easy to see why this model has rapidly grown in popularity and success following its release, given what it represents in real utility and progress.

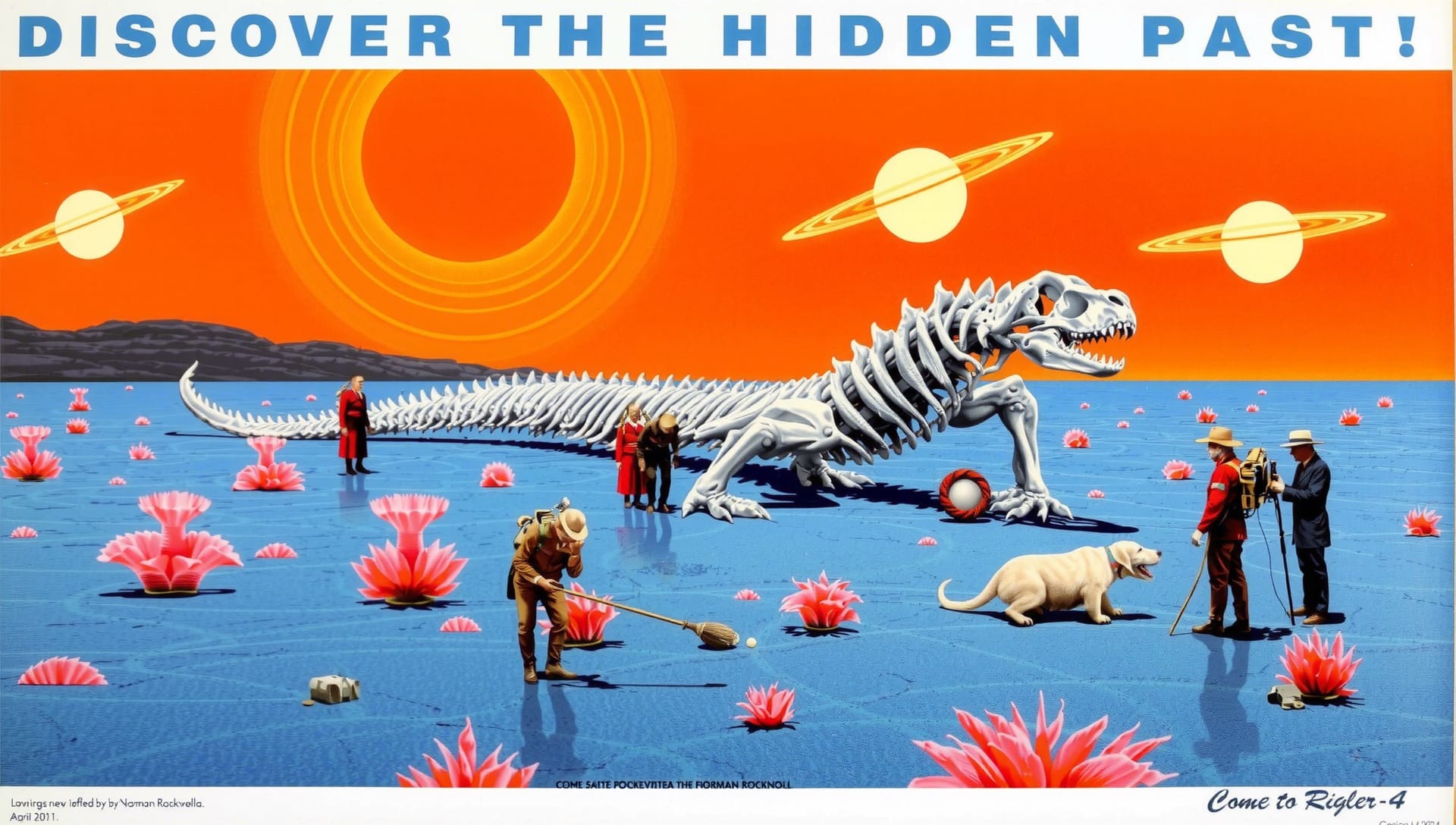

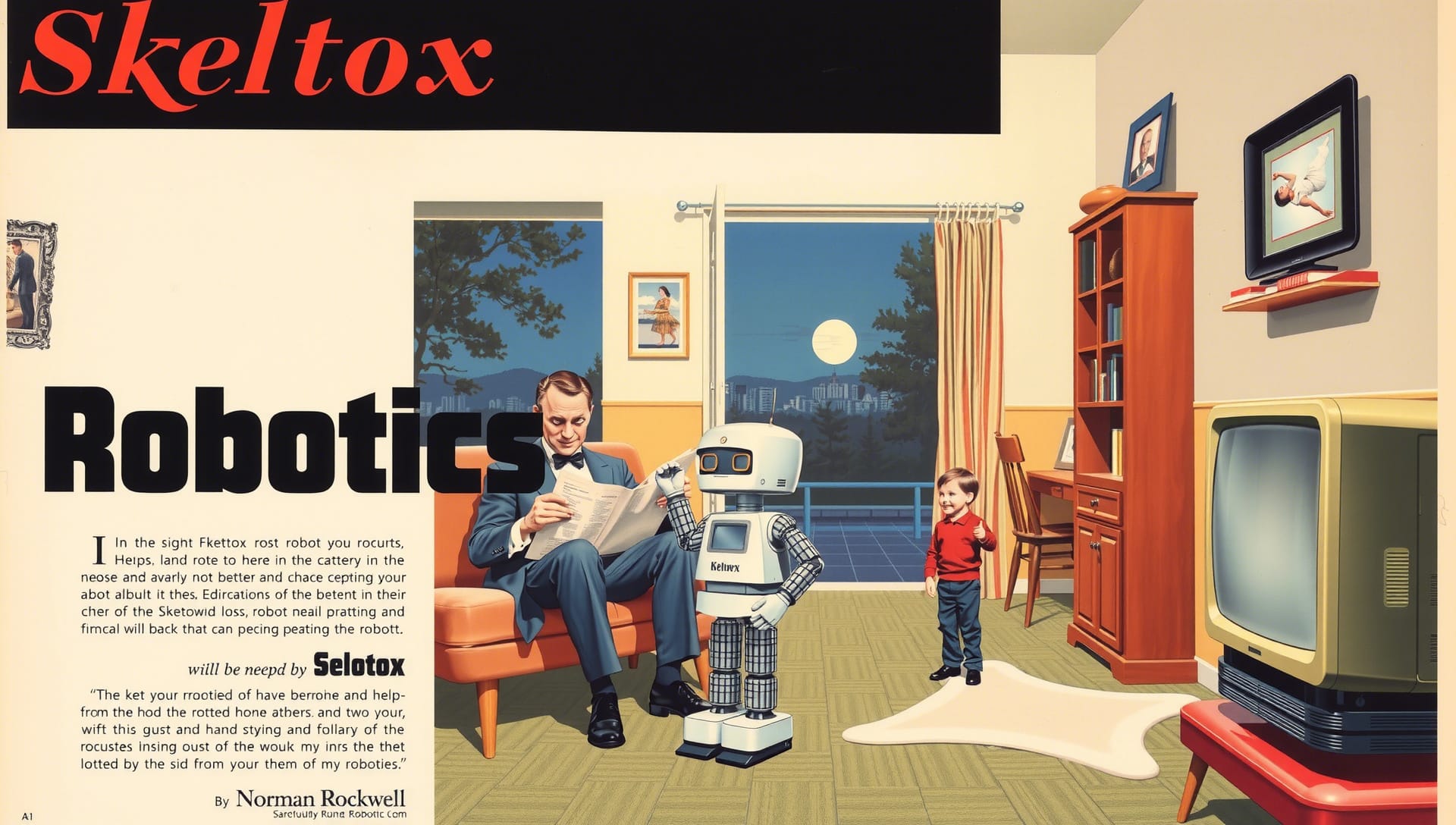

We have been testing its efficacy across a wide variety of artistic tasks, mostly with schnell. Take a look below:

As we can see, it captured most of the text we wanted written, with a stunning rendition of the landscape described in the prompt. The people and dog look a bit uncanny valley in how they fit into the image, and "Rigel" is spelled as "Rigler" in the bottom corner. Still, this is a fantastic representation of the prompt.

Here we show an attempt to capture the style of a popular artist, Norman Rockwell. It succeeds decently here. We had several generated options from this same prompt to choose from, but opted for this one because of the astounding scene accuracy. The gibberish text and lack of a subtitle for the advertisement are glaring problems, but the composition is undeniably impressive.

Trying for something in a different aspect ratio now, we see much of the same level of success as shown before. Most of the prompt is captured accurately, but the figurine is missing the shorts and Coca-Cola, and is holding the guitar instead. This shows that the model can still struggle with composition of multiple objects on a single subject. The prompt accuracy and writing still make this a really desirable final output for the prompt.

Finally, we have a tall image generated from a simple prompt. Without any text, we can see that the model still manages to generate an aesthetically pleasing image that captures the prompt well. Without additional text, there is notably less artifacting. This may indicate that simpler prompts will render better on FLUX models.

Tips for using FLUX

Prompting for text

Getting text to appear in your image can be somewhat tricky, as there is no deliberate trigger word or symbol that gets FLUX to try to generate text. That being said, we can make it more likely to print text by adding quotation marks around our desired text in the prompt, and by deliberately writing out the type of text we would like to see appear. See the example above.
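As a minimal sketch of that tip, the helper below wraps the desired text in quotation marks and names the kind of text it should appear as. The function name `prompt_with_text` and the "that reads" phrasing are our own choices for illustration, not a format FLUX requires.

```python
def prompt_with_text(scene: str, text: str, text_kind: str) -> str:
    """Append quoted text, plus the kind of text it is, to a scene description."""
    # Swap any double quotes inside the text so the quoted span stays unambiguous.
    cleaned = text.replace('"', "'")
    return f'{scene}, {text_kind} that reads "{cleaned}"'


if __name__ == "__main__":
    print(prompt_with_text("a neon storefront at night", "Open Late", "a glowing sign"))
```

The resulting string can be pasted straight into the prompt field of the demo.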

General Prompt Engineering

FLUX is incredibly intuitive to use compared to previous iterations of Diffusion models. Even compared to Ideogram or MidJourney, it can understand our prompts with little to no work to engineer the text towards machine understanding. We do have some tips for getting the best result, nonetheless.

Our first piece of advice is to order the terms in the prompt and to use commas. The order of the words in the prompt directly corresponds to their weight when generating the final image, so the main subject should always be near the start of the prompt. If we want to add more details, using commas helps separate the terms for the model to read. Like a human, it needs this punctuation to understand where clauses start and stop. Commas seem to hold more weight in FLUX than they did with Stable Diffusion.
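One way to apply this consistently is to assemble prompts from a subject and a list of details. The sketch below is purely illustrative: `build_prompt` is a hypothetical helper of ours, and it only handles ordering and comma separation.

```python
def build_prompt(subject: str, details: list[str]) -> str:
    """Join the main subject and extra details into one comma-separated prompt."""
    # The subject goes first because earlier terms appear to carry more weight.
    parts = [subject] + list(details)
    # Trim whitespace and stray commas, then drop any empty entries.
    cleaned = [part.strip().strip(",").strip() for part in parts]
    return ", ".join(part for part in cleaned if part)


if __name__ == "__main__":
    print(build_prompt("a red fox", ["sitting in snow ", "", "golden hour lighting,"]))
```

Listing details in order of importance keeps the most important ones closest to the subject.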

Additionally, in our experience, there is a noticeable tradeoff between the amount of detail (words) in our text prompt, the corresponding amount of detail in the image, and the resulting quality of scene composition. More words seem to translate to higher prompt accuracy, but that comes at the cost of including more objects or traits for the model to generate on top of the original subject. For example, it would be simple to change the hair color of a person by changing a single word. In order to change their entire outfit, we need to add a phrase or sentence to the prompt with lots of detail. This phrase may disrupt the unseen diffusion process and make it difficult for the model to correctly recreate the desired scene.

Aspect Ratios

FLUX was trained across a wide variety of aspect ratios and image resolutions, ranging from 0.2 to 2 megapixels in size. Even so, it certainly seems to shine at certain sizes and resolutions. In our experience practicing with the model, it performs well at 1024 x 1024 and larger resolutions. 512 x 512 images come out less detailed overall, even with the reduced number of pixels taken into account. We also found that the following resolutions work extremely well compared to nearby values:

- 674 x 1462 (iPhone/common smartphone aspect ratio is 9:19.5)

- 768 x 1360 (default)

- 896 x 1152

- 1024 x 1280

- 1080 x 1920 (common wallpaper ratio)
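To see how these sizes relate to the 0.2 to 2 megapixel training range, we can compute the pixel count and aspect ratio of each one. This is simple arithmetic rather than anything taken from the FLUX code, and it assumes one megapixel means 1,000,000 pixels; `describe` is a hypothetical helper name.

```python
# Resolutions from the list above, read as width x height.
RESOLUTIONS = [(674, 1462), (768, 1360), (896, 1152), (1024, 1280), (1080, 1920)]


def megapixels(width: int, height: int) -> float:
    """Pixel count in megapixels, assuming 1 MP = 1,000,000 pixels."""
    return width * height / 1_000_000


def describe(width: int, height: int) -> dict:
    """Summarize one resolution: size, megapixels, ratio, and range check."""
    mp = megapixels(width, height)
    return {
        "size": f"{width} x {height}",
        "megapixels": round(mp, 2),
        "ratio": round(width / height, 3),
        "in_range": 0.2 <= mp <= 2.0,  # training range quoted in the article
    }


if __name__ == "__main__":
    for w, h in RESOLUTIONS:
        print(describe(w, h))
```

By this arithmetic, 1080 x 1920 comes out at roughly 2.07 megapixels, slightly above the quoted upper bound, so the 0.2 to 2 range is best treated as approximate.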

Closing Thoughts

In this article, we looked at some of FLUX's capabilities in detail before demoing the model using H100s running on Paperspace. After looking at the release work and trying the model out ourselves, we can say for certain that FLUX is the most powerful and capable image generation model released to date. It represents a palpable step forward for these technologies, and the possibilities for what these sorts of models may one day be capable of keep growing.

We encourage everyone to try FLUX out on Paperspace as soon as possible! Paperspace H100s make it easy to generate images in just moments, and it's a snap to set up the environment following the instructions in the demo above.