Anyone who has worked in a customer-facing job, or even just worked with a team of more than a few individuals, knows that every person on Earth has their own unique, sometimes baffling, preferences.

Understanding the preferences of every individual is difficult even for us fellow humans. But what about AI models, which have no direct human experience upon which to draw, let alone to use as a frame of reference when trying to understand what other people want?

A team of researchers from leading institutions and the startup Anthropic, the company behind the large language model (LLM)/chatbot Claude 2, is working on this very problem and has come up with a seemingly obvious solution: get AI models to ask more questions of users to find out what they really want.

Entering a new world of AI understanding through GATE

Anthropic researcher Alex Tamkin, together with colleagues Belinda Z. Li and Jacob Andreas of the Massachusetts Institute of Technology’s (MIT’s) Computer Science and Artificial Intelligence Laboratory (CSAIL), along with Noah Goodman of Stanford, published a research paper earlier this month on their method, which they call “generative active task elicitation (GATE).”

Their goal? “Use [large language] models themselves to help convert human preferences into automated decision-making systems.”

In other words: take an LLM’s existing capability to analyze and generate text and use it to ask written questions of the user on their first interaction with the LLM. The LLM will then read the user’s answers and incorporate them into its generations going forward, live and on the fly. Importantly, it will also infer from those answers, based on what other words and concepts they are related to in the LLM’s database, what the user is ultimately asking for.
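The ask-then-incorporate loop described above can be sketched roughly as follows. This is a minimal illustration, not the researchers’ code: `elicit_preferences`, `build_prompt`, and the two stub functions are hypothetical names, and the stubs stand in for a real LLM call and a real user.

```python
from typing import Callable, List, Tuple

# One (question, answer) pair per elicitation turn.
Transcript = List[Tuple[str, str]]


def elicit_preferences(
    generate_question: Callable[[Transcript], str],
    get_answer: Callable[[str], str],
    num_turns: int = 3,
) -> Transcript:
    """Ask num_turns questions, passing earlier answers back to the question generator."""
    transcript: Transcript = []
    for _ in range(num_turns):
        question = generate_question(transcript)
        transcript.append((question, get_answer(question)))
    return transcript


def build_prompt(transcript: Transcript, request: str) -> str:
    """Prepend the elicited answers to a later request so the model can condition on them."""
    lines = ["What we know about this user:"]
    for question, answer in transcript:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
    lines.append(f"Request: {request}")
    return "\n".join(lines)


# Hypothetical stand-in for an LLM that writes the next question.
def stub_question(transcript: Transcript) -> str:
    return f"Question {len(transcript) + 1}: what topics do you like?"


# Hypothetical stand-in for the user typing a reply.
def stub_answer(question: str) -> str:
    return "cooking"


if __name__ == "__main__":
    history = elicit_preferences(stub_question, stub_answer, num_turns=2)
    print(build_prompt(history, "Recommend an article."))
```

In a real deployment, `generate_question` would call the LLM with the transcript so far, and the string returned by `build_prompt` would be sent along with each later request.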

As the researchers write: “The effectiveness of language models (LMs) for understanding and producing free-form text suggests that they may be capable of eliciting and understanding user preferences.”

The three GATES

The method can actually be applied in several different ways, according to the researchers:

- Generative active learning: The researchers describe this method as the LLM basically producing examples of the kind of responses it can deliver and asking how the user likes them. One example question they provide for an LLM to ask is: “Are you interested in the following article? The Art of Fusion Cuisine: Mixing Cultures and Flavors […] .” Based on how the user responds, the LLM will deliver more or less content along those lines.

- Yes/no question generation: This method is as simple as it sounds (and gets). The LLM will ask binary yes-or-no questions such as: “Do you enjoy reading articles about health and wellness?” and then take the user’s answers into account when responding going forward, avoiding information that it associates with questions that received a “no” answer.

- Open-ended questions: Similar to the first method, but even broader. As the researchers write, the LLM will seek to obtain “the broadest and most abstract pieces of knowledge” from the user, including questions such as “What hobbies or activities do you enjoy in your free time […], and why do these hobbies or activities captivate you?”
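One way to picture the three modes is as three different instructions handed to the question-writing model. The sketch below is purely illustrative: `QueryMode`, `INSTRUCTIONS`, and `question_prompt` are hypothetical names, and the instruction wording is an assumption, not the prompts used in the paper.

```python
from enum import Enum
from typing import List, Tuple


class QueryMode(Enum):
    GENERATIVE_ACTIVE_LEARNING = "generative active learning"
    YES_NO = "yes/no question generation"
    OPEN_ENDED = "open-ended questions"


# Illustrative instructions only; not the wording used by the researchers.
INSTRUCTIONS = {
    QueryMode.GENERATIVE_ACTIVE_LEARNING:
        "Write one concrete example item and ask whether the user likes it.",
    QueryMode.YES_NO:
        "Ask one question that can be answered with yes or no.",
    QueryMode.OPEN_ENDED:
        "Ask one broad, open-ended question about the user's preferences.",
}


def question_prompt(mode: QueryMode, task: str, history: List[Tuple[str, str]]) -> str:
    """Build the text sent to the question-writing model for the chosen mode."""
    lines = [f"Task: learn this user's preferences for {task}."]
    for question, answer in history:
        lines.append(f"Previously asked: {question} | Answer: {answer}")
    lines.append(INSTRUCTIONS[mode])
    return "\n".join(lines)


if __name__ == "__main__":
    past = [("Do you enjoy reading articles about health and wellness?", "no")]
    print(question_prompt(QueryMode.YES_NO, "article recommendation", past))
```

Switching modes changes only the final instruction line, so the same conversation history can feed any of the three questioning styles.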

Promising results

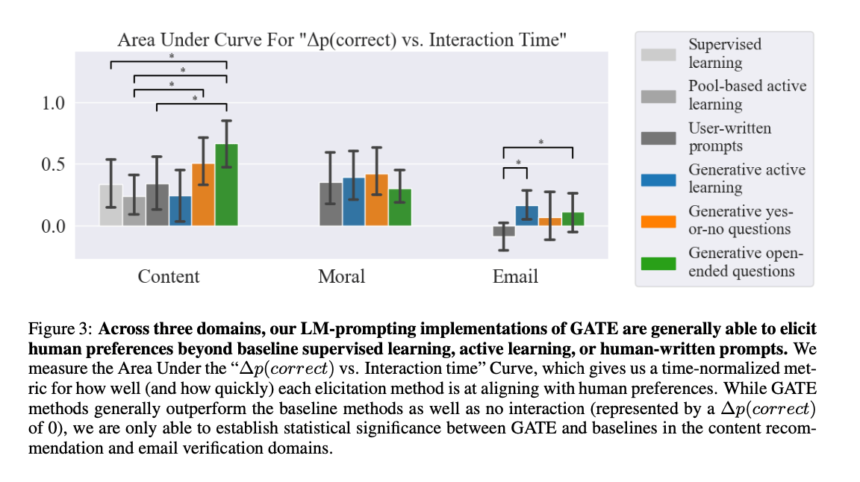

The researchers tried out the GATE method in three domains: content recommendation, moral reasoning, and email validation.

By fine-tuning GPT-4, from Anthropic rival OpenAI, and recruiting 388 paid participants at $12 per hour to answer questions from GPT-4 and grade its responses, the researchers discovered that GATE often yields more accurate models than baselines while requiring comparable or less mental effort from users.

Specifically, they found that the GPT-4 fine-tuned with GATE did a better job at guessing each user’s individual preferences in its responses by about 0.05 points of significance when subjectively measured. That sounds like a small amount, but it is actually a lot when starting from zero, as the researchers’ scale does.

Ultimately, the researchers state that they “presented initial evidence that language models can successfully implement GATE to elicit human preferences (sometimes) more accurately and with less effort than supervised learning, active learning, or prompting-based approaches.”

This could save enterprise software developers a lot of time when booting up LLM-powered chatbots for customer- or employee-facing applications. Instead of training them on a corpus of data and trying to use that to ascertain individual customer preferences, fine-tuning their preferred models to perform the Q/A dance described above could make it easier for them to craft engaging, positive, and helpful experiences for their intended users.

So, if your favorite AI chatbot begins asking you questions about your preferences in the near future, there’s a good chance it may be using the GATE method to try to give you better responses going forward.
