
Should you add glue to your pizza, stare directly at the sun for 30 minutes per day, eat rocks or a poisonous mushroom, treat a snake bite with ice, and jump off the Golden Gate Bridge?

According to information served up through Google Search’s new “AI Overview” feature, these obviously stupid and harmful suggestions are not only good ideas, but the top of all possible results a user should see when searching with its signature product.

What’s going on, where did all this bad information come from, and why is Google putting it at the top of its search results pages right now? Let’s dive in.

What’s Google AI Overview?

In its bid to catch up to rival OpenAI and its hit chatbot ChatGPT in the large language model (LLM) chatbot and search game, Google launched a new feature called “Search Generative Experience” nearly a year ago, in May 2023.

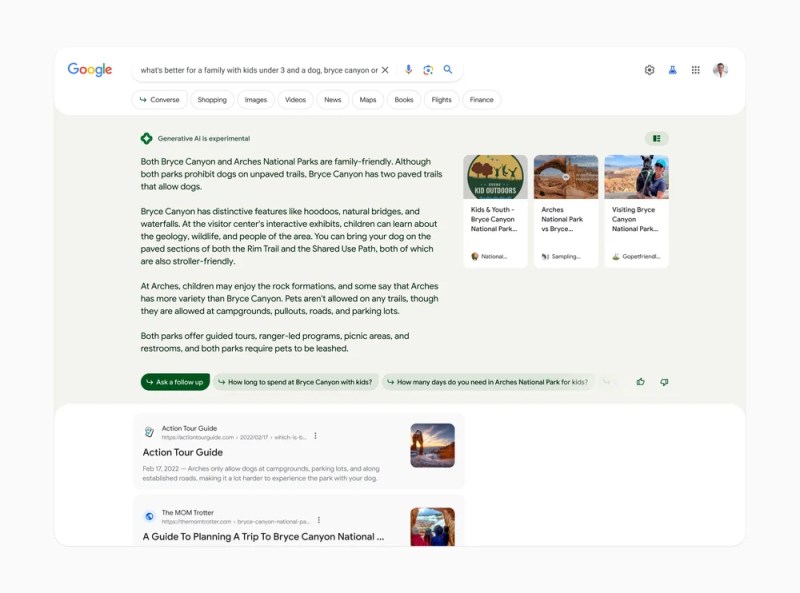

It was described then as “an AI-powered snapshot of key information to consider, with links to dig deeper,” and appeared basically as a new paragraph of text right beneath the Google Search input bar, above the traditional list of blue links the user typically received when searching Google.

The feature was said to be powered by Search-specific AI models. At the time, it was an “opt-in” service and users had to jump through a number of hoops to turn it on.

But 10 days ago at Google’s I/O conference, amid a fleet of AI-related announcements, the company announced the Search Generative Experience had been renamed AI Overviews and was coming as the default experience on Google Search to all users, beginning with those in the U.S.

There are ways to turn it off or perform Google Searches without AI Overviews (namely the “Web” tab on Google Search), but users now have to take a few extra steps to do so.

Why is Google AI Overview controversial?

Ever since Google turned AI Overviews on as the default for users in the U.S., some have been taking to X and other social sites to post the horrible, terrible, no-good results that come up in the feature when searching different queries.

In some cases, the AI-powered feature chooses to display wildly incorrect, inflammatory and downright dangerous information.

Even celebrities including musician Lil Nas X have joined in the pile-on:

Other results are more harmless but still incorrect and make Google look foolish and unreliable:

The poor-quality AI-generated results have taken on a life of their own and even become a meme, with some users photoshopping answers into screenshots to make Google look even worse than it already does in the real results:

Google has qualified the AI Overview feature as being “experimental,” putting the following text at the bottom of each result: “Generative AI is experimental,” and linking to a page that describes the new feature in more detail.

On that page, Google writes: “AI Overviews can take the work out of searching by providing an AI-generated snapshot with key information and links to dig deeper…With user feedback and human reviews, we evaluate and improve the quality of our results and products responsibly.”

Will Google pull AI Overview?

But some users took to X (formerly Twitter) to call on Google to remove the AI Overview feature, or to predict that the search giant would end up removing it, at least temporarily. That would be similar to the tack Google took after its Gemini AI image generation feature was shown to create racially and historically inaccurate images earlier this year, inflaming prominent Silicon Valley libertarians and politically conservative figures such as Marc Andreessen and Elon Musk.

In a statement to The Verge, a Google spokesperson said of the AI Overview feature that the examples users had been showing are “generally very uncommon queries, and aren’t representative of most people’s experiences.”

In addition, The Verge reported that: “The company has taken action against violations of its policies…and are using these ‘isolated examples’ to continue to refine the product.”

Yet as some have observed on X, this sounds an awful lot like victim blaming.

Others have posited that AI developers could be held legally liable for dangerous results such as the kind shown in AI Overview:

Importantly, tech journalists and other digitally literate users have noted that Google appears to be using its AI models to create summaries of content it has previously indexed in its Search index, content it didn’t originate but is nonetheless relying upon to provide its users with “key information.”

Ultimately, it’s hard to say what percentage of searches display this erroneous information.

But one thing is clear: AI Overview seems to be more susceptible than Google Search was previously to disinformation from untrustworthy sources, or to information posted as a joke, which the underlying AI models responsible for summarization can’t recognize as such and instead treat as serious.

Now, whether users actually act upon the information provided in these results remains to be seen, but if they do, it’s clearly unwise and could pose risks to their health and safety.

Let’s hope users are smart enough to check alternate sources. Say, rival AI search startup Perplexity, which seems to have fewer problems surfacing correct information than Google’s AI Overviews at the moment (an unfortunate irony for the search giant and its users, given Google’s role in first conceiving of and articulating the machine learning transformer architecture at the heart of the modern generative AI/LLM boom).