Katja Grace, 22 December 2022

Averting doom by not constructing the doom machine

If you fear that someone will build a machine that will seize control of the world and annihilate humanity, then one kind of response is to try to build further machines that will seize control of the world even earlier without destroying it, forestalling the ruinous machine’s conquest. An alternative or complementary kind of response is to try to avert such machines being built at all, at least while the degree of their apocalyptic tendencies is ambiguous.

The latter approach seems to me like the kind of basic and obvious thing worthy of at least consideration, and also in its favor, fits nicely in the genre ‘stuff that it isn’t that hard to imagine happening in the real world’. Yet my impression is that for people worried about extinction risk from artificial intelligence, strategies under the heading ‘actively slow down AI progress’ have historically been dismissed and ignored (though ‘don’t actively speed up AI progress’ is popular).

The conversation near me over the years has felt a bit like this:

Some people: AI might kill everyone. We should design a godlike super-AI of perfect goodness to prevent that.

Others: wow that sounds extremely ambitious

Some people: yeah but it’s very important and also we are extremely smart so idk it could work

[Work on it for a decade and a half]

Some people: ok that’s pretty hard, we give up

Others: oh huh shouldn’t we maybe try to stop the building of this dangerous AI?

Some people: hmm, that would involve coordinating numerous people. We may be arrogant enough to think that we might build a god-machine that can take over the world and remake it as a paradise, but we aren’t delusional

This seems like an error to me. (And lately, to a bunch of other people.)

I don’t have a strong view on whether anything in the space of ‘try to slow down some AI research’ should be done. But I think a) the naive first-pass guess should be a strong ‘probably’, and b) a decent amount of thinking should happen before writing off everything in this large space of interventions. Whereas customarily the tentative answer seems to be ‘of course not’, and then the topic seems to be avoided for further thinking. (At least in my experience; the AI safety community is large, and for most things I say here, different experiences are probably had in different bits of it.)

Maybe my strongest view is that one shouldn’t apply such different standards of ambition to these different classes of intervention. Like: yes, there appear to be substantial difficulties in slowing down AI progress to good effect. But in technical alignment, mountainous challenges are met with enthusiasm for mountainous efforts. And it is very non-obvious that the scale of difficulty here is much larger than that involved in designing acceptably safe versions of machines capable of taking over the world before anyone else in the world designs dangerous versions.

I’ve been talking about this with people over the past many months, and have accumulated an abundance of reasons for not trying to slow down AI, most of which I’d like to argue about at least a bit. My impression is that arguing in real life has coincided with people moving toward my views.

Quick clarifications

First, to fend off misunderstanding—

- I take ‘slowing down dangerous AI’ to include any of:

  a. reducing the speed at which AI progress is made in general, e.g. as would occur if general funding for AI declined.

  b. shifting AI efforts from work leading more directly to risky outcomes to other work, e.g. as might occur if there was broadscale concern about very large AI models, and people and funding moved to other projects.

  c. halting categories of work until strong confidence in its safety is possible, e.g. as would occur if AI researchers agreed that certain systems posed catastrophic risks and shouldn’t be developed until they did not. (This might mean a permanent end to some systems, if they were intrinsically unsafe.)

  (So in particular, I’m including both actions whose direct aim is slowness in general, and actions whose aim is requiring safety before specific developments, which implies slower progress.)

- I do think there is serious attention on some versions of these things, generally under other names. I see people thinking about ‘differential progress’ (b. above), and strategizing about coordination to slow down AI at some point in the future (e.g. at ‘deployment’). And I think a lot of consideration is given to avoiding actively speeding up AI progress. What I’m saying is missing are, a) consideration of actively working to slow down AI now, and b) shooting straightforwardly to ‘slow down AI’, rather than wincing from that and only considering examples of it that show up under another conceptualization (perhaps this is an unfair diagnosis).

- AI Safety is a big community, and I’ve only ever been seeing a one-person window into it, so maybe things are different e.g. in DC, or in different conversations in Berkeley. I’m just saying that for my corner of the world, the level of disinterest in this has been notable, and in my view misjudged.

Why not slow down AI? Why not consider it?

Ok, so if we tentatively suppose that this topic is worth even thinking about, what do we think? Is slowing down AI a good idea at all? Are there great reasons for dismissing it?

Scott Alexander wrote a post a little while back raising reasons to dislike the idea, roughly:

- Do you want to lose an arms race? If the AI safety community tries to slow things down, it will disproportionately slow down progress in the US, and then people elsewhere will go fast and get to be the ones whose competence determines whether the world is destroyed, and whose values determine the future if there is one. Similarly, if AI safety people criticize those contributing to AI progress, it will mostly discourage the most friendly and careful AI capabilities companies, and the reckless ones will get there first.

- One might contemplate ‘coordination’ to avoid such morbid races. But coordinating anything with the whole world seems wildly tricky. For instance, some countries are large, scary, and hard to talk to.

- Agitating for slower AI progress is ‘defecting’ against the AI capabilities folks, who are good friends of the AI safety community, and their friendship is strategically valuable for ensuring that safety is taken seriously in AI labs (as well as being non-instrumentally lovely! Hi AI capabilities friends!).

Other opinions I’ve heard, some of which I’ll address:

- Slowing AI progress is futile: for all your efforts you’ll probably just die a few years later

- Coordination based on convincing people that AI risk is a problem is absurdly ambitious. It’s practically impossible to convince AI professors of this, let alone any real fraction of humanity, and you’d need to convince a massive number of people.

- What are we going to do, build powerful AI never and die when the Earth is eaten by the sun?

- It’s actually better for safety if AI progress moves fast. This might be because the faster AI capabilities work happens, the smoother AI progress will be, and this is more important than the duration of the period. Or speeding up progress now might force future progress to be correspondingly slower. Or because safety work is probably better when done just before building the relevantly risky AI, in which case the best strategy might be to get as close to dangerous AI as possible and then stop and do safety work. Or if safety work is very useless ahead of time, maybe delay is fine, but there is little to gain by it.

- Specific routes to slowing down AI are not worth it. For instance, avoiding working on AI capabilities research is bad because it’s so helpful for learning on the path to working on alignment. And AI safety people working in AI capabilities can be a force for making safer choices at those companies.

- Advanced AI will help enough with other existential risks as to represent a net lowering of existential risk overall.

- Regulators are ignorant about the nature of advanced AI (partly because it doesn’t exist, so everyone is ignorant about it). Consequently they won’t be able to regulate it effectively, and bring about desired outcomes.

My impression is that there are also less endorsable or less altruistic or more silly motives floating around for this attention allocation. Some things that have come up at least once in talking to people about this, or that seem to be going on:

- It is uncomfortable to contemplate projects that would put you in conflict with other people. Advocating for slower AI feels like trying to impede someone else’s project, which feels adversarial and can feel like it has a higher burden of proof than just working on your own thing.

- ‘Slow-down-AGI’ sends people’s minds to e.g. industrial sabotage or terrorism, rather than more boring courses, such as, ‘lobby for labs developing shared norms for when to pause deployment of models’. This understandably encourages dropping the thought as soon as possible.

- My weak guess is that there’s a kind of bias at play in AI risk thinking in general, where any force that isn’t zero is taken to be arbitrarily intense. Like, if there is pressure for agents to exist, there will arbitrarily quickly be arbitrarily agentic things. If there is a feedback loop, it will be arbitrarily strong. Here, if stalling AI can’t be forever, then it’s essentially zero time. If a regulation won’t obstruct every dangerous project, then it is worthless. Any finite economic disincentive for dangerous AI is nothing in the face of the omnipotent economic incentives for AI. I think this is a bad mental habit: things in the real world often come down to actual finite quantities. This is very possibly an unfair diagnosis. (I’m not going to discuss this later; this is pretty much what I have to say.)

- I sense an assumption that slowing progress on a technology would be a radical and unheard-of move.

- I agree with lc that there seems to have been a quasi-taboo on the topic, which perhaps explains a lot of the non-discussion, though still calls for its own explanation. I think it suggests that concerns about uncooperativeness play a part, and the same for thinking of slowing down AI as centrally involving antisocial strategies.

I’m not sure if any of this fully resolves why AI safety people haven’t thought about slowing down AI more, or whether people should try to do it. But my sense is that many of the above reasons are at least somewhat wrong, and motives somewhat misguided, so I want to argue about a lot of them in turn, including both arguments and vague motivational themes.

Restraint is not radical

There seems to be a common thought that technology is a kind of inevitable path along which the world must tread, and that trying to slow down or avoid any part of it would be both futile and extreme.

But empirically, the world doesn’t pursue every technology; it barely pursues any technologies.

Sucky technologies

For a start, there are many machines that there is no pressure to make, because they have no value. Consider a machine that sprays shit in your eyes. We can technologically do that, but probably nobody has ever built that machine.

This might seem like a stupid example, because no serious ‘technology is inevitable’ conjecture is going to claim that totally pointless technologies are inevitable. But if you are sufficiently pessimistic about AI, I think this is the right comparison: if there are kinds of AI that would cause huge net costs to their creators if created, according to our best understanding, then they are at least as useless to make as the ‘spray shit in your eyes’ machine. We might accidentally make them due to error, but there is not some deep economic force pulling us to make them. If unaligned superintelligence destroys the world with high probability when you ask it to do a thing, then this is the category it is in, and it is not strange for its designs to just rot in the scrap-heap, with the machine that sprays shit in your eyes and the machine that spreads caviar on roads.

Ok, but maybe the relevant actors are very committed to being wrong about whether unaligned superintelligence would be a great thing to deploy. Or maybe you think the situation is less immediately dire and building existentially risky AI really would be good for the people making decisions (e.g. because the costs won’t arrive for a while, and the people care a lot about a shot at scientific success relative to a chunk of the future). If the apparent economic incentives are large, are technologies unavoidable?

Extremely valuable technologies

It doesn’t look like it to me. Here are a few technologies which I’d guess have substantial economic value, where research progress or uptake appears to be drastically slower than it could be, for reasons of concern about safety or ethics:

- Huge amounts of medical research, including really important medical research, e.g. the FDA banned human trials of strep A vaccines from the 70s to the 2000s, in spite of 500,000 global deaths every year. A lot of people also died while covid vaccines went through all the proper trials.

- Nuclear power

- Fracking

- Various genetics things: genetic modification of foods, gene drives, early recombinant DNA researchers famously organized a moratorium and then ongoing research guidelines including prohibition of certain experiments (see the Asilomar Conference)

- Nuclear, biological, and maybe chemical weapons (or maybe these just aren’t useful)

- Various human reproductive innovation: cloning of humans, genetic manipulation of humans (a notable example of an economically valuable technology that is to my knowledge barely pursued across different countries, without explicit coordination between those countries, even though it would make those countries more competitive. Someone used CRISPR on babies in China, but was imprisoned for it.)

- Recreational drug development

- Geoengineering

- Much of science about humans? I recently ran this survey, and was reminded how encumbering ethical rules are for even incredibly innocuous research. As far as I could tell the EU now makes it illegal to collect data in the EU unless you promise to delete the data from anywhere that it might have gotten to if the person who gave you the data wishes for that at some point. In all, dealing with this and IRB-related things added maybe more than half of the effort of the project. Plausibly I misunderstand the rules, but I doubt other researchers are radically better at figuring them out than I am.

- There are probably examples from fields considered distasteful or embarrassing to associate with, but it’s hard as an outsider to tell which fields are genuinely hopeless versus erroneously considered so. If there are economically valuable health interventions among those considered wooish, I imagine they would be much slower to be identified and pursued by scientists with good reputations than a similarly promising technology not marred in that way. Scientific research into intelligence is more clearly slowed by stigma, but it is less clear to me what the economically valuable upshot would be.

- (I think there are many other things that could be in this list, but I don’t have time to review them at the moment. This page might collect more of them in future.)

It seems to me that intentionally slowing down progress in technologies to give time for even probably-excessive caution is commonplace. (And this is just looking at things slowed down over caution or ethics specifically; probably there are also other reasons things get slowed down.)

Furthermore, among valuable technologies that nobody is especially trying to slow down, it seems common enough for progress to be massively slowed by relatively minor obstacles, which is further evidence for a lack of overpowering strength of the economic forces at play. For instance, Fleming first took notice of mold’s effect on bacteria in 1928, but nobody took a serious, high-effort shot at developing it as a drug until 1939. Furthermore, in the thousands of years preceding these events, various people noticed numerous times that mold, other fungi or plants inhibited bacterial growth, but didn’t exploit this observation even enough for it not to be considered a new discovery in the 1920s. Meanwhile, people dying of infection was quite a thing. In 1930 about 300,000 Americans died of bacterial illnesses per year (around 250/100k).

My guess is that people make real choices about technology, and they do so in the face of economic forces that are feebler than commonly thought.

Restraint is not terrorism, usually

I think people have historically imagined weird things when they think of ‘slowing down AI’. I posit that their central image is sometimes terrorism (which understandably they don’t want to think about for very long), and sometimes some sort of implausibly utopian global agreement.

Here are some other things that ‘slow down AI capabilities’ could look like (where the best positioned person to carry out each one differs, but if you are not that person, you could e.g. talk to someone who is):

- Don’t actively forward AI progress, e.g. by devoting your life or millions of dollars to it (this one is often considered already)

- Try to convince researchers, funders, hardware manufacturers, institutions etc. that they too should stop actively forwarding AI progress

- Try to get any of those people to stop actively forwarding AI progress even if they don’t agree with you: through negotiation, payments, public reproof, or other activistic means.

- Try to get the message to the world that AI is heading toward being seriously endangering. If AI progress is broadly condemned, this will trickle into myriad decisions: job choices, lab policies, national laws. To do this, for instance produce compelling demos of risk, agitate for stigmatization of risky actions, write science fiction illustrating the problems broadly and evocatively (I think this has actually been helpful repeatedly in the past), go on TV, write opinion pieces, help organize and empower the people who are already concerned, etc.

- Help organize the researchers who think their work is potentially omnicidal into coordinated action on not doing it.

- Move AI resources from dangerous research to other research. Move investments from projects that lead to large but poorly understood capabilities, to projects that lead to understanding these things e.g. theory before scaling (see differential technological development in general).

- Formulate specific precautions for AI researchers and labs to take in different well-defined future situations, Asilomar Conference style. These could include more intense vetting by particular parties or methods, modifying experiments, or pausing lines of inquiry entirely. Organize labs to coordinate on these.

- Reduce available compute for AI, e.g. via regulation of production and trade, seller choices, purchasing compute, trade strategy.

- At labs, choose policies that slow down other labs, e.g. reduce public helpful research outputs

- Alter the publishing system and incentives to reduce research dissemination. E.g. a journal verifies research results and releases the fact of their publication without any details, maintains records of research priority for later release, and distributes funding for participation. (This is how Szilárd and co. arranged the mitigation of 1940s nuclear research helping Germany, except I’m not sure if the compensatory funding idea was used.)

- The above actions would be taken through choices made by scientists, or funders, or legislators, or labs, or public observers, etc. Communicate with those parties, or help them act.

Coordination is not miraculous world government, usually

The common image of coordination seems to be explicit, centralized, involving of every party in the world, and something like cooperating on a prisoners’ dilemma: incentives push every rational party toward defection at all times, yet maybe through deontological virtues or sophisticated decision theories or strong international treaties, everyone manages to not defect for enough teetering moments to find another solution.

That is a possible way coordination could be. (And I think one that shouldn’t be seen as so hopeless: the world has actually coordinated on some impressive things, e.g. nuclear non-proliferation.) But if what you want is for lots of people to coincide in doing one thing when they might have done another, then there are quite a few ways of achieving that.

Consider some other case studies of coordinated behavior:

- Not eating sand. The whole world coordinates to barely eat any sand at all. How do they manage it? It is actually not in almost anyone’s interest to eat sand, so the mere maintenance of sufficient epistemological health to have this widely recognized does the job.

- Eschewing bestiality: probably some people think bestiality is moral, but enough don’t that engaging in it would risk huge stigma. Thus the world coordinates fairly well on doing very little of it.

- Not wearing Victorian attire on the streets: this is similar but with no moral blame involved. Historic dress is arguably often more aesthetic than modern dress, but even people who strongly agree find it unthinkable to wear it in general, and assiduously avoid it except for when they have ‘excuses’ such as a special occasion. This is a very strong coordination against what appears to otherwise be a ubiquitous incentive (to be nicer to look at). As far as I can tell, it’s powered substantially by the fact that it is ‘not done’ and would now be weird to do otherwise. (Which is a very general-purpose mechanism.)

- Political correctness: public discourse has strong norms about what it is okay to say, which do not appear to derive from a vast majority of people agreeing about this (as with bestiality say). New ideas about what constitutes being politically correct sometimes spread widely. This coordinated behavior seems to be roughly due to decentralized application of social punishment, from both a core of proponents, and from people who fear punishment for not punishing others. Then maybe also from people who are concerned by non-adherence to what now appears to be the norm given the actions of the others. This differs from the above examples, because it seems like it could persist even with a very small set of people agreeing with the object-level reasons for a norm. If failing to advocate for the norm gets you publicly shamed by advocates, then you might tend to advocate for it, making the pressure stronger for everyone else.

These are all cases of very broadscale coordination of behavior, none of which involve prisoners’ dilemma type situations, or people making explicit agreements which they then have an incentive to break. They do not involve centralized organization of huge multilateral agreements. Coordinated behavior can come from everyone individually wanting to make a certain choice for correlated reasons, or from people wanting to do things that those around them are doing, or from distributed behavioral dynamics such as punishment of violations, or from collaboration in thinking about a topic.

You might think they are weird examples that aren’t very related to AI. I think, a) it’s important to remember the plethora of weird dynamics that actually arise in human group behavior and not get carried away theorizing about AI in a world drained of everything but prisoners’ dilemmas and binding commitments, and b) the above are actually all potentially relevant dynamics here.

If AI in fact poses a large existential risk within our lifetimes, such that it is net bad for any particular individual, then the situation in theory looks a lot like that in the ‘avoiding eating sand’ case. It’s an option that a rational person wouldn’t want to take if they were just alone and not facing any kind of multi-agent situation. If AI is that dangerous, then not taking this inferior option could largely come from a coordination mechanism as simple as distribution of good information. (You still need to deal with irrational people and people with unusual values.)

But even failing coordinated caution from ubiquitous insight into the situation, other models might work. For instance, if there came to be somewhat widespread concern that AI research is bad, that might substantially lessen participation in it, beyond the set of people who are concerned, via mechanisms similar to those described above. Or it might give rise to a wide crop of local regulation, enforcing whatever behavior is deemed acceptable. Such regulation need not be centrally organized across the world to serve the purpose of coordinating the world, as long as it grew up in different places similarly. Which might happen because different locales have similar interests (all rational governments should be similarly concerned about losing power to automated power-seeking systems with unverifiable goals), or because, as with individuals, there are social dynamics which support norms arising in a non-centralized way.

Okay, maybe in principle you might hope to coordinate to not do self-destructive things, but realistically, if the US tries to slow down, won’t China or Facebook or someone less cautious take over the world?

Let’s be more careful about the game we are playing, game-theoretically speaking.

The arms race

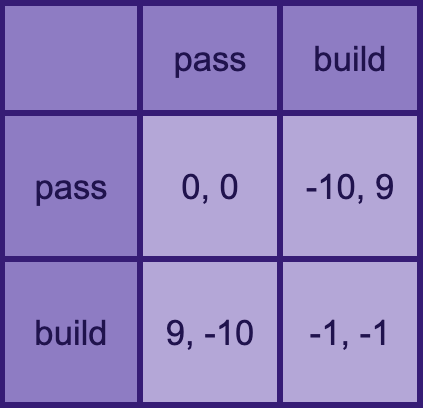

What’s an arms race, recreation theoretically? It’s an iterated prisoners’ dilemma, appears to me. Every spherical seems to be one thing like this:

On this instance, constructing weapons prices one unit. If anybody ends the spherical with extra weapons than anybody else, they take all of their stuff (ten models).

In a single spherical of the sport it’s at all times higher to construct weapons than not (assuming your actions are devoid of implications about your opponent’s actions). And it’s at all times higher to get the hell out of this recreation.

This isn’t very like what the present AI scenario seems to be like, if you happen to suppose AI poses a considerable danger of destroying the world.

The suicide race

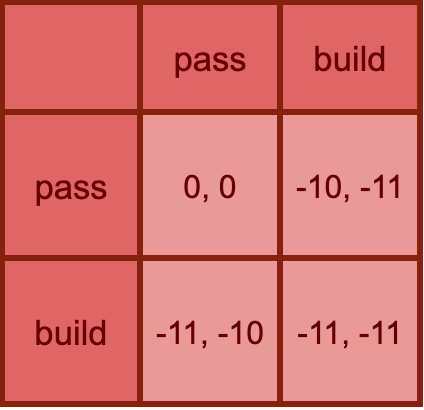

A more in-depth mannequin: as above besides if anybody chooses to construct, all the pieces is destroyed (everybody loses all their stuff—ten models of worth—in addition to one unit in the event that they constructed).

That is importantly totally different from the basic ‘arms race’ in that urgent the ‘everybody loses now’ button isn’t an equilibrium technique.

That’s: for anybody who thinks highly effective misaligned AI represents near-certain demise, the existence of different doable AI builders isn’t any motive to ‘race’.

However few persons are that pessimistic. How a few milder model the place there’s an excellent likelihood that the gamers ‘align the AI’?

The security-or-suicide race

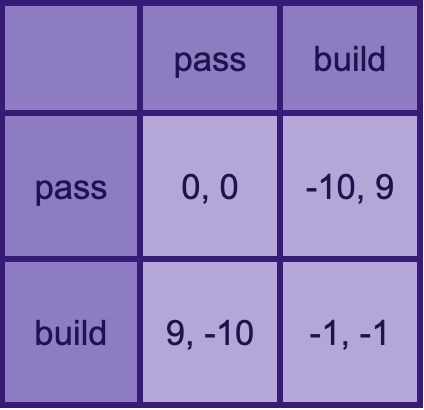

Okay, let’s do a recreation just like the final however the place if anybody builds, all the pieces is simply perhaps destroyed (minus ten to all), and within the case of survival, everybody returns to the unique arms race enjoyable of redistributing stuff primarily based on who constructed greater than whom (+10 to a builder and -10 to a non-builder if there may be considered one of every). So if you happen to construct AI alone, and get fortunate on the probabilistic apocalypse, can nonetheless win large.

Let’s take 50% as the possibility of doom if any constructing occurs. Then now we have a recreation whose anticipated payoffs are half approach between these within the final two video games:

Now you wish to do regardless of the different participant is doing: construct in the event that they’ll construct, cross in the event that they’ll cross.

If the chances of destroying the world have been very low, this is able to turn out to be the unique arms race, and also you’d at all times wish to construct. If very excessive, it could turn out to be the suicide race, and also you’d by no means wish to construct. What the chances must be in the actual world to get you into one thing like these totally different phases goes to be totally different, as a result of all these parameters are made up (the draw back of human extinction is just not 10x the analysis prices of constructing highly effective AI, for example).
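For anyone who wants to poke at these three toy games, here is a minimal Python sketch of the rules as stated in the text, for two players. It is an illustration only, not a reproduction of the payoff tables in the figures. The build cost of 1 and the stake of 10 come from the text; the function names and the `p_doom` parameter are made up for this example.

```python
BUILD_COST = 1  # cost of building, as stated in the text
STAKE = 10      # value of one player's "stuff", as stated in the text


def arms_race(me_builds: bool, other_builds: bool) -> int:
    """Payoff to 'me' in one round of the plain arms race."""
    payoff = -BUILD_COST if me_builds else 0
    if me_builds and not other_builds:
        payoff += STAKE  # I out-build the other player and take their stuff
    elif other_builds and not me_builds:
        payoff -= STAKE  # they out-build me and take my stuff
    return payoff


def suicide_race(me_builds: bool, other_builds: bool) -> int:
    """Payoff to 'me' when any building destroys everything."""
    payoff = -BUILD_COST if me_builds else 0
    if me_builds or other_builds:
        payoff -= STAKE  # everyone loses all their stuff
    return payoff


def expected_payoff(me_builds: bool, other_builds: bool, p_doom: float) -> float:
    """Safety-or-suicide race: with probability p_doom the suicide-race
    outcome happens, otherwise the ordinary arms-race outcome."""
    return ((1 - p_doom) * arms_race(me_builds, other_builds)
            + p_doom * suicide_race(me_builds, other_builds))


def best_response(other_builds: bool, p_doom: float) -> str:
    """Return 'build' if building strictly beats passing, else 'pass'."""
    build = expected_payoff(True, other_builds, p_doom)
    hold = expected_payoff(False, other_builds, p_doom)
    return "build" if build > hold else "pass"


if __name__ == "__main__":
    for p in (0.0, 0.5, 1.0):
        print(f"p_doom={p}: other passes -> {best_response(False, p)}, "
              f"other builds -> {best_response(True, p)}")
```

With `p_doom = 0.5` this gives -1 for a lone builder, -6 for each of two builders and -10 for a lone non-builder, so the best response is to match the other player. With these made-up numbers, ‘always build’ only wins out when `p_doom` is below 0.45, and ‘never build’ wins out above 0.9; those thresholds are artifacts of the toy parameters, not estimates of anything.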

But my point stands: even in terms of simplish models, it’s very non-obvious that we are in or near an arms race. And therefore, very non-obvious that racing to build advanced AI faster is even promising at a first pass.

In less game-theoretic terms: if you don’t seem anywhere near solving alignment, then racing as hard as you can to be the one who it falls upon to have solved alignment (especially if that means having less time to do so, though I haven’t discussed that here) is probably unstrategic. Having more ideologically pro-safety AI designers win an ‘arms race’ against less concerned teams is futile if you don’t have a way for such people to implement enough safety to actually not die, which seems like a very live possibility. (Robby Bensinger and maybe Andrew Critch somewhere make similar points.)

Conversations with my friends on this kind of topic can go like this:

Me: there’s no actual incentive to race if the prize is mutual demise

Them: positive, but it surely isn’t—if there’s a sliver of hope of surviving unaligned AI, and in case your facet taking management in that case is a bit higher in expectation, and if they will construct highly effective AI anyway, then it’s price racing. The entire future is on the road!

Me: Wouldn’t you continue to be higher off directing your personal efforts to security, since your security efforts may also assist everybody find yourself with a secure AI?

Them: It would most likely solely assist them considerably—you don’t know if the opposite facet will use your security analysis. But in addition, it’s not simply that they’ve much less security analysis. Their values are most likely worse, by your lights.

Me: In the event that they succeed at alignment, are international values actually worse than native ones? Most likely any people with huge intelligence at hand have an identical shot at creating a wonderful human-ish utopia, no?

Them: No, even if you happen to’re proper that being equally human will get you to related values ultimately, the opposite events is likely to be extra silly than our facet, and lock-in7 some poorly thought-through model of their values that they need for the time being, or even when all initiatives can be so silly, our facet may need higher poorly thought-through values to lock in, in addition to being extra probably to make use of security concepts in any respect. Even when racing may be very prone to result in demise, and survival may be very prone to result in squandering many of the worth, in that sliver of glad worlds a lot is at stake in whether or not it’s us or another person doing the squandering!

Me: Hmm, appears difficult, I’m going to wish paper for this.

The complicated race/anti-race

Here is a spreadsheet of models you can make a copy of and play with.

The first model is like this:

- Each player divides their effort between safety and capabilities

- One player ‘wins’, i.e. builds ‘AGI’ (artificial general intelligence) first.

- P(Alice wins) is a logistic function of Alice’s capabilities investment relative to Bob’s

- Each player’s total safety is their own safety investment plus a fraction of the other’s safety investment.

- For each player there is some distribution of outcomes if they achieve safety, and a set of outcomes if they do not, which takes into account e.g. their proclivities for enacting stupid near-term lock-ins.

- The outcome is a distribution over winners and states of alignment, each of which is a distribution of worlds (e.g. utopia, near-term good lock-in..)

- That all gives us a number of utils (Delicious utils!)
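To make the first few ingredients concrete, here is a small Python sketch. It is not the spreadsheet's formula: measuring ‘relative’ investment as a simple difference, the `steepness` constant, and the `spillover` default of 0.5 are all assumptions made for illustration, as are the function names.

```python
import math


def p_alice_wins(alice_capabilities, bob_capabilities, steepness=5.0):
    """Logistic function of Alice's capabilities investment relative to Bob's.

    ASSUMPTION: 'relative' is taken as a difference, and `steepness` is a
    hypothetical parameter of this sketch.
    """
    advantage = alice_capabilities - bob_capabilities
    return 1.0 / (1.0 + math.exp(-steepness * advantage))


def total_safety(own_safety, other_safety, spillover=0.5):
    """Own safety investment plus a fraction (`spillover`) of the other's."""
    return own_safety + spillover * other_safety


# Alice puts 30% of her effort into safety; Bob puts in none.
alice_safety, bob_safety = 0.3, 0.0
alice_caps, bob_caps = 1.0 - alice_safety, 1.0 - bob_safety

print(p_alice_wins(alice_caps, bob_caps))      # below 0.5: Alice invests less in capabilities
print(total_safety(alice_safety, bob_safety))  # Alice's safety comes only from her own work
print(total_safety(bob_safety, alice_safety))  # Bob still gets a share of Alice's work
```

The point of the structure is visible even in this toy form: shifting effort to safety lowers your own chance of winning but raises the safety of whoever does win.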

The second model is the same except that instead of dividing effort between safety and capabilities, you choose a speed, and the amount of alignment being done by each party is an exogenous parameter.

These models probably aren’t very good, but so far support a key claim I want to make here: it’s pretty non-obvious whether one should go faster or slower in this kind of scenario. It’s sensitive to a lot of different parameters in plausible ranges.

Furthermore, I don’t think the results of quantitative analysis match people’s intuitions here.

For example, here’s a situation which I think sounds intuitively like a you-should-race world, but where in the first model above, you should actually go as slowly as possible (this should be the one plugged into the spreadsheet now):

- AI is pretty safe: unaligned AGI has a mere 7% chance of causing doom, plus a further 7% chance of causing short term lock-in of something mediocre

- Your opponent risks bad lock-in: If there’s a ‘lock-in’ of something mediocre, your opponent has a 5% chance of locking in something actively terrible, whereas you’ll always pick the good mediocre lock-in world (and mediocre lock-ins are either 5% as good as utopia, or -5% as good)

- Your opponent risks messing up utopia: In the event of aligned AGI, you will reliably achieve the best outcome, whereas your opponent has a 5% chance of ending up in a ‘mediocre bad’ scenario then too.

- Safety investment obliterates your chance of getting to AGI first: moving from no safety at all to full safety means you go from a 50% chance of being first to a 0% chance

- Your opponent is racing: Your opponent is investing everything in capabilities and nothing in safety

- Safety work helps others at a steep discount: your safety work contributes 50% to the other player’s safety

Your best bet here (on this model) is still to maximize safety investment. Why? Because by aggressively pursuing safety, you can get the other side half way to full safety, which is worth a lot more than the lost chance of winning. Especially since if you ‘win’, you do so without much safety, and your victory without safety is worse than your opponent’s victory with safety, even if that too is far from perfect.
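The scenario's numbers can be plugged into a rough Python sketch to see why full safety comes out ahead. This does not reproduce the spreadsheet. Three assumptions are mine and are marked in the comments: the chance of being first falls linearly with safety investment, the probability of alignment equals the winner's total safety, and unaligned AGI that causes neither doom nor lock-in ends up as well as aligned AGI would have. Utilities are scaled so that utopia is 1 and doom is 0.

```python
def expected_value(safety, spillover=0.5):
    """Expected utility when you put `safety` (0 to 1) of your effort into
    safety and your opponent puts in none."""
    # Outcome values and probabilities taken from the scenario's bullet points.
    utopia, doom = 1.0, 0.0
    mediocre_good, mediocre_bad = 0.05, -0.05
    p_doom, p_lock_in = 0.07, 0.07  # given unaligned AGI
    p_opponent_errs = 0.05

    # Value of the world when each player wins with aligned AGI.
    you_aligned = utopia
    opp_aligned = (1 - p_opponent_errs) * utopia + p_opponent_errs * mediocre_bad

    # Value of a mediocre lock-in chosen by each player.
    you_lock_in = mediocre_good
    opp_lock_in = (1 - p_opponent_errs) * mediocre_good + p_opponent_errs * mediocre_bad

    # ASSUMPTION: if unaligned AGI causes neither doom nor lock-in,
    # the winner ends up where they would have with aligned AGI.
    p_fine = 1 - p_doom - p_lock_in
    you_unaligned = p_doom * doom + p_lock_in * you_lock_in + p_fine * you_aligned
    opp_unaligned = p_doom * doom + p_lock_in * opp_lock_in + p_fine * opp_aligned

    # ASSUMPTION: your chance of being first falls linearly from 50% to 0%.
    p_you_win = 0.5 * (1 - safety)
    # ASSUMPTION: P(aligned) equals the winner's total safety.
    you_p_aligned = safety
    opp_p_aligned = spillover * safety

    you_win_value = you_p_aligned * you_aligned + (1 - you_p_aligned) * you_unaligned
    opp_win_value = opp_p_aligned * opp_aligned + (1 - opp_p_aligned) * opp_unaligned
    return p_you_win * you_win_value + (1 - p_you_win) * opp_win_value


levels = [i / 10 for i in range(11)]
best = max(levels, key=expected_value)
print(best, expected_value(0.0), expected_value(1.0))
```

Under these assumptions a grid search over safety levels picks full safety investment, with expected value rising from roughly 0.84 at no safety to roughly 0.88 at full safety. Different assumptions about the win probability or about what safety buys could change the answer, which is the sensitivity the text is pointing at.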

So if you are in a situation in this space, and the other party is racing, it’s not obvious if it is even in your narrow interests within the game to go faster at the expense of safety, though it may be.

These models are flawed in many ways, but I think they are better than the intuitive models that support arms-racing. My guess is that the next better still models remain nuanced.

Other equilibria and other games

Even if it would be in your interests to race if the other person were racing, ‘(do nothing, do nothing)’ is often an equilibrium too in these games. At least for various settings of the parameters. It doesn’t necessarily make sense to do nothing in the hope of getting to that equilibrium if you know your opponent to be mistaken about that and racing anyway, but in conjunction with communicating with your ‘opponent’, it seems like a theoretically good strategy.

This has all been assuming the structure of the game. I think the traditional response to an arms race situation is to remember that you are in a more elaborate world with all kinds of unmodeled affordances, and try to get out of the arms race.

Caution is cooperative

Another big concern is that pushing for slower AI progress is ‘defecting’ against AI researchers who are friends of the AI safety community.

For instance Steven Byrnes:

“I think that trying to slow down research towards AGI through regulation would fail, because everyone (politicians, voters, lobbyists, business, etc.) likes scientific research and technological development, it creates jobs, it cures diseases, etc. etc., and you’re saying we should have less of that. So I think the effort would fail, and also be massively counterproductive by making the community of AI researchers see the community of AGI safety / alignment people as their enemies, morons, weirdos, Luddites, whatever.”

(Also a good example of the view criticized earlier, that regulation of things that create jobs and cure diseases just doesn’t happen.)

Or Eliezer Yudkowsky, on worry that spreading fear about AI would alienate top AI labs:

I don’t think this is a natural or reasonable way to see things, because:

- The researchers themselves probably don’t want to destroy the world. Many of them also actually agree that AI is a serious existential risk. So in two natural ways, pushing for caution is cooperative with many if not most AI researchers.

- AI researchers do not have a moral right to endanger the world, that someone would be stepping on by requiring that they move more cautiously. Like, why does ‘cooperation’ look like the safety people bowing to what the more reckless capabilities people want, to the point of fearing to represent their actual interests, while the capabilities people uphold their side of the ‘cooperation’ by going ahead and building dangerous AI? This situation might make sense as a natural consequence of different people’s power in the situation. But then don’t call it a ‘cooperation’, from which safety-oriented parties would be dishonorably ‘defecting’ were they to consider exercising any power they did have.

It could be that people in charge of AI capabilities would respond negatively to AI safety people pushing for slower progress. But that should be called ‘we might get punished’ not ‘we shouldn’t defect’. ‘Defection’ has moral connotations that are not due. Calling one side pushing for their preferred outcome ‘defection’ unfairly disempowers them by wrongly setting commonsense morality against them.

At least if it is the safety side. If any of the available actions are ‘defection’ that the world in general should condemn, I claim that it is probably ‘building machines that will plausibly destroy the world, or standing by while it happens’.

(This would be more complicated if the people involved were confident that they wouldn’t destroy the world and I merely disagreed with them. But about half of surveyed researchers are actually more pessimistic than me. And in a situation where the median AI researcher thinks the field has a 5-10% chance of causing human extinction, how confident can any responsible person be in their own judgment that it is safe?)

On top of all that, I worry that highlighting the narrative that wanting more cautious progress is defection is further destructive, because it makes it more likely that AI capabilities people see AI safety people as thinking of themselves as betraying AI researchers, if anyone engages in any such efforts. Which makes the efforts more aggressive. Like, if every time you see friends, you refer to it as ‘cheating on my partner’, your partner may reasonably feel hurt by your continual desire to see friends, even though the activity itself is innocuous.

‘We’ are not the US, ‘we’ are not the AI safety community

“If ‘we’ try to slow down AI, then the other side might win.” “If ‘we’ ask for regulation, then it might harm ‘our’ relationships with AI capabilities companies.” Who are these ‘we’s? Why are people strategizing for those groups in particular?

Even if slowing AI were uncooperative, and it were important for the AI Safety community to cooperate with the AI capabilities community, couldn’t one of the many people not in the AI Safety community work on it?

I have a longstanding irritation with thoughtless talk about what ‘we’ should do, without regard for what collective one is speaking for. So I may be too sensitive about it here. But I think confusions arising from this have genuine consequences.

I think when people say ‘we’ here, they generally imagine that they are strategizing on behalf of, a) the AI safety community, b) the USA, c) themselves or d) they and their readers. But those are a small subset of people, and not even obviously the ones the speaker can most influence (does the fact that you are sitting in the US really make the US more likely to listen to your advice than e.g. Estonia? Yeah probably on average, but not infinitely much.) If these naturally identified-with groups don’t have good options, that hardly means there are no options to be had, or to be communicated to other parties. Could the speaker speak to a different ‘we’? Maybe someone in the ‘we’ the speaker has in mind knows someone not in that group? If there is a strategy for anyone in the world, and you can talk, then there is probably a strategy for you.

The starkest appearance of error along these lines to me is in writing off the slowing of AI as inherently destructive of relations between the AI safety community and other AI researchers. If we grant that such activity would be seen as a betrayal (which seems unreasonable to me, but maybe), surely it could only be a betrayal if carried out by the AI safety community. There are quite a lot of people who aren’t in the AI safety community and have a stake in this, so maybe some of them could do something. It seems like a huge oversight to give up on all slowing of AI progress because you are only considering affordances available to the AI Safety Community.

Another example: if the world were in the basic arms race situation sometimes imagined, and the United States would be willing to make laws to mitigate AI risk, but could not because China would barge ahead, then that means China is in a great place to mitigate AI risk. Unlike the US, China could propose mutual slowing down, and the US would go along. Maybe it’s not impossible to communicate this to relevant people in China.

An oddity of this kind of discussion which feels related is the persistent assumption that one’s ability to act is restricted to the United States. Maybe I fail to understand the extent to which Asia is an alien and distant land where agency doesn’t apply, but for instance I just wrote to like a thousand machine learning researchers there, and maybe a hundred wrote back, and it was a lot like interacting with people in the US.

I’m pretty ignorant about what interventions will work in any particular country, including the US, but I just think it’s weird to come to the table assuming that you can essentially only affect things in one country. Especially if the situation is that you believe you have unique knowledge about what is in the interests of people in other countries. Like, fair enough I would be deal-breaker-level pessimistic if you wanted to get an Asian government to elect you leader or something. But if you think advanced AI is highly likely to destroy the world, including other countries, then the situation is totally different. If you are right, then everyone’s incentives are basically aligned.

I more weakly suspect some related mental shortcut is misshaping the discussion of arms races in general. The thought that something is a ‘race’ seems much stickier than alternatives, even if the true incentives don’t really make it a race. Like, against the laws of game theory, people sort of expect the enemy to try to believe falsehoods, because it will better contribute to their racing. And this feels like realism. The uncertain details of billions of people one barely knows about, with all manner of interests and relationships, just really want to form themselves into an ‘us’ and a ‘them’ in zero-sum battle. This is a mental shortcut that could really kill us.

My impression is that in practice, for many of the technologies slowed down for risk or ethics, mentioned in section ‘Extremely valuable technologies’ above, countries with fairly disparate cultures have converged on similar approaches to caution. I take this as evidence that none of ethical thought, social influence, political power, or rationality are actually very siloed by country, and in general the ‘countries in contest’ model of everything isn’t very good.

Convincing people doesn’t seem that hard

When I say that ‘coordination’ can just look like popular opinion punishing an activity, or that other countries don’t have much real incentive to build machines that will kill them, I think a common objection is that convincing people of the real situation is hopeless. The picture seems to be that the argument for AI risk is extremely sophisticated and only able to be appreciated by the most elite of intellectual elites (e.g. it’s hard enough to convince professors on Twitter, so surely the masses are beyond its reach, and foreign governments too).

This doesn’t match my overall experience on various fronts.

Some observations:

- The median surveyed ML researcher seems to think AI will destroy humanity with 5-10% chance, as I mentioned

- Often people are already intellectually convinced but haven’t integrated that into their behavior, and it isn’t hard to help them organize to act on their tentative beliefs

- As noted by Scott, a lot of AI safety people have gone into AI capabilities including running AI capabilities orgs, so those people presumably consider AI to be risky already

- I don’t remember ever having any trouble discussing AI risk with random strangers. Sometimes they are also fairly worried (e.g. a makeup artist at Sephora gave an extended rant about the dangers of advanced AI, and my driver in Santiago excitedly concurred and showed me Homo Deus open on his front seat). The form of the concerns is probably a bit different from those of the AI Safety community, but I think broadly closer to, ‘AI agents are going to kill us all’ than ‘algorithmic bias will be bad’. I can’t remember how many times I have tried this, but pre-pandemic I used to talk to Uber drivers a lot, due to having no idea how to avoid it. I explained AI risk to my therapist recently, as an aside regarding his sense that I might be catastrophizing, and I feel like it went okay, though we may need to discuss again.

- My impression is that most people haven’t even come into contact with the arguments that might bring one to agree precisely with the AI safety community. For instance, my guess is that a lot of people assume that someone actually programmed modern AI systems, and if you told them that in fact they are random connections jiggled in a gainful direction unfathomably many times, just as mysterious to their makers, they might also fear misalignment.

- Nick Bostrom, Eliezer Yudkowsky, and other early thinkers have had decent success at convincing a bunch of other people to worry about this problem, e.g. me. And to my knowledge, without writing any compelling and accessible account of why one should do so that would take less than two hours to read.

- I arrogantly think I could write a broadly compelling and accessible case for AI risk

My weak guess is that immovable AI risk skeptics are concentrated in intellectual circles near the AI risk people, especially on Twitter, and that people with less of a horse in the intellectual status race are more readily like, ‘oh yeah, superintelligent robots are probably bad’. It’s not clear that most people even need convincing that there is a problem, though they don’t seem to consider it the most pressing problem in the world. (Though all of this may be different in cultures I am more distant from, e.g. in China.) I’m pretty non-confident about this, but skimming survey evidence suggests there is substantial though not overwhelming public concern about AI in the US.

Do you need to convince everyone?

I could be wrong, but I’d guess convincing the ten most relevant leaders of AI labs that this is a massive deal, worth prioritizing, actually gets you a decent slow-down. I don’t have much evidence for this.

Buying time is big

You probably aren’t going to avoid AGI forever, and maybe huge efforts will buy you a couple of years. Could that even be worth it?

Seems pretty plausible:

- Whatever kind of other AI safety research or policy work people were doing could be happening at a non-negligible rate per year. (Along with all other efforts to make the situation better: if you buy a year, that’s eight billion extra person years of time, so only a tiny bit has to be spent usefully for this to be big. If a lot of people are worried, that doesn’t seem crazy.)

- Geopolitics just changes pretty often. If you seriously think a big determiner of how badly things go is inability to coordinate with certain groups, then every year gets you non-negligible opportunities for the situation changing in a favorable way.

- Public opinion can change a lot quickly. If you can only buy one year, you might still be buying a decent shot of people coming around and granting you more years. Perhaps especially if new evidence is actively avalanching in (people changed their minds a lot in February 2020).

- Other stuff happens over time. If you can take your doom today or after a couple of years of random events happening, the latter seems non-negligibly better in general.

It is also not obvious to me that these are the time-scales on the table. My sense is that things which are slowed down by regulation or general societal distaste are often slowed down much more than a year or two, and Eliezer’s stories presume that the world is full of collectives either trying to destroy the world or badly mistaken about it, which is not a foregone conclusion.

Delay is probably finite by default

While some people worry that any delay would be so short as to be negligible, others seem to fear that if AI research were halted, it would never start again and we would fail to go to space or something. This sounds so wild to me that I think I’m missing too much of the reasoning to usefully counterargue.

Obstruction doesn’t need discernment

Another purported risk of trying to slow things down is that it might involve getting regulators involved, and they might be fairly ignorant about the details of futuristic AI, and so tenaciously make the wrong regulations. Relatedly, if you call on the public to worry about this, they might have inexacting worries that call for impotent solutions and distract from the real disaster.

I don’t buy it. If all you want is to slow down a broad area of activity, my guess is that ignorant regulations do just fine at that every day (usually unintentionally). In particular, my impression is that if you mess up regulating things, a usual outcome is that many things are randomly slower than hoped. If you wanted to speed a specific thing up, that’s a very different story, and might require understanding the thing in question.

The same goes for social opposition. Nobody need understand the details of how genetic engineering works for its ascendancy to be seriously impaired by people not liking it. Maybe by their lights it still isn’t optimally undermined yet, but just not liking anything in the vicinity does go a long way.

This has nothing to do with regulation or social shaming specifically. You need to understand much less about a car or a country or a conversation to mess it up than to make it run well. It is a consequence of the general rule that there are many more ways for a thing to be dysfunctional than functional: destruction is easier than creation.

Back at the object level, I tentatively expect efforts to broadly slow down things in the vicinity of AI progress to slow down AI progress on net, even if poorly aimed.

Maybe it’s actually better for safety to have AI go fast at present, for various reasons. Notably:

- Implementing what can be implemented as soon as possible probably means smoother progress, which is probably safer because a) it makes it harder for one party to shoot ahead of everyone and gain power, and b) people make better choices all around if they are correct about what is going on (e.g. they don’t put trust in systems that turn out to be much more powerful than expected).

- If the main thing achieved by slowing down AI progress is more time for safety research, and safety research is more effective when carried out in the context of more advanced AI, and there is a certain amount of slowing down that can be done (e.g. because one is in fact in an arms race but has some lead over competitors), then it might be better to use one’s slowing budget later.

- If there is some underlying curve of potential for progress (e.g. if money that might be spent on hardware just grows a certain amount each year), then perhaps if we push ahead now that will naturally require they be slower later, so it won’t affect the overall time to powerful AI, but will mean we spend more time in the informative pre-catastrophic-AI era.

- (More things go here I think)

And maybe it’s worth it to work on capabilities research at present, for instance because:

- As a researcher, working on capabilities prepares you to work on safety

- You think the room where AI happens will afford good options for a person who cares about safety

These all seem plausible. But also plausibly wrong. I don’t know of a decisive analysis of any of these considerations, and am not going to do one here. My impression is that they could basically all go either way.

I am actually particularly skeptical of the final argument, because if you believe what I take to be the normal argument for AI risk (that superhuman artificial agents won’t have acceptable values, and will aggressively manifest whatever values they do have, to the eventual annihilation of humanity) then the sentiments of the people turning on such machines seem like a very small factor, so long as they still turn the machines on. And I suspect that ‘having a person with my values doing X’ is commonly overrated. But the world is messier than these models, and I’d still pay a lot to be in the room to try.

It’s not clear what role these psychological characters should play in a rational assessment of how to act, but I think they do play a role, so I want to argue about them.

Technological choice is not luddism

Some technologies are better than others [citation not needed]. The best pro-technology visions should disproportionately involve awesome technologies and avoid shitty technologies, I claim. If you think AGI is highly likely to destroy the world, then it is the pinnacle of shittiness as a technology. Being opposed to having it in your techno-utopia is about as luddite as refusing to have radioactive toothpaste there. Colloquially, Luddites are against progress if it comes as technology. Even if that’s a terrible position, its wise reversal is not the endorsement of all ‘technology’, regardless of whether it comes as progress.

Non-AGI visions of near-term thriving

Perhaps slowing down AI progress means foregoing our own generation’s hope for life-changing technologies. Some people thus find it psychologically difficult to aim for less AI progress (with its real personal costs), rather than shooting for the perhaps unlikely ‘safe AGI soon’ scenario.

I’m not sure that this is a real dilemma. The narrow AI progress we have seen already (i.e. further applications of current techniques at current scales) seems plausibly able to help a lot with longevity and other medicine for instance. And to the extent AI efforts could be focused on e.g. medically relevant narrow systems over creating agentic scheming gods, it doesn’t sound crazy to imagine making more progress on anti-aging etc as a result (even before taking into account the probability that the agentic scheming god does not prioritize your physical wellbeing as hoped). Others disagree with me here.

Robust priors vs. specific galaxy-brained models

There are things that are robustly good in the world, and things that are good on highly specific inside-view models and terrible if those models are wrong. Slowing dangerous tech development seems like the former, whereas forwarding arms races for dangerous tech between world superpowers seems more like the latter. There is a general question of how much to trust your reasoning and risk the galaxy-brained plan. But whatever your take on that, I think we should all agree that the less thought you have put into it, the more you should regress to the robustly good actions. Like, if it just occurred to you to take out a large loan to buy a fancy car, you probably shouldn’t do it because most of the time it’s a poor choice. Whereas if you have been thinking about it for a month, you might be sure enough that you are in the rare situation where it will pay off.

On this particular topic, it feels like people are going with the specific galaxy-brained inside-view terrible-if-wrong model off the bat, then not thinking about it more.

Cheems mindset/can’t do attitude

Suppose you have a friend, and you say ‘let’s go to the beach’ to them. Sometimes the friend is like ‘hell yes’ and then even if you don’t have towels or a mode of transport or time or a beach, you make it happen. Other times, even if you have all of those things, and your friend nominally wants to go to the beach, they will note that they have a package coming later, and that it might be windy, and their jacket needs washing. And when you solve those problems, they will note that it’s not that long until meal time. You might infer that in the latter case your friend just doesn’t want to go to the beach. And sometimes that is the main thing going on! But I think there are also broader differences in attitudes: sometimes people are looking for ways to make things happen, and sometimes they are looking for reasons that they can’t happen. This is sometimes called a ‘cheems attitude’, or I like to call it (more accessibly) a ‘can’t do attitude’.

My experience in talking about slowing down AI with people is that they seem to have a can’t do attitude. They don’t want it to be a reasonable course: they want to write it off.

Which both seems suboptimal, and is strange in contrast with historical attitudes to more technical problem-solving. (As highlighted in my dialogue from the start of the post.)

It seems to me that if the same degree of can’t-do attitude were applied to technical safety, there would be no AI safety community because in 2005 Eliezer would have noticed any obstacles to alignment and given up and gone home.

To quote a friend on this, what would it look like if we *actually tried*?

This has been a miscellany of critiques in opposition to a pile of causes I’ve met for not fascinated about slowing down AI progress. I don’t suppose we’ve seen a lot motive right here to be very pessimistic about slowing down AI, not to mention motive for not even fascinated about it.

I could go either way on whether any interventions to slow down AI in the near term are a good idea. My tentative guess is yes, but my main point here is just that we should think about it.

A lot of opinions on this subject seem to me to be poorly thought through, in error, and to have wrongly repelled the further thought that might rectify them. I hope to have helped a bit here by examining some such considerations enough to demonstrate that there are no good grounds for immediate dismissal. There are difficulties and questions, but if the same standards for ambition were applied here as elsewhere, I think we would see answers and action.

Acknowledgements

Thanks to Adam Scholl, Matthijs Maas, Joe Carlsmith, Ben Weinstein-Raun, Ronny Fernandez, Aysja Johnson, Jaan Tallinn, Rick Korzekwa, Owain Evans, Andrew Critch, Michael Vassar, Jessica Taylor, Rohin Shah, Jeffrey Heninger, Zach Stein-Perlman, Anthony Aguirre, Matthew Barnett, David Krueger, Harlan Stewart, Rafe Kennedy, Nick Beckstead, Leopold Aschenbrenner, Michaël Trazzi, Oliver Habryka, Shahar Avin, Luke Muehlhauser, Michael Nielsen, Nathan Young and quite a few others for discussion and/or encouragement.

Notes

I haven’t heard this in recent times, so maybe views have changed. An example from earlier times: Nick Beckstead, 2015: “One idea we sometimes hear is that it would be harmful to speed up the development of artificial intelligence because not enough work has been done to ensure that when very advanced artificial intelligence is created, it will be safe. This problem, it is argued, would be even worse if progress in the field accelerated. However, very advanced artificial intelligence could be a useful tool for overcoming other potential global catastrophic risks. If it comes sooner – and the world manages to avoid the risks that it poses directly – the world will spend less time at risk from these other factors….

I found that speeding up advanced artificial intelligence – according to my simple interpretation of these survey results – could easily result in reduced net exposure to the most extreme global catastrophic risks…”

This is closely related to Bostrom’s Technological completion conjecture: “If scientific and technological development efforts do not effectively cease, then all important basic capabilities that could be obtained through some possible technology will be obtained.” (Bostrom, Superintelligence, p. 228, Chapter 14, 2014)

Bostrom illustrates this kind of position (though apparently rejects it; from Superintelligence, found here): “Suppose that a policymaker proposes to cut funding for a certain research field, out of concern for the risks or long-term consequences of some hypothetical technology that might eventually grow from its soil. She can then expect a howl of opposition from the research community. Scientists and their public advocates often say that it is futile to try to control the evolution of technology by blocking research. If some technology is feasible (the argument goes) it will be developed regardless of any particular policymaker’s scruples about speculative future risks. Indeed, the more powerful the capabilities that a line of development promises to produce, the surer we can be that somebody, somewhere, will be motivated to pursue it. Funding cuts will not stop progress or forestall its concomitant dangers.”

This kind of thing is also discussed by Dafoe and Sundaram, Maas & Beard.

(Some inspiration from Matthijs Maas’ spreadsheet, from Paths Untaken, and from GPT-3.)

From a private conversation with Rick Korzekwa, who may have read https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1139110/ and an internal draft at AI Impacts, probably forthcoming.

More here and here. I haven’t read any of these, but it’s been a topic of discussion for a while.

“To aid in promoting secrecy, schemes to improve incentives were devised. One method sometimes used was for authors to send papers to journals to establish their claim to the finding but ask that publication of the papers be delayed indefinitely. Szilárd also suggested offering funding in place of credit in the short term for scientists willing to submit to secrecy and organizing limited circulation of key papers.” – Me, previously

‘Lock-in’ of values is the act of using powerful technology such as AI to ensure that specific values will stably control the future.

And also in Britain:

‘This paper discusses the results of a nationally representative survey of the UK population on their perceptions of AI…the most common visions of the impact of AI elicit significant anxiety. Only two of the eight narratives elicited more excitement than concern (AI making life easier, and extending life). Respondents felt they had no control over AI’s development, citing the power of corporations or government, or versions of technological determinism. Negotiating the deployment of AI will require contending with these anxieties.’

Or so worries Eliezer Yudkowsky:

In MIRI announces new “Death With Dignity” strategy:

- “… this isn’t primarily a social-political problem, of just getting people to listen. Even if DeepMind listened, and Anthropic knew, and they both backed off from destroying the world, that would just mean Facebook AI Research destroyed the world a year(?) later.”

In AGI Ruin: A List of Lethalities:

- “We can’t just “decide not to build AGI” because GPUs are everywhere, and knowledge of algorithms is constantly being improved and published; 2 years after the leading actor has the capability to destroy the world, 5 other actors will have the capability to destroy the world. The given lethal challenge is to solve within a time limit, driven by the dynamic in which, over time, increasingly weak actors with a smaller and smaller fraction of total computing power, become able to build AGI and destroy the world. Powerful actors all refraining in unison from doing the suicidal thing just delays this time limit – it does not lift it, unless computer hardware and computer software progress are both brought to complete severe halts across the whole Earth. The current state of this cooperation to have every big actor refrain from doing the stupid thing, is that at present some large actors with a lot of researchers and computing power are led by people who vocally disdain all talk of AGI safety (eg Facebook AI Research). Note that needing to solve AGI alignment only within a time limit, but with unlimited safe retries for rapid experimentation on the full-powered system; or only on the first critical try, but with an unlimited time bound; would both be terrifically humanity-threatening challenges by historical standards individually.”

I’d guess real Luddites also thought the technological changes they faced were anti-progress, but in that case were they wrong to want to avoid them?

I hear this is an elaboration on this theme, but I haven’t read it.

Leopold Aschenbrenner partly defines ‘Burkean Longtermism’ thus: “We should be skeptical of any radical inside-view schemes to positively steer the long-run future, given the froth of uncertainty about the consequences of our actions.”

Image credit: Midjourney
