
Large language models (LLMs) are very good at answering simple questions but require special prompting techniques to handle complex tasks that need reasoning and planning. Often referred to as “System 2” techniques, these prompting schemes enhance the reasoning capabilities of LLMs by forcing them to generate intermediate steps toward solving a problem.

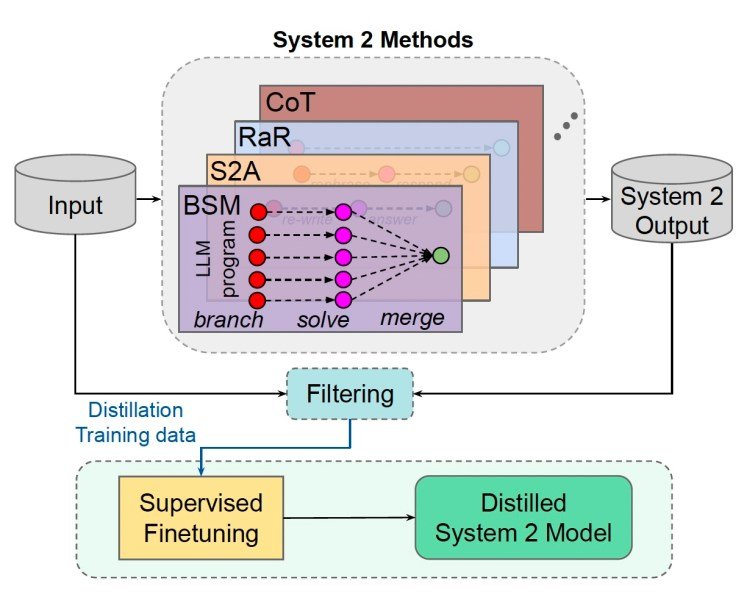

While effective, System 2 techniques make LLM applications slow and computationally expensive. In a new paper, researchers at Meta FAIR present “System 2 distillation,” a technique that teaches LLMs complex tasks without requiring intermediate steps.

System 1 and System 2 in cognitive science and LLMs

In cognitive science, System 1 and System 2 refer to two distinct modes of thinking. System 1 thinking is fast, intuitive and automatic. It’s what we use when recognizing patterns, making quick judgments, or understanding familiar symbols. For example, we use System 1 thinking to identify traffic signs, recognize faces, and associate basic symbols with their meanings.

System 2 thinking, on the other hand, is slow, deliberate and analytical. It requires conscious effort and is used for complex problem-solving, such as manipulating abstract symbols, solving mathematical equations or planning a trip.

LLMs are usually considered analogous to System 1 thinking. They can generate text very quickly, but they struggle with tasks that require deliberate reasoning and planning.

In recent years, AI researchers have shown that LLMs can be made to mimic System 2 thinking by prompting them to generate intermediate reasoning steps before providing their final answer. For example, “Chain of Thought” is a prompting technique that instructs the LLM to explain its reasoning process step by step, which often leads to more accurate results on logical reasoning tasks. Several System 2 prompting techniques are tailored to different tasks.
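
A minimal sketch of the difference between a direct (System 1) prompt and a Chain-of-Thought (System 2) prompt. The template wording, the “Answer:” line convention and the helper names `build_prompt` and `extract_final_answer` are hypothetical choices for illustration, not the prompts used in the paper.

```python
import re
from typing import Optional

# Hypothetical templates; the exact prompts used in the paper may differ.
DIRECT_TEMPLATE = "Question: {question}\nAnswer:"
COT_TEMPLATE = (
    "Question: {question}\n"
    "Let's think step by step, then give the final answer "
    "on its own line starting with 'Answer:'."
)


def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Return a direct (System 1) or Chain-of-Thought (System 2) prompt."""
    template = COT_TEMPLATE if chain_of_thought else DIRECT_TEMPLATE
    return template.format(question=question)


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, ignoring the reasoning.

    Returns None if the model did not follow the 'Answer:' convention.
    """
    matches = re.findall(r"^Answer:[ \t]*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


print(build_prompt("What is 17 + 25?", chain_of_thought=True))
print(extract_final_answer("17 + 20 = 37, and 37 + 5 = 42.\nAnswer: 42"))  # 42
```

The extra tokens the model spends on its reasoning are what make the System 2 prompt slower; only the last line is kept as the answer.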

“Many of these methods are shown to produce more accurate results due to this explicit reasoning, but typically do so at much higher inference cost and latency for a response,” the Meta AI researchers write. “Due to the latter, many of these approaches are not used in production systems, which mostly use System 1 generations.”

System 2 distillation

An interesting observation about System 2 thinking in humans is that when we repeatedly perform a task that requires deliberate effort, it gradually becomes ingrained in our System 1. For example, when you learn to drive, you use a lot of conscious effort to control the car, follow traffic rules and navigate. But as you gain more experience, driving becomes second nature. You no longer need to think about each step, and you can perform them intuitively and automatically.

This phenomenon inspired the Meta AI researchers to develop “System 2 distillation” for LLMs.

Distillation is a common technique in machine learning (ML), where a larger model, referred to as the “teacher,” is used to train a smaller model, or the “student.” For example, developers often use frontier models such as GPT-4 and Claude to generate training examples for smaller models such as Llama-2 7B.

However, System 2 distillation does not use a separate teacher model. Instead, the researchers found a way to distill the knowledge gained from the model’s own System 2 reasoning capabilities into its fast and compute-efficient System 1 generation.

The process starts by prompting the LLM to solve a problem using System 2 prompting techniques. The responses are then verified for correctness through an unsupervised mechanism. For example, they use “self-consistency,” where the model is given the same prompt multiple times. Its answers are then compared, and the one that shows up most often is considered the correct answer and is chosen for the distillation dataset. If the answers are too inconsistent, the example and its answers are discarded.
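
The self-consistency filter can be sketched as a majority vote with an agreement threshold. Here `sample` stands in for a hypothetical function that runs the System 2 prompt once with sampling enabled and returns the extracted final answer; the number of samples and the 50% threshold are assumptions for illustration, not values reported by the researchers.

```python
from collections import Counter
from typing import Callable, Optional


def self_consistent_answer(
    sample: Callable[[str], Optional[str]],
    prompt: str,
    num_samples: int = 8,
    min_agreement: float = 0.5,
) -> Optional[str]:
    """Majority vote over several sampled System 2 answers to the same prompt.

    Returns the most frequent answer, or None when agreement is below
    `min_agreement`, meaning the example is discarded.
    """
    answers = [sample(prompt) for _ in range(num_samples)]
    # Ignore samples whose final answer could not be parsed.
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None
    answer, count = votes.most_common(1)[0]
    # Agreement is measured over all samples, so unparseable ones count against it.
    if count / num_samples < min_agreement:
        return None
    return answer


# Usage with a stubbed sampler that replays fixed outputs.
outputs = iter(["42", "42", "41", "42"])
print(self_consistent_answer(lambda p: next(outputs), "What is 17 + 25?", num_samples=4))  # 42
```

With three of four samples agreeing, the majority answer is kept; with four different answers, the function returns None and the example never reaches the distillation dataset.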

Next, they discard the intermediate steps generated by System 2 reasoning and keep only the final answers. Finally, they fine-tune the model on the initial question and the answer. This allows the model to skip the reasoning steps and jump straight to the answer.
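
Dropping the reasoning and keeping only the question and the final answer might look like the following sketch. The `System2Result` record and the `prompt`/`completion` JSON Lines layout are assumptions (a common fine-tuning data convention), not the format used in the paper. The prompt is the bare question, without the System 2 instructions, so the fine-tuned model is trained to answer directly.

```python
import json
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class System2Result:
    question: str      # original input, without the System 2 prompt instructions
    reasoning: str     # intermediate steps produced by the System 2 prompt
    final_answer: str  # answer that passed the consistency filter


def to_distillation_records(results: Iterable[System2Result]) -> List[dict]:
    """Keep only (question -> final answer) pairs; the reasoning is dropped."""
    return [{"prompt": r.question, "completion": r.final_answer} for r in results]


def to_jsonl(records: List[dict]) -> str:
    """Serialize records as JSON Lines, one fine-tuning example per line."""
    return "".join(json.dumps(rec) + "\n" for rec in records)


results = [System2Result("What is 6 * 7?", "6 * 7 = 6 * 5 + 6 * 2 = 30 + 12 = 42", "42")]
print(to_jsonl(to_distillation_records(results)), end="")
# {"prompt": "What is 6 * 7?", "completion": "42"}
```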

System 2 distillation in action

The researchers evaluated their method on a range of reasoning tasks and four different System 2 prompting techniques. For the base model, they used Llama-2-70B, which is large enough to have the capacity for internalizing new knowledge.

The System 2 approaches they used in their experiments include Chain-of-Thought, System 2 Attention, Rephrase and Respond and Branch-Solve-Merge. Some of these techniques require the model to be prompted multiple times, which makes them both slow and expensive. For example, Rephrase and Respond first prompts the model to rephrase the original query with elaboration, and then re-prompts the model with the rephrased question. Branch-Solve-Merge is even more complicated and requires multiple back-and-forths with the model.
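
To make the cost difference concrete, here is a sketch that counts model calls for a direct prompt, Rephrase and Respond, and Branch-Solve-Merge. The model is a hypothetical `prompt -> completion` callable, the stub and all prompt wording are illustrative, and while the two-step structure of Rephrase and Respond and the branch, solve and merge stages follow the descriptions of those techniques, this is not the researchers' implementation.

```python
from typing import Callable, List

Model = Callable[[str], str]  # hypothetical: takes a prompt, returns a completion


class CountingModel:
    """Wraps a model callable and counts how many times it is invoked."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        self.calls += 1
        return self.model(prompt)


def direct(model: Model, question: str) -> str:
    """System 1: a single call, as a distilled model would be used."""
    return model(f"{question}\nAnswer:")


def rephrase_and_respond(model: Model, question: str) -> str:
    # Call 1: ask the model to restate and elaborate on the question.
    rephrased = model(f"Rephrase and expand this question:\n{question}")
    # Call 2: answer using the rephrased question.
    return model(f"Question: {question}\nRephrased: {rephrased}\nAnswer:")


def branch_solve_merge(model: Model, task: str) -> str:
    # Branch: one call that plans independent sub-tasks, one per line.
    plan = model(f"List independent sub-tasks for:\n{task}")
    subtasks: List[str] = [s.strip() for s in plan.splitlines() if s.strip()]
    # Solve: one call per sub-task.
    partials = [model(f"Task: {task}\nSub-task: {s}\nSolution:") for s in subtasks]
    # Merge: one final call that fuses the partial solutions.
    return model(f"Task: {task}\nCombine these partial solutions:\n" + "\n".join(partials))


def stub(prompt: str) -> str:
    """Fake model: returns three sub-tasks when asked to branch, 'ok' otherwise."""
    if prompt.startswith("List independent sub-tasks"):
        return "check accuracy\ncheck relevance\ncheck style"
    return "ok"


for method in (direct, rephrase_and_respond, branch_solve_merge):
    counted = CountingModel(stub)
    method(counted, "Evaluate this answer.")
    print(method.__name__, counted.calls)
# direct 1
# rephrase_and_respond 2
# branch_solve_merge 5
```

A direct prompt costs one call, Rephrase and Respond two, and Branch-Solve-Merge two plus one per sub-task. After distillation, the model is queried like `direct`, with a single call.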

The results show that System 2 distillation can significantly improve the performance of LLMs on complex reasoning tasks, often matching or exceeding the accuracy of the original System 2 methods. Additionally, the distilled models can generate responses much faster and with less compute because they don’t have to go through the intermediate reasoning steps.

For example, they found that distillation was successful for tasks that use System 2 Attention to deal with biased opinions or irrelevant information. It also showed impressive results on some reasoning tasks where Rephrase and Respond is used to clarify and improve responses, and on fine-grained evaluation and processing of tasks through Branch-Solve-Merge.
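
System 2 Attention works by first asking the model to regenerate the input context with irrelevant or opinionated material removed, and then answering from that regenerated context. The sketch below contrasts it with the single call a distilled model would make on the raw context. The function names, the stub model and the prompt wording are hypothetical.

```python
from typing import Callable, List

Model = Callable[[str], str]  # hypothetical: takes a prompt, returns a completion


def system2_attention(model: Model, context: str, question: str) -> str:
    # Step 1: regenerate the context, keeping only what is relevant to the question
    # and dropping opinions that could bias the answer.
    cleaned = model(
        "Rewrite the text below, keeping only information relevant to the question "
        "and removing opinions or irrelevant statements.\n"
        f"Text: {context}\nQuestion: {question}\nRewritten text:"
    )
    # Step 2: answer from the regenerated context only; the original is not shown again.
    return model(f"Context: {cleaned}\nQuestion: {question}\nAnswer:")


def distilled_system1(model: Model, context: str, question: str) -> str:
    # After distillation: one call on the raw context. The model would be expected
    # to ignore the distracting material without the rewriting step.
    return model(f"Context: {context}\nQuestion: {question}\nAnswer:")


prompts: List[str] = []


def stub(prompt: str) -> str:
    """Fake model that records its prompts and returns canned outputs."""
    prompts.append(prompt)
    if prompt.endswith("Rewritten text:"):
        return "Paris is the capital of France."
    return "Paris"


context = "I think the answer is Lyon. Paris is the capital of France."
print(system2_attention(stub, context, "What is the capital of France?"))  # Paris
print(len(prompts))  # 2
```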

“We have shown that in many cases it is possible to distill this System 2 reasoning into the outputs of the LLM without intermediate generations while maintaining, or sometimes even improving, performance,” the researchers write.

However, the researchers also found that, like humans, LLMs can’t distill all types of reasoning skills into their fast inference mechanism. For example, they were unable to successfully distill complex math reasoning tasks that required Chain-of-Thought prompting. This suggests that some tasks might always require deliberate reasoning.

There is much more to be learned about System 2 distillation, such as how well it works on smaller models and how distillation affects the model’s broader performance on tasks that were not included in the distillation training dataset. It is also worth noting that LLM benchmarks are often prone to contamination, where the model already has some knowledge of the test examples, resulting in inflated results on test sets.

Nevertheless, distillation will surely be a powerful optimization tool for mature LLM pipelines that perform specific tasks at each step.

“Looking forward, systems that can distill useful tasks in this way free up more time to spend on reasoning about the tasks that they cannot yet do well, just as humans do,” the researchers write.
