Microsoft has launched Phi-3, a new family of small language models (SLMs) that aim to deliver high performance and cost-effectiveness in AI applications. These models have shown strong results across benchmarks in language comprehension, reasoning, coding, and mathematics when compared to models of similar and larger sizes. The release of Phi-3 expands the options available to developers and businesses looking to leverage AI while balancing efficiency and cost.

Phi-3 Model Family and Availability

The first model in the Phi-3 lineup is Phi-3-mini, a 3.8B-parameter model now available on Azure AI Studio, Hugging Face, and Ollama. Phi-3-mini comes instruction-tuned, allowing it to be used “out of the box” without extensive fine-tuning. It features a context window of up to 128K tokens, the longest in its size class, enabling it to process larger text inputs without sacrificing performance.
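For readers who want to try the Ollama route, here is a minimal sketch, not an official example. It assumes a local Ollama server on its default port (11434) and that the model has already been pulled under the tag `phi3` (an assumption; check the Ollama model library for the current tag). It calls Ollama's `/api/generate` endpoint with streaming turned off, using only the Python standard library. `build_request` and `generate` are hypothetical helper names introduced for illustration.

```python
import json
import urllib.error
import urllib.request

# Assumptions: a local Ollama server on its default port, and the model
# pulled beforehand (for example with `ollama pull phi3`).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_NAME = "phi3"  # assumed Ollama tag for Phi-3-mini


def build_request(prompt: str, model: str = MODEL_NAME) -> dict:
    """Build the JSON body for a single, non-streaming generation call."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, url: str = OLLAMA_URL, timeout: float = 60.0) -> str:
    """Send one prompt to Ollama and return the generated text."""
    body = json.dumps(build_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # With "stream": False, Ollama replies with one JSON object whose
        # "response" field holds the full completion.
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("Explain what a small language model is in one sentence."))
    except OSError as exc:
        # URLError, HTTPError, and socket timeouts are all OSError subclasses,
        # so a missing server or model produces a message instead of a crash.
        print(f"Could not get a response from {OLLAMA_URL}: {exc}")
```

With the server running, the script prints a one-sentence answer; without it, it prints a connection error. Turning streaming off keeps the sketch short at the cost of waiting for the whole completion before anything is shown.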

To perform well across different hardware setups, Phi-3-mini has been optimized for ONNX Runtime and NVIDIA GPUs. Microsoft plans to expand the Phi-3 family soon with the release of Phi-3-small (7B parameters) and Phi-3-medium (14B parameters). These additional models will provide a wider range of options to meet diverse needs and budgets.

Picture: Microsoft

Phi-3 Performance and Development

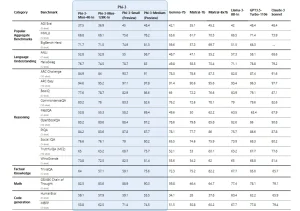

Microsoft reports that the Phi-3 models have demonstrated significant performance improvements over models of the same size, and even over larger models, across various benchmarks. According to the company, Phi-3-mini has outperformed models twice its size in language understanding and generation tasks, while Phi-3-small and Phi-3-medium have surpassed much larger models, such as GPT-3.5 Turbo, in certain evaluations.

Microsoft states that the development of the Phi-3 models followed the company’s Responsible AI principles and standards, which emphasize accountability, transparency, fairness, reliability, safety, privacy, security, and inclusiveness. The models have reportedly undergone safety training, evaluations, and red-teaming to ensure adherence to responsible AI deployment practices.

Picture: Microsoft

Potential Applications and Capabilities of Phi-3

The Phi-3 family is designed to excel in scenarios where resources are constrained, low latency is critical, or cost-effectiveness is a priority. These models have the potential to enable on-device inference, allowing AI-powered applications to run efficiently on a wide range of devices, including those with limited computing power. The smaller size of Phi-3 models may also make fine-tuning and customization more affordable for businesses, enabling them to adapt the models to their specific use cases without incurring high costs.
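To put “limited computing power” in concrete terms, a back-of-the-envelope estimate of weight storage helps: memory ≈ parameter count × bytes per parameter. The sketch below applies that rule to the parameter counts stated earlier in this article. It is a rough approximation under stated assumptions: it counts model weights only and ignores the KV cache (which grows with context length), activations, and runtime overhead, and the precisions listed are generic illustrations, not a statement of which formats Microsoft ships.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores KV cache, activations, and runtime overhead.
PARAM_COUNTS = {
    "Phi-3-mini": 3.8e9,
    "Phi-3-small": 7e9,
    "Phi-3-medium": 14e9,
}

# Generic storage precisions chosen for illustration.
BYTES_PER_PARAM = {
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, precision: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in PARAM_COUNTS.items():
        row = ", ".join(
            f"{prec}: {weight_memory_gb(params, prec):.1f} GB"
            for prec in BYTES_PER_PARAM
        )
        print(f"{name:<13} {row}")
```

By this estimate, Phi-3-mini’s weights take about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, versus roughly 28 GB for Phi-3-medium at 16-bit. That gap illustrates why a quantized version of the smallest model is the most plausible candidate for phone- or laptop-class hardware.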

In applications where fast response times are essential, Phi-3 models offer a promising solution. Their optimized architecture and efficient processing can enable rapid generation of results, improving user experiences and opening up possibilities for real-time AI interactions. Additionally, Phi-3-mini’s strong reasoning and logic capabilities make it well-suited for analytical tasks, such as data analysis and insight generation.