
Mistral, the best-funded seed-stage startup in European history and a French company dedicated to building open-source AI models and large language models (LLMs), has struck gold with its latest release, at least among the early-adopter and AI-influencer crowd on X and LinkedIn.

Last week, in what is becoming its signature style, Mistral unceremoniously dropped its new model online as a torrent link, with no explanation, blog post, or demo video showcasing its capabilities. The model, Mixtral 8x7B, is so named because it uses a technique called "mixture of experts," which combines several expert sub-models, each specializing in a different category of tasks.
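To make "mixture of experts" more concrete, here is a minimal sketch of top-2 routing, assuming plain Python lists in place of tensors. Mistral describes Mixtral as having a router pick two of eight experts for each token at every layer and add their outputs together. The toy experts, the dot-product router, and the function name `moe_forward` are hypothetical illustrations, not Mistral's implementation.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router_weights: List[Vector],
                experts: List[Expert], top_k: int = 2) -> Vector:
    """Hypothetical sparse MoE layer: route one token to its top_k experts."""
    # Router: one score per expert (dot product of the token with that
    # expert's routing vector).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the top_k highest-scoring experts; the rest are never run.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i],
                    reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    # Weighted sum of the chosen experts' outputs.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out


if __name__ == "__main__":
    # Eight toy experts: expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
    # Toy router whose score for expert i is i * x[0], so experts 7 and 6 win.
    router = [[float(i), 0.0] for i in range(8)]
    print(moe_forward([1.0, 1.0], router, experts))
```

Because only two of the eight experts run for each token, the compute per token is much smaller than the model's total parameter count would suggest, which is the main appeal of the design.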

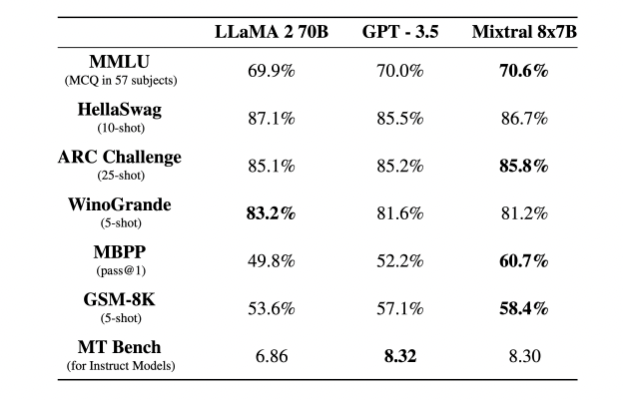

Today, Mistral published a blog post detailing the model further and showing benchmarks in which it matches or outperforms OpenAI's closed-source GPT-3.5, as well as Meta's Llama 2 family, the previous leader in open-source AI. The company said it worked with CoreWeave and Scaleway for technical support during training. It also confirmed that Mixtral 8x7B is available for commercial use under an Apache 2.0 license.

AI early adopters have already downloaded Mixtral 8x7B, begun running and experimenting with it, and been blown away by its performance. Thanks to its small footprint, it can also run locally on machines without dedicated GPUs, including Apple Mac computers with the new M2 Ultra chip.

And, as University of Pennsylvania Wharton School professor and AI influencer Ethan Mollick noted on X, Mixtral 8x7B seemingly has "no safety guardrails." That means users chafing under OpenAI's increasingly tight content policies now have a model of comparable performance that they can get to produce material other models deem "unsafe" or NSFW. However, the lack of safety guardrails may also present a challenge to policymakers and regulators.

You can try it for yourself here via Hugging Face (hat tip to Merve Noyan for the link). The Hugging Face implementation does contain guardrails: when we tested it on the common "tell me how to make napalm" prompt, it refused.

Mistral also has even more powerful models up its sleeve. As HyperWrite AI CEO Matt Shumer noted on X, the company is already serving an alpha version of Mistral-medium on its application programming interface (API), which also launched this weekend, suggesting a larger, even more capable model is in the works.

The company also closed a $415 million Series A funding round led by Andreessen Horowitz (a16z) at a valuation of $2 billion.