The meaning of modality is defined as “a particular mode in which something exists or is experienced or expressed.” In artificial intelligence, we use this term to talk about the type(s) of input and output data an AI system can interpret. In human terms, modality refers to the senses of touch, taste, smell, sight, and hearing. However, AI systems can integrate with a variety of sensors and output mechanisms to interact through an additional array of data types.

Understanding Modality

Each type offers unique insights that enhance the AI’s ability to understand and interact with its environment.

Types of Modalities:

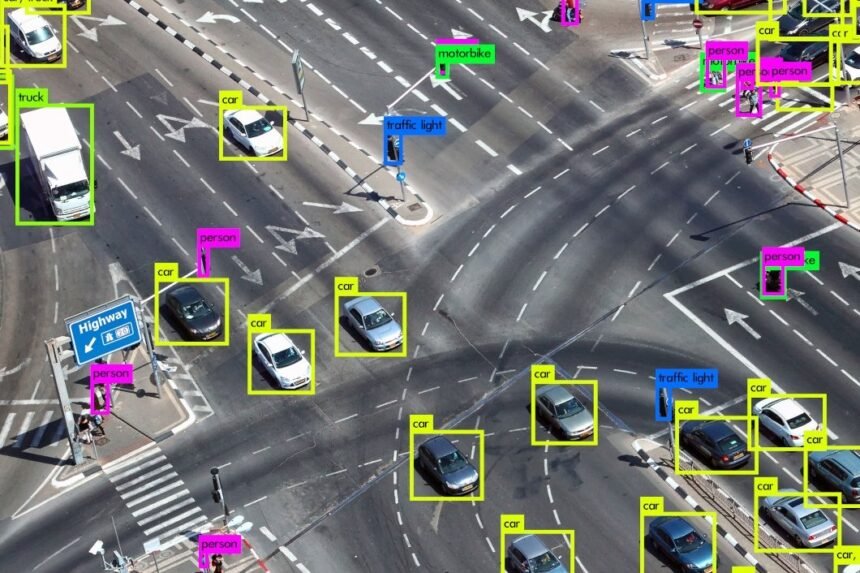

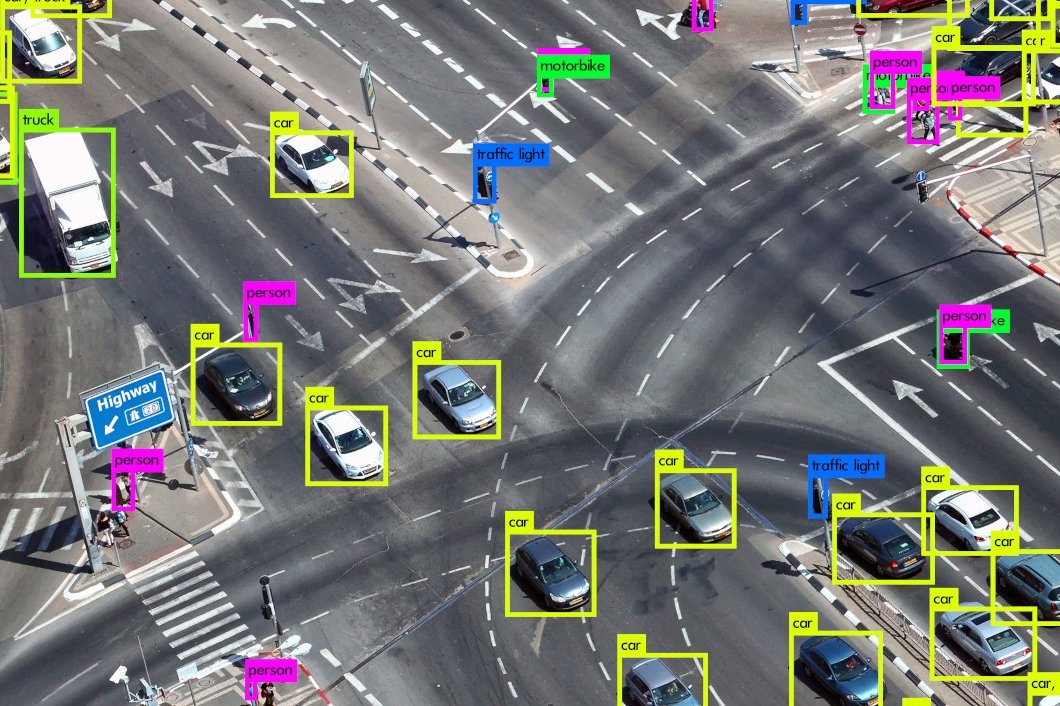

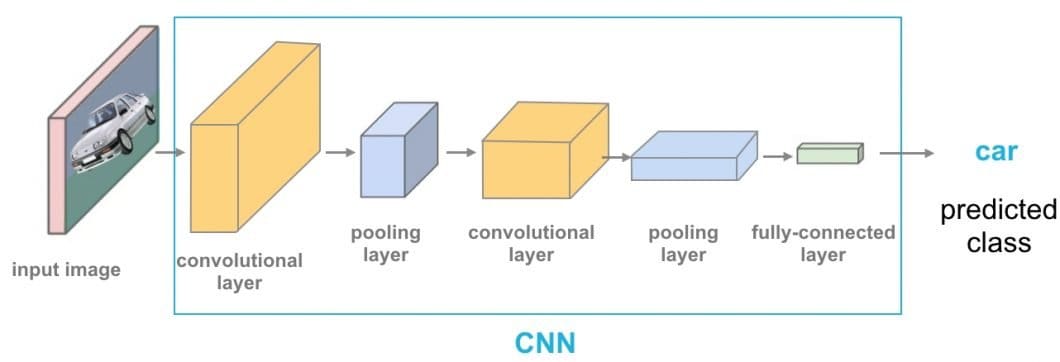

- Visual: Models such as Convolutional Neural Networks (CNNs) enable the processing of visual data for tasks like image recognition and video analysis. For instance, Google’s DeepMind leverages computer vision technologies for accurate predictions of protein structures.

- Sound: This refers to the ability to process auditory data. Typically, AI systems use models like Recurrent Neural Networks (RNNs) to interpret sound waves. The most common applications today are voice recognition and ambient sound detection. For example, voice assistants (e.g., Siri, Alexa) use auditory modalities to process user commands.

- Textual: These modalities have to do with understanding and generating human text. These systems often leverage large language models (LLMs) and natural language processing (NLP), as well as Transformer-based architectures. Chatbots, translation tools, and generative AIs, like ChatGPT, rely on these textual modalities.

- Tactile: This relates to touch-based sensory modalities for haptic technologies. A notable example today is robots that can perform delicate tasks, such as handling fragile items.
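
To make the list above a little more concrete, here is a minimal sketch of how each modality typically reaches a model as numbers with a different layout. All sizes, values, and the tiny vocabulary are illustrative assumptions, not taken from any particular system.

```python
# Toy, hypothetical samples: every modality ends up as numbers,
# but each one has its own layout. All sizes are assumptions.
image = [[[0, 0, 0] for _ in range(4)] for _ in range(3)]  # 3 rows x 4 columns x RGB
audio = [0.0, 0.12, 0.31, 0.18, -0.05, -0.22]              # amplitude samples over time
vocab = {"turn": 0, "on": 1, "the": 2, "lights": 3}        # hypothetical vocabulary
text = [vocab[w] for w in "turn on the lights".split()]    # token ids
touch = [[0.0, 0.4], [0.1, 0.9]]                           # 2 x 2 grid of pressure readings


def shape(x):
    """Return the nested-list dimensions of x as a tuple."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0]
    return tuple(dims)


shapes = {
    "visual": shape(image),
    "sound": shape(audio),
    "textual": shape(text),
    "tactile": shape(touch),
}
print(shapes)
```

The differing shapes are one reason why each modality has historically had its own model family.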

Initially, AI systems focused heavily on single modalities. Early models, like perceptrons, laid the groundwork for the visual modality in the 1950s, for example. NLP was another major breakthrough for a variety of modalities in AI systems. While its obvious application is in human-readable text, it also led to computer vision models, such as LeNet, for handwriting recognition. NLP still underpins the interactions between humans and most generative AI tools.

The introduction of RNNs and CNNs in the late twentieth century was a watershed moment for auditory and visual modalities. Another leap forward occurred with the unveiling of the Transformer architecture in 2017, which underpins models like GPT and BERT. These greatly enhanced the ability to understand and generate language.

Today, the focus is shifting toward multi-modal AI systems that can interact with the world in multifaceted ways.

Multi-Modal Systems in AI

Multi-modal AI is the natural evolution of systems that can interpret and interact with the world. These systems combine multimodal data, such as text, images, sound, and video, to form more sophisticated models of the environment. In turn, this allows for more nuanced interpretations of, and responses to, the outside world.

While incorporating individual modalities may help AIs excel in particular tasks, a multi-modal approach greatly expands the horizon of capabilities.

Breakthrough Models and Technologies

Meta AI is one of the entities at the forefront of multi-modal AI research. It’s in the process of developing models that can understand and generate content across different modalities. One of the team’s breakthroughs is the Omnivore model, which recognizes images, videos, and 3D data using the same parameters.

The team also developed its FLAVA project to provide a foundational model for multimodal tasks. It can perform over 35 tasks, from image and text recognition to joint text-image tasks. For example, in a single prompt, FLAVA can describe an image, explain its meaning, and answer specific questions. It also has impressive zero-shot capabilities to classify and retrieve text and image content.

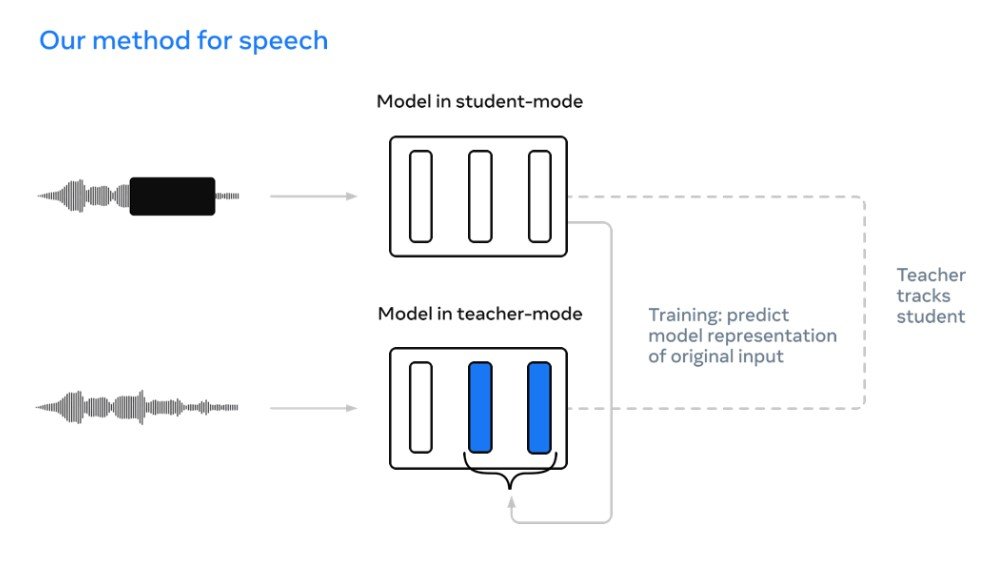

Data2vec, another Meta initiative, shows that the “exact same model architecture and self-supervised training procedure can be used to develop state-of-the-art models for recognition of images, speech, and text.” In simple terms, it supports the idea that implementing multiple modalities doesn’t necessitate excessive development overhead.

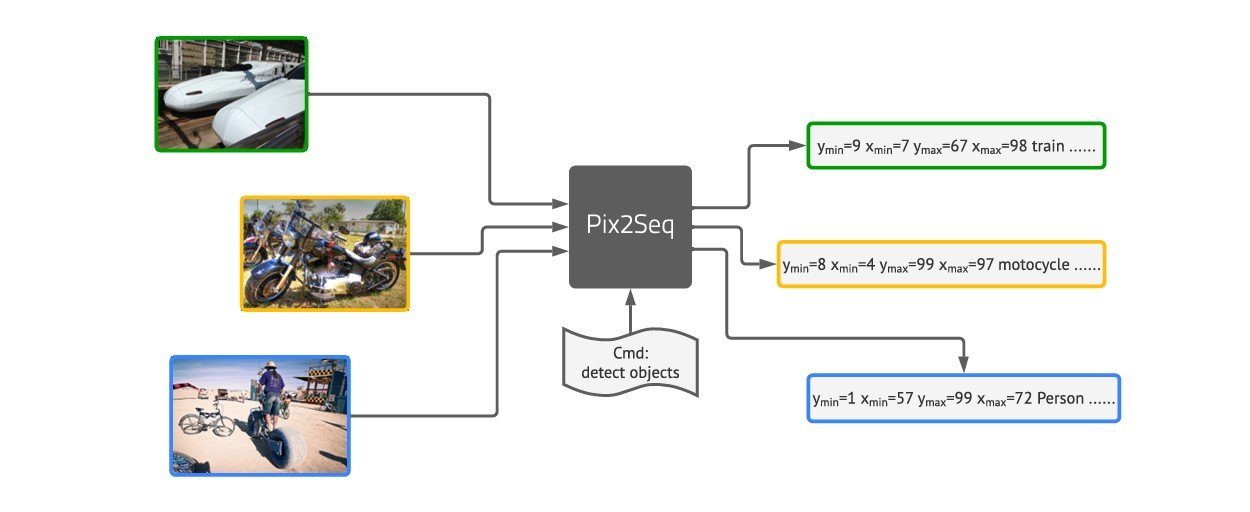

Google has also contributed significantly to the field with models like Pix2Seq. This model takes a unique approach by solving seemingly unimodal tasks using a multi-modal architecture. For example, it treats object detection as a language modeling task, expressing bounding boxes and class labels as sequences of discrete tokens. MaxViT, a vision transformer, ensures that local and non-local information is combined efficiently.
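
To illustrate the idea of treating object detection as language modeling, here is a minimal sketch of turning one bounding box and its class into integer tokens, and back again. The bin count, the coordinate order, and the helper names `box_to_tokens` and `tokens_to_box` are assumptions chosen for illustration, not Pix2Seq’s actual code.

```python
NUM_BINS = 1000  # assumed number of coordinate bins


def box_to_tokens(box, label_id, width, height, num_bins=NUM_BINS):
    """Turn a pixel box (xmin, ymin, xmax, ymax) and a class id into 5 integer tokens."""
    xmin, ymin, xmax, ymax = box

    def quantize(value, size):
        # Map a coordinate in [0, size] to an integer bin in [0, num_bins - 1].
        return min(num_bins - 1, int(value * num_bins // size))

    coords = [
        quantize(ymin, height),
        quantize(xmin, width),
        quantize(ymax, height),
        quantize(xmax, width),
    ]
    # Class tokens sit after the coordinate range so the two vocabularies never collide.
    return coords + [num_bins + label_id]


def tokens_to_box(tokens, width, height, num_bins=NUM_BINS):
    """Invert box_to_tokens, up to quantization error."""
    ymin, xmin, ymax, xmax, class_token = tokens
    box = (
        xmin * width / num_bins,
        ymin * height / num_bins,
        xmax * width / num_bins,
        ymax * height / num_bins,
    )
    return box, class_token - num_bins


tokens = box_to_tokens((64, 48, 320, 240), label_id=7, width=640, height=480)
print(tokens)  # [100, 100, 500, 500, 1007]
print(tokens_to_box(tokens, width=640, height=480))
```

Once boxes are plain token sequences, a sequence model can be trained to emit them much like words in a sentence.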

On the technology front, NVIDIA has been instrumental in pushing multi-modal AI innovation. The NVIDIA L40S GPU is a universal data center GPU designed to accelerate AI workloads. This includes various modalities, including Large Language Model (LLM) inference, training, graphics, and video applications. It may yet prove pivotal in developing the next generation of AI for audio, speech, 2D, video, and 3D.

Powered by NVIDIA L40S GPUs, Lenovo’s ThinkSystem SR675 V3 represents hardware capable of sophisticated multi-modal AI workloads, such as the creation of digital twins and immersive metaverse simulations.

Real-Life Applications

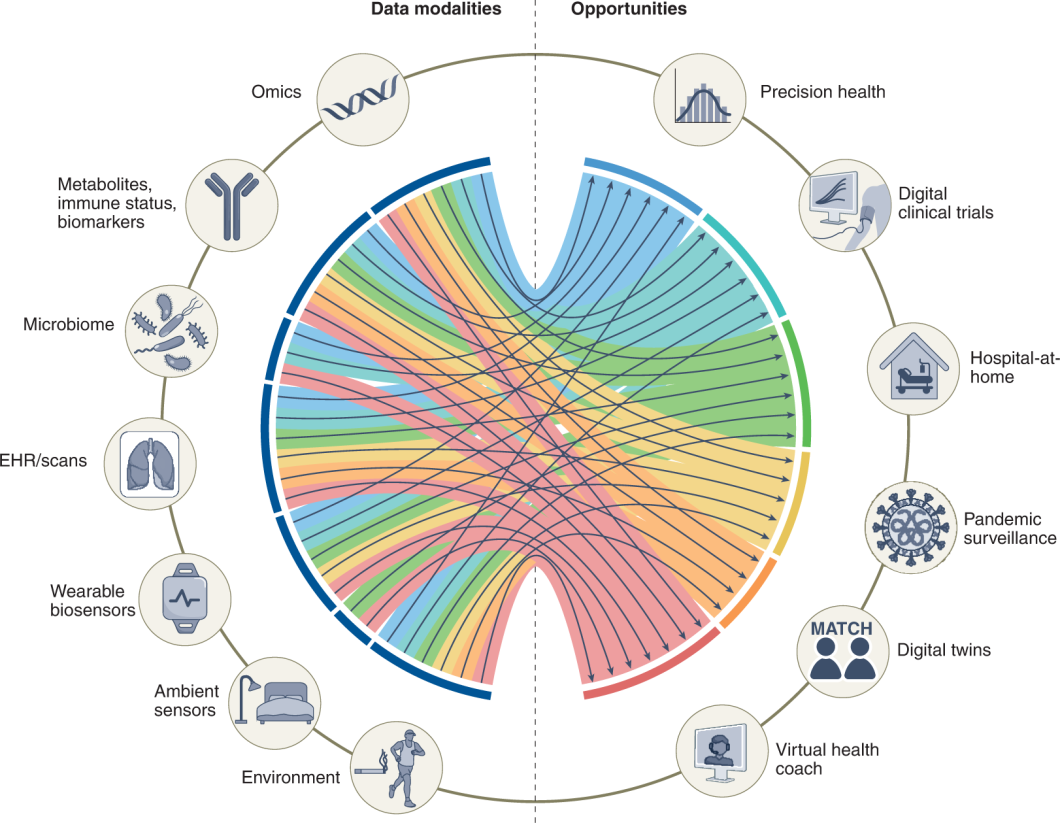

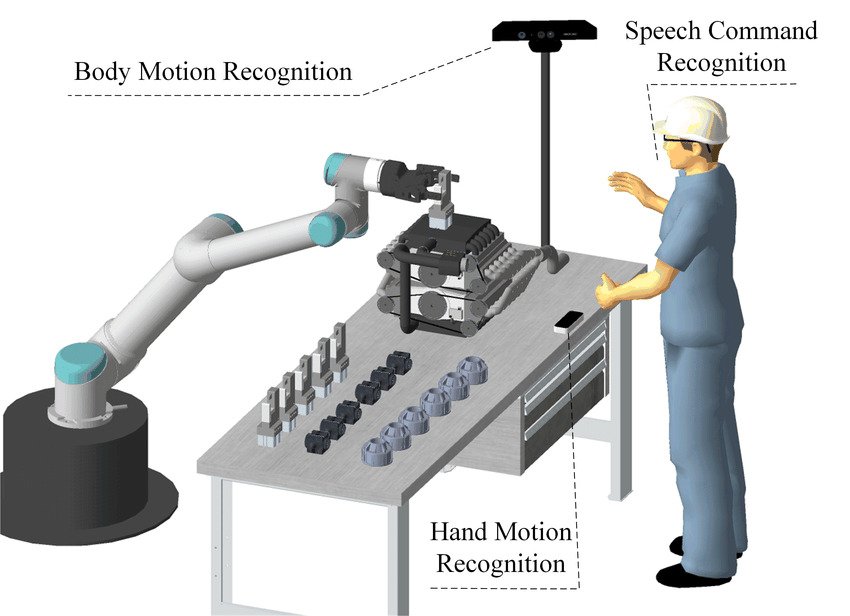

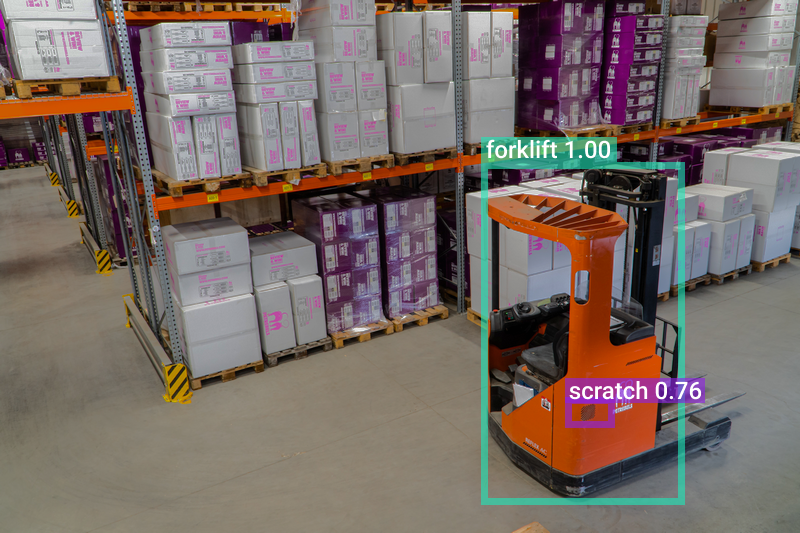

The applications of multi-modal AI systems are vast, and we’re only at the beginning. For example, autonomous vehicles require a combination of visual, auditory, and textual modalities to respond to human commands and navigate. In healthcare, multi-modal diagnostics incorporate imaging, reports, and patient data to provide more precise diagnoses. Multi-modal AI assistants can understand and respond to different inputs like voice commands and visual cues.

And, at the very forefront, we’re seeing advanced new robotics systems using multi-modal capabilities. In a recent demo, Figure 01 demonstrated the ability to combine human language inputs with visual interpretation. This allowed it to perform typical human tasks in a kitchen, based on verbal instructions. We’re seeing similar developments with other competitors, such as Tesla’s Optimus.

Technological Frameworks and Models Supporting Multi-Modal AI

The success of multi-modal systems necessitates the integration of various complex neural network architectures. Most use cases for multi-modal AIs require an in-depth understanding of both the content and context of the data they are fed. To complicate matters further, they must be able to efficiently process modalities from multiple sources simultaneously.

This raises the question of how best to integrate disparate data types while balancing the need to enhance relevance and minimize noise. Even training AI systems on multiple modalities at the same time can lead to issues with co-learning. The impact of this can range from simple interference to catastrophic forgetting.

However, thanks to the field’s rapid evolution, advanced frameworks and models that address these shortcomings emerge all the time. Some are designed specifically to help harmoniously synthesize the information from different data types. PyTorch’s TorchMultimodal library is one such example. It provides researchers and developers with the building blocks and end-to-end examples for state-of-the-art multi-modal models.

Notable models include BERT, which offers a deep understanding of textual content, and CNNs for image recognition. TorchMultimodal enables the combination of these powerful unimodal models into a multi-modal system.
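
As a rough illustration of combining unimodal models, here is a minimal late-fusion sketch in plain Python. It does not use TorchMultimodal’s API. The two “encoders” are hypothetical stand-ins for a text model and a CNN, and the classifier weights are hand-picked rather than trained.

```python
import math


def text_encoder(text):
    """Stand-in for a text model such as BERT: returns a 2-d feature vector."""
    words = text.lower().split()
    return [len(words) / 10.0, sum(len(w) for w in words) / 50.0]


def image_encoder(pixels):
    """Stand-in for a CNN: returns mean and max brightness as a 2-d feature vector."""
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), max(flat)]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(text, pixels, weights, bias):
    # Late fusion by concatenation: each encoder runs independently, and
    # their feature vectors are joined before a shared linear classifier.
    features = text_encoder(text) + image_encoder(pixels)
    logits = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Hand-picked, untrained parameters for a 2-class toy classifier (assumption).
weights = [[0.5, -0.2, 1.0, 0.3], [-0.4, 0.1, -0.8, 0.6]]
bias = [0.0, 0.1]
probs = fuse_and_classify("a cat on a sofa", [[0.1, 0.9], [0.4, 0.6]], weights, bias)
print(probs)
```

In a real system the stand-ins would be replaced by pretrained encoders, and the fusion layer would be trained on paired data.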

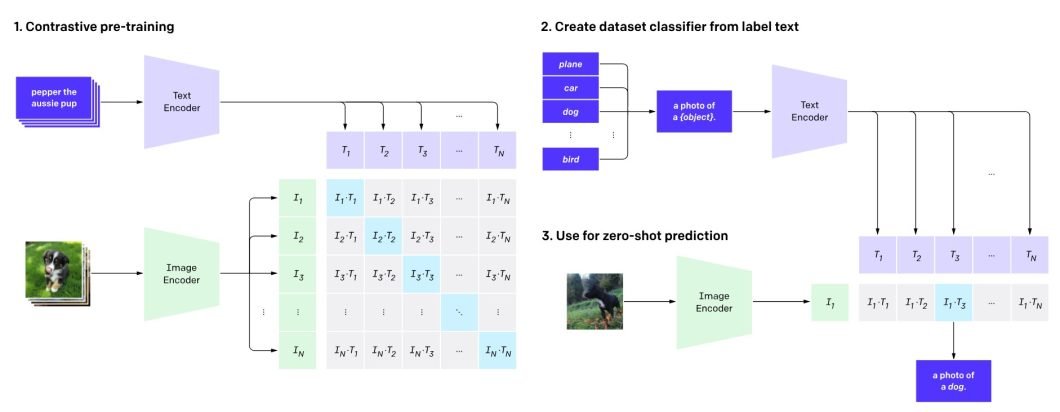

This has also led to revolutionary breakthroughs. For example, the development of CLIP has changed the way computer vision systems learn textual and visual representations. Another example is Multimodal GPT, which extends OpenAI’s GPT architecture to handle multi-modal generation.
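
To give a feel for how a shared text-image embedding space can be used, here is a minimal sketch of CLIP-style zero-shot scoring. The embeddings are made-up toy vectors rather than real model outputs, and the function name `zero_shot_scores` and the temperature value are assumptions for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def zero_shot_scores(image_embedding, text_embeddings, temperature=0.07):
    """Score each caption against the image and turn the scores into probabilities."""
    sims = [cosine(image_embedding, t) / temperature for t in text_embeddings.values()]
    top = max(sims)
    exps = [math.exp(s - top) for s in sims]
    total = sum(exps)
    return {label: e / total for label, e in zip(text_embeddings, exps)}


# Toy embeddings (assumption): in practice these come from trained image and text encoders.
image_embedding = [0.9, 0.1, 0.2]
text_embeddings = {
    "a photo of a dog": [1.0, 0.0, 0.1],
    "a photo of a car": [0.0, 1.0, 0.3],
}
scores = zero_shot_scores(image_embedding, text_embeddings)
best = max(scores, key=scores.get)
print(best, scores)
```

Because classification reduces to comparing embeddings, new labels can be added simply by writing new captions, with no retraining of the classifier.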

Challenges to Developing Multi-Modal AI Systems

There are several challenges when it comes to integrating different data types into a single AI model:

- Representation: This is the challenge of encoding different data types in a way that makes it possible to process them uniformly. Joint representations combine data into a common “space”, while coordinated representations keep them separated but structurally linked. It’s difficult to integrate different modalities due to variances in noise, missing data, structure, and formats.

- Translation: Some applications may require wholly converting data from one type to another. The exact process can differ based on the modality of both data types and the application. Often, the translated data still requires additional evaluation, either by a human or using metrics like BLEU and ROUGE.

- Alignment: In many use cases, modalities also need to be synchronized. For example, audio and visual inputs may need to be aligned according to specific timestamps or visual/auditory cues. More disparate data types may not naturally align due to inherent structural differences.

- Fusion: Once you’ve solved representation, you still need to merge the modalities to perform complex tasks, like making decisions or predictions. This is often challenging due to their different rates of generalization and varying noise levels.

- Co-learning: As touched on earlier, poor co-learning can negatively impact the training of both modalities. However, when done right, it can improve the ability to transfer knowledge between them for mutual benefit. It’s largely challenging for the same reasons as representation and fusion.
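
To make the alignment challenge from the list above more tangible, here is a minimal sketch that pairs video frames with the nearest audio chunk by timestamp. The function name `align_nearest`, the sample timestamps, and the tolerance are hypothetical; real pipelines often need more than nearest-neighbor matching.

```python
from bisect import bisect_left


def align_nearest(frame_times, audio_times, tolerance):
    """Pair each frame time with the index of the closest audio time.

    audio_times must be sorted. A frame with no audio chunk within
    `tolerance` seconds is paired with None.
    """
    pairs = []
    for t in frame_times:
        i = bisect_left(audio_times, t)
        # Only the neighbors on either side of the insertion point can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(audio_times)]
        best = min(candidates, key=lambda j: abs(audio_times[j] - t), default=None)
        if best is not None and abs(audio_times[best] - t) <= tolerance:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs


frame_times = [0.00, 0.04, 0.08, 0.50]        # seconds; the last frame has no nearby audio
audio_times = [0.00, 0.02, 0.04, 0.06, 0.08]  # seconds; start of each 20 ms audio chunk
pairs = align_nearest(frame_times, audio_times, tolerance=0.015)
print(pairs)  # [(0.0, 0), (0.04, 2), (0.08, 4), (0.5, None)]
```

The unmatched last frame shows why missing data in one modality has to be handled explicitly rather than assumed away.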

Finding solutions to these challenges is a continuous area of development. Some of the model-agnostic approaches, like those developed by Meta, offer the most promising path forward.

Furthermore, deep learning models showcase the ability to automatically learn representations from large multi-modal data sets. This has the potential to further improve accuracy and efficiency, especially where the data is highly diverse. The addition of neural networks also helps solve challenges related to the complexity and dimensionality of multi-modal data.

Impact of Modality on AI and Computer Vision

Advancements in multi-modal AI point to a future where AI and computer vision seamlessly integrate into our daily lives. As they mature, they’ll become increasingly important components of advanced AR and VR, robotics, and IoT.

In robotics, AR shows promise in offering methods to simplify programming and improve control. In particular, Augmented Reality Visualization Systems improve complex decision-making by combining real-world physicality with AR’s immersive capabilities. Combining vision, eye tracking, haptics, and sound makes interaction more immersive.

For example, ABB Robotics uses AR to overlay modeled solutions onto real-life environments. Among other things, this allows users to create advanced simulations in its RobotStudio software before deploying solutions. PTC Reality Lab’s Kinetic AR project is researching the use of multi-modal models for robotic motion planning and programming.

In IoT, Multimodal Interaction Systems (MIS) merge real-world contexts with immersive AR content. This opens up new avenues for user interaction. Advancements in networking and computational power allow for real-time, natural, and user-friendly interfaces.