
Large language models like ChatGPT and Llama-2 are notorious for their extensive memory and computational demands, which make them costly to run. Trimming even a small fraction of their size can lead to significant cost reductions.

To address this issue, researchers at ETH Zurich have unveiled a revised version of the transformer, the deep learning architecture underlying language models. The new design reduces the size of the transformer considerably while preserving accuracy and increasing inference speed, making it a promising architecture for more efficient language models.

Transformer blocks

Language models are built on a foundation of transformer blocks, uniform units adept at parsing sequential data, such as text passages.

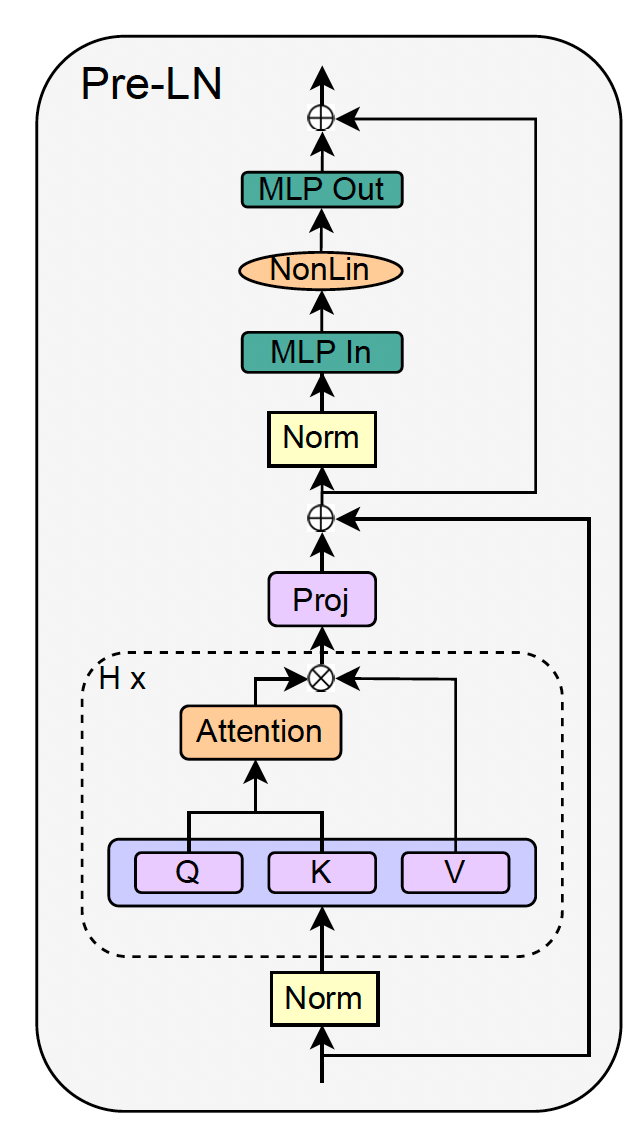

The transformer block specializes in processing sequential data, such as a passage of text. Within each block, there are two key sub-blocks: the “attention mechanism” and the multi-layer perceptron (MLP). The attention mechanism acts like a highlighter, selectively focusing on different parts of the input data (like words in a sentence) to capture their context and importance relative to each other. This helps the model determine how the words in a sentence relate, even if they’re far apart.

After the attention mechanism has done its work, the MLP, a small neural network, further refines and processes the highlighted information, helping to distill the data into a more refined representation that captures complex relationships.

Beyond these core components, transformer blocks are equipped with additional features such as “residual connections” and “normalization layers.” These components accelerate learning and mitigate issues common in deep neural networks.
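To make the pieces described above concrete, here is a minimal sketch of a conventional transformer block in plain Python. It is an illustration under stated assumptions, not the researchers’ code: it uses a single attention head, a ReLU MLP, normalization applied before each sub-block, no causal mask, and small random weights. All function and parameter names are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices (used for residuals)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance (no learned scale)."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def attention(x, wq, wk, wv, wo):
    """Single-head attention: every token mixes information from all tokens."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, [list(col) for col in zip(*k)])  # q times k transposed
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(matmul(weights, v), wo)

def mlp(x, w1, w2):
    """Two-layer perceptron with ReLU, applied to each token independently."""
    hidden = [[max(0.0, v) for v in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def transformer_block(x, p):
    # Residual connection around the attention sub-block.
    x = add(x, attention(layer_norm(x), p["wq"], p["wk"], p["wv"], p["wo"]))
    # Residual connection around the MLP sub-block.
    return add(x, mlp(layer_norm(x), p["w1"], p["w2"]))

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, d_hidden, seq_len = 4, 8, 3  # toy sizes chosen for illustration
params = {
    "wq": random_matrix(d_model, d_model, rng),
    "wk": random_matrix(d_model, d_model, rng),
    "wv": random_matrix(d_model, d_model, rng),
    "wo": random_matrix(d_model, d_model, rng),
    "w1": random_matrix(d_model, d_hidden, rng),
    "w2": random_matrix(d_hidden, d_model, rng),
}
tokens = random_matrix(seq_len, d_model, rng)
out = transformer_block(tokens, params)
print(len(out), len(out[0]))  # the block keeps the input shape
```

Because the block returns a matrix of the same shape as its input, blocks of this kind can be stacked one after another.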

As transformer blocks are stacked to form a language model, their capacity to discern complex relationships in training data grows, enabling the sophisticated tasks performed by contemporary language models. Despite the transformative impact of these models, the fundamental design of the transformer block has remained largely unchanged since its creation.

Making the transformer more efficient

“Given the exorbitant cost of training and deploying large transformer models nowadays, any efficiency gains in the training and inference pipelines for the transformer architecture represent significant potential savings,” write the ETH Zurich researchers. “Simplifying the transformer block by removing non-essential components both reduces the parameter count and increases throughput in our models.”

The team’s experiments demonstrate that paring down the transformer block does not compromise training speed or performance on downstream tasks. Standard transformer models feature multiple attention heads, each with its own set of key (K), query (Q), and value (V) parameters, which together map the interplay among input tokens. The researchers discovered that they could eliminate the V parameters and the subsequent projection layer that synthesizes the values for the MLP block, without losing efficacy.
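The sketch below contrasts a standard attention head with one that has no value or projection parameters. It rests on an assumption the article does not spell out: that “eliminating” these parameters amounts to letting the attention weights mix the input vectors directly, which is the same as fixing both matrices to the identity. The function names, the toy weights, and the single-head setup are all hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(x, wq, wk):
    """Token-to-token weights computed from the query and key projections."""
    q, k = matmul(x, wq), matmul(x, wk)
    scale = math.sqrt(len(q[0]))
    scores = matmul(q, transpose(k))
    return [softmax([s / scale for s in row]) for row in scores]

def standard_head(x, wq, wk, wv, wo):
    """Conventional head: Q, K, V projections plus an output projection."""
    values = matmul(x, wv)
    mixed = matmul(attention_weights(x, wq, wk), values)
    return matmul(mixed, wo)

def simplified_head(x, wq, wk):
    """Assumed simplification: the weights mix the raw inputs, with no V or projection."""
    return matmul(attention_weights(x, wq, wk), x)

d = 2  # toy model width
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three tokens
wq = [[0.5, -0.2], [0.1, 0.3]]
wk = [[0.2, 0.4], [-0.3, 0.1]]
identity = [[1.0, 0.0], [0.0, 1.0]]

# With identity value and projection matrices, the two heads agree.
standard_out = standard_head(x, wq, wk, identity, identity)
simplified_out = simplified_head(x, wq, wk)

# Weight matrices per head in this toy setup (each is d x d).
standard_params = 4 * d * d
simplified_params = 2 * d * d
print(standard_params, simplified_params)
```

In this toy single-head setup, dropping the two matrices halves the attention parameters. The overall saving for a full model is smaller, because the MLP and other components are untouched.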

Moreover, they removed the skip connections, which traditionally help avert the “vanishing gradients” problem in deep learning models. Vanishing gradients make training deep networks difficult, because the gradient becomes too small to drive meaningful learning in the earlier layers.
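A toy calculation can show why skip connections matter for gradients. The sketch below is a deliberately simplified scalar model, not the researchers’ analysis: each layer is assumed to scale its input by the same small slope, and the function name and the chosen numbers are hypothetical.

```python
def gradient_through_stack(depth, local_slope, skip):
    """Gradient of the last layer's output with respect to the first layer's input.

    Without a skip connection each layer computes h * local_slope, so the
    gradient is local_slope ** depth. With a skip connection each layer
    computes h + h * local_slope, so each factor becomes 1 + local_slope.
    """
    grad = 1.0
    for _ in range(depth):
        grad *= (1.0 + local_slope) if skip else local_slope
    return grad

depth = 20          # assumed number of stacked layers
local_slope = 0.05  # assumed per-layer derivative, chosen to be small

without_skip = gradient_through_stack(depth, local_slope, skip=False)
with_skip = gradient_through_stack(depth, local_slope, skip=True)
print(f"without skip: {without_skip:.3e}")
print(f"with skip:    {with_skip:.3f}")
```

The identity path contributed by the skip connection keeps the gradient from collapsing toward zero, which is why removing skip connections normally requires some other form of compensation.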

They also redesigned the transformer block to process the attention heads and the MLP concurrently rather than sequentially. This parallel processing marks a departure from the conventional architecture.
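The difference between the two layouts comes down to data flow, which the following sketch illustrates with toy stand-ins for the sub-blocks. It assumes the parallel outputs are combined by a plain sum, which the article does not specify, and it omits skip connections and normalization. `toy_attention` and `toy_mlp` are hypothetical placeholders, not real attention or MLP layers.

```python
def toy_attention(x):
    """Stand-in for attention: every position receives the mean of all positions."""
    mean = sum(x) / len(x)
    return [mean for _ in x]

def toy_mlp(x):
    """Stand-in for the MLP: a per-position affine map followed by ReLU."""
    return [max(0.0, 2.0 * v - 1.0) for v in x]

def sequential_block(x, attn, mlp):
    # Conventional layout: the MLP consumes the attention output.
    return mlp(attn(x))

def parallel_block(x, attn, mlp):
    # Parallel layout: both sub-blocks read the same input, and their
    # outputs are summed (the summation is an assumption of this sketch).
    return [a + m for a, m in zip(attn(x), mlp(x))]

x = [1.0, 2.0, 3.0]  # one scalar feature per token, for readability
sequential_out = sequential_block(x, toy_attention, toy_mlp)
parallel_out = parallel_block(x, toy_attention, toy_mlp)
print(sequential_out)
print(parallel_out)
```

Because neither sub-block waits for the other in the parallel layout, the two computations can in principle be scheduled at the same time on suitable hardware.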

To compensate for the reduction in parameters, the researchers adjusted other non-learnable parameters, refined the training methodology, and implemented architectural tweaks. Together, these changes maintain the model’s learning capabilities despite the leaner structure.

Testing the new transformer block

The ETH Zurich team evaluated their compact transformer block across language models of varying depths. Their findings were significant: they managed to shrink the conventional transformer’s size by approximately 16% without sacrificing accuracy, and they achieved faster inference times. To put that in perspective, applying this new architecture to a large model like GPT-3, with its 175 billion parameters, could result in a memory saving of about 50 GB.
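The GPT-3 figure can be checked with back-of-the-envelope arithmetic. The article does not state the numeric precision behind its estimate, so the sketch below assumes 16-bit weights (2 bytes per parameter). Under that assumption the result lands in the same range as the quoted figure.

```python
total_params = 175e9   # GPT-3 parameter count cited in the article
reduction = 0.16       # approximate size reduction cited in the article
bytes_per_param = 2    # assumption: 16-bit weights; not stated in the article

removed_params = total_params * reduction
saved_bytes = removed_params * bytes_per_param

saved_gb = saved_bytes / 1e9       # decimal gigabytes
saved_gib = saved_bytes / 2 ** 30  # binary gibibytes

print(f"parameters removed: {removed_params / 1e9:.0f} billion")
print(f"memory saved: {saved_gb:.0f} GB ({saved_gib:.1f} GiB)")
```

With 32-bit weights the same calculation would double the saving, so the estimate depends heavily on how the model is stored.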

“Our simplified models are able to not only train faster but also to utilize the extra capacity that more depth provides,” the researchers write. While their technique has proven effective at smaller scales, its application to larger models remains untested. Further enhancements, such as tailoring AI processors to this streamlined architecture, could amplify its impact.

“We believe our work can lead to simpler architectures being used in practice, thereby helping to bridge the gap between theory and practice in deep learning, and reducing the cost of large transformer models,” the researchers write.