In its Q1 2025 earnings call on Wednesday, Nvidia CEO Jensen Huang highlighted the explosive growth of generative AI (GenAI) startups using Nvidia’s accelerated computing platform.

“There’s a long line of generative AI startups, some 15,000, 20,000 startups in all different fields from multimedia to digital characters, design to application productivity, digital biology,” said Huang. “The moving of the AV industry to Nvidia so that they can train end-to-end models to expand the operating domain of self-driving cars. The list is just quite extraordinary.”

Huang emphasized that demand for Nvidia’s GPUs is “incredible” as companies race to bring AI applications to market using Nvidia’s CUDA software and Tensor Core architecture. Consumer internet companies, enterprises, cloud providers, automotive companies and healthcare organizations are all investing heavily in “AI factories” built on thousands of Nvidia GPUs.

The Nvidia CEO said the shift to generative AI is driving a “foundational, full-stack computing platform shift” as computing moves from information retrieval to generating intelligent outputs.

“[The computer] is now generating contextually relevant, intelligent answers,” Huang explained. “That’s going to change computing stacks all over the world. Even the PC computing stack is going to get revolutionized.”

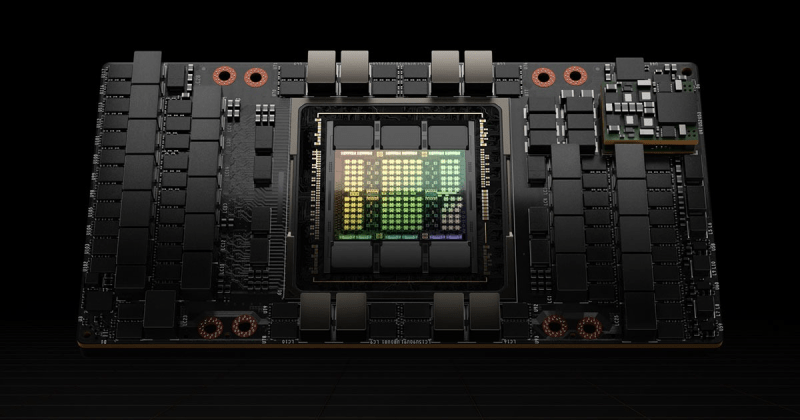

To meet surging demand, Nvidia began shipping its H100 “Hopper” architecture GPUs in Q1 and announced its next-gen “Blackwell” platform, which delivers 4-30X faster AI training and inference than Hopper. Over 100 Blackwell systems from major computer makers will launch this year to enable wide adoption.

Huang said Nvidia’s end-to-end AI platform capabilities give it a major competitive advantage over narrower solutions as AI workloads rapidly evolve. He expects demand for Nvidia’s Hopper, Blackwell and future architectures to outstrip supply well into next year as the GenAI revolution takes hold.

Struggling to keep up with demand for AI chips

Despite the record-breaking $26 billion in revenue Nvidia posted in Q1, the company said customer demand is significantly outpacing its ability to supply GPUs for AI workloads.

“We’re racing every single day,” said Huang regarding Nvidia’s efforts to fulfill orders. “Customers are putting a lot of pressure on us to deliver the systems and stand them up as quickly as possible.”

Huang noted that demand for Nvidia’s current flagship H100 GPU will exceed supply for some time even as the company ramps production of the new Blackwell architecture.

“Demand for H100 through this quarter continued to increase… We expect demand to outstrip supply for some time as we now transition to H200, as we transition to Blackwell,” he said.

The Nvidia CEO attributed the urgency to the competitive advantage gained by companies that are first to market with groundbreaking AI models and applications.

“The next company who reaches the next major plateau gets to announce a groundbreaking AI, and the second after that gets to announce something that’s 0.3% better,” Huang explained. “Time to train matters a great deal. The difference between time to train that’s three months earlier is everything.”

As a result, Huang said cloud providers, enterprises, and AI startups feel immense pressure to secure as much GPU capacity as possible to beat rivals to milestones. He predicted the supply crunch for Nvidia’s AI platforms will persist well into next year.

“Blackwell is well ahead of supply and we expect demand may exceed supply well into next year,” Huang stated.

Nvidia GPUs are delivering compelling returns for cloud AI hosts

Huang also provided details on how cloud providers and other companies can generate strong financial returns by hosting AI models on Nvidia’s accelerated computing platforms.

“For every $1 spent on Nvidia AI infrastructure, cloud providers have an opportunity to earn $5 in GPU instance hosting revenue over four years,” Huang stated.

Huang gave the example of a language model with 70 billion parameters running on Nvidia’s latest H200 GPUs. He claimed a single server could generate 24,000 tokens per second and support 2,400 concurrent users.

“That means for every $1 spent on Nvidia H200 servers at current prices per token, an API provider [serving tokens] can generate $7 in revenue over four years,” Huang said.
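
The figures quoted above can be put in per-user and per-year terms with a little arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the per-year split assumes revenue accrues evenly across the four years, which the call did not state.

```python
# Figures as quoted in the article; helper names are illustrative, not Nvidia's.
TOKENS_PER_SECOND = 24_000   # claimed throughput of a single H200 server
CONCURRENT_USERS = 2_400     # claimed concurrent users on that server
YEARS = 4                    # horizon used in both revenue claims


def tokens_per_user(tokens_per_second: float, users: int) -> float:
    """Average tokens per second available to each concurrent user."""
    return tokens_per_second / users


def annual_revenue_per_dollar(total_multiple: float, years: int) -> float:
    """Revenue per year for each $1 spent, assuming even accrual (an assumption)."""
    return total_multiple / years


per_user = tokens_per_user(TOKENS_PER_SECOND, CONCURRENT_USERS)
hosting_per_year = annual_revenue_per_dollar(5.0, YEARS)  # GPU instance hosting claim
api_per_year = annual_revenue_per_dollar(7.0, YEARS)      # token API claim

print(f"{per_user:.0f} tokens/s per user")
print(f"${hosting_per_year:.2f} per year per $1 (GPU instance hosting)")
print(f"${api_per_year:.2f} per year per $1 (token API)")
```

On these numbers, each concurrent user would receive about 10 tokens per second, and each dollar of spend would return $1.25 to $1.75 a year under the even-accrual assumption.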

Huang added that ongoing software improvements by Nvidia continue to boost the inference performance of its GPU platforms. In the latest quarter, optimizations delivered a 3X speedup on the H100, enabling a 3X cost reduction for customers.

Huang asserted that this strong return on investment is fueling breakneck demand for Nvidia silicon from cloud giants like Amazon, Google, Meta, Microsoft and Oracle as they race to provision AI capacity and attract developers.

Combined with Nvidia’s unmatched software tools and ecosystem support, he argued these economics make Nvidia the platform of choice for GenAI deployments.

Nvidia making aggressive push into Ethernet networking for AI

While Nvidia is best known for its GPUs, the company is also a major player in datacenter networking with its InfiniBand technology.

In Q1, Nvidia reported strong year-over-year growth in networking, driven by InfiniBand adoption.

However, Huang emphasized that Ethernet is a major new opportunity for Nvidia to bring AI computing to a wider market. In Q1, the company began shipping its Spectrum-X platform, which is optimized for AI workloads over Ethernet.

“Spectrum-X opens a brand new market to Nvidia networking and enables Ethernet-only datacenters to accommodate large-scale AI,” said Huang. “We expect Spectrum-X to jump to a multi-billion dollar product line within a year.”

Huang said Nvidia is “all-in on Ethernet” and will deliver a major roadmap of Spectrum switches to complement its InfiniBand and NVLink interconnects. This three-pronged networking strategy will allow Nvidia to target everything from single-node AI systems to massive clusters.

Nvidia also began sampling its 51.2 terabit per second Spectrum-4 Ethernet switch during the quarter. Huang said major server makers like Dell are embracing Spectrum-X to bring Nvidia’s accelerated AI networking to market.

“If you invest in our architecture today, without doing anything, it’s going to go to more and more clouds and more and more datacenters, and everything just runs,” assured Huang.

Record Q1 results driven by data center and gaming

Nvidia delivered record revenue of $26 billion in Q1, up 18% sequentially and 262% year-over-year, significantly surpassing its outlook of $24 billion.

The Data Center business was the primary driver of growth, with revenue soaring to $22.6 billion, up 23% sequentially and an astonishing 427% year-over-year. CFO Colette Kress highlighted the incredible growth in the data center segment:

“Compute revenue grew more than 5X and networking revenue more than 3X from last year. Strong sequential data center growth was driven by all customer types, led by enterprise and consumer internet companies. Large cloud providers continue to drive strong growth as they deploy and ramp Nvidia AI infrastructure at scale.”

Gaming revenue was $2.65 billion, down 8% sequentially but up 18% year-over-year. This was in line with Nvidia’s expectations of a seasonal decline. Kress noted, “The GeForce RTX SUPER GPU market reception is strong, and end demand and channel inventory remain healthy across the product range.”

Professional Visualization revenue was $427 million, down 8% sequentially but up 45% year-over-year. Automotive revenue reached $329 million, rising 17% sequentially and 11% year-over-year.
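
The growth percentages above imply approximate prior-period figures, which can be backed out by dividing the current figure by one plus the growth rate. The sketch below is a rough check under that assumption; `implied_prior` is a hypothetical helper, and because the article’s figures are rounded, the results are estimates rather than reported numbers.

```python
def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from a current value and a percentage change.

    A rise of g% means current = prior * (1 + g/100); a decline is a negative g.
    """
    return current / (1 + growth_pct / 100)


# Current-quarter figures in billions of dollars, as quoted in the article.
total_prev_quarter = implied_prior(26.0, 18)     # total revenue, up 18% sequentially
total_year_ago = implied_prior(26.0, 262)        # total revenue, up 262% year-over-year
dc_prev_quarter = implied_prior(22.6, 23)        # Data Center, up 23% sequentially
gaming_prev_quarter = implied_prior(2.65, -8)    # Gaming, down 8% sequentially

print(f"Implied total revenue, prior quarter: ${total_prev_quarter:.2f}B")
print(f"Implied total revenue, year ago:      ${total_year_ago:.2f}B")
print(f"Implied Data Center, prior quarter:   ${dc_prev_quarter:.2f}B")
print(f"Implied Gaming, prior quarter:        ${gaming_prev_quarter:.2f}B")
```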

For Q2, Nvidia expects revenue of approximately $28 billion, plus or minus 2%, with sequential growth expected across all market platforms.

Nvidia stock was up 5.9% after hours to $1,005.75 after the company announced a 10:1 stock split.
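
For readers who want the guidance range and the split in concrete terms, the arithmetic can be sketched as follows. The helper names are hypothetical, and the split-adjusted price simply divides the quoted after-hours price by the split ratio, ignoring any price movement before the split takes effect.

```python
def guidance_range(midpoint: float, tolerance_pct: float) -> tuple[float, float]:
    """Low and high ends of a 'midpoint plus or minus X%' revenue outlook."""
    delta = midpoint * tolerance_pct / 100
    return midpoint - delta, midpoint + delta


def split_adjusted_price(price: float, ratio: int) -> float:
    """Price per share after a ratio-for-one split, all else being equal."""
    return price / ratio


low, high = guidance_range(28.0, 2.0)               # Q2 outlook, billions of dollars
post_split = split_adjusted_price(1005.75, 10)      # quoted after-hours price, 10:1 split

print(f"Q2 revenue outlook: ${low:.2f}B to ${high:.2f}B")
print(f"Split-adjusted share price: ${post_split:.2f}")
```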

Important Disclosure: The author owns securities of Nvidia Corporation (NVDA). Not investment advice. Consult a professional investment advisor before making investment decisions.