Securing artificial intelligence (AI) and machine learning (ML) workflows is a complex challenge that can involve multiple components.

Seattle-based startup Protect AI is growing its platform solution to the challenge of securing AI today with the acquisition of privately-held Laiyer AI, which is the lead firm behind the popular LLM Guard open-source project. Financial terms of the deal are not being publicly disclosed. The acquisition will allow Protect AI to extend the capabilities of its AI security platform to better protect organizations against potential risks from the development and usage of large language models (LLMs).

The core commercial platform developed by Protect AI is called Radar, which provides visibility, detection and management capabilities for AI/ML models. The company raised $35 million in a Series A round of funding in July 2023 to help expand its AI security efforts.

“We want to drive the industry to adopt MLSecOps,” Daryan (D) Dehghanpisheh, president and founder of Protect AI, told VentureBeat. “The adoption of MLSecOps fundamentally helps you see, know and manage all forms of your AI risk and security vulnerabilities.”

What LLM Guard and Laiyer bring to Protect AI

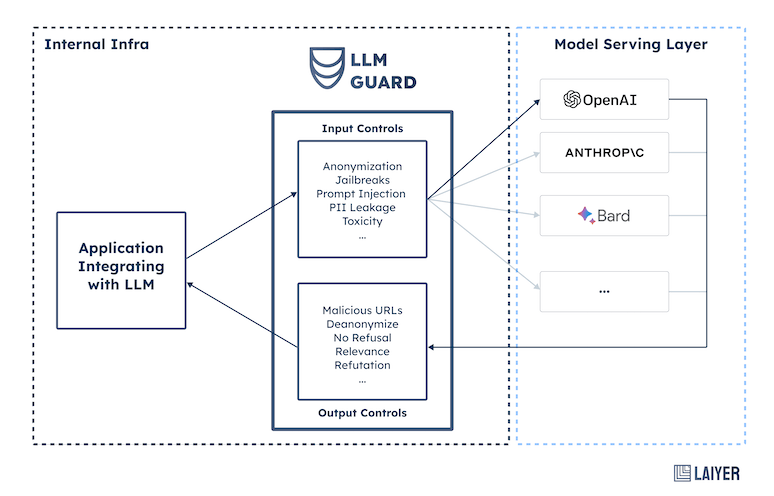

The LLM Guard open-source project that Laiyer AI leads helps to govern the usage of LLM operations.

LLM Guard has input controls to help protect against prompt injection attacks, which are an increasingly dangerous risk for AI usage. The open source technology also has input controls to help limit the risk of personally identifiable information (PII) leakage as well as toxic language. On the output side, LLM Guard can help protect users against various risks including malicious URLs.
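To make the idea of input and output controls concrete, here is a minimal sketch of the general pattern. It is not LLM Guard's API or implementation: the function names, regular expressions, phrase list and blocked domain are all hypothetical, and a production scanner would rely on trained classifiers rather than simple string matching.

```python
import re
from urllib.parse import urlparse

# All patterns, phrases, and domains below are illustrative assumptions,
# not rules taken from LLM Guard.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://[^\s)>\"']+")
INJECTION_PHRASES = ("ignore previous instructions", "disregard the system prompt")
BLOCKED_DOMAINS = {"malicious.example"}


def scan_input(prompt: str) -> tuple[str, bool]:
    """Redact email addresses and report whether the prompt looks safe to forward."""
    sanitized = EMAIL_RE.sub("[REDACTED_EMAIL]", prompt)
    lowered = sanitized.lower()
    is_safe = not any(phrase in lowered for phrase in INJECTION_PHRASES)
    return sanitized, is_safe


def scan_output(response: str) -> bool:
    """Return False if the response links to a domain on the denylist."""
    for url in URL_RE.findall(response):
        host = urlparse(url).hostname or ""
        if host in BLOCKED_DOMAINS:
            return False
    return True
```

In this sketch the input check runs before a prompt reaches the model and the output check runs before a response reaches the user, which mirrors the two sides of control described above.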

Dehghanpisheh emphasized that Protect AI remains committed to keeping the core LLM Guard technology open source. The plan is to develop a commercial offering called Laiyer AI that will provide additional performance and enterprise capabilities that aren’t present in the core open-source project.

Protect AI will also be working to integrate the LLM Guard technology into its broader platform approach to help protect AI usage from the model development and selection stage, right through to deployment.

Open source experience extends to vulnerability scanning

The approach of starting with an open-source effort and building it into a commercial product is something that Protect AI has done before.

The company also leads the ModelScan open source project, which helps to identify security risks in machine learning models. The ModelScan technology is the basis for Protect AI’s commercial Guardian technology, which was announced just last week on Jan. 24.

Scanning models for vulnerabilities is a complicated process. Unlike a traditional virus scanner in application software, there generally aren’t specific known vulnerabilities in ML model code to scan against.

“The thing you need to understand about a model is that it’s a self-executing piece of code,” Dehghanpisheh explained. “The problem is it’s really easy to embed executable calls in that model file that can be doing things that have nothing to do with machine learning.”

Those executable calls could potentially be malicious, which is a risk that Protect AI’s Guardian technology helps to identify.
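One common way this risk shows up is in model files saved with Python's pickle format, where loading the file can call any importable function. The sketch below illustrates the general idea of inspecting such a file without loading it. It is an assumption-laden illustration, not how ModelScan or Guardian work: the `HypotheticalPayload` class, the `find_suspicious_opcodes` helper and the choice of opcodes to flag are all made up for this example.

```python
import pickle
import pickletools

# Opcodes that let a pickle stream import and call arbitrary Python objects.
# Which opcodes to flag is an assumption made for this sketch.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


class HypotheticalPayload:
    """Stand-in for a tampered 'model' object (illustrative only)."""

    def __reduce__(self):
        # If this object were unpickled, pickle would call print(...) here.
        # An attacker could name any importable callable instead.
        return (print, ("this ran during model load",))


def find_suspicious_opcodes(data: bytes) -> list[str]:
    """List opcodes in the pickle stream that can trigger imports or calls."""
    return [
        op.name
        for op, _arg, _pos in pickletools.genops(data)
        if op.name in SUSPICIOUS_OPCODES
    ]


# Plain data serializes without any import or call opcodes.
benign = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
# The payload is only serialized and inspected here, never loaded.
tampered = pickle.dumps(HypotheticalPayload())

print(find_suspicious_opcodes(benign))
print(find_suspicious_opcodes(tampered))
```

The scan reads the opcode stream statically, so the embedded call is never executed. Legitimate models can also contain these opcodes, so a real scanner would need to check which functions are being referenced rather than flagging every occurrence.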

Protect AI’s growing platform ambitions

Having individual point products to help protect AI is only the beginning of Dehghanpisheh’s vision for Protect AI.

In the security space, organizations are likely already using any number of different technologies from multiple vendors. The goal is to bring Protect AI’s tools together with its Radar platform, which can also integrate into existing security tools that an organization might use, like SIEM (Security Information and Event Management) tools, which are commonly deployed in security operations centers (SOCs).

Dehghanpisheh explained that Radar helps to provide a bill of materials about what’s inside an AI model. Having visibility into the components that enable a model is critical for governance as well as security. With the Guardian technology, Protect AI is now able to scan models to identify potential risks before a model is deployed, while LLM Guard protects against usage risks. The overall platform goal for Protect AI is to have a full approach to enterprise AI security.

“You’ll be able to have one policy that you can invoke at an enterprise level that encompasses all forms of AI security,” he said.
