Apple Machine Studying analysis launched a set of Autoregressive Picture Fashions (AIM) earlier this 12 months. The gathering of various mannequin sizes, ranging from a couple of hundred million parameters, up to a couple billion.

The research geared toward visualizing the coaching performances of the fashions scaled in dimension. This text will discover the completely different experiments, datasets used, and derived conclusions. Nonetheless, first, we should perceive autoregressive modeling and its use in picture modeling.

About us: Viso Suite is a versatile and scalable infrastructure developed for enterprises to combine laptop imaginative and prescient into their tech ecosystems seamlessly. Viso Suite permits enterprise ML groups to coach, deploy, handle, and safe laptop imaginative and prescient functions in a single interface.

Autoregressive Fashions

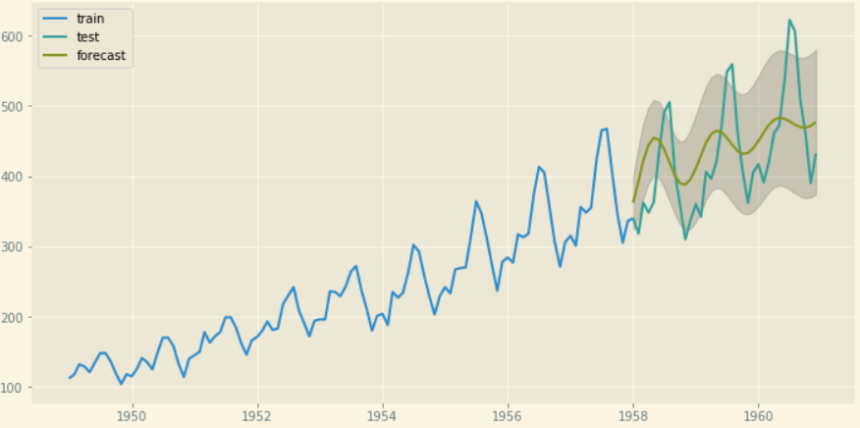

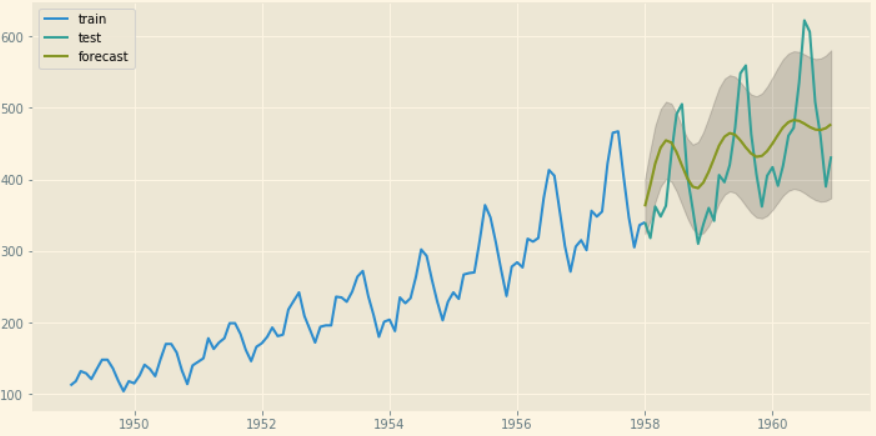

Autoregressive fashions are a household of fashions that use historic information to foretell future information factors. They study the underlying patterns of the info factors and their causal relationships to foretell future information factors. Widespread examples of autoregressive fashions embrace Autoregressive Built-in Transferring Common (ARIMA) and Seasonal Autoregressive Built-in Transferring Common (SARIMA). These fashions are largely utilized in time-series forecasting in gross sales and income.

Autoregressive Picture Fashions

Autoregressive Picture Modeling (AIM) makes use of the identical method however on picture pixels as information factors. The method divides the picture into segments and treats the segments as a sequence of information factors. The mannequin learns to foretell the following picture phase given the earlier information level.

Widespread fashions like PixelCNN and PixelRNN (Recurrent Neural Networks) use autoregressive modeling to foretell visible information by analyzing current pixel info. These fashions are utilized in functions reminiscent of picture enhancement. A few of these functions embrace upscaling and generative networks to create new photographs from scratch.

Pre-training Massive-Scale Autoregressive Picture Fashions

Pre-training an AI mannequin includes coaching a large-scale basis mannequin on an intensive and generic dataset. The coaching process can revolve round photographs or textual content relying on the duties the mannequin is meant to resolve.

Autoregressive picture fashions take care of picture datasets and are pre-trained on widespread datasets like MS COCO and ImageNet. The researchers at Apple used the DFN dataset launched by Fang et al. Let’s discover the dataset intimately.

Dataset

The dataset contains 12.8 billion image-text pairs filtered from the Widespread Crawl dataset (text-to-image fashions). This dataset is additional filtered to take away not protected for work content material, blur faces, and take away duplicated photographs. Lastly, alignment scores are calculated between the photographs and the captions and solely the highest 15% of information parts are retained. The ultimate subset incorporates 2 billion cleaned and filtered photographs, which the authors label as DFN 2B.

Structure

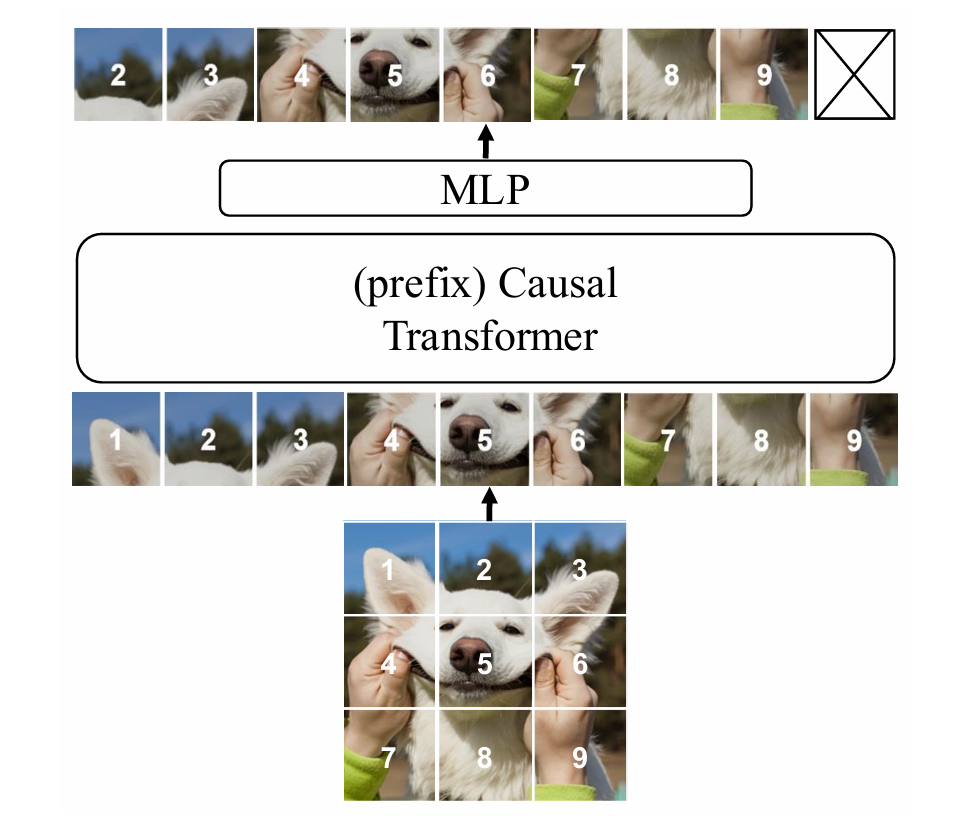

The coaching method stays the identical as that of ordinary autoregressive fashions. The enter picture is split into Ok equal elements and organized in a linear mixture to kind a sequence. Every picture phase acts as a token, and in contrast to language modeling, the structure offers with a set variety of segments.

The picture segments are handed to a transformer structure, which makes use of self-attention to grasp the pixel info. All future tokens are masked in the course of the self-attention mechanism to make sure the mannequin doesn’t ‘cheat’ in the course of the coaching.

A easy multi-layer perceptron is used because the prediction head on prime of the transformer implementation. The 12-block MLP community initiatives the patch options to pixel area for the ultimate predictions. This head is barely utilized throughout pre-training and changed throughout downstream duties in line with activity necessities.

Experimentation

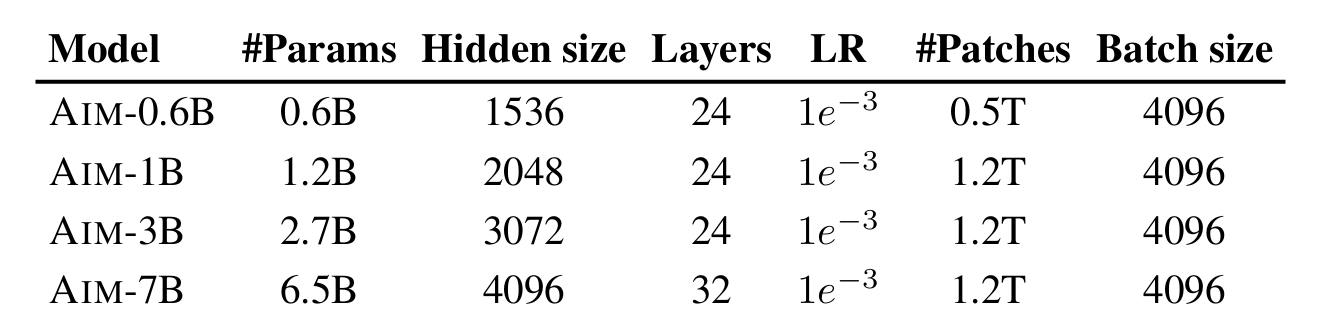

A number of variations of the Autoregressive Picture Fashions had been created with variations in peak and depth. The fashions are curated with completely different layers and completely different hidden models inside every layer. The mixtures are summarised within the desk under:

The coaching can also be carried out on different-sized datasets, together with the DFN-2B mentioned above and a mix of DFN-2B and IN-1k known as DFN-2B+.

Outcomes

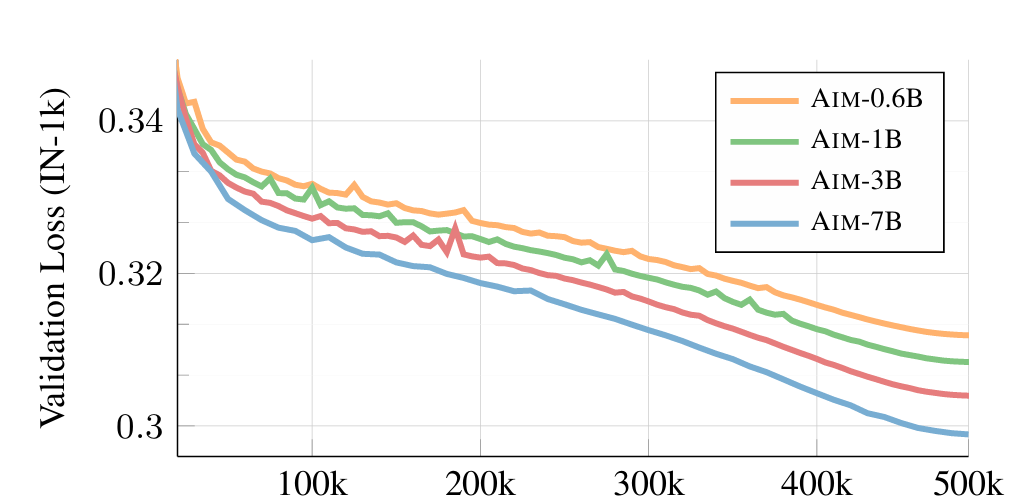

The completely different era fashions had been examined and noticed for efficiency throughout a number of iterations. The outcomes are as follows:

- Altering Mannequin Dimension: The experiment reveals that rising mannequin parameters barely improves the coaching efficiency. The loss reduces shortly, and the fashions carry out higher because the parameters enhance.

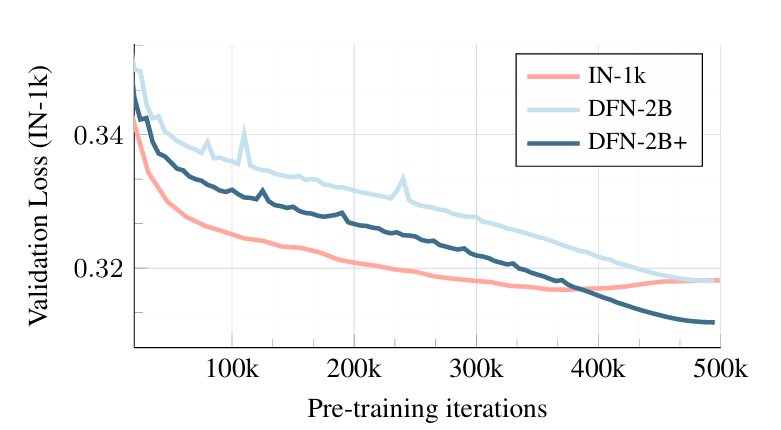

- Coaching Information Dimension: The AIM-0.6B mannequin is skilled in opposition to three dataset sizes to watch validation loss. The smallest information set IN-1k begins with a decrease validation loss that continues to lower however appears to bounce again after 375k iterations. The bounce again means that the mannequin has begun to overfit.

The bigger DFN-2B dataset begins with the next validation loss and reduces on the identical price because the earlier however doesn’t counsel overfitting. A mixed dataset (DFN-2B+) performs the most effective by surpassing the IN-1k dataset in validation loss and doesn’t overfit.

Conclusions

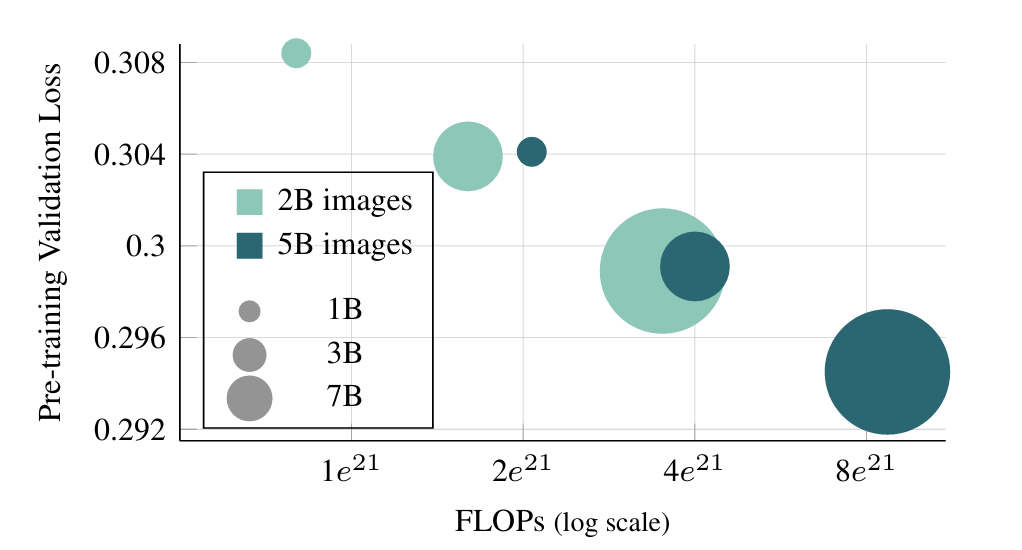

The experiments’ observations concluded that the proposed fashions scale effectively by way of efficiency. Coaching with a bigger dataset (massive photographs processed) carried out higher with rising iterations. The identical was noticed with rising mannequin capability (rising the variety of parameters).

General, the fashions displayed related traits as seen in Massive Language Fashions, the place bigger fashions show higher loss after quite a few iterations. Apparently sufficient, lower-capacity fashions skilled for an extended schedule obtain comparable validation loss in comparison with higher-capacity fashions skilled for a shorter schedule whereas utilizing an analogous quantity of FLOPs.

Efficiency Comparability on Downstream Duties

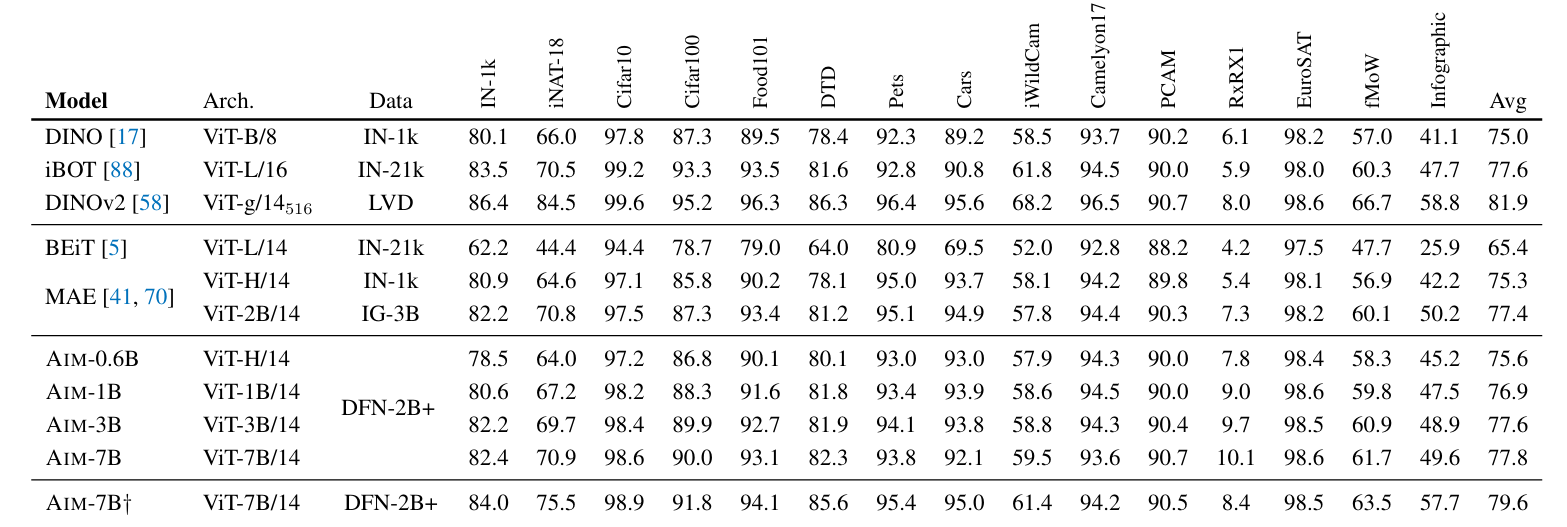

The AIM fashions had been in contrast in opposition to a variety of different generative and autoregressive fashions on a number of downstream duties. The outcomes are summarised within the desk under:

AIM outperforms most generative diffusion fashions reminiscent of BEiT and MAE for a similar capability and even bigger. It achieves related efficiency in comparison with the joint embedding fashions like DINO and iBOT and falls simply behind the rather more advanced DINOv2.

General, the AIM household offers the proper mixture of efficiency, accuracy, and scalability.

Abstract

The Autoregressive Picture Fashions (AIMs), launched by Apple analysis, show state-of-the-art scaling capabilities. The fashions are unfold throughout completely different parameter counts and every of them gives a steady pre-training expertise all through.

These AIM fashions use a transformer structure mixed with an MLP head for pretraining and are skilled on a cleaned-up dataset from the Information Filtering Networks (DFN). The experimentation section examined completely different mixtures of mannequin sizes and check units in opposition to completely different subsets of the principle information. In every state of affairs, the pre-training efficiency scaled fairly linearly with rising mannequin and information dimension.

The AIM fashions have distinctive scaling capabilities as noticed from their validation losses. The fashions additionally show aggressive efficiency in opposition to related picture era and joint embedding fashions and strike the proper steadiness between pace and accuracy.