One of many essential points for laptop imaginative and prescient (CV) builders is to keep away from overfitting. That’s a scenario when your CV mannequin works nice on the coaching knowledge however is inefficient in predicting the take a look at knowledge. The reason being that your mannequin is overfitting. To repair this downside you want regularization, most frequently finished by Batch Normalization (BN).

The regularization strategies allow CV fashions to converge sooner and forestall over-fitting. Due to this fact, the educational course of turns into extra environment friendly. There are a number of strategies for regularization and right here, we current the idea of batch normalization.

About us: Viso Suite is the pc imaginative and prescient resolution for enterprises. By masking all the ML pipeline in an easy-to-use no-code interface, groups can simply combine laptop imaginative and prescient into their enterprise workflows. To learn the way your crew can get began with laptop imaginative and prescient, e-book a demo of Viso Suite.

What’s Batch Normalization?

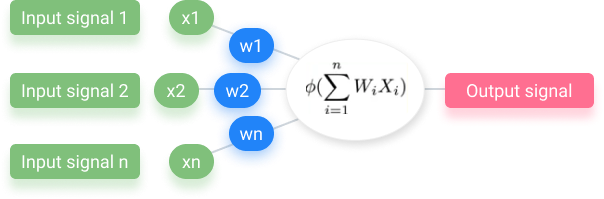

Batch normalization is a technique that may improve the effectivity and reliability of deep neural community fashions. It is rather efficient in coaching convolutional neural networks (CNN), offering sooner neural community convergence. As a supervised studying methodology, BN normalizes the activation of the interior layers throughout coaching. The following layer can analyze the info extra successfully by resetting the output distribution from the earlier layer.

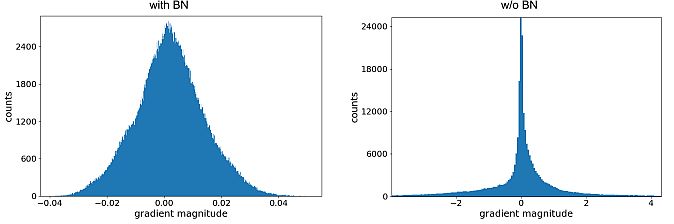

Inside covariate shift denotes the impact that parameters change within the earlier layers have on the inputs of the present layer. This makes the optimization course of extra advanced and slows down the mannequin convergence.

In batch normalization – the activation worth doesn’t matter, making every layer be taught individually. This method results in sooner studying charges. Additionally, the quantity of data misplaced between processing phases could lower. That may present a major enhance within the precision of the community.

How Does Batch Normalization Work?

The batch normalization methodology enhances the effectivity of a deep neural community by discarding the batch imply and dividing it into the batch commonplace deviation. The gradient descent methodology scales the outputs by a parameter if the loss perform is giant. Subsequently – it updates the weights within the subsequent layer.

Batch normalization goals to enhance the coaching course of and enhance the mannequin’s generalization functionality. It reduces the necessity for exact initialization of the mannequin’s weights and allows greater studying charges. That may speed up the coaching course of.

Batch normalization multiplies its output by an ordinary deviation parameter (γ). Additionally, it provides a imply parameter (beta) when utilized to a layer. Because of the coaction between batch normalization and gradient descent, knowledge could also be disarranged when adjusting these two weights for every output. In consequence, a discount of information loss and improved community stability shall be achieved by establishing the opposite related weights.

Generally, CV specialists apply batch normalization earlier than the layer’s activation. It’s usually used together with different regularization features. Additionally, deep studying strategies, together with picture classification, pure language processing, and machine translation make the most of batch normalization.

Batch Normalization in CNN Coaching

Inside Covariate Shift Discount

Google researchers Sergey Ioffe and Christian Szegedy defined the internal covariate shift as a change within the order of community activations as a result of change in community parameters throughout coaching. To enhance the coaching, they aimed to cut back the interior covariate shift. Their objective was to extend the coaching velocity by optimizing the distribution of layer inputs as coaching progressed.

Earlier researchers (Lyu, Simoncelli, 2008) utilized statistics over a single coaching instance, or, within the case of picture networks, over totally different characteristic maps at a given location. They wished to protect the data within the community. Due to this fact, they normalized the activations in a coaching pattern relative to the statistics of all the coaching dataset.

The gradient descent optimization doesn’t keep in mind the truth that the normalization will occur. Ioffe and Szegedy wished to make sure that the community at all times produces activations for parameter values. Because of the gradient loss, they utilized the normalization and calculated its dependence on the mannequin parameters.

Coaching and Inference with Batch-Normalized CNNs

Coaching may be extra environment friendly by normalizing activations that rely upon a mini-batch, however it isn’t essential throughout inference. (Mini-batch is a portion of the coaching dataset). The researchers wanted the output to rely solely on the enter. By making use of shifting averages, they tracked the accuracy of a mannequin, whereas it educated.

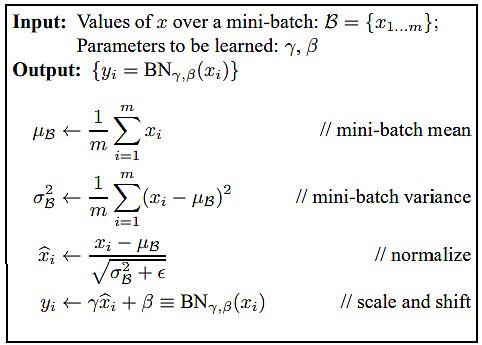

Normalization may be finished by making use of a linear transformation to every activation, as means and variances are mounted throughout inference. To batch-normalize a CNN, researchers specified a subset of activations and inserted the BN rework for every (Algorithm under).

Authors thought-about a mini-batch B of measurement m. They carried out normalization to every activation independently, by specializing in a selected activation x(ok) and omitting ok for readability. They bought m values for every activation within the mini-batch:

B = {x1…m}

They denoted normalized values as x1…m, and their linear transformations had been y1…m. Researchers have outlined the rework

BN γ,β : x1…m → y1…m

to be the Batch Normalizing rework. They carried out the BN Remodel algorithm given under. Within the algorithm, σ is a continuing added to the mini-batch variance for numerical stability.

The BN rework has been added to a community to govern all activations. By y = BN γ,β(x), researchers indicated that the parameters γ and β must be discovered. Nonetheless, they famous that the BN rework doesn’t independently course of the activation in every coaching instance.

Consequently, BN γ,β(x) relies upon each on the coaching instance and the opposite samples within the mini-batch. They handed the scaled and shifted values y to different community layers. The normalized activations xb had been inner to the transformation, however their presence was essential. The distributions of values of all xb had the anticipated worth of 0 and the variance of 1.

All layers that beforehand obtained x because the enter – now obtain BN(x). Batch normalization permits for the coaching of a mannequin utilizing batch gradient descent, or stochastic gradient descent with a mini-batch measurement m > 1.

Batch-Normalized Convolutional Networks

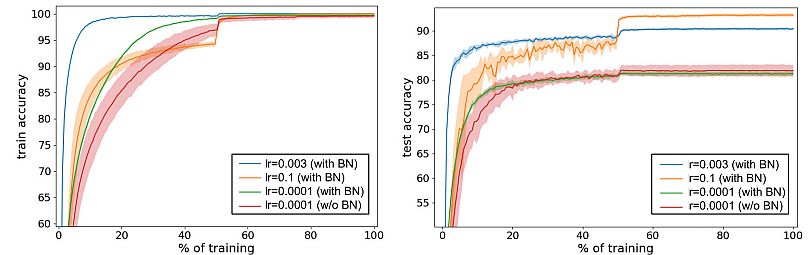

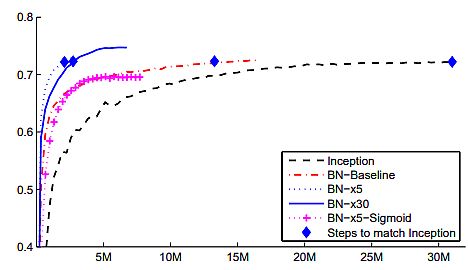

Szegedy et al., (2014) utilized batch normalization to create a brand new Inception community, educated on the ImageNet classification process. The community had a lot of convolutional and pooling layers.

- They included a SoftMax layer to foretell the picture class, out of 1000 potentialities. Convolutional layers embrace ReLU because the nonlinearity.

- The primary distinction between their CNN was that the 5 × 5 convolutional layers had been changed by two consecutive layers of three × 3 convolutions.

- By utilizing batch normalization researchers matched the accuracy of Inception in lower than half the variety of coaching steps.

- With slight modifications, they considerably elevated the coaching velocity of the community. BN-x5 wanted 14 instances fewer steps than Inception to succeed in 72.2% accuracy.

By growing the educational fee additional (BN-x30) they triggered the mannequin to coach slower firstly. Nonetheless, it was capable of attain the next closing accuracy. It reached 74.8% after 6·106 steps, i.e. 5 instances fewer steps than required by Inception.

Advantages of Batch Normalization

Batch normalization brings a number of advantages to the educational course of:

- Increased studying charges. The coaching course of is quicker since batch normalization allows greater studying charges.

- Improved generalization. BN reduces overfitting and improves the mannequin’s generalization capacity. Additionally, it normalizes the activations of a layer.

- Stabilized coaching course of. Batch normalization reduces the interior covariate shift occurring throughout coaching, bettering the soundness of the coaching course of. Thus, it makes it simpler to optimize the mannequin.

- Mannequin Regularization. Batch normalization treats the coaching instance along with different examples within the mini-batch. Due to this fact, the coaching community now not produces deterministic values for a given coaching instance.

- Lowered want for cautious initialization. Batch normalization decreases the mannequin’s dependence on the preliminary weights, making it simpler to coach.

What’s Subsequent?

Batch normalization gives an answer to handle challenges with coaching deep neural networks for laptop techniques. By normalizing the activations of every layer, batch normalization permits for smoother and extra steady optimization, leading to sooner convergence and improved generalization efficiency. As a result of it will probably mitigate points like inner covariate shifts it allows the event of extra strong and environment friendly neural community architectures.

For different related subjects in laptop imaginative and prescient, take a look at our different blogs: