Katja Grace, 4 August 2022

AI Impacts just finished collecting data from a new survey of ML researchers, as similar to the 2016 one as practical, aside from a couple of new questions that seemed too interesting not to add.

This page reports on it preliminarily, and we’ll be adding more details there. But so far, here are some things that might interest you:

- 37 years until a 50% chance of HLMI (high-level machine intelligence) according to a complicated aggregate forecast (and, biasedly, not including data from questions about the conceptually similar Full Automation of Labor, which in 2016 prompted strikingly later estimates). This 2059 aggregate HLMI timeline has become about eight years shorter in the six years since 2016, when the aggregate prediction was 2061, or 45 years out. Note that all of these estimates are conditional on “human scientific activity continu[ing] without major negative disruption.”

- P(extremely bad outcome) = 5%: the median respondent believes the probability that the long-run effect of advanced AI on humanity will be “extremely bad (e.g., human extinction)” is 5%. This is the same as it was in 2016 (though Zhang et al 2022 found 2% in a similar but non-identical question). Many respondents put the chance substantially higher: 48% of respondents gave at least a 10% chance of an extremely bad outcome. At the other end, 25% put it at 0%.

- Explicit P(doom) = 5–10%: the levels of badness involved in that last question seemed ambiguous in retrospect, so I added two new questions explicitly about human extinction. The median respondent’s probability of x-risk from humans failing to control AI was 10%, weirdly higher than the median probability of human extinction from AI in general, at 5%. This might just be because different people got these questions and the median is quite near the divide between 5% and 10%. The most interesting thing here is probably that these are both very high: it seems the ‘extremely bad outcome’ numbers in the previous question were not just catastrophizing merely disastrous AI outcomes.

- Support for AI safety research is up: 69% of respondents believe society should prioritize AI safety research “more” or “much more” than it is currently prioritized, up from 49% in 2016.

- The median respondent thinks there is an “about even chance” that an argument given for an intelligence explosion is broadly correct. The median respondent also believes machine intelligence will probably (60%) be “vastly better than humans at all professions” within 30 years of HLMI, and that the rate of global technological improvement will probably (80%) dramatically increase (e.g., by a factor of ten) as a result of machine intelligence within 30 years of HLMI.

- Years/probabilities framing effect persists: if you ask people for probabilities of things occurring within a fixed number of years, you get later estimates than if you ask for the number of years until a fixed probability will obtain. This looked very robust in 2016, and shows up again in the 2022 HLMI data. Looking at just the people we asked for years, the aggregate forecast is 29 years, whereas it is 46 years for those asked for probabilities. (We haven’t checked in other data or for the larger framing effect yet.)
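The timeline numbers in the first bullet, and the framing gap in the 2022 HLMI data, work out as follows. The horizon shrank by eight years, but six of those are just the time that passed between the two surveys, so the forecast date itself moved only two years earlier:

$$
\begin{aligned}
\text{2016 survey: } & 2061 - 2016 = 45 \text{ years out} \\
\text{2022 survey: } & 2059 - 2022 = 37 \text{ years out} \\
\text{change in horizon: } & 45 - 37 = 8 \text{ years shorter} \\
\text{change in forecast date: } & 2061 - 2059 = 2 \text{ years earlier} \\
\text{framing gap (2022 HLMI): } & 46 - 29 = 17 \text{ years}
\end{aligned}
$$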

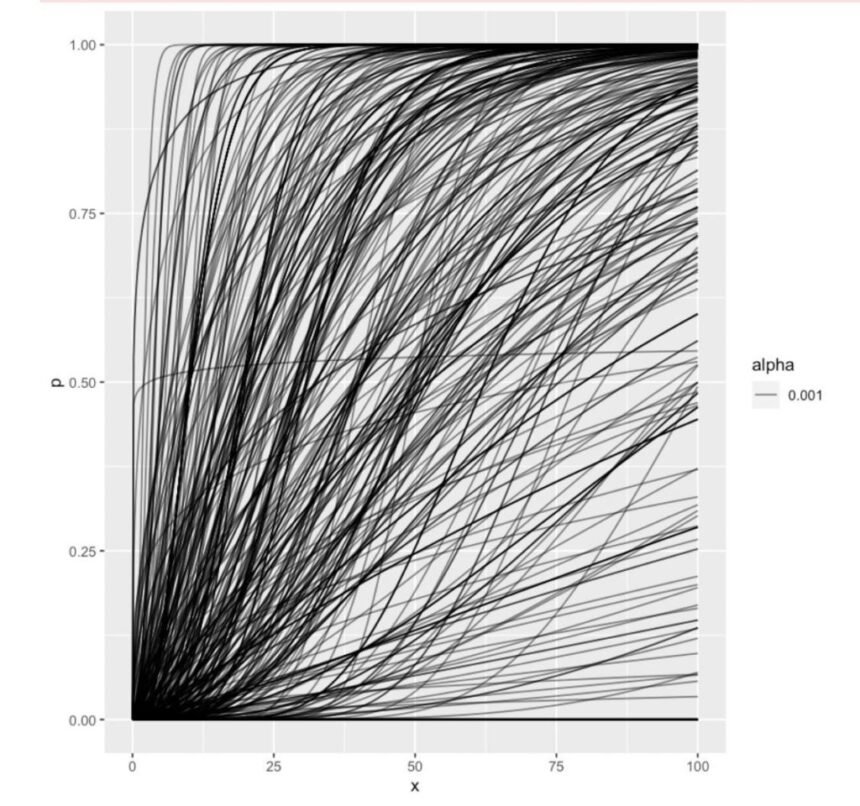

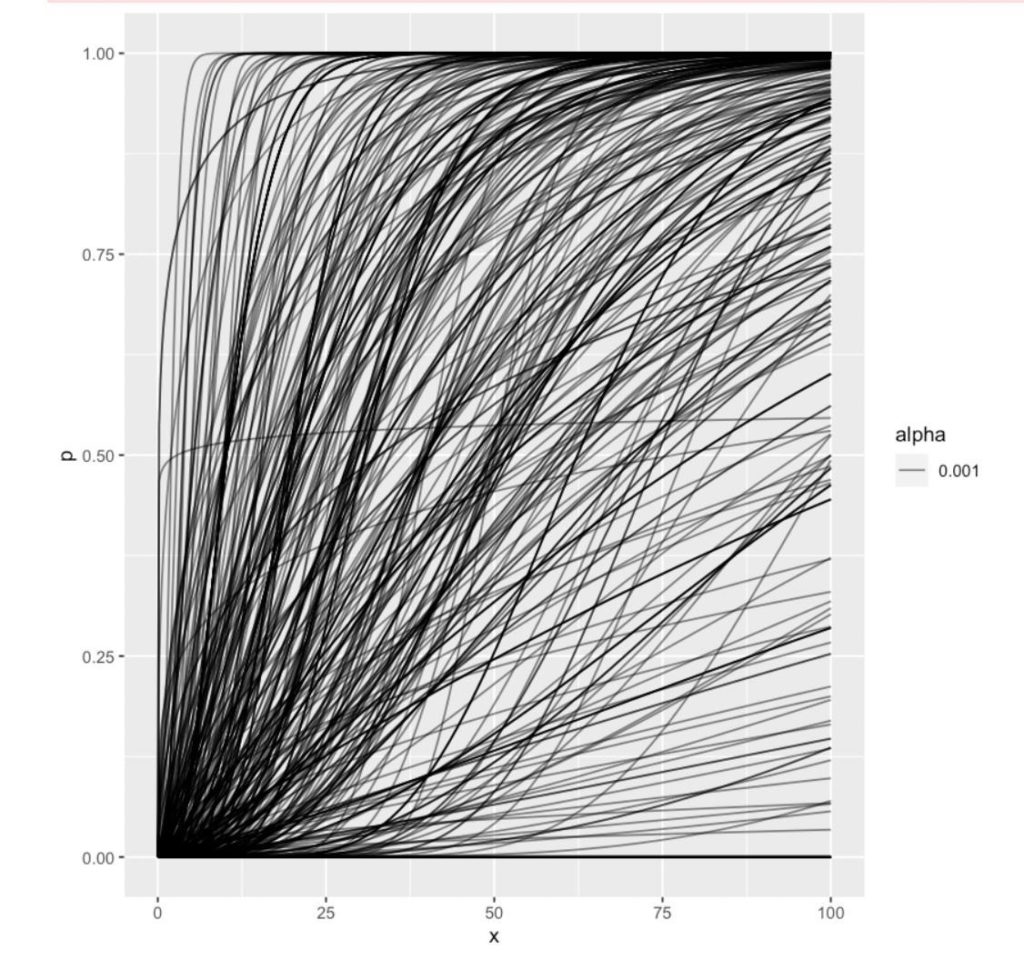

- Predictions vary a lot. Pictured below: the attempted reconstructions of people’s probabilities of HLMI over time, which feed into the aggregate number above. There are few combinations of time and probability that nobody basically endorses.

- You can download the data here (slightly cleaned and anonymized) and do your own analysis. (If you do, I encourage you to share it!)
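For anyone doing their own analysis: the “median respondent” figures and threshold shares in the list above are simple order statistics over individual answers. Below is a minimal Python sketch. It assumes the answers have already been extracted as probabilities between 0 and 1; the data file’s actual layout isn’t described here, and the names `summarize`, `group_a` and `group_b` are hypothetical. The numbers are made up, not survey data. The sketch also illustrates the point about the two extinction questions: when many answers sit exactly at 5% or 10%, moving a single respondent can flip the median.

```python
from statistics import median

# Made-up answers (probabilities in [0, 1]) from two randomly assigned groups,
# one per question wording. Illustrative only, NOT survey data.
# group_b is group_a with a single respondent moved from 5% to 10%.
group_a = [0.0, 0.01, 0.05, 0.05, 0.05, 0.10, 0.10, 0.20, 0.50]
group_b = [0.0, 0.01, 0.05, 0.05, 0.10, 0.10, 0.10, 0.20, 0.50]


def summarize(answers):
    """Median answer plus the shares of answers at >= 10% and at exactly 0%."""
    n = len(answers)
    return {
        "median": median(answers),
        "share_at_least_10pct": sum(p >= 0.10 for p in answers) / n,
        "share_zero": sum(p == 0.0 for p in answers) / n,
    }


for name, answers in [("group_a", group_a), ("group_b", group_b)]:
    print(name, summarize(answers))
```

With nine answers each, `group_a` has a median of 5% and `group_b` a median of 10%, even though the two groups differ by only one answer.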

The survey had a lot of questions (randomized between participants to make it a reasonable length for any given person), so this blog post doesn’t cover much of it. A bit more is on the page, and more will be added.

Thanks to many people for help and support with this project! (Many, but probably not all, are listed on the survey page.)

Cover image: probably a bootstrap confidence interval around an aggregate of the above forest of inferred gamma distributions, but honestly everyone who could be sure about that sort of thing went to bed a while ago. So, one for a future update. I have more confidently held views on whether one should let uncertainty be the enemy of putting things up.
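For readers curious about the mechanics behind an aggregate of gamma distributions and a bootstrap interval around it, here is a minimal Python sketch of one way to do it. It assumes, as the caption suggests, one gamma distribution per respondent. It also assumes an equal-weight average of those CDFs as the aggregate, and a percentile bootstrap that resamples respondents to get the interval. This is not AI Impacts’ analysis code. The helper names (`gamma_cdf`, `mixture_cdf`, `years_until`, `bootstrap_interval`) are hypothetical, and the (shape, scale) pairs are made up. The sketch also leaves out the step of fitting each gamma to a respondent’s stated year/probability answers.

```python
import math
import random


def gamma_cdf(t, shape, scale):
    """P(T <= t) for T ~ Gamma(shape, scale): the regularized lower incomplete
    gamma function P(shape, t / scale), evaluated with the usual series /
    continued-fraction split (as in Numerical Recipes)."""
    if t <= 0:
        return 0.0
    a, x = shape, t / scale
    log_prefactor = a * math.log(x) - x - math.lgamma(a)  # log(x^a e^-x / Gamma(a))
    if x < a + 1.0:
        # Series: P(a, x) = prefactor * sum_{n>=0} x^n / (a (a+1) ... (a+n)).
        term = total = 1.0 / a
        ap = a
        for _ in range(10_000):
            ap += 1.0
            term *= x / ap
            total += term
            if term < total * 1e-15:
                break
        return total * math.exp(log_prefactor)
    # Lentz continued fraction for the upper tail Q(a, x); then P = 1 - Q.
    tiny = 1e-300
    b = x + 1.0 - a
    c, d = 1.0 / tiny, 1.0 / b
    h = d
    for i in range(1, 10_000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        d = d if abs(d) >= tiny else tiny
        c = b + an / c
        c = c if abs(c) >= tiny else tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-15:
            break
    return 1.0 - math.exp(log_prefactor) * h


def mixture_cdf(t, fits):
    """Aggregate CDF at t: the equal-weight average of every respondent's CDF."""
    return sum(gamma_cdf(t, shape, scale) for shape, scale in fits) / len(fits)


def years_until(p, fits, lo=0.0, hi=1000.0, tol=1e-4):
    """Bisection for the years (from the survey date) until the aggregate CDF reaches p."""
    if mixture_cdf(hi, fits) < p:
        return math.inf  # never reaches p within the search range
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mixture_cdf(mid, fits) < p:
            lo = mid
        else:
            hi = mid
    return hi


def bootstrap_interval(fits, p=0.5, n_boot=200, level=0.90, seed=0):
    """Percentile bootstrap: resample respondents with replacement, recompute
    years_until each time, and return the central `level` interval."""
    rng = random.Random(seed)
    estimates = sorted(
        years_until(p, rng.choices(fits, k=len(fits))) for _ in range(n_boot)
    )
    tail = (1.0 - level) / 2
    return (estimates[round(tail * (n_boot - 1))],
            estimates[round((1.0 - tail) * (n_boot - 1))])


# Hypothetical (shape, scale in years) fits for five respondents. Made up, NOT survey data.
fits = [(2.0, 10.0), (3.0, 15.0), (1.5, 40.0), (4.0, 5.0), (2.5, 30.0)]

median_year = years_until(0.5, fits)
low, high = bootstrap_interval(fits)
print(f"aggregate 50% point: {median_year:.1f} years out "
      f"(90% bootstrap interval {low:.1f} to {high:.1f})")
```

The gamma CDF is written out so the sketch needs only the standard library. With SciPy available, `scipy.stats.gamma.cdf(t, shape, scale=scale)` computes the same quantity.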