AI models such as ChatGPT and Gemini, along with other modern counterparts, have changed how we interact with technology.

As artificial intelligence systems grow more sophisticated, researchers are focusing on their ability to retrieve factual, up-to-date information for their responses. The framework known as Retrieval-Augmented Generation (RAG) marks a critical stage in the development of large language models (LLMs).

In this article, we explore what RAG is, how it improves natural language processing, and why it’s becoming essential for building intelligent, trustworthy AI systems.

What Is RAG in AI?

RAG (Retrieval-Augmented Generation) is a hybrid approach that combines retrieval systems with generative models to produce responses. The system lets the AI retrieve relevant external information, which it then uses to create accurate, context-specific responses. RAG models improve on traditional approaches because they consult a knowledge base at query time, which boosts reliability.

So, when someone asks, “What is RAG?”, the simplest answer is: it’s a technique that strengthens AI generation by adding a retrieval mechanism, bridging the gap between static model knowledge and dynamic, real-world data.

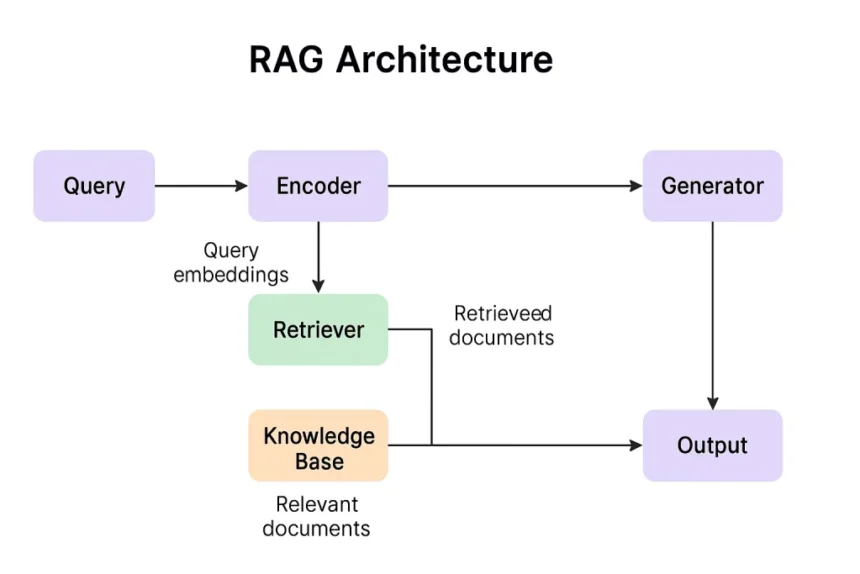

Key Components of RAG Architecture

Let’s break down the RAG architecture further:

| Component | Description |
| --- | --- |
| Encoder | Converts the input query into vector embeddings. |
| Retriever | Matches query embeddings with document embeddings using similarity search. |
| Generator | Synthesizes output by attending to both the query and the retrieved passages. |
| Knowledge Base | Static or dynamic database (e.g., Wikipedia, PDF corpus, proprietary data). |

This modular structure allows the RAG model to be updated and adapted across various domains without retraining the entire model.


How Does the RAG Model Work?

The Retrieval-Augmented Generation (RAG) model enhances traditional language generation by incorporating external document retrieval. Its architecture consists of two primary components:

- Retriever: This module searches a large knowledge base (like Wikipedia or proprietary datasets) for relevant documents or text chunks using embeddings and similarity scores.

- Generator: Based on the retrieved documents, the generator (usually a sequence-to-sequence model like BART or T5) creates a response that combines the user’s query with the fetched context.
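The two-component split can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Retriever` and `Generator` type aliases, the word-overlap retriever, and the template-based generator are all assumptions made for the example, standing in for an embedding-based retriever and a real sequence-to-sequence model.

```python
from typing import Callable, List

# Hypothetical interfaces: a retriever maps a query to passages,
# a generator maps (query, passages) to an answer string.
Retriever = Callable[[str], List[str]]
Generator = Callable[[str, List[str]], str]


def keyword_retriever(knowledge_base: List[str], k: int = 2) -> Retriever:
    """Toy retriever: rank passages by how many query words they share."""
    def retrieve(query: str) -> List[str]:
        query_words = set(query.lower().split())
        ranked = sorted(
            knowledge_base,
            key=lambda passage: len(query_words & set(passage.lower().split())),
            reverse=True,
        )
        return ranked[:k]
    return retrieve


def template_generator(query: str, passages: List[str]) -> str:
    """Stand-in for a seq2seq model: it only stitches the context together."""
    context = " ".join(passages)
    return f"Q: {query} | Context: {context}"


def rag_answer(query: str, retrieve: Retriever, generate: Generator) -> str:
    passages = retrieve(query)        # component 1: fetch supporting text
    return generate(query, passages)  # component 2: condition the output on it


kb = [
    "Diabetes symptoms include increased thirst and frequent urination.",
    "BART is a sequence-to-sequence model.",
    "Paris is the capital of France.",
]
answer = rag_answer("symptoms of diabetes", keyword_retriever(kb, k=1), template_generator)
print(answer)
```

Because the retriever and generator only meet through `rag_answer`, either one can be swapped out (for example, a different knowledge base) without touching the other.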

Detailed Steps of RAG Model Architecture

1. User Input / Query Encoding

- A user submits a query (e.g., “What are the symptoms of diabetes?”).

- The query is encoded into a dense vector representation using a pre-trained encoder (like BERT or DPR).

2. Document Retrieval

- The encoded query is passed to a retriever (typically a dense passage retriever).

- The retriever searches an external knowledge base (e.g., Wikipedia, company docs) and returns the top-k relevant documents.

- Retrieval is based on the similarity between the vector embeddings of the query and the documents.

Benefit: The model can access real-world, up-to-date information beyond its static training data.
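Steps 1 and 2 can be illustrated with a small, dependency-free sketch. It assumes a toy word-count vector in place of a learned dense embedding from BERT or DPR, and the helper names (`tokenize`, `embed`, `top_k`) and sample documents are invented for the example. Only the similarity search itself mirrors what a dense retriever does.

```python
import math
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase and strip basic punctuation from each whitespace token."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Assign each distinct word a fixed vector index."""
    vocab: Dict[str, int] = {}
    for text in texts:
        for word in tokenize(text):
            vocab.setdefault(word, len(vocab))
    return vocab


def embed(text: str, vocab: Dict[str, int]) -> List[float]:
    """Toy embedding (assumption): word counts instead of a learned encoder."""
    vec = [0.0] * len(vocab)
    for word in tokenize(text):
        if word in vocab:
            vec[vocab[word]] += 1.0
    return vec


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, documents: List[str], k: int) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    vocab = build_vocab(documents)
    query_vec = embed(query, vocab)
    scored = [(cosine(query_vec, embed(doc, vocab)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


documents = [
    "Common symptoms of diabetes include thirst and fatigue.",
    "Diabetes is managed with diet, exercise, and medication.",
    "Transformers power modern NLP models.",
]
results = top_k("What are the symptoms of diabetes?", documents, k=2)
for score, doc in results:
    print(f"{score:.3f}  {doc}")
```

In a real system the embeddings would be precomputed and stored in a vector index, so that only the query is encoded at request time.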

3. Contextual Fusion

- The retrieved documents are combined with the original query.

- Each document-query pair is treated as a separate input for generation.
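As a rough sketch of this fusion step, each retrieved document can be paired with the query to form one generator input. The `context: ... question: ...` template below is an assumption chosen for illustration; actual RAG implementations define their own input format.

```python
from typing import List


def fuse(query: str, documents: List[str]) -> List[str]:
    """Build one generator input per (document, query) pair."""
    # The prompt layout is hypothetical; real systems use their own template.
    return [f"context: {doc} question: {query}" for doc in documents]


retrieved = [
    "Common symptoms of diabetes include thirst and fatigue.",
    "Diabetes is managed with diet, exercise, and medication.",
]
inputs = fuse("What are the symptoms of diabetes?", retrieved)
for text in inputs:
    print(text)
```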

4. Text Generation

- A sequence-to-sequence generator model (like BART or T5) takes the query and each document to generate candidate responses.

- These responses are fused using:

  - Marginalization: Weighted averaging of outputs.

  - Ranking: Selecting the best output using confidence scores.
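The two fusion strategies can be compared on made-up numbers. In this sketch the retrieval scores and per-document answer probabilities are invented, the document weights are obtained with a softmax over retrieval scores, and the "confidence" used for ranking is assumed to be the document weight times the answer probability. None of these values come from a real model.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Turn raw retrieval scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def marginalize(doc_scores: List[float],
                answer_probs: List[Dict[str, float]]) -> Dict[str, float]:
    """Weighted average: sum over documents of weight * p(answer | query, doc)."""
    combined: Dict[str, float] = defaultdict(float)
    for weight, probs in zip(softmax(doc_scores), answer_probs):
        for answer, p in probs.items():
            combined[answer] += weight * p
    return dict(combined)


def rank_best(doc_scores: List[float],
              answer_probs: List[Dict[str, float]]) -> Tuple[str, float]:
    """Ranking: keep the single (answer, confidence) pair with the top score."""
    best_answer, best_conf = "", 0.0
    for weight, probs in zip(softmax(doc_scores), answer_probs):
        for answer, p in probs.items():
            conf = weight * p  # assumed confidence definition
            if conf > best_conf:
                best_answer, best_conf = answer, conf
    return best_answer, best_conf


# Hypothetical inputs: three retrieved documents, two candidate answers.
doc_scores = [2.0, 1.0, 0.0]
answer_probs = [
    {"increased thirst": 0.7, "fatigue": 0.3},
    {"increased thirst": 0.6, "fatigue": 0.4},
    {"increased thirst": 0.1, "fatigue": 0.9},
]

marginal = marginalize(doc_scores, answer_probs)
best = rank_best(doc_scores, answer_probs)
print(max(marginal, key=marginal.get), best[0])
```

Marginalization lets several moderately relevant documents jointly support an answer, while ranking trusts the single strongest document-answer pair.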

5. Final Output

- A single coherent, fact-based answer is generated, grounded in the retrieved context.

Why Use RAG in Large Language Models?

RAG LLMs offer major advantages over conventional generative AI:

- Factual Accuracy: RAG grounds its responses in external data, reducing AI hallucination.

- Up-to-Date Responses: It can draw on current data, unlike traditional LLMs, which are limited by their pre-training cutoffs.

- Domain Adaptability: It is easily adapted to specific industries by modifying the underlying knowledge base.

These benefits make RAG LLM frameworks ideal for enterprise applications, technical customer support, and research tools.


Applications of RAG in Real-World AI

RAG is already being adopted in several impactful AI use cases:

1. Advanced Chatbots and Virtual Assistants: By retrieving relevant information in real time, RAG enables conversational agents to provide accurate, context-rich answers, especially in sectors like healthcare, finance, and legal services.

2. Enterprise Knowledge Retrieval: Organizations use RAG-based models to connect internal document repositories with conversational interfaces, making knowledge accessible across teams.

3. Automated Research Assistants: In academia and R&D, RAG models help summarize research papers, answer technical queries, and generate new hypotheses based on existing literature.

4. SEO and Content Creation: Content teams can use RAG to generate blog posts, product descriptions, and answers that are factually grounded in trusted sources, which makes it well suited to AI-powered content strategy.

Challenges of Using the RAG Model

Despite its advantages, RAG comes with certain limitations:

- Retriever Precision: If irrelevant documents are retrieved, the generator may produce off-topic or incorrect answers.

- Computational Complexity: Adding a retrieval step increases inference time and resource usage.

- Knowledge Base Maintenance: The accuracy of responses depends heavily on the quality and freshness of the knowledge base.
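One common way to quantify the retriever precision concern is precision@k, the fraction of the top-k retrieved documents that are actually relevant. The sketch below assumes relevance labels are available as a set of document IDs; the IDs themselves are made up for the example.

```python
from typing import List, Set


def precision_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k retrieved document IDs that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k


# Hypothetical ranking from a retriever and hand-labeled relevant documents.
retrieved_ids = ["d1", "d7", "d3", "d9"]
relevant_ids = {"d1", "d3", "d4"}
print(precision_at_k(retrieved_ids, relevant_ids, k=4))
```

Tracking a metric like this over a labeled query set gives an early signal when retrieval quality, rather than the generator, is the source of bad answers.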


Future of Retrieval-Augmented Generation

The evolution of RAG architecture will likely involve:

- Real-Time Web Retrieval: Future RAG models may access live data directly from the internet for even more current responses.

- Multimodal Retrieval: Combining text, images, and video for richer, more informative outputs.

- Smarter Retrievers: Using improved dense vector search and transformer-based retrievers to improve relevance and efficiency.

Conclusion

Retrieval-Augmented Generation (RAG) is transforming how AI models interact with knowledge. By combining powerful generation capabilities with real-time data retrieval, the RAG model addresses major shortcomings of standalone language models.

As large language models become central to tools like customer support bots, research assistants, and AI-powered search, understanding the RAG LLM architecture is essential for developers, data scientists, and AI enthusiasts alike.

Frequently Asked Questions

Q1. What does RAG stand for in machine learning?

RAG stands for Retrieval-Augmented Generation. It refers to a model architecture that combines document retrieval with text generation to improve the factual accuracy of AI responses.

Q2. How is the RAG model different from traditional LLMs?

Unlike traditional LLMs, which rely solely on their training data, the RAG model retrieves external content at query time to generate more accurate, up-to-date, and grounded responses.

Q3. What are the components of RAG architecture?

RAG architecture consists of an encoder, a retriever, a generator, and a knowledge base. The retriever fetches relevant documents, and the generator uses them to create context-aware outputs.

Q4. Where is RAG used in real-world applications?

RAG is used in AI chatbots, enterprise knowledge management, academic research assistants, and content generation tools for accurate, domain-specific responses.

Q5. Can RAG models be fine-tuned for specific domains?

Yes, RAG models can be tailored to specific industries by updating the knowledge base and adjusting the retriever to match domain-specific terminology.
