Ever wondered how Google understands your searches so well?

The key lies in BERT, a powerful AI language model that helps computers understand words in context.

Unlike older models that read text in one direction, BERT looks at both sides of a word to grasp its meaning. Let’s explore how it works and why it’s a game-changer for natural language processing.

What is BERT?

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model developed by Google AI in 2018.

Unlike earlier models that processed text in a single direction, BERT reads text bidirectionally, allowing it to understand the context of a word based on both the words that precede it and the words that follow it.

Key features of BERT include:

- Bidirectional Context: By analyzing text from both directions, BERT captures the full context of a word, leading to a deeper understanding of language.

- Transformer Architecture: BERT uses Transformers, which are models designed to handle sequential data by attending to the relationships between all words in a sentence simultaneously.

- Pre-training and Fine-tuning: BERT is first pre-trained on large text datasets to learn language patterns. It can then be fine-tuned for specific tasks like question answering or sentiment analysis, improving its performance in a variety of applications.

BERT’s bidirectional approach is crucial in natural language processing (NLP) because it enables models to determine the meaning of a word from its context.

This results in more accurate interpretations, particularly in complex sentences where the meaning of a word may be affected by the words both preceding and following it.

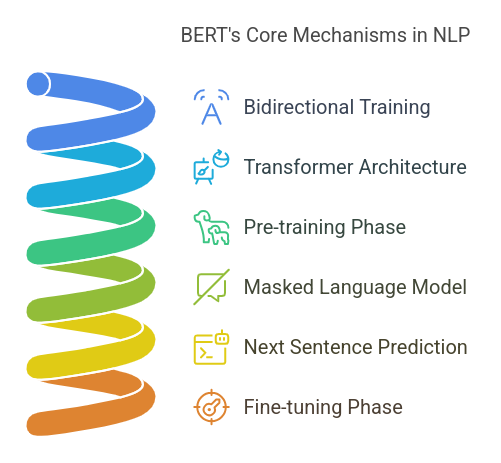

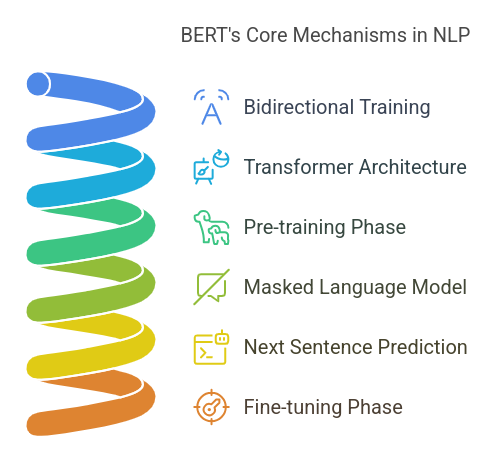

How BERT Works: The Core Mechanisms

BERT is a groundbreaking model in natural language processing (NLP) that has significantly improved how well machines understand human language. Let’s go through its core mechanisms step by step:

1. Bidirectional Training: Understanding Context from Both Left and Right

Most traditional language models process text unidirectionally, either left-to-right or right-to-left. BERT, by contrast, uses bidirectional training and can therefore look at the whole context of a word, taking into account both what comes before it and what follows it. This allows BERT to interpret each word in light of the full sentence.
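To make the difference concrete, here is a minimal toy sketch of which neighbouring words are available when encoding a single word under each scheme. The function name `visible_context` and the example sentence are illustrative assumptions; a real BERT implementation expresses the same idea through attention masks rather than list slicing.

```python
def visible_context(tokens, position, bidirectional):
    """Return the tokens a model may look at when encoding tokens[position].

    Toy illustration only (hypothetical helper, not part of any BERT library).
    """
    left = tokens[:position]
    # A left-to-right model sees nothing to the right of the current word.
    right = tokens[position + 1:] if bidirectional else []
    return left + right


tokens = ["the", "bank", "of", "the", "river"]

# Context available for the ambiguous word "bank" (index 1).
left_to_right = visible_context(tokens, 1, bidirectional=False)
both_sides = visible_context(tokens, 1, bidirectional=True)

print("left-to-right:", left_to_right)
print("bidirectional:", both_sides)
```

Only the bidirectional view includes "river", which is the word that tells us "bank" refers to a riverbank rather than a financial institution.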

2. Transformer Architecture: Self-Attention Mechanism for Contextual Learning

At the core of BERT’s architecture is the Transformer model, which uses a self-attention mechanism. This mechanism enables BERT to weigh the importance of every word in a sentence relative to the others, giving it a deeper understanding of context and of the relationships between words.
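The weighting described above can be sketched as scaled dot-product self-attention. This is a simplified illustration, not BERT's actual implementation: it assumes a single attention head, uses the input vectors directly as queries, keys, and values (real Transformers apply learned projections first), and the two-dimensional `embeddings` are made-up numbers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Single-head scaled dot-product self-attention.

    Simplifying assumption: queries, keys, and values are the input vectors
    themselves (identity projections).
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this word to every word in the sentence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: weighted average of all word vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three made-up 2-dimensional word vectors.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual, attention = self_attention(embeddings)

for row in attention:
    print([round(w, 3) for w in row])
```

Each row of `attention` shows how much one word draws on every word in the sentence, including words to its right, which is what makes the resulting representation contextual.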

3. Pre-training and Fine-tuning: Two-Step Learning Process

BERT learns in two steps:

Pre-training: In this phase, BERT is trained on large text corpora using two unsupervised tasks:

- Masked Language Modeling (MLM): Some of the words in a sentence are randomly masked, and BERT learns to predict them from the surrounding context.

- Next Sentence Prediction (NSP): BERT learns to predict whether one sentence actually follows another, which helps it model relationships between sentences.

Fine-tuning: After pre-training, BERT is fine-tuned on specific tasks, such as sentiment analysis or question answering, by adding task-specific layers and training on smaller, task-specific datasets.
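As a rough sketch of what a task-specific layer can look like, the snippet below applies a small linear classifier with a softmax to a sentence vector. Everything here is an assumption for illustration: `cls_vector` stands in for the encoder's output for a sentence, and the weights are invented rather than learned. During real fine-tuning, these weights and the encoder itself would be updated on labeled examples.

```python
import math


def classify(sentence_vector, weights, biases):
    """Linear layer followed by softmax: one probability per class."""
    logits = [
        sum(w * x for w, x in zip(row, sentence_vector)) + b
        for row, b in zip(weights, biases)
    ]
    highest = max(logits)
    exps = [math.exp(value - highest) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Stand-in for the encoder output of one sentence (made-up numbers).
cls_vector = [0.2, -0.1, 0.4, 0.3]

# Hypothetical two-class head, e.g. negative vs. positive sentiment.
weights = [[0.5, -0.2, 0.1, 0.0],
           [-0.3, 0.4, 0.2, 0.1]]
biases = [0.0, 0.1]

probs = classify(cls_vector, weights, biases)
print([round(p, 3) for p in probs])
```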

4. Masked Language Model (MLM): Predicting Missing Words in a Sentence

During pre-training, BERT uses the MLM task, in which about 15% of the words (more precisely, tokens) in the input are randomly masked and the model learns to predict them from the context provided by the remaining words. This process helps BERT develop a deep understanding of language patterns and word relationships.
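The masking step can be sketched as follows. The 15% rate comes from the text above; the 80/10/10 split (replace with `[MASK]`, replace with a random token, or leave unchanged) follows the recipe in the original BERT paper rather than this article. The function name `mask_tokens`, the tiny `vocab`, and whitespace tokenization are illustrative assumptions; real BERT operates on WordPiece tokens.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Pick about mask_prob of the positions and corrupt them for MLM training.

    Returns the corrupted sequence and a dict {position: original token}
    holding the prediction targets.
    """
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))

    corrupted = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]
        roll = rng.random()
        if roll < 0.8:
            corrupted[pos] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[pos] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return corrupted, labels


sentence = ("the quick brown fox jumps over the lazy dog "
            "while the cat sleeps in the warm afternoon sun").split()
vocab = ["apple", "runs", "blue", "slowly"]  # hypothetical mini-vocabulary

corrupted, labels = mask_tokens(sentence, vocab)
print(" ".join(corrupted))
print(labels)
```

The model is trained to recover the entries in `labels` from `corrupted`, so it only gets a training signal at the selected positions.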

5. Next Sentence Prediction (NSP): Understanding Sentence Relationships

In the NSP task, BERT is shown pairs of sentences and trained to predict whether the second sentence actually follows the first. Through this task, BERT learns to model the relationship between sentences, an ability that is crucial for tasks such as question answering and natural language inference.
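A minimal sketch of how such sentence pairs might be built is shown below. The 50/50 split between true and random continuations and the `[CLS]`/`[SEP]` markers follow the original BERT paper; the helper `make_nsp_examples` and the sample sentences are hypothetical, and a real pipeline would draw the random sentence from a different document in a large corpus.

```python
import random


def make_nsp_examples(sentences, seed=0):
    """Build (text, label) pairs for next sentence prediction.

    Needs at least three sentences so a random non-adjacent one can be drawn.
    """
    rng = random.Random(seed)
    examples = []
    for i in range(len(sentences) - 1):
        first = sentences[i]
        if rng.random() < 0.5:
            # Positive example: the sentence that really comes next.
            second, label = sentences[i + 1], "IsNext"
        else:
            # Negative example: any sentence other than the pair itself.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            second, label = rng.choice(candidates), "NotNext"
        examples.append((f"[CLS] {first} [SEP] {second} [SEP]", label))
    return examples


sentences = [
    "She opened the umbrella.",
    "It had started to rain.",
    "The bus was late again.",
    "He checked his watch twice.",
]
examples = make_nsp_examples(sentences)
for text, label in examples:
    print(label, "|", text)
```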

By combining bidirectional training, the Transformer architecture, and a two-step learning process, BERT raised the bar in NLP, achieving state-of-the-art performance on numerous language understanding tasks when it was released.

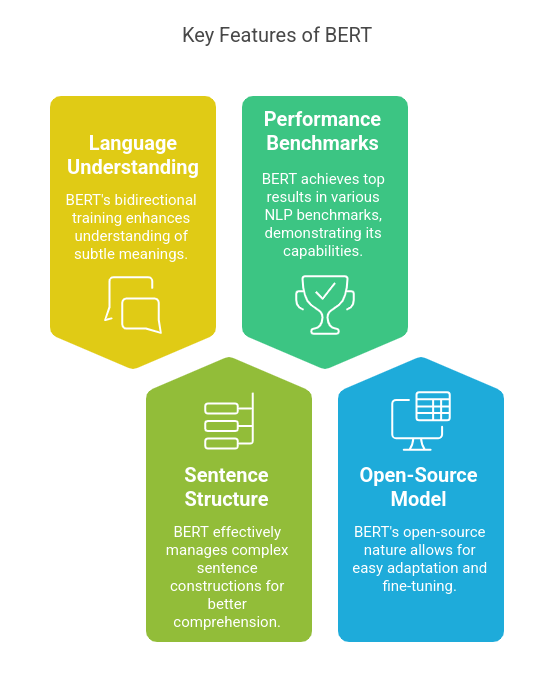

Key Features and Advantages of BERT

- Improved Understanding of Language Subtleties and Polysemy: BERT’s bidirectional training allows it to capture the subtle meanings of words, especially those with multiple interpretations, by considering the context from both preceding and following words.

- Effective Handling of Complex Sentence Structures: By analyzing the entire sentence context, BERT handles intricate linguistic constructions well, improving comprehension and processing accuracy.

- State-of-the-Art Performance on NLP Benchmarks: BERT achieved leading results on several NLP benchmarks, such as the General Language Understanding Evaluation (GLUE) benchmark and the Stanford Question Answering Dataset (SQuAD), demonstrating its strong language understanding capabilities.

- Open-Source Availability and Adaptability: As an open-source model, BERT is accessible to researchers and developers, making it easy to adapt and fine-tune for a wide range of NLP tasks and applications.

Applications of BERT in Real-World Scenarios

- Search Engines: BERT improves search engines by better understanding user queries, resulting in more accurate and relevant search results.

- Chatbots and Virtual Assistants: With a better understanding of context, BERT allows chatbots and virtual assistants to hold more natural and coherent conversations with users.

- Sentiment Analysis: BERT’s deep contextual understanding enables more accurate sentiment classification, helping to correctly interpret the emotional tone of text.

- Machine Translation and Text Summarization: BERT is used for context-sensitive translation and summarization, which improves the quality of translated text and summaries.

Through these features and applications, BERT continues to play an essential role in advancing the field of Natural Language Processing.

Future of BERT and NLP Developments

The field of Natural Language Processing (NLP) has advanced rapidly since the introduction of BERT.

These advances have led to more sophisticated models and applications, shaping the future of NLP.

1. Evolution into Advanced Models:

- RoBERTa: Building on BERT, RoBERTa (Robustly Optimized BERT Pretraining Approach) improves the training procedure by using larger datasets and longer training runs, resulting in better performance on various NLP tasks.

- ALBERT: A Lite BERT (ALBERT) reduces model size through cross-layer parameter sharing and factorized embeddings, preserving performance while improving efficiency.

- T5: The Text-To-Text Transfer Transformer (T5) recasts NLP tasks in a single text-to-text framework, allowing one model to handle diverse tasks such as translation, summarization, and question answering under one architecture.
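To see why the embedding factorization mentioned for ALBERT saves parameters, compare one large vocabulary-by-hidden embedding matrix with two smaller matrices that pass through a narrow embedding size. The sizes below (30,000-word vocabulary, hidden size 768, embedding size 128) are illustrative assumptions, and the function `embedding_params` is a hypothetical helper that only counts parameters.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters, with or without factorization."""
    if embedding_size is None:
        # One matrix: vocab_size x hidden_size.
        return vocab_size * hidden_size
    # Two matrices: vocab_size x embedding_size, then embedding_size x hidden_size.
    return vocab_size * embedding_size + embedding_size * hidden_size


full = embedding_params(30_000, 768)
factorized = embedding_params(30_000, 768, embedding_size=128)

print(f"unfactorized: {full:,}")        # 23,040,000
print(f"factorized:   {factorized:,}")  # 3,938,304
```

Under these assumed sizes, the factorized version needs roughly one sixth of the embedding parameters.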

2. Integration with Multimodal AI Systems:

NLP systems are increasingly being integrated with modalities other than text, including images and video.

This multimodal approach enables models to understand and produce content that involves both language and imagery, which further improves applications such as image captioning and video analysis.

3. Optimizations for Efficiency and Deployment in Low-Resource Environments:

Work is under way to optimize NLP models for deployment in environments with limited computational resources.

Techniques like knowledge distillation, quantization, and pruning are used to reduce model size and inference time, making sophisticated NLP capabilities more widely available across devices and applications.
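As one example of these techniques, the sketch below shows simple symmetric 8-bit quantization of a handful of weights: each floating-point value is mapped to an integer between -127 and 127 using a single shared scale. This is a bare-bones illustration under assumed values, not how any particular library quantizes BERT; production schemes typically quantize per tensor or per channel and handle activations as well.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.61]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("int8 values:", q)
print("max error:  ", max(abs(a - b) for a, b in zip(weights, restored)))
```

Storing one byte per weight instead of four cuts memory use to about a quarter, at the cost of a rounding error of at most half the scale per weight.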

These developments point to a promising future for NLP, with models becoming more capable, versatile, and efficient, and therefore applicable to a wider range of real-world uses.

Conclusion

BERT has revolutionized NLP, paving the way for advanced models like RoBERTa, ALBERT, and T5 while driving innovation in multimodal AI and efficiency optimization.

As NLP continues to evolve, mastering these technologies becomes essential for professionals aiming to excel in AI-driven fields.
