A information Graph is a information base that makes use of graph information construction to retailer and function on the info. It offers well-organized human information and likewise powers functions similar to serps (Google and Bing), question-answering, and suggestion techniques.

A information graph (semantic community) represents the knowledge (storing not simply information but additionally its that means and context). This includes defining entities, summary ideas—and their interrelations in a machine- and human-understandable format.

This permits for deducing new, implicit information from current information, surpassing conventional databases. By leveraging the graph’s interconnected semantics to uncover hidden insights, the information base can reply complicated queries that transcend explicitly saved info.

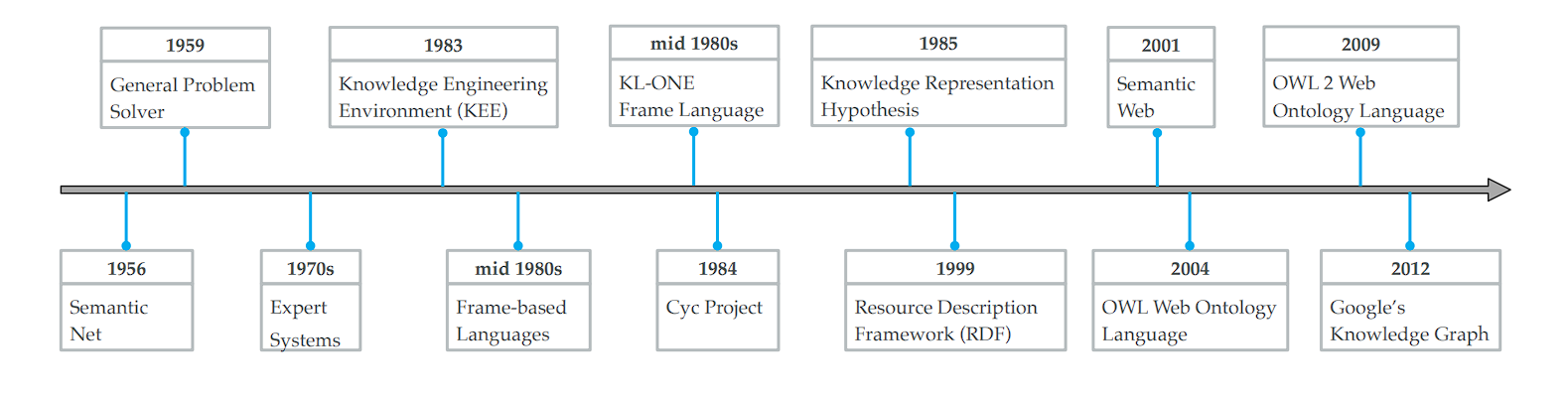

Historical past of Data Graphs

Through the years, these techniques have considerably developed in complexity and capabilities. Here’s a fast recap of all of the advances made in information bases:

- Early Foundations:

- In 1956, Richens laid the groundwork for graphical information illustration by proposing the semantic internet, marking the inception of visualizing information connections.

- The period of Data-Based mostly Techniques:

- MYCIN (developed within the early Seventies), is an skilled system for medical analysis that depends on a rule-based information base comprising round 600 guidelines.

- Evolution of Data Illustration:

- The Cyc mission (1984): The mission goals to assemble the essential ideas and guidelines about how the world works.

- Semantic Internet Requirements (2001):

- The introduction of requirements just like the Useful resource Description Framework (RDF) and the Internet Ontology Language (OWL) marked vital developments within the Semantic Internet, establishing key protocols for information illustration and alternate.

- The Emergence of Open Data Bases:

- Launch of a number of open information bases, together with WordNet, DBpedia, YAGO, and Freebase, broadening entry to structured information.

- Trendy Structured Data:

- The time period “information graph” got here into recognition in 2012 following its adoption by Google’s search engine, highlighting a information fusion framework generally known as the Data Vault (Google Data Graph) for setting up large-scale information graphs.

- Following Google’s instance, Fb, LinkedIn, Airbnb, Microsoft, Amazon, Uber, and eBay have explored information graph applied sciences, additional popularizing the time period.

Constructing Block of Knowlege Graphs

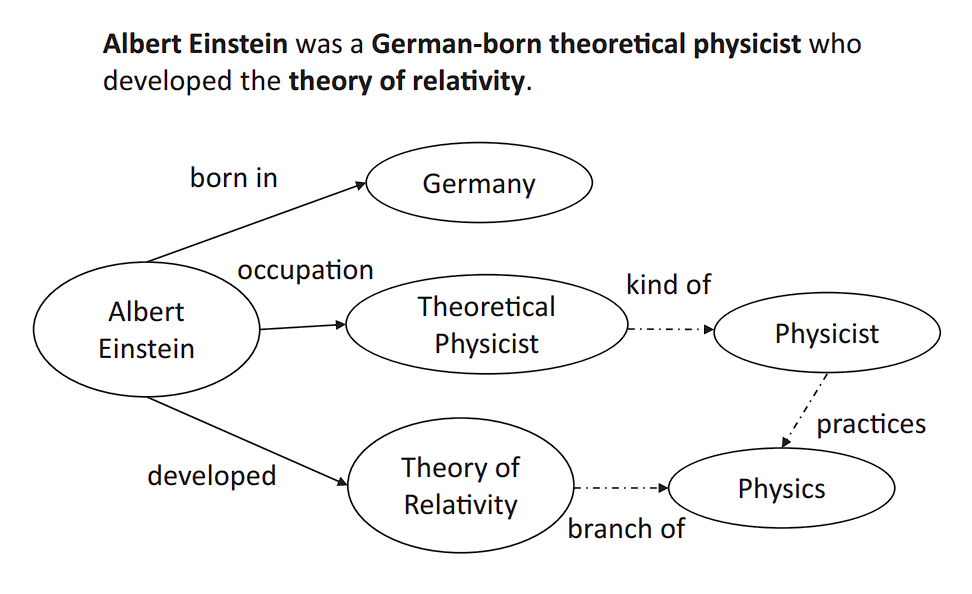

The core parts of a information graph are entities (nodes) and relationships (edges), which collectively kind the foundational construction of those graphs:

Entities (Nodes)

Nodes symbolize the real-world entities, ideas, or situations that the graph is modeling. Entities in a information graph typically symbolize issues in the actual world or summary ideas that one can distinctly establish.

- Individuals: People, similar to “Marie Curie” or “Neil Armstrong”

- Locations: Places like “Eiffel Tower” or “Canada”

Relationships (Edges)

Edges are the connections between entities throughout the information graph. They outline how entities are associated to one another and describe the character of their connection. Listed below are a couple of examples of edges.

- Works at: Connecting an individual to a company, indicating employment

- Situated in: Linking a spot or entity to its geographical location or containment inside one other place

- Married to: Indicating a conjugal relationship between two individuals.

When two nodes are linked utilizing an edge, this construction known as a triple.

Development of Data Graphs

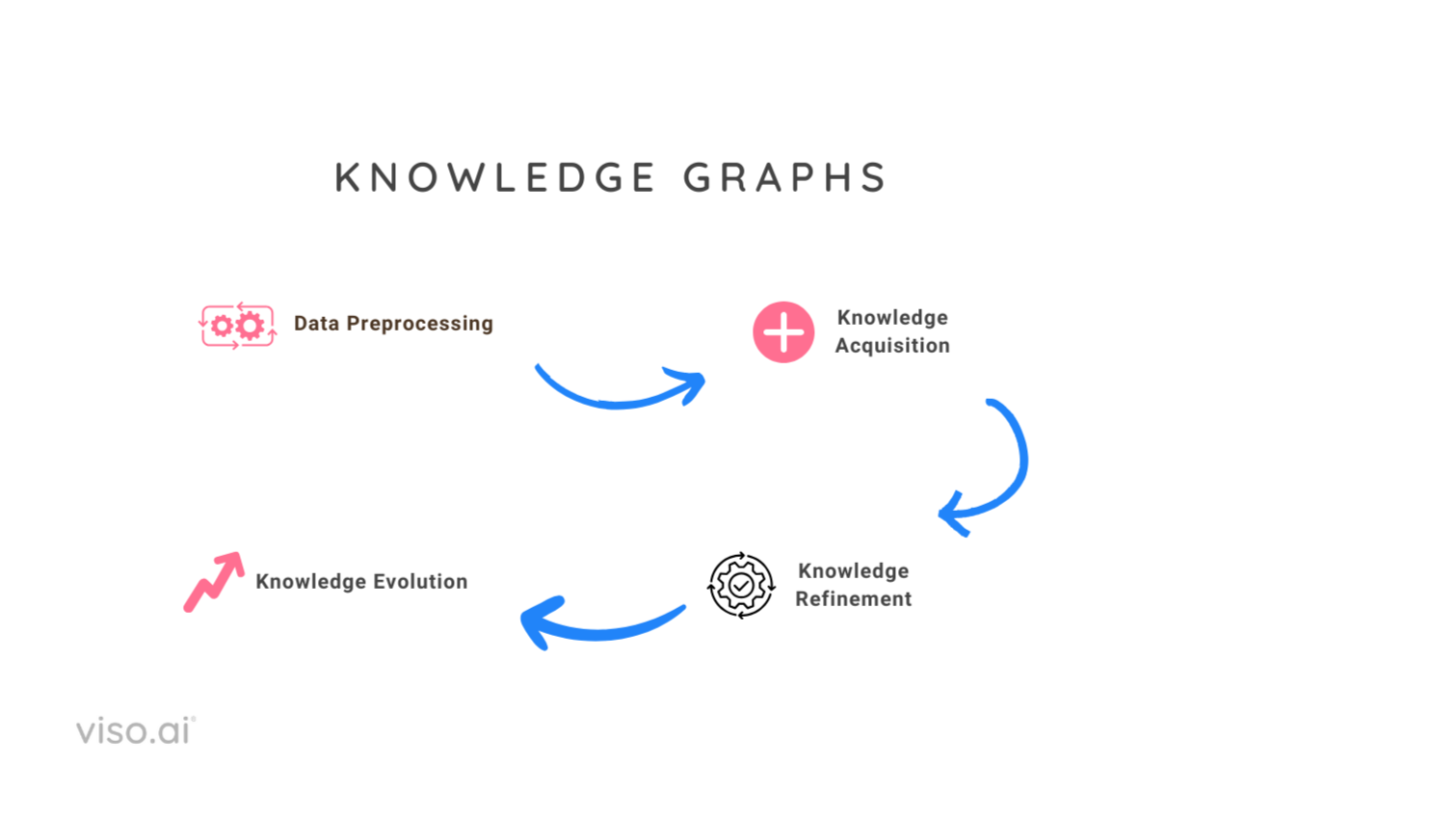

KGs are shaped by a sequence of steps. Listed below are these:

- Information Preprocessing: Step one includes gathering the info (often scrapped from the web). Then pre-processing the semi-structured information to remodel it into noise-free paperwork prepared for additional evaluation and information extraction.

- Data Acquisition: Data acquisition goals to assemble information graphs from unstructured textual content and different structured or semi-structured sources, full an current information graph, and uncover and acknowledge entities and relations. It consists of the next subtasks:

- Data graph completion: This goals at routinely predicting lacking hyperlinks for large-scale information graphs.

- Entity Discovery: It has additional subtypes:

- Entity Recognition

- Entity Typing

- Entity Disambiguation or Entity linking (EL)

- Relation extraction

- Data Refinement: The following section, after constructing the preliminary graph, focuses on refining this uncooked information construction, generally known as information refinement. This step addresses points in uncooked information graphs constructed from unstructured or semi-structured information. These points embody sparsity (lacking info) and incompleteness (inaccurate or corrupted info). The important thing duties concerned in information graph refinement are:

- Data Graph Completion: Filling in lacking triples and deriving new triples based mostly on current ones.

- Data Graph Fusion: Integrating info from a number of information graphs.

- Data Evolution: The ultimate step addresses the dynamic nature of information. It includes updating the information graph to replicate new findings, resolving contradictions with newly acquired info, and increasing the graph.

Information Preprocessing

Information preprocessing is an important step in creating information graphs from textual content information. Correct information preprocessing enhances the accuracy and effectivity of machine studying fashions utilized in subsequent steps. This includes:

- Noise Elimination: This consists of stripping out irrelevant content material, similar to HTML tags, ads, or boilerplate textual content, to concentrate on the significant content material.

- Normalization: Standardizing textual content by changing it to a uniform case, eradicating accents, and resolving abbreviations can cut back the complexity of ML and AI fashions.

- Tokenization and Half-of-Speech Tagging: Breaking down textual content into phrases or phrases and figuring out their roles helps in understanding the construction of sentences, which is essential for entity and relation extraction.

Information Sorts

Based mostly on the group of knowledge, it may be broadly labeled into structured, semi-structured, and unstructured information. Deep Studying algorithms are primarily used to course of and perceive unstructured and semi-structured information.

- Structured information is very organized and formatted in a manner that easy, simple search algorithms or different search operations can simply search. It follows a inflexible schema, organizing the info into tables with rows and columns. Every column specifies a datatype, and every row corresponds to a file. Relational databases (RDBMS) similar to MySQL and PostgreSQL handle this kind of information. Preprocessing typically includes cleansing, normalization, and have engineering.

- Semi-Structured Information: Semi-structured information doesn’t reside in relational databases. It doesn’t match neatly into tables, rows, and columns. Nonetheless, it accommodates tags or different markers—examples: XML information, JSON paperwork, e-mail messages, and NoSQL databases like MongoDB that retailer information in a format referred to as BSON (binary JSON). Instruments similar to Lovely Soup are used to extract related info.

- Unstructured information refers to info that lacks a predefined information mannequin or is just not organized in a predefined method. It represents the most typical type of information and consists of examples similar to pictures, movies, audio, and PDF information. Unstructured information preprocessing is extra complicated and might contain textual content cleansing, and have extraction. NLP libraries (similar to SpaCy) and varied machine studying algorithms are used to course of this kind of information.

Data Acquisition in KG

Data acquisition is step one within the development of information graphs, involving the extraction of entities, resolving their coreferences, and figuring out the relationships between them.

Entity Discovery

Entity discovery lays the muse for setting up information graphs by figuring out and categorizing entities inside information, which includes:

- Named Entity Recognition (NER): NER is the method of figuring out and classifying key parts in textual content into predefined classes such because the names of individuals, organizations, areas, expressions of occasions, portions, financial values, percentages, and so on.

- Entity Typing (ET): Categorizes entities into extra fine-grained varieties (e.g., scientists, artists). Data loss happens if ET duties are usually not carried out, e.g., Donald Trump is a politician and a businessman.

- Entity Linking (EL): Connects entity mentions to corresponding objects in a information graph.

NER in Unstructured Information

Named Entity Recognition (NER) performs a vital position in info extraction, aiming to establish and classify named entities (individuals, areas, organizations, and so on.) inside textual content information.

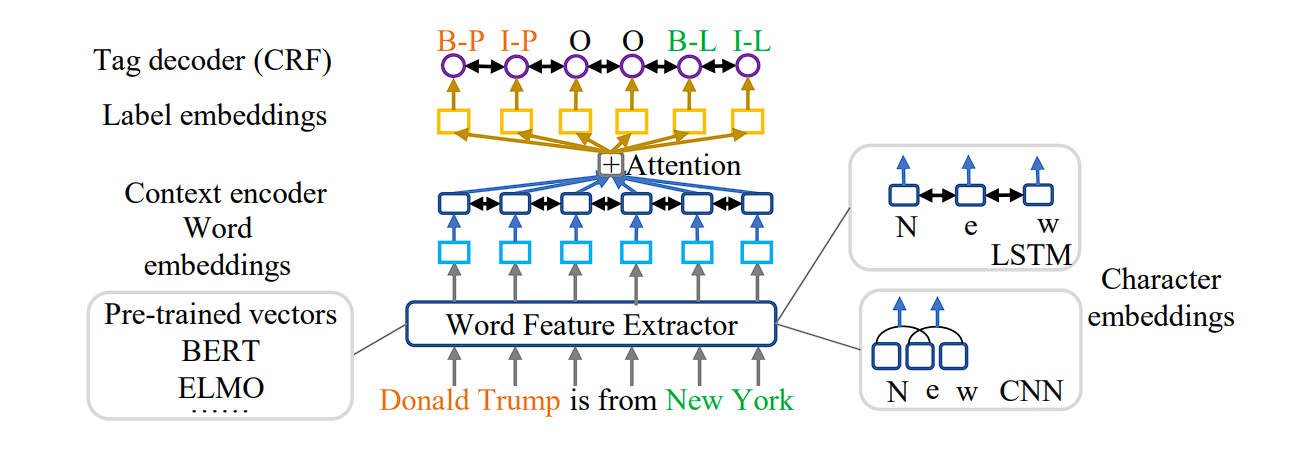

Deep studying fashions are revolutionizing NER, particularly for unstructured information. These fashions deal with NER as a sequence-to-sequence (seq2seq) downside, remodeling phrase sequences into labeled sequences (phrase + entity kind).

- Context Encoders: Deep studying architectures make use of varied encoders (CNNs, LSTMs, and so on.) to seize contextual info from the enter sentence. These encoders generate contextual embeddings that symbolize phrase that means in relation to surrounding phrases.

- Consideration Mechanisms: Consideration mechanisms additional improve deep studying fashions by specializing in particular components of the enter sequence which might be most related to predicting the entity tag for a specific phrase.

- Pre-trained Language Fashions: Using pre-trained language fashions like BERT or ELMo injects wealthy background information into the NER course of. These fashions present pre-trained phrase embeddings that seize semantic relationships between phrases, bettering NER efficiency.

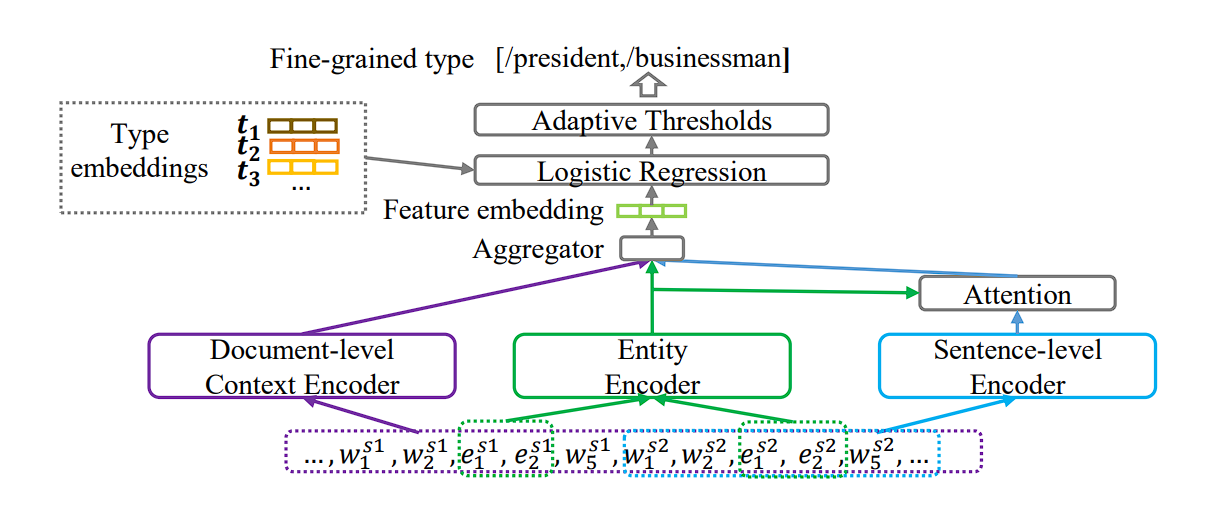

Entity Typing

Entity Recognition (NER) identifies entities inside textual content information, in distinction, Entity Typing (ET) assigns a extra particular, fine-grained kind to those entities, like classifying “Donald Trump” as each a “politician” and a “businessman.”

Priceless particulars about entities are misplaced with out ET. For example, merely recognizing “Donald Trump” doesn’t reveal his varied roles. High quality-grained typing closely depends on context. For instance, “Manchester United” may discuss with the soccer workforce or town itself relying on the encompassing textual content.

Much like Named Entity Recognition (NER), consideration mechanisms can concentrate on essentially the most related components of a sentence in Entity Typing (ET) to foretell the entity kind. This helps the mannequin establish the precise phrases or phrases that contribute most to understanding the entity’s position.

Entity Linking

Entity Linking (EL), often known as entity disambiguation, performs a significant position in enriching info extraction.

It connects textual mentions of entities in information to their corresponding entries inside a Data Graph (KG). For instance, the sentence “Tesla is constructing a brand new manufacturing unit.” EL helps disambiguate “Tesla” – is it the automotive producer, the scientist, or one thing else totally?

Relation Extraction

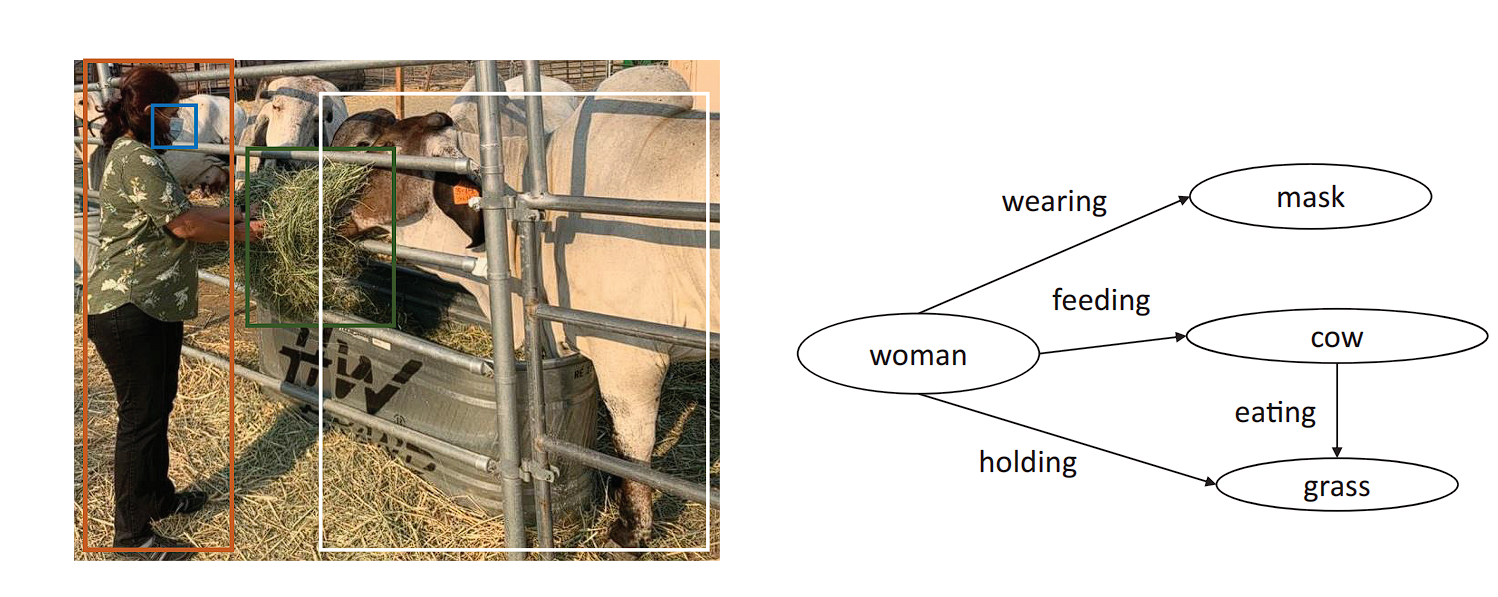

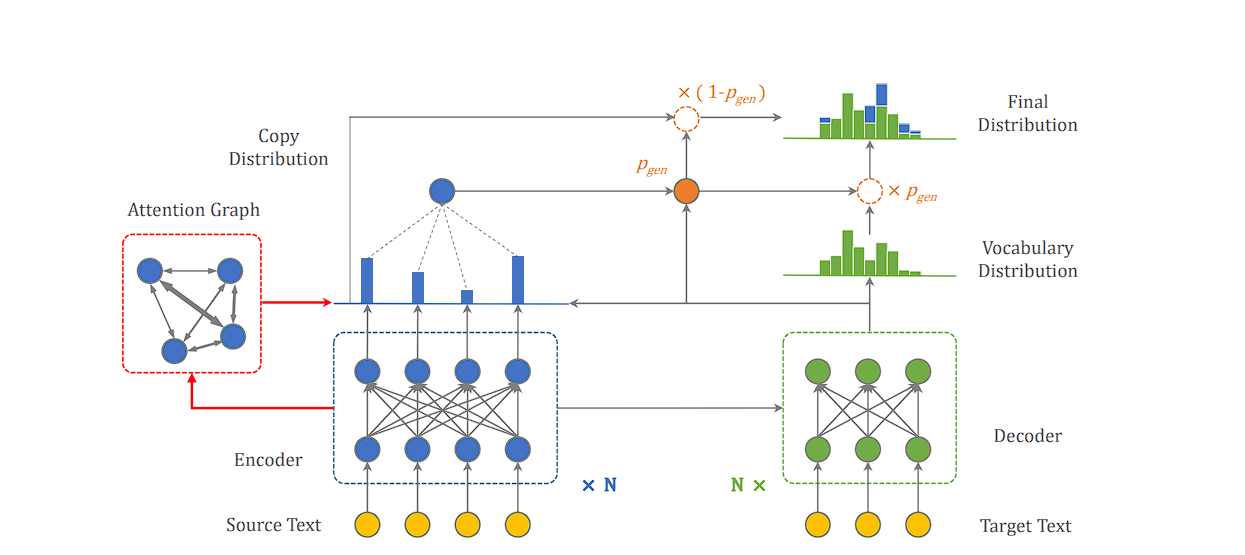

Relation extraction is the duty of detecting and classifying semantic relationships between entities inside a textual content. For instance, within the sentence “Barack Obama was born in Hawaii,” relation extraction would establish “Barack Obama” and “Hawaii” as entities and classify their relationship as “born in.”

Relation Extraction (RE) performs a vital position in populating Data Graphs (KGs) by figuring out relationships between entities talked about in textual content information.

Deep Studying Architectures for Relation Extraction Duties:

- CopyAttention: This mannequin incorporates a novel mechanism. It not solely generates new phrases for the relation and object entity however also can “copy” phrases straight from the enter sentence. That is significantly useful for relation phrases that use current vocabulary from the textual content itself.

Instruments and Applied sciences for constructing and managing information graphs

Constructing and managing information graphs includes a mix of software program instruments, libraries, and frameworks. Every utility is suited to completely different facets of the method we mentioned above. Listed below are to call a couple of.

Graph Databases(Neo4j): A graph database that gives a robust and versatile platform for constructing information graphs, with help for Cypher question language.

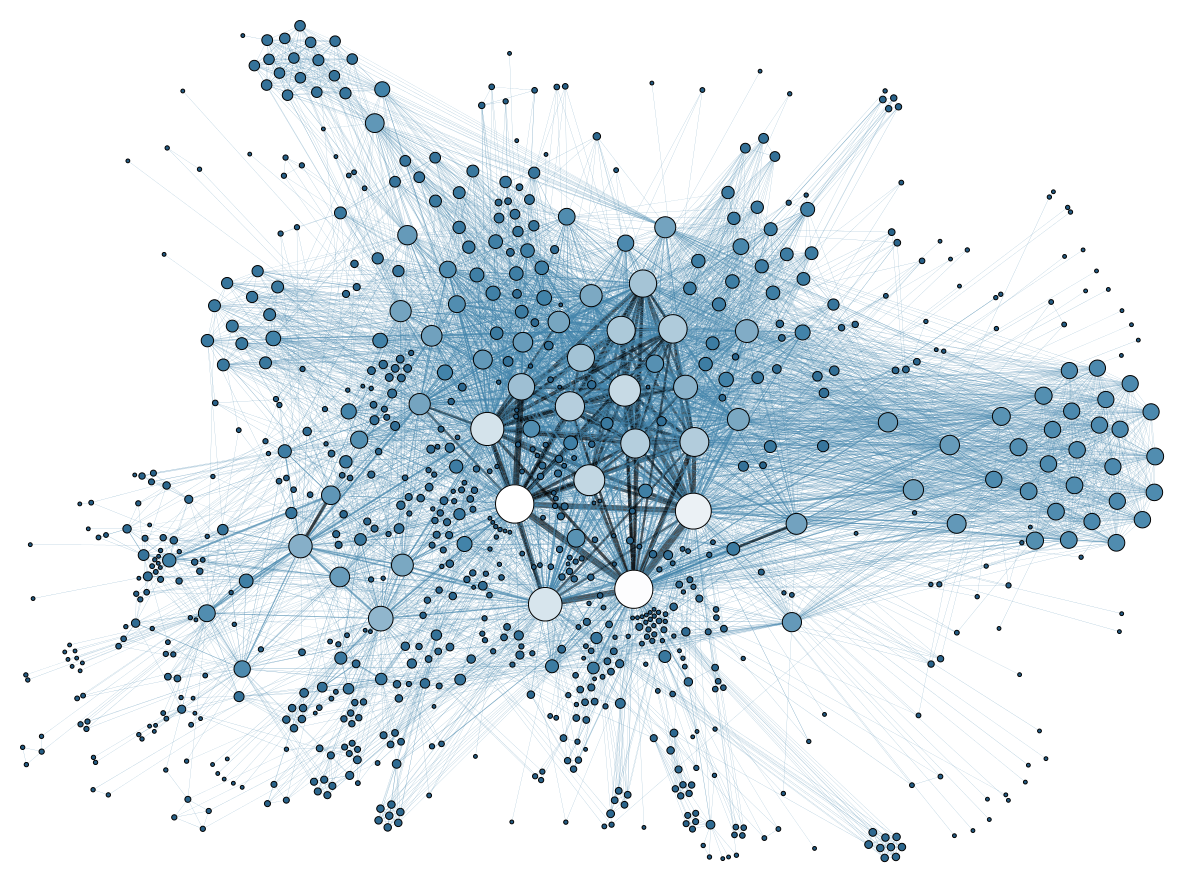

Graph Visualization and Evaluation (Gephi): Gephi is an open-source community evaluation and visualization software program package deal written in Java, designed to permit customers to intuitively discover and analyze every kind of networks and complicated techniques. Nice for researchers, information analysts, and anybody needing to visualise and discover the construction of enormous networks and information graphs.

Information Extraction and Processing(Lovely Soup & Scrapy): Python libraries for internet scraping information from internet pages.

Named Entity Recognition and Relationship Extraction:

- SpaCy: SpaCy is an open-source pure language processing (NLP) library in Python, providing highly effective capabilities for named entity recognition (NER), dependency parsing, and extra.

- Stanford NLP: The Stanford NLP Group’s software program offers a set of pure language evaluation instruments that may take uncooked textual content enter and provides the bottom types of phrases, their components of speech, and parse timber, amongst different issues.

- TensorFlow and PyTorch: Machine studying frameworks that can be utilized for constructing fashions to boost information graph illustration.