Depth evaluation is an space of laptop imaginative and prescient that includes estimating the space between imaged objects and the digicam. It permits for understanding a scene’s three-dimensional construction from two-dimensional information. Utilizing synthetic intelligence (AI), depth evaluation permits machines to understand the world extra like people. This empowers them to carry out duties like object detection, scene reconstruction, and navigating 3D house.

About Us: Viso Suite is the premier laptop imaginative and prescient infrastructure for enterprises. By integrating each step of the machine studying pipeline, Viso Suite locations full management of laptop imaginative and prescient functions within the palms of ML groups. Ebook a demo to be taught extra.

Placing Depth Sensing Into Context

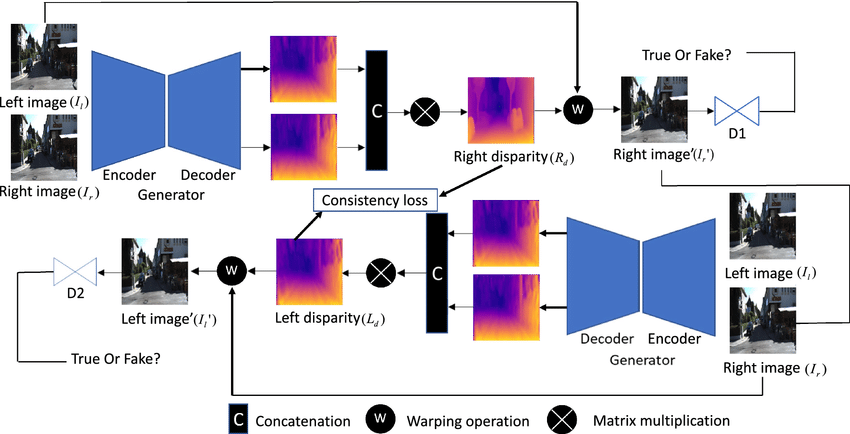

Depth sensing applied sciences successfully started with the strategy of stereo imaginative and prescient. These techniques inferred distances by analyzing the variations between photographs taken from barely completely different viewpoints. It really works in a manner that mimics human binocular imaginative and prescient.

The evolution continued with the structured mild techniques. This method includes projecting a recognized sample onto a scene and analyzing the distortions to calculate depth. Early fashions just like the Microsoft Kinect are examples of this in motion.

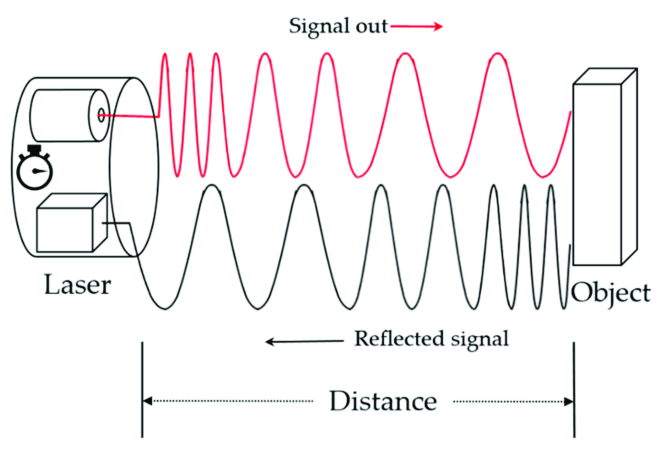

The introduction of Time-of-Flight (ToF) sensors represented one other breakthrough second. These sensors measure the time it takes for emitted mild to return, offering extremely exact depth data.

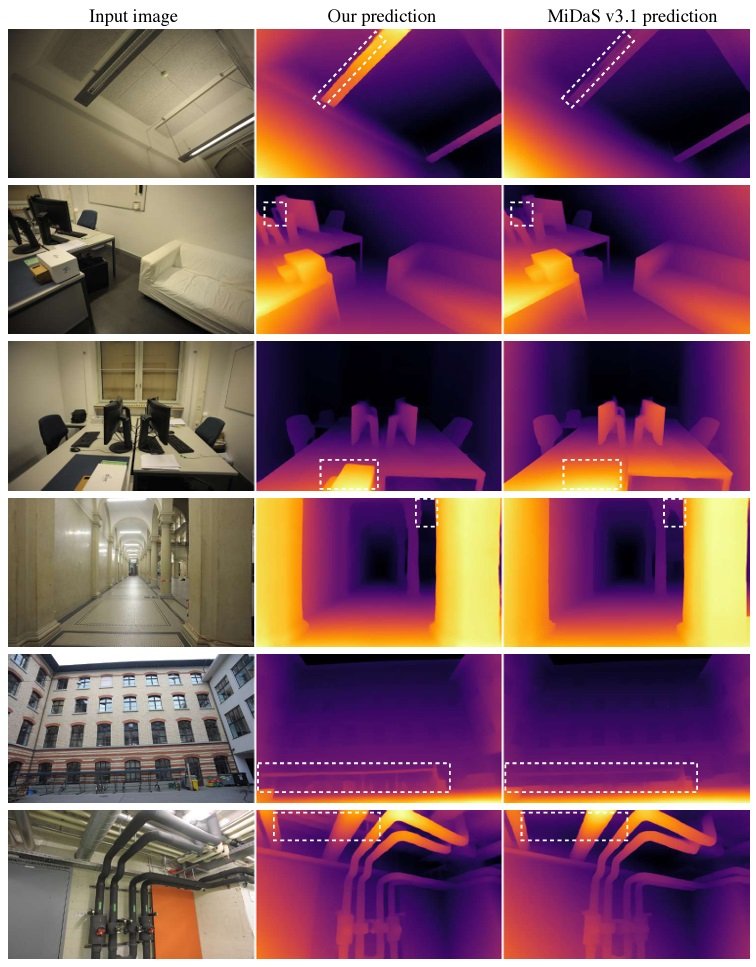

In recent times, AI has revolutionized depth evaluation by enabling monocular depth estimation—inferring depth from a single picture. This was a big leap ahead, because it eliminated the necessity for specialised {hardware}. Different fashions have since set new requirements for the accuracy and effectivity of in-depth prediction. This consists of fashions resembling MiDaS (Multi-scale Deep Networks for Monocular Depth Estimation) and DPT (Dense Prediction Transformers).

The introduction of large-scale datasets and advances in neural community architectures have additional propelled this subject. TikTok’s Depth Something mannequin is basically a end result of all of those developments.

Mastering depth evaluation methods opens up new potentialities in utility improvement, from augmented actuality to navigation techniques. It might probably push options ahead that fulfill the rising demand for clever, interactive techniques.

Intro to TikTok’s Depth Something

TikTok’s Depth Something is a groundbreaking strategy to monocular depth estimation. It successfully harnesses a mix of 1.5 million labeled photographs and over 62 million unlabeled photographs. It is a important differentiation from conventional methods, which primarily relied on smaller, labeled datasets. Leveraging the ability of large-scale unlabeled information provides a extra sturdy answer for understanding complicated visible scenes.

Depth Something has rapidly turn out to be an integral part of TikTok’s know-how ecosystem. It serves because the default depth processor for generative content material platforms resembling InstantID and InvokeAI. That is because of the mannequin’s versatility and the improved person expertise it provides via superior depth-sensing functionalities. It additionally has functions in video depth visualization, which opens new avenues for content material creation on TikTok’s platform.

Key Milestones in Depth Something’s Improvement

- 2024-02-27: CVPR 2024 formally endorses Depth Something.

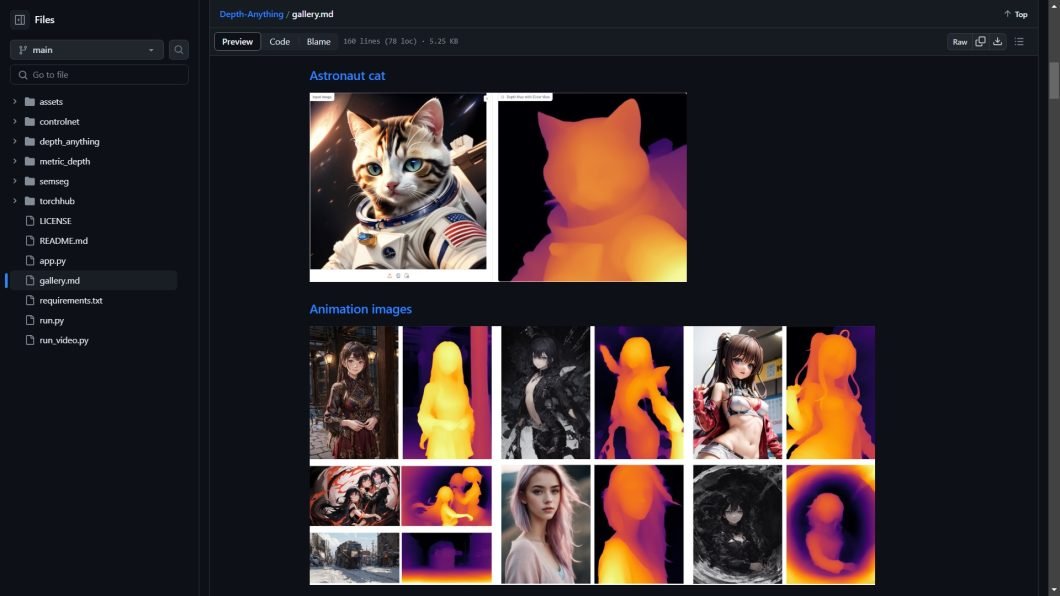

- 2024-02-05: The discharge of Depth Anything Gallery showcasing the mannequin’s capabilities.

- 2024-02-02: Implementation because the default depth processor for InstantID and InvokeAI, enhancing platform functionalities.

- 2024-01-25: Introduction of assist for video depth visualization, together with an accessible online demo.

- 2024-01-23: Integration of a brand new ControlNet based mostly on Depth Something into ControlNet WebUI and ComfyUI’s ControlNet.

- 2024-01-23: Assist for ONNX and TensorRT variations.

- 2024-01-22: Launch of the Depth Anything paper, undertaking web page, code, fashions, and demonstrations throughout platforms like HuggingFace and OpenXLab.

Depth Something is a formidable mannequin, performing exceptionally properly in comparison with current depth sensing methods. Listed here are a few of its key capabilities right now:

- Improved zero-shot relative depth estimation over fashions like MiDaS v3.1 (BEiTL-512)

- Enhanced zero-shot metric depth estimation in comparison with fashions like ZoeDepth

- Optimum in-domain fine-tuning and analysis on NYUv2 and KITTI

- Strong relative and metric depth estimation for any given picture.

- Improved depth-conditioned ControlNet providing exact synthesis.

- Potential for downstream functions in high-level scene understanding duties.

Depth Something isn’t merely a core ingredient of TikTok’s AI suite but in addition setting new requirements for depth estimation.

Depth Evaluation: A Technical Deep Dive

The important thing to Depth Something’s structure is ingeniously integrating each labeled and large-scale unlabeled information. The muse mannequin makes use of a transformer-based framework, bestowing it with the strengths of Imaginative and prescient Transformers (ViTs). This empowers it to seize complicated spatial hierarchies and contextual data important for correct depth notion.

It additionally uniquely leverages the idea of inheriting wealthy semantic priors from pre-trained encoders. By integrating semantic priors, Depth Something advantages from the huge, pre-existing data encoded in these fashions. This strategy permits the mannequin to inherit a wealthy understanding of visible scenes.

Depth Something additionally contains a distinctive hybrid coaching strategy. It makes use of an information engine to automate the annotation course of for the huge corpus of unlabeled photographs it harnesses. A smaller, pre-trained mannequin generates pseudo-depth labels for the photographs, searching for further visible data.

Then, it combines pseudo-labeled information with 1.5 million high-quality labeled photographs in a dual-path coaching mechanism. This setup incorporates each supervised studying for labeled information and semi-supervised studying for unlabeled information. This considerably enhances the mannequin’s generalization capabilities throughout varied scenes.

The coaching course of includes a novel optimization technique that adjusts the educational focus between labeled and pseudo-labeled information. For labeled photographs, it makes use of a regular regression loss perform, resembling Imply Squared Error (MSE). This minimizes the distinction between predicted and precise depth values.

For unlabeled photographs, the mannequin applies consistency loss. This course of encourages the mannequin to supply comparable depth predictions for barely perturbed variations of the identical picture. It amplifies the mannequin’s potential to interpret various visible situations whereas precisely deducing depth from delicate visible cues.

Key Applied sciences and Methodologies

- Relative and Metric Depth Estimation: Depth Something leverages an adaptive binning technique, It dynamically adjusts depth prediction ranges to optimize for each shut and distant objects inside the similar scene. This strategy is fine-tuned with benchmark metric depth data from NYUv2 and KITTI.

- Higher Depth-Conditioned ControlNet: By re-training ControlNet with Depth Something’s predictions, the mannequin attains a better precision in depth-conditioned synthesis. That is what permits Depth Something to generate practical and contextually correct augmented actuality (AR) content material and digital environments.

- Excessive-Degree Scene Understanding: Depth Something’s encoder is fine-tuned for semantic segmentation duties. Largely, because of utilizing wealthy characteristic representations realized throughout depth estimation. It performs very properly on scenes from Cityscapes and ADE20K proves its high-level capabilities.

A Efficiency Evaluation of Depth Something

The Depth Something paper showcases this mannequin’s marked developments over the MiDaS v3.1 BEiTL-512 mannequin. For this, it makes use of metrics like AbsRel (Absolute Relative Error) and δ1 (pixels with an error below 25%). A decrease AbsRel and a better δ1 rating point out improved depth estimation accuracy.

Comparative Evaluation

In keeping with these metrics, Depth Something outperforms MiDaS v3.1 throughout varied datasets:

| Dataset | Mannequin | AbsRel ↓ | δ1 ↑ |

|---|---|---|---|

| KITTI | MiDaS v3.1 | 0.127 | 0.850 |

| Depth Something (Small) | 0.080 | 0.936 | |

| Depth Something (Base) | 0.080 | 0.939 | |

| Depth Something (Massive) | 0.076 | 0.947 | |

| NYUv2 | MiDaS v3.1 | 0.048 | 0.980 |

| Depth Something (Small) | 0.053 | 0.972 | |

| Depth Something (Base) | 0.046 | 0.979 | |

| Depth Something (Massive) | 0.043 | 0.981 | |

| Sintel | MiDaS v3.1 | 0.587 | 0.699 |

| Depth Something (Small) | 0.464 | 0.739 | |

| Depth Something (Base) | 0.432 | 0.756 | |

| Depth Something (Massive) | 0.458 | 0.760 |

Observe: Decrease AbsRel and better δ1 values point out higher efficiency. The desk demonstrates Depth Something’s superiority throughout various environments.

Mannequin Variants and Effectivity

Depth Something can even cater to varied computational and use case necessities. Due to this fact, it provides three mannequin variants: Small, Base, and Massive. Beneath is a desk detailing their inference occasions throughout completely different {hardware} configurations:

| Mannequin Variant | Parameters | V100 (ms) | A100 (ms) | RTX 4090 (TensorRT, ms) |

|---|---|---|---|---|

| Small | 24.8M | 12 | 8 | 3 |

| Base | 97.5M | 13 | 9 | 6 |

| Massive | 335.3M | 20 | 13 | 12 |

Observe: This desk presents inference data for a single ahead go, excluding pre- and post-processing phases. The RTX 4090 outcomes embrace these phases when utilizing TensorRT.

These outcomes show Depth Something is a extremely correct and versatile mannequin that may adapt to varied situations. It options state-of-the-art efficiency throughout a number of datasets and computational effectivity throughout completely different {hardware} configurations.

Challenges and Limitations

One notable constraint of the mannequin is its reliance on the standard and variety of the coaching information. The mannequin demonstrates exceptional efficiency throughout varied datasets. Nonetheless, its accuracy in environments vastly completely different from these in its coaching set might be inconsistent.

Whereas its foreign money generalization is excellent, it may be improved to higher course of photographs below any circumstances.

The steadiness between utilizing unlabeled information for bettering the mannequin and the reliability of pseudo labels stays delicate. Future work may discover extra refined strategies for pseudo-label verification and mannequin coaching effectivity.

One other concern is that of knowledge privateness, particularly given the size of unlabeled information. Making certain that this huge dataset doesn’t infringe on particular person privateness rights requires meticulous information dealing with and anonymization protocols.

A remaining hurdle was the computational necessities to course of over 62 million photographs. The mannequin’s complicated structure and the sheer quantity of knowledge demanded substantial computational assets. This makes it a difficult optimization goal to coach and refine it with out entry to high-performance computing services.

Purposes and Implications

On social platforms like TikTok and YouTube, depth something permits for unleashing the ability of depth estimation or content material creation. Creators now have entry to superior options resembling 3D picture results and interactive AR filters. Past social media, Depth Something has the huge potential to affect many sectors.

For instruments like InvokeAI, this implies creating extra lifelike and interactive AI-generated artwork. Depth data permits for nuanced manipulation of parts based mostly on their perceived distance from the viewer. InstantID makes use of Depth Something to enhance id verification processes. It improves safety by enabling the system to higher discern between an actual individual and a photograph or video.

In AR experiences, its exact depth estimation permits for the straightforward integration of digital objects into real-world scenes. This might tremendously simplify complicated scene building duties in gaming, schooling, and retail. For autonomous automobiles, the power to precisely understand and perceive the 3D construction of the surroundings from monocular photographs can contribute to safer navigation.

In healthcare, comparable applied sciences may remodel telemedicine by enabling extra correct distant assessments of bodily circumstances.