Over the previous few years, tuning-based diffusion fashions have demonstrated exceptional progress throughout a big selection of picture personalization and customization duties. Nevertheless, regardless of their potential, present tuning-based diffusion fashions proceed to face a number of advanced challenges in producing and producing style-consistent pictures, and there could be three causes behind the identical. First, the idea of favor nonetheless stays broadly undefined and undetermined, and includes a mixture of components together with environment, construction, design, materials, shade, and rather more. Second inversion-based strategies are susceptible to type degradation, leading to frequent lack of fine-grained particulars. Lastly, adapter-based approaches require frequent weight tuning for every reference picture to keep up a steadiness between textual content controllability, and magnificence depth.

Moreover, the first purpose of a majority of favor switch approaches or type picture era is to make use of the reference picture, and apply its particular type from a given subset or reference picture to a goal content material picture. Nevertheless, it’s the extensive variety of attributes of favor that makes the job tough for researchers to gather stylized datasets, representing type accurately, and evaluating the success of the switch. Beforehand, fashions and frameworks that cope with fine-tuning based mostly diffusion course of, fine-tune the dataset of pictures that share a standard type, a course of that’s each time-consuming, and with restricted generalizability in real-world duties since it’s tough to collect a subset of pictures that share the identical or almost an identical type.

On this article, we’ll speak about InstantStyle, a framework designed with the purpose of tackling the problems confronted by the present tuning-based diffusion fashions for picture era and customization. We’ll speak in regards to the two key methods applied by the InstantStyle framework:

- A easy but efficient method to decouple type and content material from reference pictures throughout the function area, predicted on the belief that options throughout the identical function area may be both added to or subtracted from each other.

- Stopping type leaks by injecting the reference picture options solely into the style-specific blocks, and intentionally avoiding the necessity to use cumbersome weights for fine-tuning, usually characterizing extra parameter-heavy designs.

This text goals to cowl the InstantStyle framework in depth, and we discover the mechanism, the methodology, the structure of the framework together with its comparability with state-of-the-art frameworks. We may also speak about how the InstantStyle framework demonstrates exceptional visible stylization outcomes, and strikes an optimum steadiness between the controllability of textual components and the depth of favor. So let’s get began.

Diffusion based mostly textual content to picture generative AI frameworks have garnered noticeable and noteworthy success throughout a big selection of customization and personalization duties, significantly in constant picture era duties together with object customization, picture preservation, and magnificence switch. Nevertheless, regardless of the latest success and increase in efficiency, type switch stays a difficult process for researchers owing to the undetermined and undefined nature of favor, usually together with a wide range of components together with environment, construction, design, materials, shade, and rather more. With that being stated, the first purpose of stylized picture era or type switch is to use the particular type from a given reference picture or a reference subset of pictures to the goal content material picture. Nevertheless, the extensive variety of attributes of favor makes the job tough for researchers to gather stylized datasets, representing type accurately, and evaluating the success of the switch. Beforehand, fashions and frameworks that cope with fine-tuning based mostly diffusion course of, fine-tune the dataset of pictures that share a standard type, a course of that’s each time-consuming, and with restricted generalizability in real-world duties since it’s tough to collect a subset of pictures that share the identical or almost an identical type.

With the challenges encountered by the present method, researchers have taken an curiosity in creating fine-tuning approaches for type switch or stylized picture era, and these frameworks may be cut up into two completely different teams:

- Adapter-free Approaches: Adapter-free approaches and frameworks leverage the facility of self-attention throughout the diffusion course of, and by implementing a shared consideration operation, these fashions are able to extracting important options together with keys and values from a given reference type pictures straight.

- Adapter-based Approaches: Adapter-based approaches and frameworks alternatively incorporate a light-weight mannequin designed to extract detailed picture representations from the reference type pictures. The framework then integrates these representations into the diffusion course of skillfully utilizing cross-attention mechanisms. The first purpose of the mixing course of is to information the era course of, and to make sure that the ensuing picture is aligned with the specified stylistic nuances of the reference picture.

Nevertheless, regardless of the guarantees, tuning-free strategies usually encounter a couple of challenges. First, the adapter-free method requires an change of key and values throughout the self-attention layers, and pre-catches the important thing and worth matrices derived from the reference type pictures. When applied on pure pictures, the adapter-free method calls for the inversion of picture again to the latent noise utilizing methods like DDIM or Denoising Diffusion Implicit Fashions inversion. Nevertheless, utilizing DDIM or different inversion approaches would possibly outcome within the lack of fine-grained particulars like shade and texture, subsequently diminishing the type info within the generated pictures. Moreover, the extra step launched by these approaches is a time consuming course of, and might pose important drawbacks in sensible purposes. Alternatively, the first problem for adapter-based strategies lies in putting the best steadiness between the context leakage and magnificence depth. Content material leakage happens when a rise within the type depth leads to the looks of non-style components from the reference picture within the generated output, with the first level of issue being separating kinds from content material throughout the reference picture successfully. To deal with this difficulty, some frameworks assemble paired datasets that symbolize the identical object in several kinds, facilitating the extraction of content material illustration, and disentangled kinds. Nevertheless, because of the inherently undetermined illustration of favor, the duty of making large-scale paired datasets is restricted when it comes to the range of kinds it will probably seize, and it’s a resource-intensive course of as properly.

To deal with these limitations, the InstantStyle framework is launched which is a novel tuning-free mechanism based mostly on current adapter-based strategies with the power to seamlessly combine with different attention-based injecting strategies, and attaining the decoupling of content material and magnificence successfully. Moreover, the InstantStyle framework introduces not one, however two efficient methods to finish the decoupling of favor and content material, attaining higher type migration with out having the necessity to introduce extra strategies to realize decoupling or constructing paired datasets.

Moreover, prior adapter-based frameworks have been used broadly within the CLIP-based strategies as a picture function extractor, some frameworks have explored the opportunity of implementing function decoupling throughout the function area, and compared towards undetermination of favor, it’s simpler to explain the content material with textual content. Since pictures and texts share a function area in CLIP-based strategies, a easy subtraction operation of context textual content options and picture options can cut back content material leakage considerably. Moreover, in a majority of diffusion fashions, there’s a explicit layer in its structure that injects the type info, and accomplishes the decoupling of content material and magnificence by injecting picture options solely into particular type blocks. By implementing these two easy methods, the InstantStyle framework is ready to clear up content material leakage issues encountered by a majority of current frameworks whereas sustaining the power of favor.

To sum it up, the InstantStyle framework employs two easy, simple but efficient mechanisms to realize an efficient disentanglement of content material and magnificence from reference pictures. The Instantaneous-Model framework is a mannequin impartial and tuning-free method that demonstrates exceptional efficiency in type switch duties with an enormous potential for downstream duties.

Instantaneous-Model: Methodology and Structure

As demonstrated by earlier approaches, there’s a steadiness within the injection of favor circumstances in tuning-free diffusion fashions. If the depth of the picture situation is simply too excessive, it’d end in content material leakage, whereas if the depth of the picture situation drops too low, the type could not look like apparent sufficient. A serious motive behind this commentary is that in a picture, the type and content material are intercoupled, and as a result of inherent undetermined type attributes, it’s tough to decouple the type and intent. Because of this, meticulous weights are sometimes tuned for every reference picture in an try and steadiness textual content controllability and power of favor. Moreover, for a given enter reference picture and its corresponding textual content description within the inversion-based strategies, inversion approaches like DDIM are adopted over the picture to get the inverted diffusion trajectory, a course of that approximates the inversion equation to rework a picture right into a latent noise illustration. Constructing on the identical, and ranging from the inverted diffusion trajectory together with a brand new set of prompts, these strategies generate new content material with its type aligning with the enter. Nevertheless, as proven within the following determine, the DDIM inversion method for actual pictures is commonly unstable because it depends on native linearization assumptions, leading to propagation of errors, and results in lack of content material and incorrect picture reconstruction.

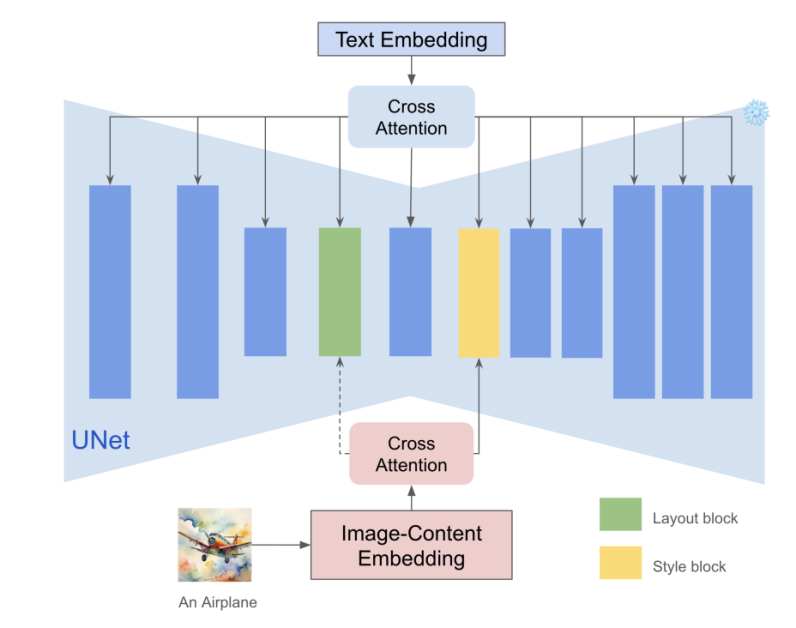

Coming to the methodology, as a substitute of using advanced methods to disentangle content material and magnificence from pictures, the Instantaneous-Model framework takes the best method to realize comparable efficiency. Compared towards the underdetermined type attributes, content material may be represented by pure textual content, permitting the Instantaneous-Model framework to make use of the textual content encoder from CLIP to extract the traits of the content material textual content as context representations. Concurrently, the Instantaneous-Model framework implements CLIP picture encoder to extract the options of the reference picture. Making the most of the characterization of CLIP international options, and publish subtracting the content material textual content options from the picture options, the Instantaneous-Model framework is ready to decouple the type and content material explicitly. Though it’s a easy technique, it helps the Instantaneous-Model framework is sort of efficient in maintaining content material leakage to a minimal.

Moreover, every layer inside a deep community is answerable for capturing completely different semantic info, and the important thing commentary from earlier fashions is that there exist two consideration layers which might be answerable for dealing with type. up Particularly, it’s the blocks.0.attentions.1 and down blocks.2.attentions.1 layers answerable for capturing type like shade, materials, environment, and the spatial format layer captures construction and composition respectively. The Instantaneous-Model framework makes use of these layers implicitly to extract type info, and prevents content material leakage with out dropping the type power. The technique is easy but efficient for the reason that mannequin has positioned type blocks that may inject the picture options into these blocks to realize seamless type switch. Moreover, for the reason that mannequin drastically reduces the variety of parameters of the adapter, the textual content management potential of the framework is enhanced, and the mechanism can also be relevant to different attention-based function injection fashions for enhancing and different duties.

Instantaneous-Model : Experiments and Outcomes

The Instantaneous-Model framework is applied on the Steady Diffusion XL framework, and it makes use of the generally adopted pre-trained IR-adapter as its exemplar to validate its methodology, and mutes all blocks besides the type blocks for picture options. The Instantaneous-Model mannequin additionally trains the IR-adapter on 4 million large-scale text-image paired datasets from scratch, and as a substitute of coaching all blocks, updates solely the type blocks.

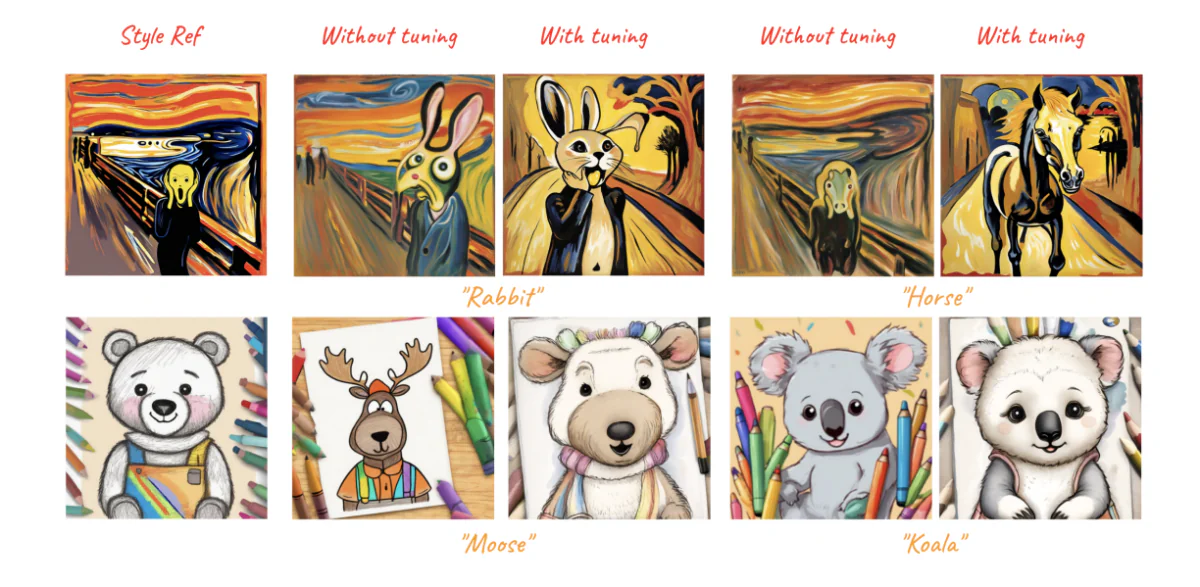

To conduct its generalization capabilities and robustness, the Instantaneous-Model framework conducts quite a few type switch experiments with varied kinds throughout completely different content material, and the outcomes may be noticed within the following pictures. Given a single type reference picture together with various prompts, the Instantaneous-Model framework delivers prime quality, constant type picture era.

Moreover, for the reason that mannequin injects picture info solely within the type blocks, it is ready to mitigate the difficulty of content material leakage considerably, and subsequently, doesn’t must carry out weight tuning.

Shifting alongside, the Instantaneous-Model framework additionally adopts the ControlNet structure to realize image-based stylization with spatial management, and the outcomes are demonstrated within the following picture.

Compared towards earlier state-of-the-art strategies together with StyleAlign, B-LoRA, Swapping Self Consideration, and IP-Adapter, the Instantaneous-Model framework demonstrates the perfect visible results.

Remaining Ideas

On this article, we’ve talked about Instantaneous-Model, a basic framework that employs two easy but efficient methods to realize efficient disentanglement of content material and magnificence from reference pictures. The InstantStyle framework is designed with the purpose of tackling the problems confronted by the present tuning-based diffusion fashions for picture era and customization. The Instantaneous-Model framework implements two important methods: A easy but efficient method to decouple type and content material from reference pictures throughout the function area, predicted on the belief that options throughout the identical function area may be both added to or subtracted from each other. Second, stopping type leaks by injecting the reference picture options solely into the style-specific blocks, and intentionally avoiding the necessity to use cumbersome weights for fine-tuning, usually characterizing extra parameter-heavy designs.