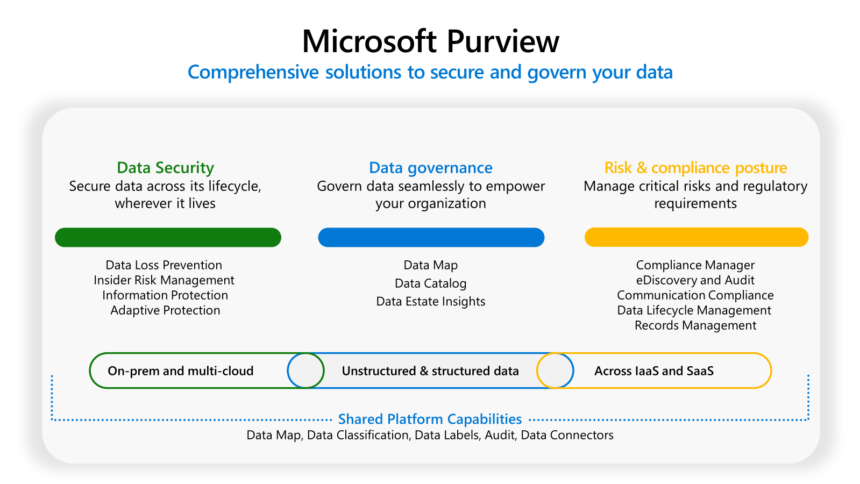

At the Microsoft Ignite conference today, the software giant unveiled new data security and compliance capabilities in Microsoft Purview aimed at protecting information used in generative AI systems like Copilot.

The new features will allow Copilot users on Microsoft 365 to control what data the AI assistant can access, automatically classify sensitive data in responses, and institute compliance controls around LLM usage.

Herain Oberi, general manager of Microsoft data security, compliance, and privacy, and Rudra Mitra, corporate vice president at Microsoft, spoke with VentureBeat ahead of the announcement in a candid conversation where they shared key insights into Microsoft’s approach.

“Data is effectively the foundation on which AI is built. AI is only as good as the data that goes in. And so it turns out, it’s an extremely important part of it,” Oberi said, highlighting the critical role data plays in AI applications.

“With Purview, now, if you connect those two dots, Microsoft is looking to secure the future of AI or secure the future of the data with AI. And I think that’s just such a responsible approach,” Mitra told VentureBeat.

Visibility into Copilot risks and usage

A new AI hub in Purview will give administrators visibility into Copilot usage across the organization. They can see which employees are interacting with the AI and assess associated risks.

Sensitive data can also be blocked from being input into Copilot based on user risk profiles, and output from the AI will inherit protective labels from its source data.

“It’s not just visibility across Microsoft’s Copilots, we think the whole picture is what’s important for the customer here,” Mitra said.

Compliance policies extended to Copilot

On the compliance side, Purview’s auditing, retention and communication monitoring will now extend to Copilot interactions.

But this is just the beginning, as Microsoft plans to expand Purview’s protection beyond Copilot to AI built in-house and third-party consumer apps like ChatGPT.

With AI poised for greater adoption, Microsoft is positioning itself at the forefront of responsible and ethical data use in enterprise AI systems. Robust data governance will be key to ensuring privacy and preventing misuse in this next frontier of technology.

However, truly responsible AI will require buy-in across the entire tech industry. Competitors like Google, Amazon and IBM will need to make data ethics a priority as well. For if users can’t trust AI, it will never reach its full potential.

The path forward is clear: enterprises want both cutting-edge innovation and cast-iron data protection. Whichever company makes trust job one will lead us into the AI-powered future.