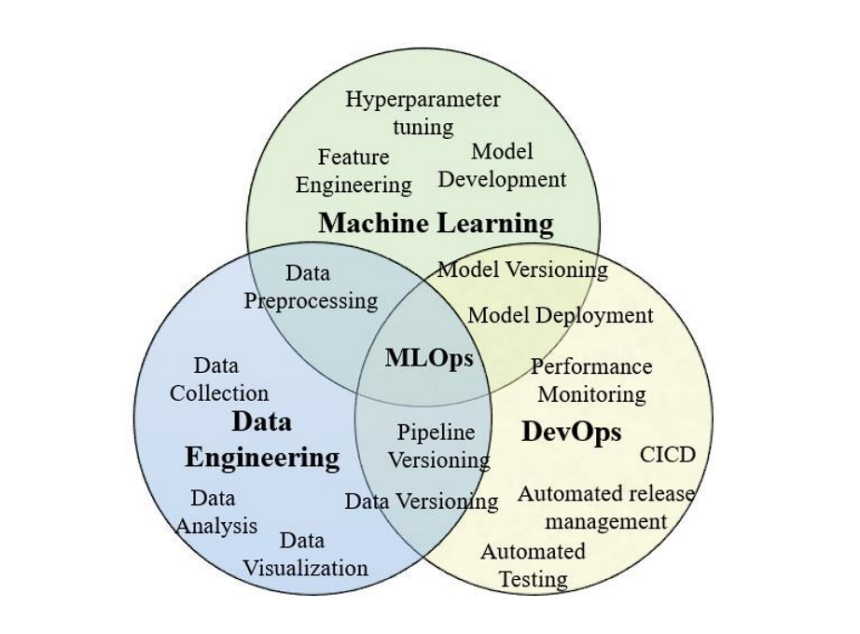

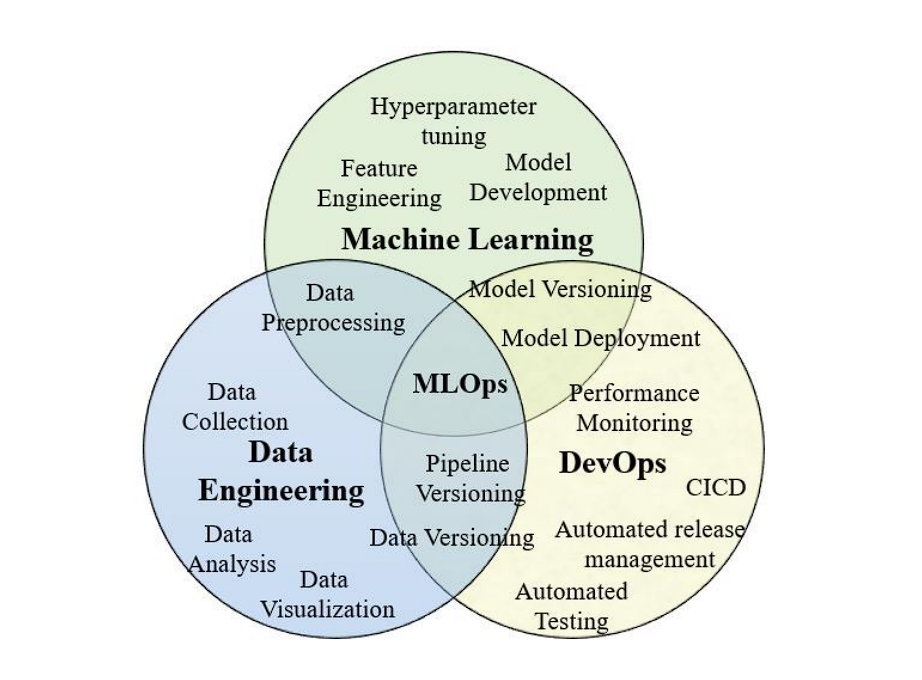

MLflow is an open-source platform designed to manage the entire machine learning lifecycle, making it easier for ML engineers, data scientists, software developers, and everyone else involved in the process. MLflow can be seen as a tool that fits within the MLOps framework (the ML counterpart of DevOps).

Machine learning operations (MLOps) are a set of practices that automate and simplify machine learning (ML) workflows and deployments.

Many machine learning projects fail to deliver practical results because of difficulties in automation and deployment. The reason is that most traditional data science practices involve manual workflows, which lead to issues during deployment.

MLOps aims to automate and operationalize ML models, enabling smoother transitions to production and deployment. MLflow specifically addresses the challenges in the development and experimentation phase.

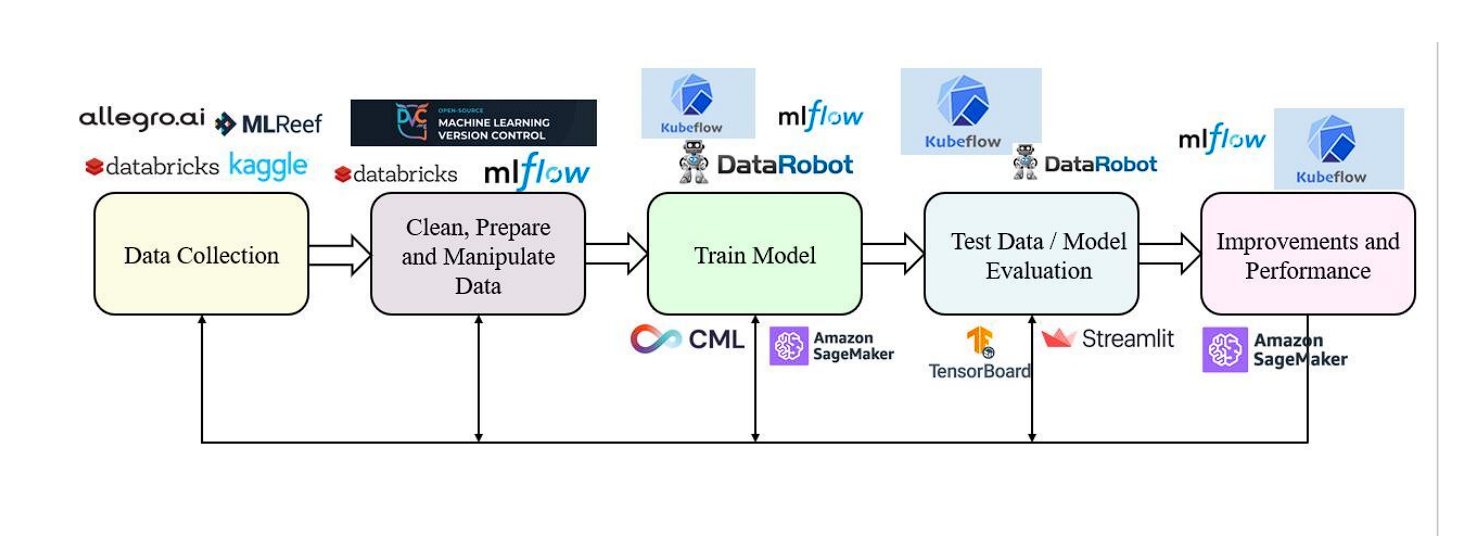

Stages of the ML Life Cycle

- Data Acquisition: Collect data from relevant sources to accurately represent the problem you’re solving.

- Data Exploration and Preparation: Clean and prepare the data for analysis, including identifying patterns and fixing inconsistencies. Data rarely comes in a clean, ready-to-use format.

- Model Training: This step involves selecting an algorithm, feeding it training data, and iteratively adjusting its parameters to minimize error.

- Model Evaluation: Assess the performance of trained models to identify the most effective one.

- Deployment: Once a model is selected based on its evaluation metrics, it’s deployed into a production environment. Deployment can take various forms, such as integrating the model into existing applications, using it in a batch process for large datasets, or making it available as a service through an API.

Automating these ML lifecycle steps is extremely difficult. Many models fail, and many others only barely reach the production stage. Here are the challenges engineers face during the development stage of ML models:

- Variety of Tools: Unlike traditional software development, ML requires experimenting with various tools (libraries, frameworks) across different phases, making workflow management complex.

- Experiment Tracking: Numerous configuration options (data, hyperparameters, pre-processing) affect ML results. Tracking them is essential for analyzing results, but challenging.

- Reproducibility: The ability to reproduce results is crucial in ML development. However, without detailed tracking and management of code, data, and environment configurations, reproducing the same results is practically impossible. This issue becomes even more difficult when code is passed between different roles, such as from a data scientist to a software engineer for deployment.

- Production Deployment: Deploying models into production involves challenges related to integration with existing systems, scalability, and ensuring low-latency predictions. Moreover, maintaining CI/CD (continuous integration and continuous delivery) is even more challenging.

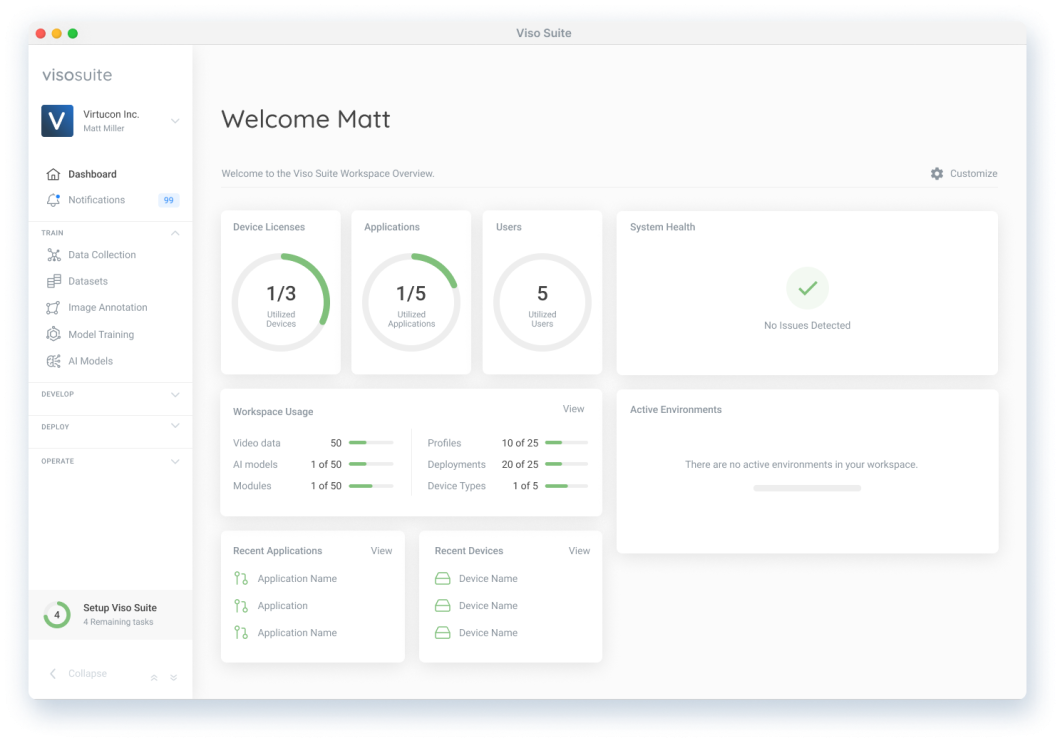

Viso Suite: By consolidating the entire ML pipeline into a unified infrastructure, Viso Suite makes it easy to train models and deploy them anywhere. By using Viso Suite to manage the entire lifecycle, ML teams can cut the time-to-value of their applications to just 3 days.

What is MLflow?

MLflow is an open-source platform that helps streamline the ML process by following the MLOps framework. It can be divided into four main components:

- MLflow Tracking: An API for recording experiment details. This includes the code used, parameters set, input data provided, metrics generated during training, and any output files produced.

- MLflow Projects: MLflow Projects provides a simple format for packaging machine learning code into reusable projects. Each project can specify its environment (e.g., required libraries), the code to execute, and parameters that allow programmatic control within multi-step workflows or automated tools for hyperparameter tuning.

- MLflow Models: MLflow Models provides a generic format for packaging trained models. This format includes both the code and data essential for the model’s operation.

- Model Registry: This serves as a centralized place where you can see all your ML models, providing features such as collaboration and model versioning.

What is MLflow Tracking?

MLflow Tracking is an API that helps you manage and monitor your machine learning experiments. The API logs, tracks, and stores information about experiments. It is available for Python, R, and Java, and as a REST API.

Here are the key terms and features of MLflow Tracking:

- Runs: In MLflow, a “run” is an individual execution of machine learning code. Each run represents a single trial, which could involve training a model, testing a set of hyperparameters, or any other ML task. Runs serve as containers that provide a structured way to record the experimentation process.

- Experiments: Groups of related runs. Experiments help organize your work and compare runs within the same context.

- Tracking APIs: These APIs let you programmatically interact with MLflow Tracking to log data and manage experiments.

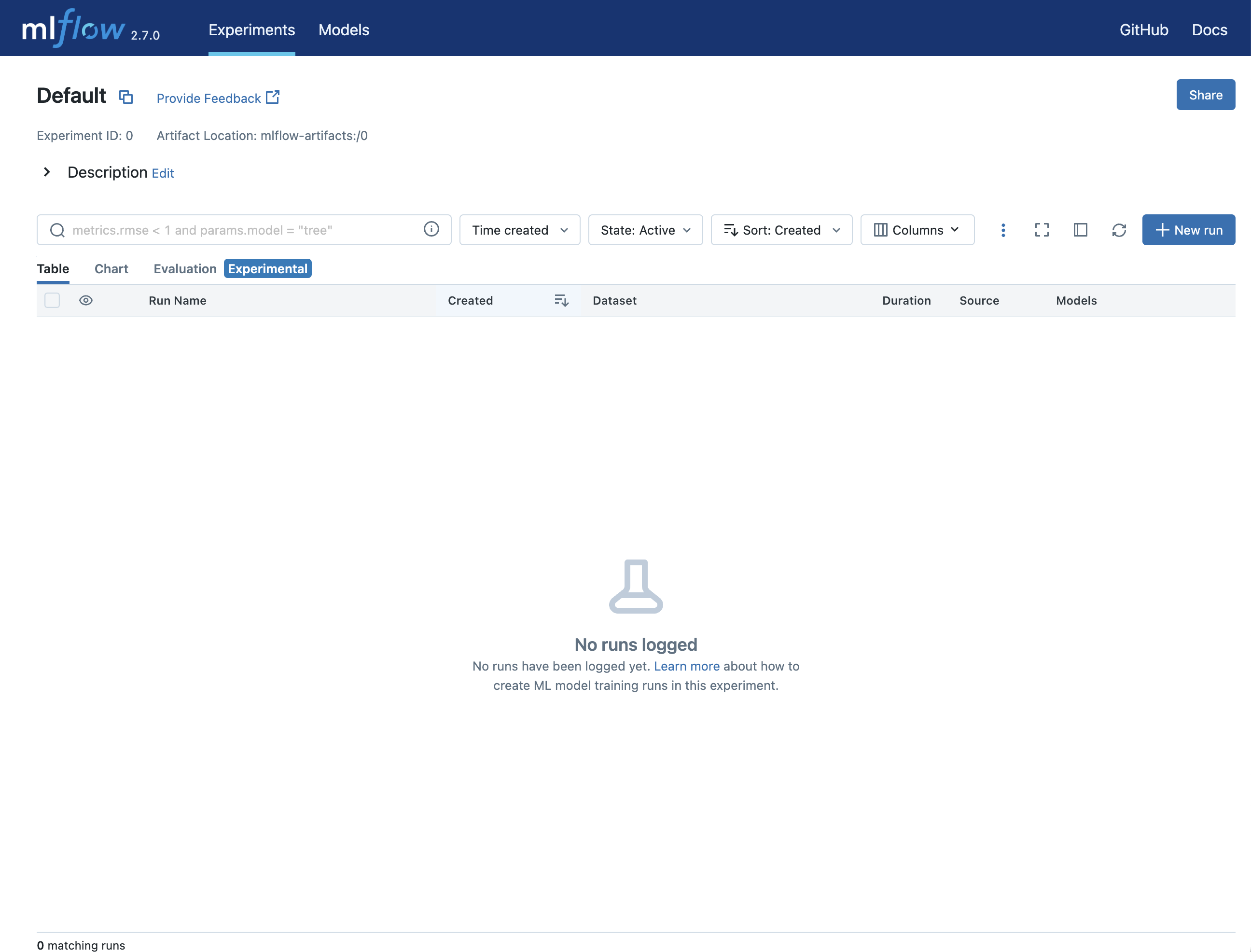

- Tracking UI: A web interface for visualizing experiment results and exploring runs.

- Backend Store: Holds run and experiment metadata. MLflow supports two types of backend storage: local files or a database such as PostgreSQL.

- Artifact Store: Stores larger files generated during your runs, such as model weights or images. Besides the local filesystem, you can also use remote storage such as Amazon S3 and Azure Blob Storage.

- MLflow Tracking Server (Optional): A component that provides a central service for managing backend stores, artifact stores, and access control.

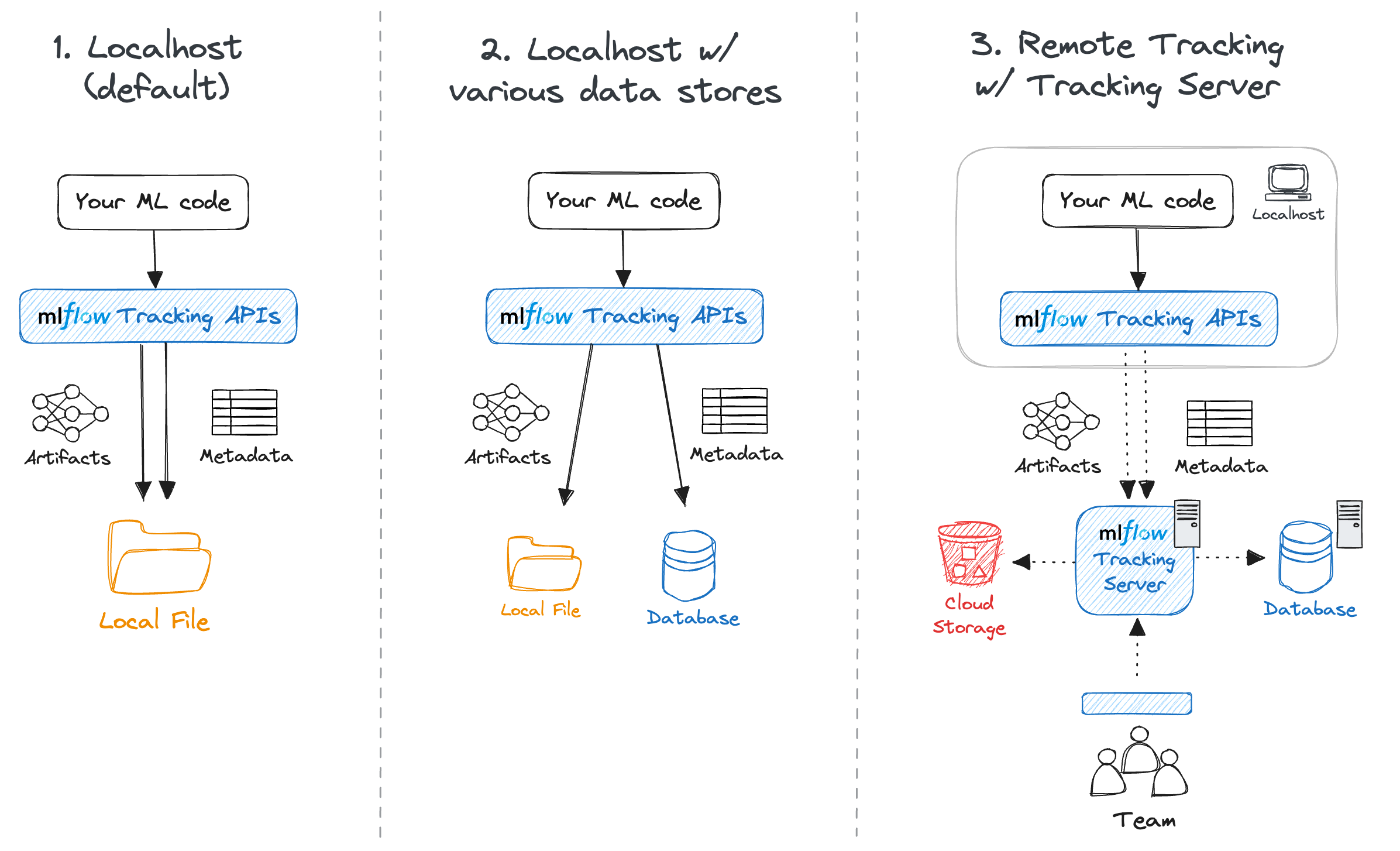

MLflow Tracking offers the flexibility to adapt to your development workflow. You can use it to track models locally or in the cloud.

- Local Development: For solo development, MLflow stores everything locally by default, without needing any external servers or databases.

- Local Tracking with Database: You can use a local database to manage experiment metadata for a cleaner setup compared to local files.

- Remote Tracking with Tracking Server: For team development, a central tracking server provides a shared location to store artifacts and experiment data, with access control features.
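In practice, these three setups differ mainly in the tracking URI that the client points at. The helper below is a hypothetical illustration of the URI forms involved; the mode names, the SQLite file name, and the server address are placeholders rather than anything MLflow defines.

```python
def example_tracking_uri(mode: str) -> str:
    """Return an illustrative tracking URI for each setup.

    All values are placeholders chosen for this sketch.
    """
    uris = {
        # Local development: metadata and artifacts in a local directory.
        "local-files": "./mlruns",
        # Local tracking with a database: metadata in a SQLite file.
        "local-database": "sqlite:///mlflow.db",
        # Remote tracking: a shared tracking server reached over HTTP.
        "remote-server": "http://tracking.example.com:5000",
    }
    return uris[mode]


uri = example_tracking_uri("local-database")
print(uri)
# With MLflow installed, the URI would be applied with:
#   import mlflow
#   mlflow.set_tracking_uri(uri)
```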

Benefits of Tracking Experiments

Tracking your ML experiments with MLflow brings several key benefits:

- Comparison: MLflow Tracking lets you easily compare different runs and analyze how changes in parameters or training configurations affect model performance. This makes it easier to identify the best-performing models for deployment.

- Reproducibility: Experiment tracking captures the details of a run, including code versions, parameters, and metrics. This helps ensure you can reproduce successful experiments later.

- Collaboration: You can share your experiment results with team members and ask for help when stuck.

What are Projects?

Projects offer a standardized way to package ML code for reusability and reproducibility.

Each MLflow Project is a directory containing code or a Git repository. It is defined by a YAML file called MLproject, which specifies dependencies (using a Conda environment or a Docker container image) and how to run the code.

Projects provide flexibility in execution through entry points. A project can define multiple entry points with named parameters, allowing users to run specific parts of the project without needing to understand its internals. These parameters can be adjusted when the project is run.

The core of an MLflow Project is its MLproject file, a YAML file that specifies:

- Name: Optionally, the name of the project.

- Environment: This defines the software dependencies needed to execute the project. MLflow supports virtualenv, Conda, Docker containers, and the system environment.

- Entry Points: These are commands within the project that you can invoke to run specific parts of your code. The default entry point is named “main”.
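The three parts of an MLproject file can be sketched as follows. The snippet writes a small, hypothetical MLproject file to a temporary directory; the project name, the `alpha` parameter, and the `train.py` and `conda.yaml` files it refers to are assumptions made for illustration and are not created here.

```python
import tempfile
from pathlib import Path

# Hypothetical MLproject contents: a name, an environment, and one
# entry point ("main") with a single named parameter.
MLPROJECT = """\
name: demo_project

conda_env: conda.yaml

entry_points:
  main:
    parameters:
      alpha: {type: float, default: 0.5}
    command: "python train.py --alpha {alpha}"
"""

project_dir = Path(tempfile.mkdtemp())
project_file = project_dir / "MLproject"
project_file.write_text(MLPROJECT)
print(project_file.read_text())

# With train.py and conda.yaml added to the directory, the project
# could then be launched from the command line with
#   mlflow run <project_dir> -P alpha=0.1
# or from Python with
#   mlflow.projects.run(uri=str(project_dir), parameters={"alpha": 0.1})
```

Overriding `alpha` at launch time is what lets callers adjust the run without reading the training code.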

Projects can be submitted to cloud platforms like Databricks for remote execution. Users can provide parameters at runtime without needing to understand project internals. MLflow automatically sets up the project’s runtime environment and executes it. Moreover, project runs can be tracked using the Tracking API.

Using projects in your ML pipeline provides the following benefits:

- Reusable Code: Share and collaborate on packaged code.

- Reproducible Experiments: Ensure consistent results by capturing dependencies and parameters.

- Streamlined Workflows: Integrate projects into automated workflows.

- Remote Execution: Run projects on platforms with dedicated resources.

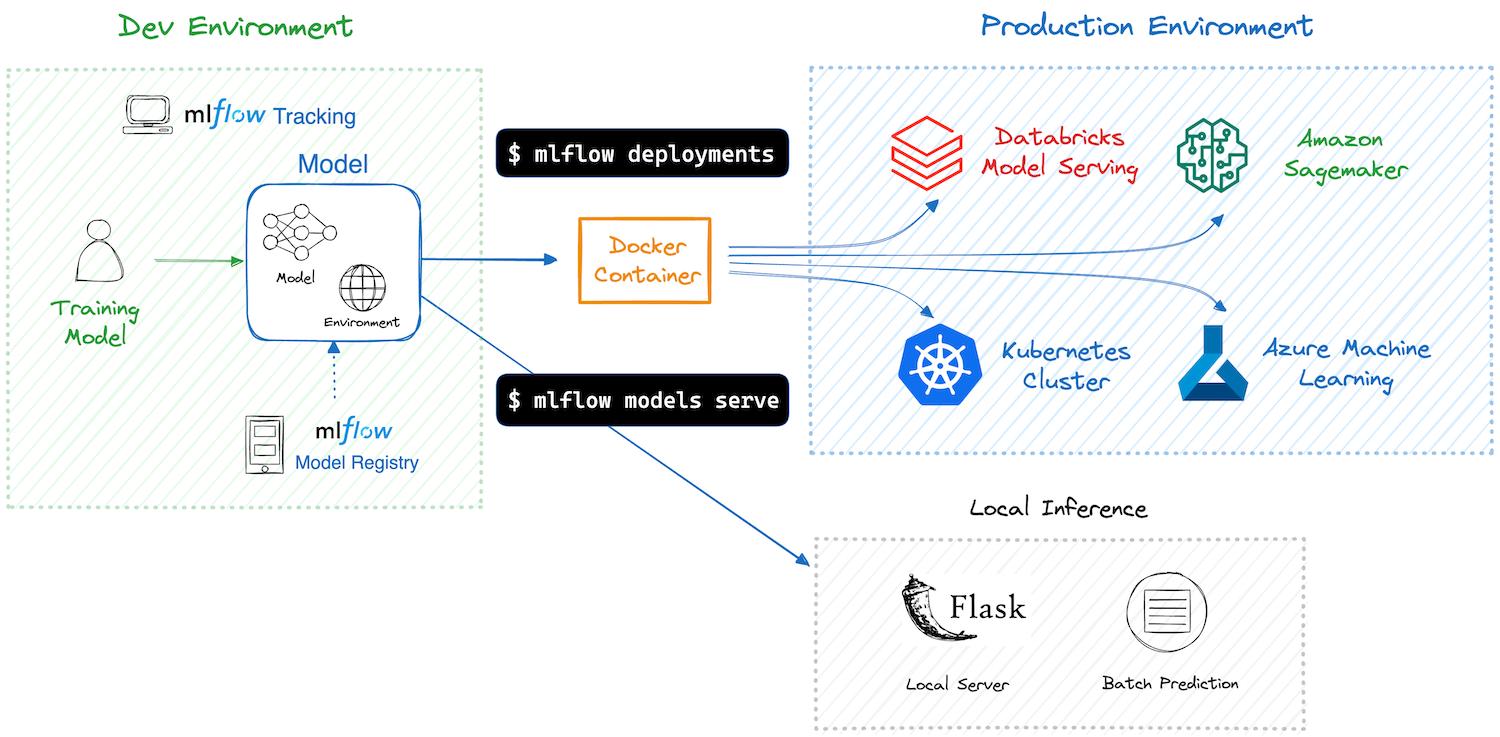

MLflow Models

MLflow Models let you package a trained ML or AI model in multiple formats (e.g., TensorFlow or PyTorch), which the developers call “flavors”.

The key benefit is that the same ML model can be deployed in a Docker container for real-time REST serving and, at the same time, as an Apache Spark user-defined function for batch inference.

This multi-flavor system ensures that a model can be understood and used at various levels of abstraction. Additionally, you don’t need to tweak and manage numerous tools. Here are a few flavors:

- Python Function: This versatile flavor allows packaging models as generic Python functions. Any tool that can execute Python code can use this flavor for inference.

- R Function

- Spark MLlib

- TensorFlow and PyTorch

- Keras (keras)

- H2O

- scikit-learn

MLflow Model Structure: The MLmodel file is a YAML file that lists the supported flavors and includes fields such as time_created and run_id.

Benefits of Flavors in Models

- Models can be used at different levels of abstraction depending on the tool.

- Enables deployment in various environments, such as REST API model serving, Spark UDFs, and cloud-managed serving platforms like Amazon SageMaker and Azure ML.

- Significantly reduces the complexity associated with model deployment and reuse across various platforms.

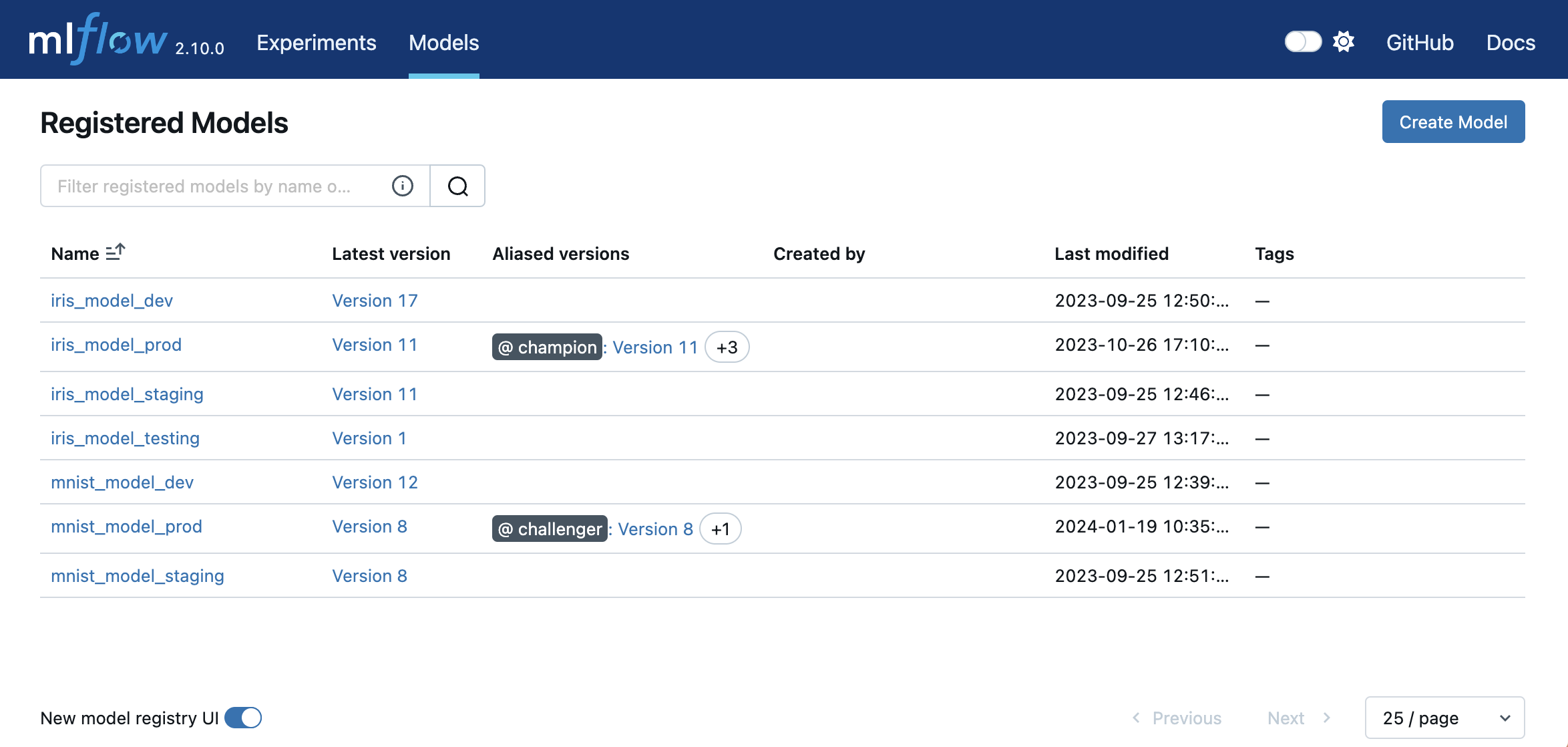

Model Registry

This component offers a centralized system for managing the entire lifecycle of machine learning models (a model store), with functionality such as versioning, model storage, aliases, and annotations.

- Model Version:

  - Each registered model can have multiple versions.

  - New models added to the same registered model become new versions (i.e., the version number increases).

  - Can have tags for tracking attributes (e.g., pre-deployment checks).

- Model Alias:

  - A flexible reference to a specific model version.

  - Lets you use a name (alias) instead of the full model URI.

- Tags:

  - Attach key-value pairs to models and versions to label and categorize them.

  - Example: a “task” tag for identifying question-answering models.

- Annotations and Descriptions:

  - Markdown text for documenting models and versions.

  - Include details like algorithm descriptions, datasets used, or methodology.

  - Provides a central location for team collaboration and knowledge sharing.

Setting Up MLflow

You can run MLflow locally on your machine, on a server, or in the cloud. See the official MLflow documentation for detailed setup instructions.

- Local Setup: Run MLflow locally for individual use and testing. Simply install MLflow using pip, and you can start logging experiments immediately using the file store on your local filesystem.

- Server Setup: You may want to set up an MLflow tracking server for team environments to allow access by multiple users. This involves running an MLflow server with specified database and file storage locations. You can use a database like MySQL or PostgreSQL for storing experiment metadata and a remote file store like Amazon S3 for artifacts.

- Cloud Providers: MLflow can also integrate with cloud platforms, allowing you to leverage cloud storage and compute resources. Providers like Databricks offer managed MLflow services, simplifying the setup process.
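For the server setup, a rough sketch of the launch command is shown below, assembled in Python so it can be dropped into a deployment script. The database URL, bucket name, host, and port are placeholders, and the helper function itself is hypothetical; only the `mlflow server` flags are taken from the MLflow CLI.

```python
import shlex


def tracking_server_command(backend_store_uri, artifact_root,
                            host="127.0.0.1", port=5000):
    """Build the argument list for launching an MLflow tracking server."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_store_uri,   # experiment metadata
        "--default-artifact-root", artifact_root,   # model files, plots, etc.
        "--host", host,
        "--port", str(port),
    ]


# Placeholder locations: a PostgreSQL database and an S3 bucket.
command = tracking_server_command(
    "postgresql://user:password@db.example.com:5432/mlflow",
    "s3://example-bucket/mlflow-artifacts",
)
print(shlex.join(command))

# To actually start the server (this call blocks until it is stopped):
#   import subprocess
#   subprocess.run(command, check=True)
```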

Interacting with MLflow

MLflow provides several ways to interact with its features:

- MLflow APIs: MLflow offers Python, R, and Java APIs that let you log metrics, parameters, and artifacts, manage projects, and deploy models programmatically. These APIs are the primary way in which most users interact with MLflow.

- MLflow UI: MLflow includes a web-based UI that lets you visualize experiments, compare different runs, and manage models in the Model Registry. It’s a convenient way to review and share results with team members.

- CLI: The MLflow Command-Line Interface (CLI) is a powerful tool that lets users interact with MLflow’s functionality directly from the terminal, offering an efficient way to automate tasks and integrate MLflow into broader workflows or CI/CD pipelines.

Example Use Cases of MLflow

Here are a few hypothetical scenarios where MLflow can enhance ML model development.

- Autonomous Vehicle Development: A company developing self-driving cars leverages MLflow Projects to ensure the reproducibility of its perception and control algorithms. Each project version is stored with its dependencies, allowing the team to replicate training runs and easily roll back to previous versions if needed.

- Personalized Learning Platform: A company tailors educational content for individual students. MLflow Tracking helps track experiments that compare different recommendation algorithms and content selection strategies. By analyzing metrics like student engagement and learning outcomes, data scientists can identify the most effective approach for personalized learning.

- Fraud Detection: A bank uses MLflow to track experiments with various machine learning models for fraud detection. It can compare different models’ performance under various conditions (e.g., transaction size, location) and fine-tune hyperparameters for optimal fraud detection accuracy.

- Social Media Content Moderation: A social media platform uses the MLflow Model Registry to manage the deployment lifecycle of content moderation models. It can version and stage models for different levels of moderation (e.g., automated vs. human review) and integrate the Model Registry with CI/CD pipelines for automated deployment of new models.

- Drug Discovery and Research: A pharmaceutical company uses MLflow Projects to manage workflows for analyzing large datasets of molecules and predicting their potential effectiveness as drugs. Versioning ensures researchers can track changes to the project and collaborate effectively.