Nvidia is strengthening its co-sell strategy with Microsoft. Today, at the Ignite conference hosted by the Satya Nadella-led giant, the chipmaker announced an AI foundry service that will help enterprises and startups build custom AI applications on the Azure cloud, including those that can tap enterprise data with retrieval augmented generation (RAG).

“Nvidia’s AI foundry service combines our generative AI model technologies, LLM training expertise and giant-scale AI factory. We built this in Microsoft Azure so enterprises worldwide can connect their custom model with Microsoft’s world-leading cloud services,” Jensen Huang, founder and CEO of Nvidia, said in a statement.

Nvidia also announced new 8-billion parameter models, which are also part of the foundry service, as well as a plan to add its next-gen GPU to Microsoft Azure in the coming months.

How will the AI foundry service help on Azure?

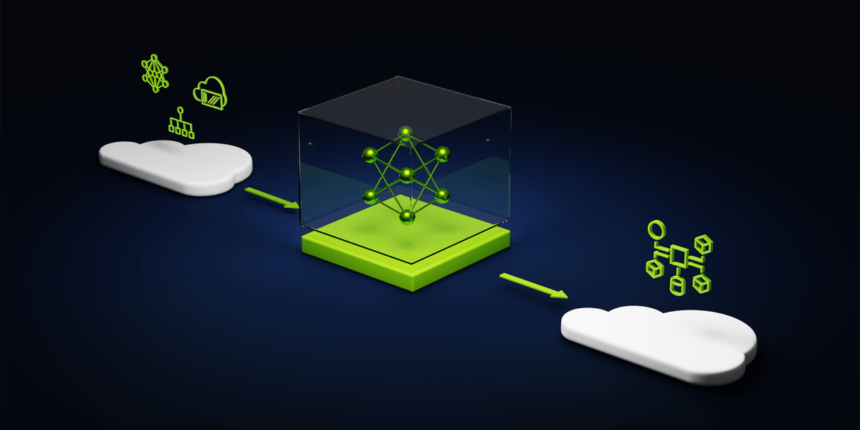

With Nvidia’s AI foundry service on Azure, enterprises using the cloud platform will get all the key elements required to build a custom, business-centered generative AI application in one place. This means everything will be available end-to-end, right from the Nvidia AI foundation models and NeMo framework to the Nvidia DGX Cloud supercomputing service.

“For the first time, this entire process with all the pieces that are needed, from hardware to software, are available end to end on Microsoft Azure. Any customer can come and do the entire enterprise generative AI workflow with Nvidia on Azure. They can procure the required components of the technology right inside Azure. Simply put, it’s a co-sell between Nvidia and Microsoft,” Manuvir Das, the VP of enterprise computing at Nvidia, said in a media briefing.

To provide enterprises with a wide range of foundation models to work with when using the foundry service in Azure environments, Nvidia is also adding a new family of Nemotron-3 8B models that support the creation of advanced enterprise chat and Q&A applications for industries such as healthcare, telecommunications and financial services. These models will have multilingual capabilities and are set to become available via the Azure AI model catalog as well as via Hugging Face and the Nvidia NGC catalog.

Other community foundation models in the Nvidia catalog are Llama 2 (also coming to the Azure AI catalog), Stable Diffusion XL and Mistral 7B.

Once a user has access to the model of choice, they can move to the training and deployment stage for custom applications with Nvidia DGX Cloud and AI Enterprise software, available via the Azure marketplace. DGX Cloud features instances that customers can rent for training, scaling to thousands of Nvidia Tensor Core GPUs. It also includes the AI Enterprise toolkit, which brings the NeMo framework and Nvidia Triton Inference Server to Azure’s enterprise-grade AI service to speed LLM customization.

This toolkit is also available as a separate product on the marketplace, Nvidia said, while noting that users will be able to use their existing Microsoft Azure Consumption Commitment credits to take advantage of these offerings and speed model development.

Notably, the company had also announced a similar partnership with Oracle last month, giving eligible enterprises an option to purchase the tools directly from the Oracle Cloud marketplace and start training models for deployment on the Oracle Cloud Infrastructure (OCI).

Currently, software major SAP, Amdocs and Getty Images are among the early users testing the foundry service on Azure and building custom AI applications targeting different use cases.

What’s more from Nvidia and Microsoft?

Along with the service for generative AI, Microsoft and Nvidia also expanded their partnership for the chipmaker’s latest hardware.

Specifically, Microsoft announced new NC H100 v5 virtual machines for Azure, the industry’s first cloud instances featuring a pair of PCIe-based H100 GPUs connected via Nvidia NVLink, with nearly 4 petaflops of AI compute and 188GB of faster HBM3 memory.

The Nvidia H100 NVL GPU can deliver up to 12x higher performance on GPT-3 175B over the previous generation and is ideal for inference and mainstream training workloads.

In addition, the company plans to add the new Nvidia H200 Tensor Core GPU to its Azure fleet next year. This offering brings 141GB of HBM3e memory (1.8x more than its predecessor) and 4.8 TB/s of peak memory bandwidth (a 1.4x increase), serving as a purpose-built solution to run the largest AI workloads, including generative AI training and inference.

It will join Microsoft’s new Maia 100 AI accelerator, giving Azure users multiple options to choose from for AI workloads.
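The H200 ratios quoted above can be sanity-checked with a few lines of arithmetic. The sketch below assumes the predecessor is the H100 SXM with 80GB of HBM3 and 3.35 TB/s of peak bandwidth; those two baseline figures come from Nvidia's published H100 specifications and are not stated in this article.

```python
# Assumed baseline (not from the article): H100 SXM, 80 GB HBM3, 3.35 TB/s.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35

# H200 figures as quoted in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8


def ratio(new: float, old: float) -> float:
    """Return new / old, rounded to one decimal place."""
    return round(new / old, 1)


memory_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"memory: {memory_ratio}x, bandwidth: {bandwidth_ratio}x")
```

Under that assumed baseline, both results round to the multipliers Nvidia cites.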

Finally, to accelerate LLM work on Windows devices, Nvidia announced a bunch of updates, including an update for TensorRT-LLM for Windows, which introduces support for new large language models such as Mistral 7B and Nemotron-3 8B.

The update, set to release later this month, will also deliver five times faster inference performance, which will make running these models easier on desktops and laptops with GeForce RTX 30 Series and 40 Series GPUs with at least 8GB of RAM.

Nvidia added that TensorRT-LLM for Windows will also be compatible with OpenAI’s Chat API through a new wrapper, enabling hundreds of developer projects and applications to run locally on a Windows 11 PC with RTX, instead of in the cloud.
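To illustrate what Chat API compatibility could mean in practice, here is a minimal sketch that builds an OpenAI-style chat completion request aimed at a local server rather than the cloud. The base URL, port and model name are hypothetical placeholders, since the article does not describe how the wrapper is exposed; only the request shape (a `model` plus a list of `messages` posted to `/chat/completions`) follows OpenAI's documented format.

```python
import json
import urllib.request

# Hypothetical values: the article gives neither an endpoint nor a model name.
LOCAL_BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "local-model"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a local endpoint."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{LOCAL_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarize this document.")
print(request.full_url)

# Sending the request only works if a compatible server is actually running:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

The point of such a wrapper is that an application already written against the cloud API would, in principle, only need its base URL changed to run against a local model.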