Because of their exceptional content creation capabilities, generative large language models are now at the forefront of the AI revolution, with ongoing efforts to enhance their generative abilities. However, despite rapid advancements, these models require substantial computational power and resources, largely because they consist of hundreds of billions of parameters. Moreover, to operate smoothly, generative AI models rely on thousands of GPUs, leading to significant operational costs. These high operational demands are a key reason why generative AI models are not yet effectively deployed on personal-grade devices.

In this article, we will discuss PowerInfer, a high-speed LLM inference engine designed for standard computers powered by a single consumer-grade GPU. The PowerInfer framework exploits the high locality inherent in LLM inference, which is characterized by a power-law distribution in neuron activations. This means that a small subset of ‘hot’ neurons is consistently active across inputs, while the rest, termed ‘cold’ neurons, activate only for specific inputs. This approach allows the PowerInfer framework to reduce the computing power needed for generative AI to produce the desired outputs.

We will delve into the PowerInfer framework in detail, exploring its methodology, pipeline, and practical application results. Let’s begin.

PowerInfer: Fast Large Language Model Inference with a Consumer-Grade GPU

Generative models such as ChatGPT and DALL-E are known for sophisticated generative and natural language processing tasks. Because of their high computational requirements, these models are usually deployed in data centers with advanced GPUs. This dependence on data center hardware highlights the need to deploy large language models on more accessible local platforms such as personal computers.

Increasing the accessibility of large language models could reduce inference and content generation costs, enhance data privacy, and allow for model customization. Moreover, while data center deployments prioritize high throughput, local LLM deployments can focus on low latency because of their smaller batch sizes.

However, deploying these models on local devices poses significant challenges because of their substantial memory requirements. Large language models, functioning as autoregressive transformers, generate text token by token, with each token requiring access to the entire model, which can comprise hundreds of billions of parameters. This necessitates numerous high-end GPUs for low-latency output generation. Additionally, local deployments typically process individual requests sequentially, limiting the potential for parallel processing.

To address the memory requirements of generative AI models, existing solutions employ techniques such as model compression and offloading. Compression techniques like distillation, pruning, and quantization reduce the model size, but the resulting models are still too large for standard-grade GPUs in personal computers. Model offloading, which partitions the model at the Transformer layer level between the CPU and GPU, allows for distributed layer processing across CPU and GPU memory. However, this method is limited by the slow PCIe interconnect and the CPU’s limited computational capabilities, leading to high inference latency.

The PowerInfer framework posits that the mismatch between LLM inference characteristics and hardware structure is the primary cause of memory issues in LLM inference. Ideally, frequently accessed data should be stored in high-bandwidth, limited-capacity GPU memory, while less frequently accessed data should reside in lower-bandwidth, high-capacity CPU memory. However, the large parameter volume touched in each LLM inference iteration makes the working set too large for a single GPU, resulting in inefficient exploitation of locality.

The inference process in large language models demonstrates high locality, with each iteration activating a limited number of neurons. The PowerInfer framework exploits this locality by managing the small number of hot neurons on the GPU, while the CPU handles the cold neurons. It preselects and preloads hot neurons onto the GPU and identifies activated neurons at runtime. This approach minimizes costly PCIe data transfers, allowing the GPU and CPU to process their assigned neurons independently.

However, deploying LLMs on local devices faces obstacles. Online predictors, crucial for identifying active neurons, consume considerable GPU memory. The PowerInfer framework uses an adaptive method to construct small predictors for layers with higher activation skewness and sparsity, maintaining accuracy while reducing size. Additionally, exploiting activation sparsity requires specialized sparse operators. The PowerInfer framework employs neuron-aware sparse operators that operate directly on individual neurons, eliminating the need for specific sparse format conversions.

Finally, optimally placing activated neurons between the CPU and GPU is challenging. The PowerInfer framework uses an offline stage to create a neuron placement policy, measuring each neuron’s impact on LLM inference outcomes and framing placement as an integer linear programming problem.

Architecture and Methodology

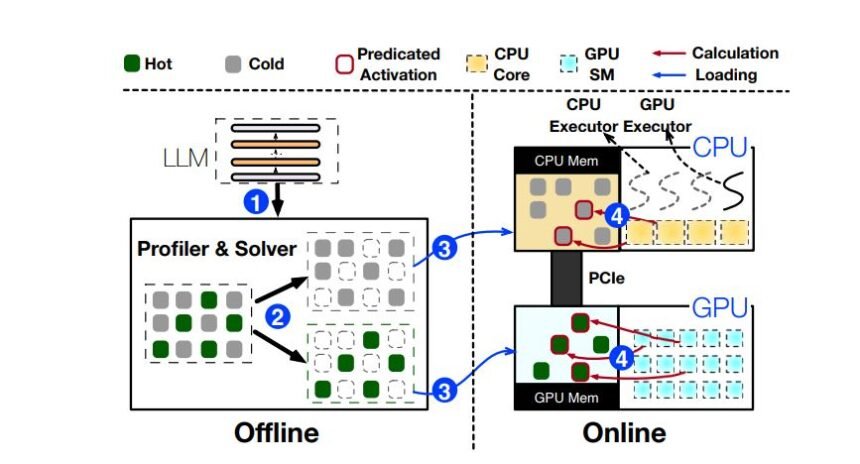

The figure above shows the architecture of the PowerInfer framework, which consists of offline and online components in the pipeline.

Because locality properties vary among different large language models, the offline component profiles the activation sparsity of the LLM, allowing it to differentiate between hot and cold neurons. In the online phase, the inference engine loads the two types of neurons into GPU and CPU memory and serves LLM requests at runtime with low latency.

Offline Phase: Policy Solver and LLM Profiler

In the offline phase, an LLM profiler component uses requests derived from a general dataset to collect activation data from the inference process. In the first step, it monitors the activation of neurons across all layers of the model, and a policy solver component then categorizes the neurons as either hot or cold. The primary goal of the policy solver is to allocate more frequently activated neurons to the GPU while allocating the remainder to the CPU. In the second step, the policy solver uses neuron impact metrics and hardware specifications to balance the workload between the two processing units, and uses integer linear programming to maximize the total impact metric of the neurons placed on the GPU.
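The profiling step described above can be illustrated with a minimal Python sketch. It is not PowerInfer's actual profiler: the trace format, the function names `profile_activations` and `split_hot_cold`, and the `coverage` threshold are all assumptions made for illustration. The sketch counts how often each neuron fires and labels as hot the smallest set of neurons that accounts for a chosen share of all activations.

```python
from collections import Counter


def profile_activations(activation_traces):
    """Count how often each neuron fires across profiling requests.

    activation_traces is a hypothetical trace format: one iterable of
    active neuron indices per generated token.
    """
    counts = Counter()
    for active in activation_traces:
        counts.update(active)
    return counts


def split_hot_cold(counts, num_neurons, coverage=0.8):
    """Return (hot, cold) lists of neuron indices.

    hot is the smallest set of most frequently activated neurons whose
    counts add up to at least `coverage` of all recorded activations.
    The threshold is an illustrative assumption, not a PowerInfer setting.
    """
    total = sum(counts.values())
    order = sorted(range(num_neurons), key=lambda i: counts[i], reverse=True)
    hot, covered = [], 0
    for idx in order:
        if covered >= coverage * total:
            break
        hot.append(idx)
        covered += counts[idx]
    hot_set = set(hot)
    cold = [i for i in range(num_neurons) if i not in hot_set]
    return sorted(hot), cold


# Five profiled tokens over a layer with five neurons.
traces = [[0, 1], [0, 2], [0, 1], [0], [1, 3]]
hot, cold = split_hot_cold(profile_activations(traces), num_neurons=5, coverage=0.75)
print(hot, cold)
```

With a power-law activation distribution, the hot list stays short even for a high coverage value, which is what makes it feasible to keep those neurons in limited GPU memory.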

Online Phase: Neuron-Aware LLM Inference Engine

Once the offline stage has completed, the framework proceeds to the online phase. In the third step of the process, the online engine assigns hot and cold neurons to their respective processing units before processing user requests, according to the output of the offline policy solver. At runtime, in step four, the online engine manages GPU-CPU computation by creating CPU and GPU executors, which are threads running on the CPU side. The engine then predicts the activated neurons and skips the non-activated ones. Activated neurons that were preloaded onto the GPU are processed there. Meanwhile, the CPU computes the results for its neurons and transfers them to be integrated on the GPU. The online engine is able to focus on individual neuron rows and columns within matrices because it uses sparse neuron-aware operators on both CPUs and GPUs.
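To make the runtime flow more concrete, here is a rough Python sketch of two executors running as threads, each computing only the neurons it owns that are predicted to be active, followed by a merge of the partial results. Everything in it is an assumption for illustration: the names `run_executor` and `hybrid_layer` are hypothetical, both executors run plain Python rather than real GPU kernels, and the set of predicted neurons is passed in instead of coming from a trained predictor.

```python
from concurrent.futures import ThreadPoolExecutor


def relu_dot(weight_row, x):
    """ReLU of the dot product between one neuron's weights and the input."""
    return max(0.0, sum(w * v for w, v in zip(weight_row, x)))


def run_executor(neuron_rows, predicted_active, x):
    """Compute only this device's neurons that are predicted to be active.

    neuron_rows maps an original neuron index to its weight row.
    """
    return {
        n: relu_dot(row, x)
        for n, row in neuron_rows.items()
        if n in predicted_active
    }


def hybrid_layer(gpu_neurons, cpu_neurons, predicted_active, x, num_neurons):
    """Run both executors concurrently and merge their partial outputs."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        gpu_future = pool.submit(run_executor, gpu_neurons, predicted_active, x)
        cpu_future = pool.submit(run_executor, cpu_neurons, predicted_active, x)
        partial = {**gpu_future.result(), **cpu_future.result()}
    out = [0.0] * num_neurons  # skipped neurons contribute zero
    for n, value in partial.items():
        out[n] = value
    return out


gpu_neurons = {0: [1.0, 0.0], 1: [0.0, 1.0]}    # hot neurons
cpu_neurons = {2: [1.0, 1.0], 3: [-1.0, -1.0]}  # cold neurons
print(hybrid_layer(gpu_neurons, cpu_neurons, {0, 2}, [2.0, 3.0], num_neurons=4))
```

In this toy version the merge happens in host memory; in the design described above, the CPU results are transferred to the GPU for integration.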

Adaptive Sparsity Predictors

The main idea behind reducing computational load in the PowerInfer online inference engine is that it only processes neurons it predicts will be activated. Traditionally, within each Transformer layer, a framework uses two different predictors to predict neuron activation in the MLP and self-attention blocks, so that inference computation is restricted to the neurons predicted to be active. However, it is difficult to design effective predictors for local deployment because limited resources make it hard to balance predictor size against prediction accuracy. Since these predictors are invoked frequently to predict active neurons, they need to be stored in GPU memory for fast access. However, frameworks typically deploy a large number of predictors that occupy considerable memory, including memory that is needed to store LLM parameters.

Furthermore, the size of a predictor is generally determined by two factors: the internal skewness and the sparsity of LLM layers.

To optimize for these factors, the PowerInfer framework uses an iterative training method for each predictor in a Transformer layer, rather than a fixed size. In the first step of this method, the baseline predictor size is established from the sparsity profile of the layer; the size is then adjusted iteratively, taking internal activation skewness into account, to maintain accuracy.
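The iterative sizing idea can be sketched as a simple search loop. This is only a guess at the general shape of such a procedure, not PowerInfer's training code: the function `fit_predictor_size`, the baseline formula, the halving and doubling steps, and the `evaluate` callback (which would train a predictor of the given hidden size and return its validation accuracy) are all hypothetical. The effect of activation skewness is folded into `evaluate`, on the assumption that a highly skewed layer reaches the accuracy target with a smaller predictor.

```python
def fit_predictor_size(sparsity, evaluate, target_accuracy=0.95,
                       min_hidden=64, max_hidden=4096):
    """Pick a predictor hidden size for one layer.

    sparsity: fraction of neurons that are inactive in this layer (0..1).
    evaluate: hypothetical callback mapping a hidden size to accuracy.
    """
    # Baseline from the sparsity profile: sparser layers start smaller.
    hidden = int(max_hidden * (1.0 - sparsity))
    hidden = min(max_hidden, max(min_hidden, hidden))

    if evaluate(hidden) >= target_accuracy:
        # Shrink while a smaller predictor still meets the target.
        while hidden // 2 >= min_hidden and evaluate(hidden // 2) >= target_accuracy:
            hidden //= 2
    else:
        # Grow until the target is met or the size cap is reached.
        while hidden < max_hidden and evaluate(hidden) < target_accuracy:
            hidden = min(max_hidden, hidden * 2)
    return hidden


def toy_evaluate(hidden):
    """Stand-in for training: accurate enough once hidden size reaches 256."""
    return 0.99 if hidden >= 256 else 0.90


print(fit_predictor_size(0.75, toy_evaluate))
```

A real implementation would retrain the predictor at every candidate size, so the number of `evaluate` calls is the main cost to keep in mind.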

Neuron Placement and Management

As mentioned earlier, once the offline policy solver has determined the neuron placement policy, the online inference engine loads the model into GPU and CPU memory according to that policy. For each layer, which may have multiple weight matrices, the PowerInfer framework assigns each neuron to either the GPU or the CPU depending on whether the neuron is hot-activated. Ensuring that these segmented neurons are computed in the correct sequence is essential for accurate results. To handle this, the PowerInfer framework generates two neuron tables, one located in GPU memory and one in CPU memory, with each table mapping individual neurons to their original positions in the matrix.
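A small Python sketch may help show why the neuron tables matter. The data layout here is an assumption (the names `build_neuron_tables` and `merge_outputs` are hypothetical): each table is a list whose position is a neuron's local index on its device and whose value is the neuron's original index in the full matrix, so partial results can be scattered back into the right order.

```python
def build_neuron_tables(placement):
    """Build per-device tables from a placement list.

    placement[i] is "gpu" or "cpu" for original neuron i. Each returned
    table maps a device-local index to the original neuron index.
    """
    gpu_table = [i for i, dev in enumerate(placement) if dev == "gpu"]
    cpu_table = [i for i, dev in enumerate(placement) if dev == "cpu"]
    return gpu_table, cpu_table


def merge_outputs(gpu_out, cpu_out, gpu_table, cpu_table):
    """Scatter per-device results back to their original positions."""
    merged = [0.0] * (len(gpu_table) + len(cpu_table))
    for local, original in enumerate(gpu_table):
        merged[original] = gpu_out[local]
    for local, original in enumerate(cpu_table):
        merged[original] = cpu_out[local]
    return merged


gpu_table, cpu_table = build_neuron_tables(["gpu", "cpu", "gpu", "cpu"])
print(gpu_table, cpu_table)
print(merge_outputs([10.0, 30.0], [20.0, 40.0], gpu_table, cpu_table))
```

Without such a mapping, the GPU and CPU results would be contiguous per device and the layer output would come out in the wrong order.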

Neuron-Aware Operator

Given the activation sparsity observed in large language models, inactive neurons and their weights can be bypassed in matrix multiplication, which creates a need for sparse operators. Instead of using conventional sparse operators, which have several limitations, the PowerInfer framework employs neuron-aware operators that compute activated neurons and their weights directly on the GPU and CPU without requiring conversion to a dense format at runtime. Neuron-aware operators differ from traditional sparse operators in that they focus on individual row and column vectors within a single matrix rather than on the entire matrix.
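The row-and-column view can be illustrated with a toy two-matrix MLP block in plain Python. This is a sketch under assumptions, not PowerInfer's kernels: it assumes a ReLU activation, treats neuron `i` as row `i` of the first weight matrix and column `i` of the second, and uses hypothetical names (`neuron_aware_mlp`, `dense_mlp`). The point is that visiting only the active neurons gives the same result as the dense computation whenever the skipped neurons would have produced zero.

```python
def neuron_aware_mlp(w1, w2, x, active):
    """Compute w2 @ relu(w1 @ x) by visiting only the active neurons.

    Neuron i owns row i of w1 and column i of w2, so no sparse matrix
    format is needed: the operator just indexes rows and columns.
    """
    out = [0.0] * len(w2)
    for i in active:
        h_i = max(0.0, sum(w * v for w, v in zip(w1[i], x)))  # row i of w1
        if h_i == 0.0:
            continue
        for r in range(len(w2)):
            out[r] += h_i * w2[r][i]  # column i of w2
    return out


def dense_mlp(w1, w2, x):
    """Reference dense computation over every neuron."""
    h = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in w1]
    return [sum(w * hv for w, hv in zip(row, h)) for row in w2]


w1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # three neurons, two inputs
w2 = [[1.0, 1.0, 1.0], [2.0, 0.0, 5.0]]      # two outputs, three neurons
x = [2.0, 3.0]
print(neuron_aware_mlp(w1, w2, x, active=[0, 1]), dense_mlp(w1, w2, x))
```

Neuron 2 has a negative pre-activation for this input, so ReLU zeroes it and skipping it changes nothing in the output.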

Neuron Placement Policy

To exploit the computational capabilities of CPUs and GPUs, the offline component of the PowerInfer framework generates a placement policy that guides the framework when allocating neurons to either the CPU or the GPU. The policy solver generates this policy and controls neuron placement within each layer, which determines the computational workload of each processing unit. When generating the placement policy, the policy solver considers several factors, including the activation frequency of each neuron, the communication overhead, and the capabilities of each processing unit, such as bandwidth and memory size.
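As a heavily simplified illustration of the placement problem, the sketch below treats it as a 0/1 knapsack: choose the neurons to put on the GPU so that their total impact is maximal while their total size fits a GPU memory budget. This is a stand-in, not the solver described above. It deliberately ignores communication overhead, bandwidth, and per-layer workload balance, and the function name `place_neurons`, the integer size units, and the example numbers are all assumptions.

```python
def place_neurons(impacts, sizes, gpu_budget):
    """Choose neurons for the GPU by 0/1 knapsack dynamic programming.

    impacts[i]: impact metric of neuron i (for example, activation frequency).
    sizes[i]:   memory cost of neuron i in integer units.
    gpu_budget: GPU memory available, in the same units.
    Returns the sorted indices of neurons placed on the GPU.
    """
    n = len(impacts)
    # best[i][cap] = best total impact using the first i neurons within cap.
    best = [[0] * (gpu_budget + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        size, impact = sizes[i - 1], impacts[i - 1]
        for cap in range(gpu_budget + 1):
            best[i][cap] = best[i - 1][cap]
            if size <= cap and best[i - 1][cap - size] + impact > best[i][cap]:
                best[i][cap] = best[i - 1][cap - size] + impact

    # Walk back through the table to recover which neurons were chosen.
    on_gpu, cap = [], gpu_budget
    for i in range(n, 0, -1):
        if best[i][cap] != best[i - 1][cap]:
            on_gpu.append(i - 1)
            cap -= sizes[i - 1]
    return sorted(on_gpu)


# Four neurons, GPU budget of four memory units.
print(place_neurons(impacts=[90, 50, 45, 10], sizes=[3, 2, 2, 1], gpu_budget=4))
```

Neurons not returned by the function would stay on the CPU. A real integer linear program would add the remaining constraints as extra terms rather than relying on a single memory budget.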

Results and Implementation

To demonstrate the generalization capabilities of the PowerInfer framework across devices with different hardware configurations, the experiments are conducted on two distinct personal computers: one equipped with an Intel i9-13900K processor, an NVIDIA RTX 4090 GPU, and 192 GB of host memory, and the other with an Intel i7-12700K processor, an NVIDIA RTX 2080Ti GPU, and 64 GB of host memory.

The end-to-end performance of the PowerInfer framework is compared against llama.cpp with a batch size of 1 and default deployment settings. Prompts are sampled from the ChatGPT and Alpaca datasets, given the length variability observed in real-world dialogue input and output. The following figure shows the generation speeds for different models.

As can be observed, the PowerInfer framework generates an average of 8.32 tokens per second and reaches up to 16 tokens per second, outperforming llama.cpp by a significant margin. Furthermore, as the number of output tokens increases, the performance of the PowerInfer framework also improves, because the generation phase then accounts for a larger share of the overall inference time.

Furthermore, as can be observed in the above image, the PowerInfer framework outperforms llama.cpp on low-end PCs, with a peak generation rate of 7 tokens per second and an average generation speed of 5 tokens per second.

The above image shows the distribution of neuron loads between the GPU and CPU for the two frameworks. As can be seen, the PowerInfer framework significantly increases the GPU’s share of the neuron load, from 20 to 70 percent.

The above image compares the performance of the two frameworks on two PCs with different specifications. As can be seen, the PowerInfer framework consistently delivers a higher output token generation speed than llama.cpp.

Final Thoughts

In this article, we have discussed PowerInfer, a high-speed LLM inference engine for standard computers powered by a single consumer-grade GPU. At its core, the PowerInfer framework exploits the high locality inherent in LLM inference, which is characterized by a power-law distribution of neuron activations. PowerInfer is a fast inference system for large language models that uses adaptive predictors and neuron-aware operators to exploit neuron activation patterns and computational sparsity.