Hi, folks, and welcome to TechCrunch’s inaugural AI newsletter. It’s truly a thrill to type these words. This one’s been long in the making, and we’re excited to finally share it with you.

With the launch of TC’s AI newsletter, we’re sunsetting This Week in AI, the semiregular column previously known as Perceptron. But you’ll find all the analysis we brought to This Week in AI and more, including a spotlight on noteworthy new AI models, right here.

This week in AI, trouble’s brewing (again) for OpenAI.

A group of former OpenAI employees spoke with The New York Times’ Kevin Roose about what they perceive as egregious safety failings within the organization. They, like others who’ve left OpenAI in recent months, claim that the company isn’t doing enough to prevent its AI systems from becoming potentially dangerous, and they accuse OpenAI of employing hardball tactics to try to prevent workers from sounding the alarm.

The group published an open letter on Tuesday calling for leading AI companies, including OpenAI, to establish greater transparency and more protections for whistleblowers. “So long as there is no effective government oversight of these corporations, current and former employees are among the few people who can hold them accountable to the public,” the letter reads.

Call me pessimistic, but I expect the ex-staffers’ calls will fall on deaf ears. It’s tough to imagine a scenario in which AI companies not only agree to “support a culture of open criticism,” as the undersigned recommend, but also opt not to enforce nondisparagement clauses or retaliate against current staff who choose to speak out.

Consider that OpenAI’s safety committee, which the company recently created in response to initial criticism of its safety practices, is staffed entirely with company insiders, including CEO Sam Altman. And consider that Altman, who at one point claimed to have no knowledge of OpenAI’s restrictive nondisparagement agreements, himself signed the incorporation documents establishing them.

Sure, things at OpenAI could turn around tomorrow, but I’m not holding my breath. And even if they did, it’d be tough to trust it.

News

AI apocalypse: OpenAI’s AI-powered chatbot platform, ChatGPT, along with Anthropic’s Claude, Google’s Gemini and Perplexity, all went down this morning at roughly the same time. All the services have since been restored, but the cause of their downtime remains unclear.

OpenAI exploring fusion: OpenAI is in talks with fusion startup Helion Energy about a deal in which the AI company would buy vast quantities of electricity from Helion to power its data centers, according to The Wall Street Journal. Altman has a $375 million stake in Helion and sits on the company’s board of directors, but he has reportedly recused himself from the deal talks.

The cost of training data: TechCrunch takes a look at the pricey data licensing deals that are becoming commonplace in the AI industry, deals that threaten to make AI research untenable for smaller organizations and academic institutions.

Hateful music generators: Malicious actors are abusing AI-powered music generators to create homophobic, racist and propagandistic songs, and are publishing guides instructing others how to do so as well.

Cash for Cohere: Reuters reports that Cohere, an enterprise-focused generative AI startup, has raised $450 million from Nvidia, Salesforce Ventures, Cisco and others in a new tranche that values Cohere at $5 billion. Sources familiar with the matter tell TechCrunch that Oracle and Thomvest Ventures, both returning investors, also participated in the round, which was left open.

Research paper of the week

In a 2023 research paper titled “Let’s Verify Step by Step” that OpenAI recently highlighted on its official blog, scientists at OpenAI claimed to have fine-tuned the startup’s general-purpose generative AI model, GPT-4, to achieve better-than-expected performance in solving math problems. The approach could lead to generative models less prone to going off the rails, the co-authors of the paper say, but they point out several caveats.

In the paper, the co-authors detail how they trained reward models to detect hallucinations, or instances where GPT-4 got its facts and/or answers to math problems wrong. (Reward models are specialized models that evaluate the outputs of AI models, in this case math-related outputs from GPT-4.) The reward models “rewarded” GPT-4 each time it got a step of a math problem right, an approach the researchers refer to as “process supervision.”

The researchers say that process supervision improved GPT-4’s accuracy on math problems compared to previous techniques of “rewarding” models, at least in their benchmark tests. They admit it’s not perfect, however; GPT-4 still got problem steps wrong. And it’s unclear how the form of process supervision the researchers explored might generalize beyond the math domain.
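For readers curious how step-level rewards differ from grading only the final answer, here is a minimal Python sketch. It is not OpenAI’s code. The per-step probabilities are invented numbers standing in for a reward model’s output, and both the scoring rule (treat a solution’s score as the chance that every step is correct, i.e. the product of its step scores, assuming independent steps) and the best-of-N selection over candidate solutions are assumptions made for illustration.

```python
from math import prod
from typing import Callable, Dict, Sequence

# Hypothetical per-step correctness probabilities, as a step-level
# reward model might assign them. These numbers are invented.
candidates = [
    {"name": "A", "steps": [0.95, 0.20, 0.99]},  # one dubious middle step
    {"name": "B", "steps": [0.90, 0.85, 0.90]},  # consistently decent steps
]


def process_score(step_probs: Sequence[float]) -> float:
    """Process-style scoring (assumed aggregation): probability that
    every step is correct, treating the steps as independent."""
    return prod(step_probs)


def outcome_score(step_probs: Sequence[float]) -> float:
    """Outcome-only scoring: look at confidence in the final answer only."""
    return step_probs[-1]


def best_of_n(
    cands: Sequence[Dict], scorer: Callable[[Sequence[float]], float]
) -> Dict:
    """Return the candidate solution the scorer rates highest."""
    return max(cands, key=lambda c: scorer(c["steps"]))


if __name__ == "__main__":
    print(best_of_n(candidates, process_score)["name"])  # B
    print(best_of_n(candidates, outcome_score)["name"])  # A
```

Under step-level scoring, solution A’s shaky middle step drags its score down to about 0.19, so B (about 0.69) wins; a scorer that only checks confidence in the final answer picks A instead, even though A likely reached its answer through a bad step.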

Model of the week

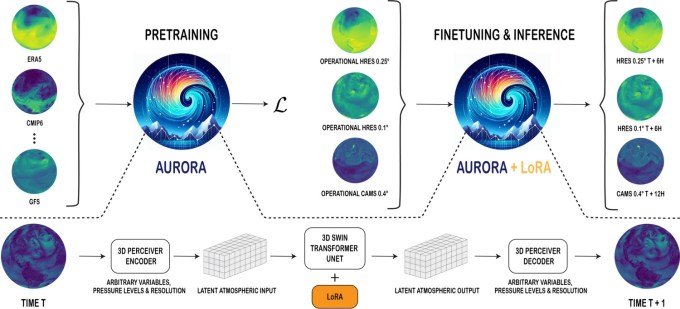

Forecasting the weather may not feel like a science (at least when you get rained on, like I just did), but that’s because it’s all about probabilities, not certainties. And what better way to calculate probabilities than a probabilistic model? We’ve already seen AI put to work on weather prediction at time scales from hours to centuries, and now Microsoft is getting in on the fun. The company’s new Aurora model moves the ball forward in this fast-evolving corner of the AI world, providing globe-level predictions at ~0.1° resolution (think on the order of 10 km square).

Trained on over a million hours of weather and climate simulations (not real weather? Hmm…) and fine-tuned on a variety of tasks, Aurora outperforms traditional numerical prediction systems by several orders of magnitude. More impressively, it beats Google DeepMind’s GraphCast at its own game (though Microsoft picked the field), providing more accurate guesses of weather conditions on the one- to five-day scale.
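To make Aurora’s ~0.1° resolution concrete, here is a back-of-the-envelope Python sketch. It assumes a spherical Earth with a mean radius of 6,371 km and a regular latitude/longitude grid; neither detail comes from Microsoft’s description of Aurora, and the function name is hypothetical.

```python
import math
from typing import Tuple

# Mean Earth radius; assumes a spherical Earth for simplicity.
EARTH_RADIUS_KM = 6371.0


def grid_cell_km(resolution_deg: float, latitude_deg: float) -> Tuple[float, float]:
    """Approximate (north-south, east-west) size in km of one cell of a
    regular lat/lon grid at the given latitude."""
    km_per_degree = 2 * math.pi * EARTH_RADIUS_KM / 360  # about 111.2 km
    north_south = resolution_deg * km_per_degree
    # Meridians converge toward the poles, so east-west spacing shrinks
    # with the cosine of latitude.
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


if __name__ == "__main__":
    for lat in (0, 45, 60):
        ns, ew = grid_cell_km(0.1, lat)
        print(f"lat {lat:>2}°: {ns:.1f} km N-S x {ew:.1f} km E-W")
```

At the equator a 0.1° cell works out to roughly 11 km on each side, and at 60° latitude its east-west width is about half that, which is why “on the order of 10 km” is the right level of precision.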

Companies like Google and Microsoft have a horse in the race, of course, both vying for your online attention by trying to offer the most personalized web and search experience. Accurate, efficient first-party weather forecasts are going to be an important part of that, at least until we stop going outside.

Grab bag

In a thought piece last month in Palladium, Avital Balwit, chief of staff at AI startup Anthropic, posits that the next three years might be the last she and many knowledge workers have to work, thanks to generative AI’s rapid advancements. This should come as a comfort rather than a reason to fear, she says, because it could “[lead to] a world where people have their material needs met but also have no need to work.”

“A renowned AI researcher once told me that he is practicing for [this inflection point] by taking up activities that he is not particularly good at: jiu-jitsu, surfing, and so on, and savoring the doing even without excellence,” Balwit writes. “This is how we can prepare for our future where we will have to do things from joy rather than need, where we will no longer be the best at them, but will still have to choose how to fill our days.”

That’s certainly the glass-half-full view, but one I can’t say I share.

Should generative AI replace most knowledge workers within three years (which seems unrealistic to me given AI’s many unsolved technical problems), economic collapse could well ensue. Knowledge workers make up large portions of the workforce and tend to be high earners, and thus big spenders. They drive the wheels of capitalism forward.

Balwit makes references to universal basic income and other large-scale social safety net programs. But I don’t have a lot of faith that countries like the U.S., which can’t even manage basic federal-level AI legislation, will adopt universal basic income schemes anytime soon.

With any luck, I’m wrong.