The economic potential of AI is uncontested, but it remains largely unrealized by organizations, with an astounding 87% of AI projects failing.

Some consider this a technology problem, others a business problem, a culture problem or an industry problem, but the latest evidence shows that it is a trust problem.

According to recent research, nearly two-thirds of C-suite executives say that trust in AI drives revenue, competitiveness and customer success.

Trust has been a complicated word to unpack when it comes to AI. Can you trust an AI system? If so, how? We don’t trust humans immediately, and we’re even less likely to trust AI systems immediately.

But a lack of trust in AI is holding back its economic potential, and many of the recommendations for building trust in AI systems have been criticized as too abstract or far-reaching to be practical.

It’s time for a new “AI Trust Equation” focused on practical application.

The AI trust equation

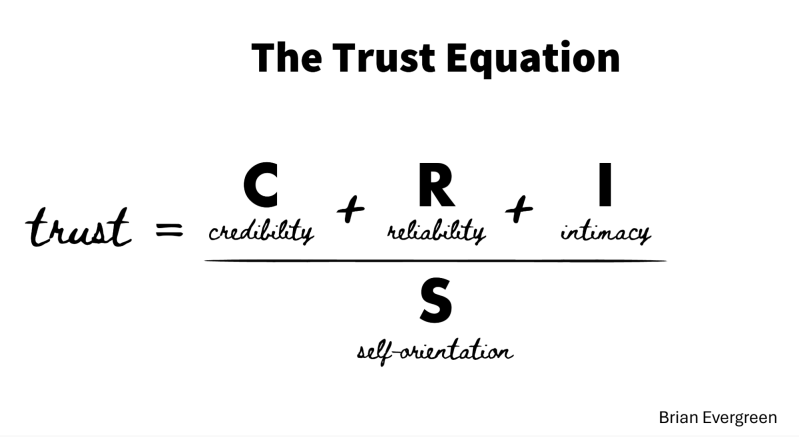

The Trust Equation, a concept for building trust between people, was first proposed in The Trusted Advisor by David Maister, Charles Green and Robert Galford. The equation is Trust = (Credibility + Reliability + Intimacy) divided by Self-Orientation.

It’s clear at first glance why this is an ideal equation for building trust between humans, but it doesn’t translate to building trust between humans and machines.

For building trust between humans and machines, the new AI Trust Equation is Trust = (Security + Ethics + Accuracy) divided by Control.
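Written as fractions, the two equations look like this. The first comes from The Trusted Advisor and the second is the AI Trust Equation proposed here. Both are conceptual relationships, not formulas meant to be computed with measured numbers:

```latex
\begin{aligned}
\text{Trust} &= \frac{\text{Credibility} + \text{Reliability} + \text{Intimacy}}{\text{Self-Orientation}} \\[4pt]
\text{AI Trust} &= \frac{\text{Security} + \text{Ethics} + \text{Accuracy}}{\text{Control}}
\end{aligned}
```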

Security is the first step on the path to trust, and it is made up of several key tenets that are well defined elsewhere. For the purpose of building trust between humans and machines, it comes down to the question: “Will my information be secure if I share it with this AI system?”

Ethics is more complicated than security because it is a moral question rather than a technical one. Before investing in an AI system, leaders need to consider:

- How were people treated in the making of this model, such as the Kenyan workers involved in the making of ChatGPT? Is that something I/we feel comfortable supporting by building our solutions on it?

- Is the model explainable? If it produces a harmful output, can I understand why? And is there anything I can do about it (see Control)?

- Are there implicit or explicit biases in the model? This is a thoroughly documented problem, as shown by Joy Buolamwini and Timnit Gebru’s Gender Shades research and by Google’s recent attempt to eliminate bias in its models, which instead produced ahistorical biases.

- What is the business model for this AI system? Are the people whose information and life’s work trained the model being compensated when the model built on their work generates revenue?

- What are the stated values of the company that created this AI system, and how well do the actions of the company and its leadership track to those values? OpenAI’s recent choice to imitate Scarlett Johansson’s voice without her consent, for example, shows a significant gap between OpenAI’s stated values and Sam Altman’s decision to disregard Johansson’s refusal to let her voice be used for ChatGPT.

Accuracy can be defined as how reliably the AI system provides a correct answer to a range of questions within the flow of work. This can be simplified to: “When I ask this AI a question based on my context, how useful is its answer?” The answer is directly tied to 1) the sophistication of the model and 2) the data on which it has been trained.

Control is at the heart of the conversation about trusting AI. It ranges from the most tactical question, “Will this AI system do what I want it to do, or will it make a mistake?”, to one of the most pressing questions of our time: “Will we ever lose control over intelligent systems?” In both cases, the ability to control the actions, decisions and output of AI systems underpins the notion of trusting and implementing them.

Five steps to using the AI trust equation

- Determine whether the system is useful: Before investing time and resources in investigating whether an AI platform is trustworthy, organizations would benefit from first determining whether the platform actually helps them create more value.

- Investigate whether the platform is secure: What happens to your data if you load it into the platform? Does any information leave your firewall? Working closely with your security team or hiring security advisors is critical to ensuring you can rely on the security of an AI system.

- Set your ethical threshold and evaluate all systems and organizations against it: If any models you invest in must be explainable, establish a precise, common, empirical definition of explainability across your organization, with upper and lower tolerable limits, and measure proposed systems against those limits. Do the same for every ethical principle your organization considers non-negotiable when it comes to leveraging AI.

- Define your accuracy targets and don’t deviate: It can be tempting to adopt a system that doesn’t perform well because it’s only a precursor to human work. But if it’s performing below an accuracy target you’ve defined as acceptable for your organization, you run the risk of low-quality work output and a heavier load on your people. More often than not, low accuracy is a model problem or a data problem, and both can be addressed with the right level of investment and focus.

- Decide what degree of control your organization needs and how it’s defined: How much control you want decision-makers and operators to have over AI systems will determine whether you want a fully autonomous system, a semi-autonomous system or an AI-powered tool. It will also show whether your organization’s tolerance for sharing control with AI systems sets a higher bar than any current AI system may be able to reach.
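One way to make the five steps concrete is to record them as an explicit checklist that every candidate system must pass. The Python sketch that follows is a hypothetical illustration and is not a method described in this article. The `Candidate` and `Policy` classes, the `evaluate` function, the `Autonomy` levels, the numeric scores and every threshold are assumptions. In practice, each value would come from your own security reviews, ethics criteria and accuracy testing.

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    # Hypothetical ordering of the control options from step 5,
    # from least to most autonomy handed to the AI system.
    AI_POWERED = 1
    SEMI_AUTONOMOUS = 2
    FULLY_AUTONOMOUS = 3


@dataclass
class Candidate:
    """Hypothetical assessment of one AI system, filled in by your teams."""
    name: str
    useful: bool                     # step 1: does it help create value?
    data_stays_in_firewall: bool     # step 2: outcome of the security review
    ethics_scores: dict[str, float]  # step 3: principle -> measured score
    accuracy: float                  # step 4: measured on your own test set
    autonomy: Autonomy               # step 5: how much control it takes


@dataclass
class Policy:
    """Hypothetical organizational thresholds; all numbers are assumptions."""
    ethics_limits: dict[str, tuple[float, float]]  # principle -> (lower, upper)
    accuracy_target: float
    max_autonomy: Autonomy


def evaluate(c: Candidate, p: Policy) -> list[str]:
    """Return the reasons a candidate fails; an empty list means it passes."""
    if not c.useful:
        # Step 1 short-circuits: no point vetting trust for a system with no value.
        return ["not useful"]
    failures = []
    if not c.data_stays_in_firewall:
        failures.append("security: data leaves the firewall")
    for principle, (low, high) in p.ethics_limits.items():
        score = c.ethics_scores.get(principle)
        # A missing score counts as a failure: non-negotiable principles must be measured.
        if score is None or not (low <= score <= high):
            failures.append(f"ethics: {principle} outside [{low}, {high}]")
    if c.accuracy < p.accuracy_target:
        failures.append(f"accuracy: {c.accuracy:.2f} < {p.accuracy_target:.2f}")
    if c.autonomy.value > p.max_autonomy.value:
        failures.append(f"control: {c.autonomy.name} exceeds {p.max_autonomy.name}")
    return failures


# Example usage with made-up values.
policy = Policy(
    ethics_limits={"explainability": (0.7, 1.0)},
    accuracy_target=0.9,
    max_autonomy=Autonomy.SEMI_AUTONOMOUS,
)
candidate = Candidate(
    name="vendor-x",
    useful=True,
    data_stays_in_firewall=True,
    ethics_scores={"explainability": 0.8},
    accuracy=0.85,
    autonomy=Autonomy.FULLY_AUTONOMOUS,
)
print(evaluate(candidate, policy))
# ['accuracy: 0.85 < 0.90', 'control: FULLY_AUTONOMOUS exceeds SEMI_AUTONOMOUS']
```

Returning a list of reasons, rather than a single pass/fail flag, keeps the outcome explainable to the sponsors who have to approve or reject a system.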

In the era of AI, it can be tempting to search for best practices or quick wins, but the truth is that no one has quite figured all of this out yet, and by the time they do, it won’t be a differentiator for you and your organization anymore.

So, rather than wait for the perfect solution or follow the trends set by others, take the lead. Assemble a team of champions and sponsors within your organization, tailor the AI Trust Equation to your specific needs, and start evaluating AI systems against it. The rewards of such an endeavor are not just economic but also foundational to the future of technology and its role in society.

Some technology companies see market forces moving in this direction and are working to develop the right commitments, control and visibility into how their AI systems work (Salesforce’s Einstein Trust Layer is one example), while others claim that any level of visibility would cede competitive advantage. You and your organization will need to decide what degree of trust you want to have, both in the output of AI systems and in the organizations that build and maintain them.

AI’s potential is immense, but it will only be realized when AI systems and the people who make them can earn and maintain trust within our organizations and society. The future of AI depends on it.

Brian Evergreen is the author of “Autonomous Transformation: Creating a More Human Future in the Era of Artificial Intelligence.”