Intersection over Union (IoU) is a key metric used in computer vision to assess the performance and accuracy of object detection algorithms. It quantifies the degree of overlap between two bounding boxes: one representing the "ground truth" (the actual location of an object) and the other representing the model's "prediction" for the same object. In other words, it measures how well a predicted object aligns with the actual object annotation. A higher IoU score implies a more accurate prediction.

In this article, you'll learn:

- What is Intersection over Union (IoU)?

- Key Mathematical Components

- How is IoU Calculated?

- Using IoU for Benchmarking Computer Vision Models

- Applications, Challenges, and Limitations of IoU

- Future Developments

What is Intersection over Union (IoU)?

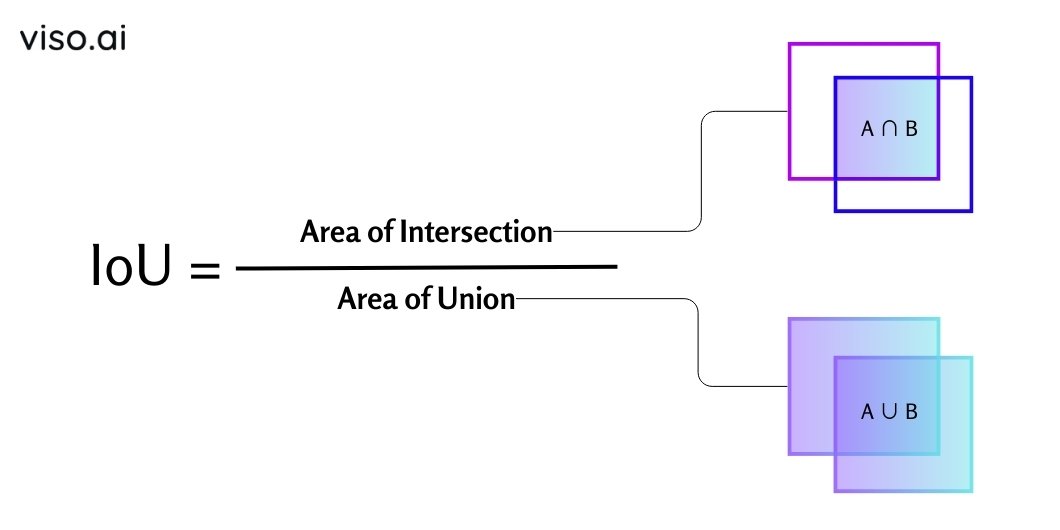

Intersection over Union (IoU), also known as the Jaccard index, is the ratio of the "area of intersection" to the "area of union" between the predicted and ground truth bounding boxes. It quantitatively measures how well a predicted bounding box aligns with the ground truth bounding box.
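Because IoU is the Jaccard index, the same ratio applies to any two finite sets, not only to boxes. The minimal Python sketch below illustrates this under the assumption that boxes have integer corner coordinates and are rasterized into sets of unit pixel cells; `jaccard` and `box_to_cells` are hypothetical helper names.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard index |A intersect B| / |A union B| of two finite sets."""
    union = a | b
    if not union:
        # Convention chosen here: two empty sets count as a perfect match.
        return 1.0
    return len(a & b) / len(union)


def box_to_cells(x1: int, y1: int, x2: int, y2: int) -> set:
    """Rasterize a box with integer corners into the set of unit cells it covers."""
    return {(x, y) for x in range(x1, x2) for y in range(y1, y2)}


ground_truth = box_to_cells(0, 0, 4, 4)  # 16 cells
prediction = box_to_cells(2, 0, 6, 4)    # 16 cells, 8 of them shared
print(jaccard(ground_truth, prediction))  # 8 shared cells out of 24 covered cells
```

For boxes with integer corners, counting cells this way gives the same value as computing areas geometrically, which is why the two names are used interchangeably.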

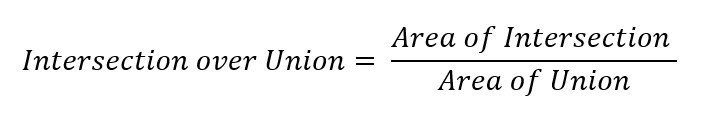

The IoU Formula

The mathematical representation is:

$$\text{IoU} = \frac{\text{Area of Intersection}}{\text{Area of Union}}$$

Where,

- Area of Intersection = Common area shared by the two bounding boxes (overlap)

- Area of Union = Total area covered by the two bounding boxes

This formula produces a value between 0 and 1, where 0 indicates no overlap and 1 indicates a perfect match between the predicted and ground truth bounding boxes.
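The formula maps directly onto a few lines of code. This is a sketch rather than a reference implementation: it assumes axis-aligned boxes given as `(x1, y1, x2, y2)`, the top-left and bottom-right corners in continuous image coordinates, and `box_iou` is an illustrative name, not a specific library's API.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def box_iou(box_a: Box, box_b: Box) -> float:
    """Return the IoU of two axis-aligned boxes, a value in [0, 1]."""
    # Corners of the overlapping region (inverted if the boxes are disjoint).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp negative widths/heights to zero so disjoint boxes get zero intersection.
    intersection = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


print(box_iou((0, 0, 10, 10), (0, 0, 10, 10)))    # identical boxes: 1.0
print(box_iou((0, 0, 10, 10), (20, 20, 30, 30)))  # no overlap: 0.0
print(box_iou((0, 0, 10, 10), (5, 0, 15, 10)))    # 50 / 150, about 0.333
```

Clamping the overlap width and height at zero is what keeps the result in the 0 to 1 range for disjoint boxes. Some evaluation code instead adds 1 to widths and heights to treat coordinates as inclusive pixel indices, which shifts results slightly for small boxes.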

Key Mathematical Components

To understand IoU, let's break down its key components:

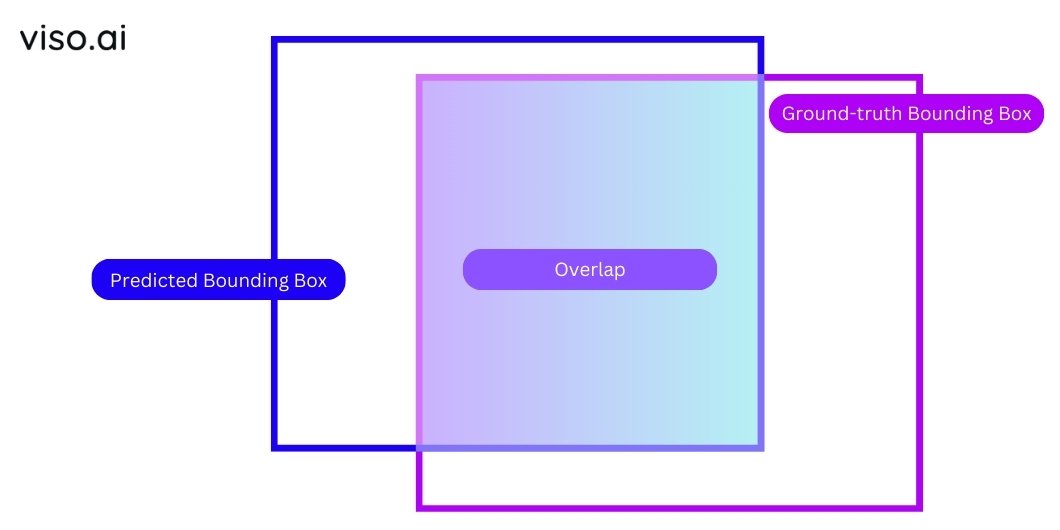

Ground Truth Bounding Box

A ground truth bounding box is a rectangular region that encloses an object of interest in an image. It defines the actual location and size of the object and serves as the reference point for evaluating the model's predictions.

Predicted Bounding Box

A predicted bounding box is a rectangular region that a computer vision model generates to detect and localize an object in an image. It represents the algorithm's estimate of the object's location and extent within the image. The degree of overlap between the predicted bounding box and the ground truth box determines the accuracy of the prediction.

Overlap

Overlap describes how much of the same region two bounding boxes share. A larger overlap indicates better localization and a more accurate prediction.

Precision and Recall Definitions

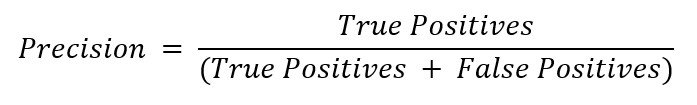

These two metrics evaluate how well a computer vision model performs on a detection task. Precision measures the accuracy of the predicted bounding boxes, whereas recall measures the model's ability to detect all instances of the object.

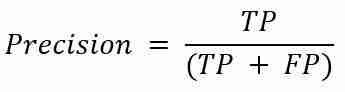

Precision measures what fraction of the model's detections are true positives (correct detections). It is the ratio of True Positives (TP) to the sum of True Positives and False Positives (FP):

$$\text{Precision} = \frac{TP}{TP + FP} = \frac{TP}{\text{all detections}}$$

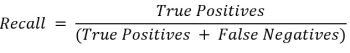

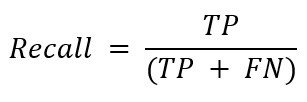

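Recall measures what fraction of the ground truth objects the model detected, so a low recall means many objects were missed. It is the ratio of True Positives to the sum of True Positives and False Negatives (FN):

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{TP}{\text{all ground truths}}$$

Where,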

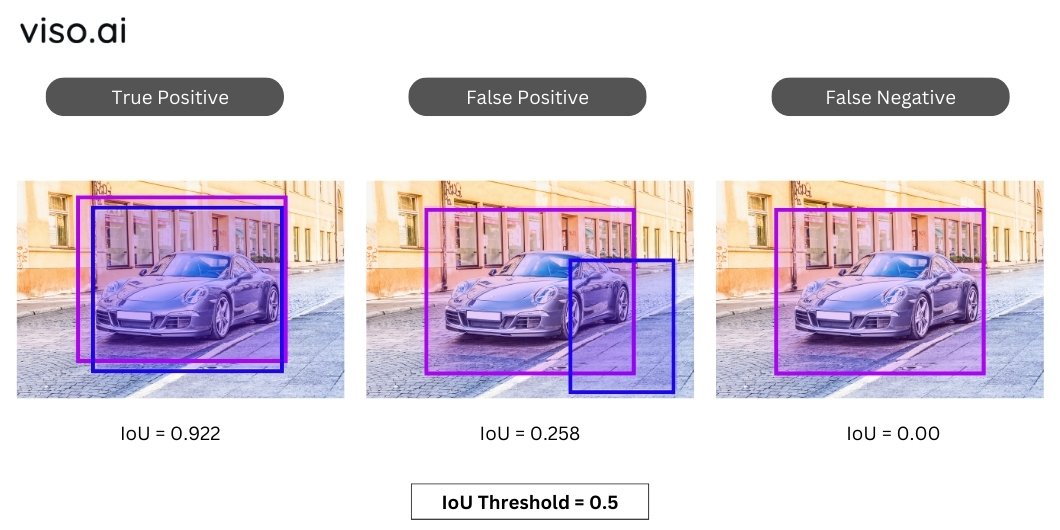

- True Positive (TP) is a predicted bounding box whose IoU with a ground truth box is high enough (usually at a threshold of 0.5 or higher).

- False Positive (FP) is a predicted bounding box that does not overlap significantly with any ground truth box, indicating the model incorrectly detected an object.

- False Negative (FN) is a ground truth box that the model missed entirely, meaning it failed to detect an existing object.
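Once the detections have been sorted into these three groups, both metrics reduce to simple arithmetic on the counts. A minimal sketch, assuming the TP, FP, and FN counts have already been obtained by IoU matching; the function name is hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple:
    """Compute (precision, recall) from detection counts."""
    # Precision: share of all detections (TP + FP) that are correct.
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    # Recall: share of all ground truth objects (TP + FN) that were detected.
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    return precision, recall


# Hypothetical counts: 8 correct detections, 2 spurious ones, 4 missed objects.
p, r = precision_recall(tp=8, fp=2, fn=4)
print(p, r)  # 8/10 and 8/12
```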

How is IoU Calculated?

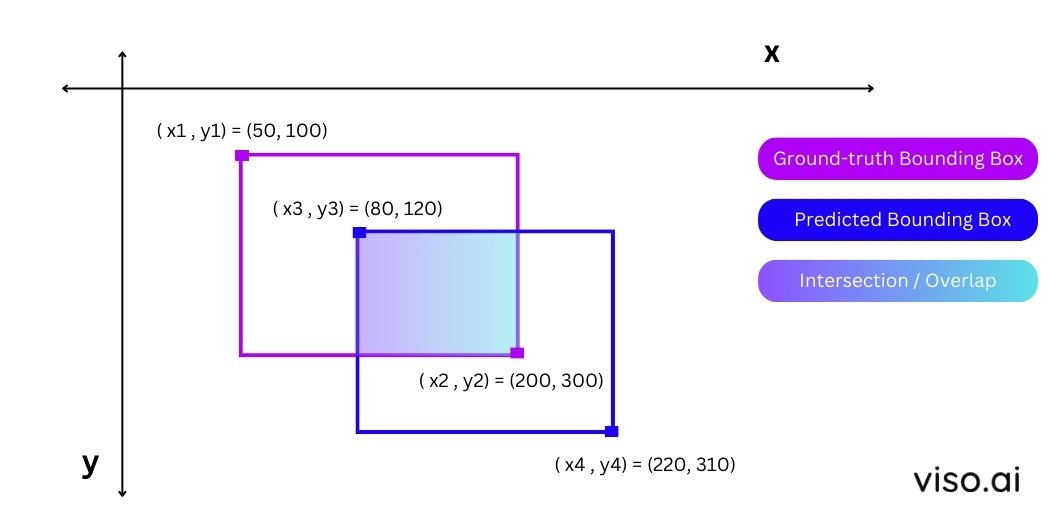

Consider the following example:

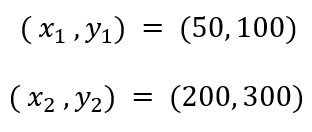

Ground Truth Bounding Box Coordinates:

Predicted Bounding Box Coordinates:

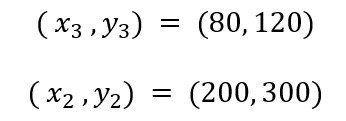

Coordinates of Intersection Area:

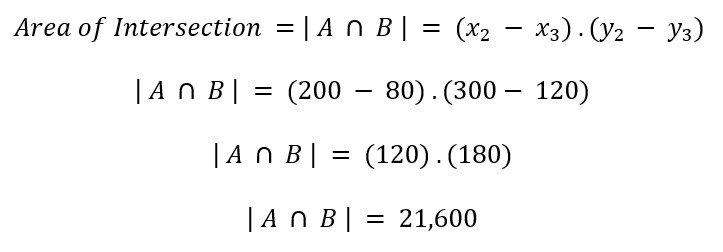

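Step 1: Calculate Area of Intersection

The area of intersection is the common area shared by the ground truth bounding box and the predicted bounding box. You can calculate it by finding the coordinates of the overlapping region's top-left and bottom-right corners: the top-left corner takes the larger of the two boxes' top-left x and y coordinates, and the bottom-right corner takes the smaller of their bottom-right x and y coordinates. The area is then the region's width multiplied by its height, or zero if the boxes do not overlap.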
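The corner rule can be sketched as follows. It assumes `(x1, y1, x2, y2)` boxes in image coordinates (y grows downward), and the boxes used are hypothetical, not the ones in the example above; `intersection_box` and `intersection_area` are illustrative names.

```python
def intersection_box(box_a, box_b):
    """Return the overlap rectangle (x1, y1, x2, y2) of two boxes, or None if they do not overlap."""
    # Top-left corner: the larger of the two top-left coordinates.
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    # Bottom-right corner: the smaller of the two bottom-right coordinates.
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    if x2 <= x1 or y2 <= y1:
        return None  # disjoint, or touching only along an edge
    return (x1, y1, x2, y2)


def intersection_area(box_a, box_b):
    """Width times height of the overlap rectangle, or 0 when there is none."""
    inter = intersection_box(box_a, box_b)
    if inter is None:
        return 0
    return (inter[2] - inter[0]) * (inter[3] - inter[1])


gt = (10, 10, 50, 50)    # hypothetical ground truth box
pred = (30, 20, 70, 60)  # hypothetical predicted box
print(intersection_box(gt, pred))   # (30, 20, 50, 50)
print(intersection_area(gt, pred))  # 20 * 30 = 600
```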

Step 2: Calculate Area of Union

The area of union is the total area covered by the ground truth bounding box and the predicted bounding box. To find it, add the areas of both bounding boxes and then subtract the area of intersection, which would otherwise be counted twice.
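A short sketch of this step, using two hypothetical `(x1, y1, x2, y2)` boxes whose intersection area (600) has already been computed; `box_area` and `union_area` are illustrative names, not a library API.

```python
def box_area(box):
    """Area of an (x1, y1, x2, y2) box."""
    return (box[2] - box[0]) * (box[3] - box[1])


def union_area(box_a, box_b, intersection):
    """Area covered by either box, given their precomputed intersection area."""
    # Adding both areas counts the overlap twice, so subtract it once.
    return box_area(box_a) + box_area(box_b) - intersection


gt = (10, 10, 50, 50)    # hypothetical box, area 40 * 40 = 1600
pred = (30, 20, 70, 60)  # hypothetical box, area 40 * 40 = 1600
inter = 600              # their overlap (30, 20)-(50, 50) is 20 * 30
union = union_area(gt, pred, inter)
print(union)          # 1600 + 1600 - 600 = 2600
print(inter / union)  # IoU = 600 / 2600, about 0.23
```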

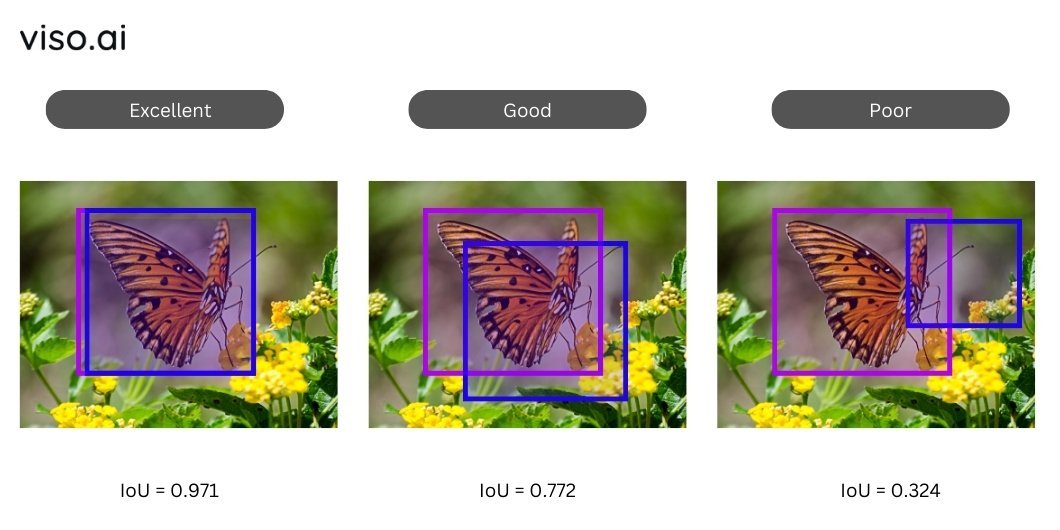

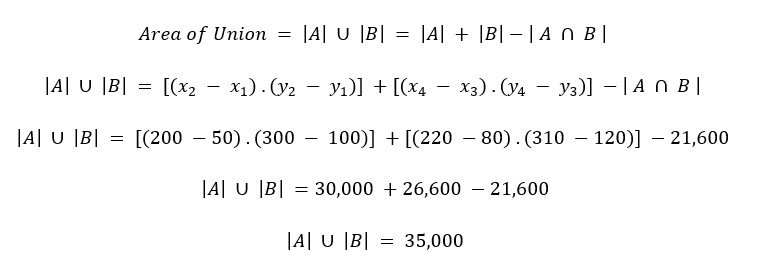

Step 3: Interpret IoU

We compute the IoU by dividing the area of intersection by the area of union. A higher IoU value indicates a more accurate prediction, while a lower value suggests poor alignment between the predicted and ground truth bounding boxes.

The model's IoU for the example under consideration is 0.618, which indicates a moderate overlap between the predicted and actual bounding boxes: above the commonly used 0.5 acceptance level but below the 0.7 level usually considered good.

Acceptable IoU values are typically above 0.5, while good IoU values are above 0.7.

However, these thresholds may vary depending on the application and task.

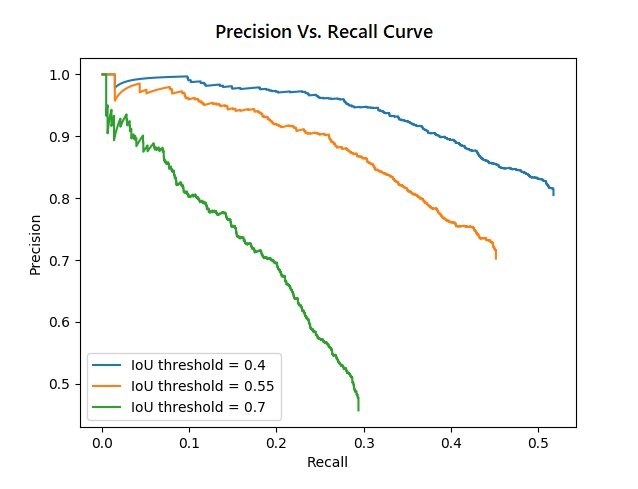

Step 4: Adjust Thresholds for Precision and Recall

The IoU threshold acts as a gatekeeper: a predicted bounding box is classified as a true positive if its IoU with a ground truth box meets the threshold, and as a false positive if it falls below it. The threshold therefore controls how strictly localization is judged. Raising it accepts only tightly aligned boxes, so fewer predictions count as true positives; the rejected predictions become false positives, and the objects they were meant to cover become false negatives. Lowering it accepts looser boxes as correct. For a fixed set of predictions, a stricter IoU threshold therefore tends to lower both measured precision and recall rather than trading one for the other; the usual precision-recall trade-off comes from the confidence threshold that decides which predictions are kept.

For example, to demand precise localization, set a higher IoU threshold for a positive detection, such as 0.8 or 0.9. Only predictions with a high degree of overlap with the ground truth then count as true positives, while predictions with a lower degree of overlap count as false positives. Every detection that passes is tightly localized, but fewer detections pass.

Conversely, to tolerate rough localization, set a lower IoU threshold, such as 0.3 or 0.4. Predictions that only partially overlap the ground truth then count as true positives, while those with little or no overlap remain false positives. More detections pass, at the cost of accepting loosely placed boxes.
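The effect of the IoU threshold can be seen on a toy example. The sketch below uses a simplified greedy matching: predictions are assumed to be sorted by confidence already, each one takes the best still-unmatched ground truth box, and each ground truth box can be matched at most once. This is an assumption for illustration, not an exact reimplementation of any benchmark's evaluation code; the boxes and function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate(predictions, ground_truths, iou_threshold):
    """Greedily match predictions to ground truths; return (TP, FP, FN, precision, recall)."""
    matched = set()  # indices of ground truth boxes already claimed
    tp = fp = 0
    for pred in predictions:
        # Find the best still-unmatched ground truth box for this prediction.
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            overlap = box_iou(pred, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            tp += 1
        else:
            fp += 1
    fn = len(ground_truths) - len(matched)
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truths else 0.0
    return tp, fp, fn, precision, recall


ground_truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [
    (0, 0, 10, 8),     # IoU 0.80 with the first box
    (22, 20, 32, 30),  # IoU about 0.67 with the second box
    (50, 50, 60, 60),  # overlaps nothing
]
for t in (0.5, 0.75, 0.9):
    print(t, evaluate(predictions, ground_truths, t))
```

On these boxes, moving the threshold from 0.5 to 0.75 to 0.9 yields 2, then 1, then 0 true positives. The predictions never change, only how strictly their localization is judged, so precision and recall both fall.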

Role of IoU in Benchmarking Computer Vision Models

IoU forms the backbone of numerous computer vision benchmarks, allowing researchers and developers to objectively compare the performance of different models on standardized datasets. This facilitates:

Objective Comparison: Enables researchers and developers to compare models quantitatively across different datasets and tasks.

Standardization: Provides a common metric for understanding and tracking progress in the field.

Performance Analysis: Offers insights into the strengths and weaknesses of different models, guiding further development.

Popular benchmarks like Pascal VOC, COCO, and Cityscapes build their primary metrics for evaluating model performance and accuracy on IoU. Let's discuss them briefly:

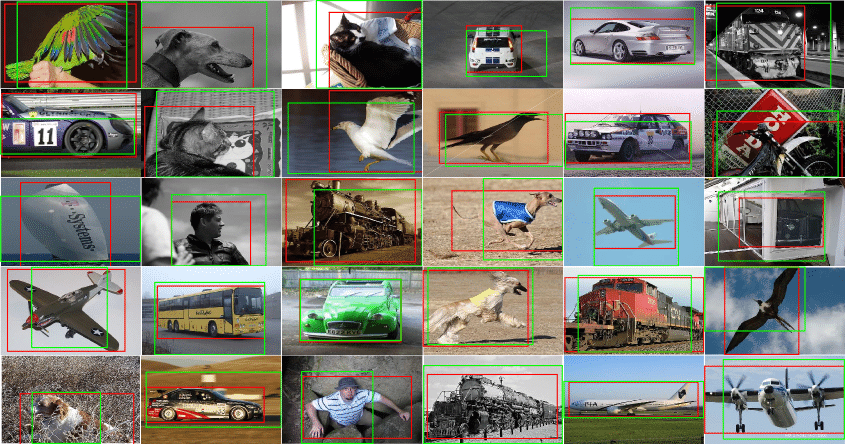

Pascal VOC

Pascal VOC (Visual Object Classes) is a widely used benchmark dataset for object detection and image classification. It consists of a large collection of images labeled with object annotations. In Pascal VOC, IoU is used to evaluate the accuracy of object detection models and rank them based on their performance.

The main metric used for evaluating models on Pascal VOC is mean average precision (mAP): the mean, over all object classes, of the average precision (AP), which summarizes the precision values at different recall levels. To calculate mAP, the IoU threshold is set to 0.5, meaning that only predictions with an IoU of at least 0.5 with the ground truth are considered positive detections.
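The idea of averaging precision over recall levels can be made concrete. The sketch below follows the 11-point interpolation used by the original VOC2007 evaluation (later VOC editions switched to an all-points variant). It assumes each detection has already been marked as a true or false positive using the 0.5 IoU threshold; `eleven_point_ap` and the example detections are hypothetical. mAP would then be the mean of this value over all object classes.

```python
def eleven_point_ap(scores, is_tp, num_ground_truths):
    """11-point interpolated average precision for one class (VOC2007-style sketch).

    scores: confidence of each detection.
    is_tp: whether each detection was judged a true positive (e.g. IoU >= 0.5).
    num_ground_truths: number of ground truth objects of this class (must be > 0).
    """
    # Rank detections by confidence, highest first.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    # Precision and recall after each detection in the ranked list.
    precisions, recalls = [], []
    tp = fp = 0
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truths)

    # Average the interpolated precision at recall levels 0.0, 0.1, ..., 1.0.
    total = 0.0
    for k in range(11):
        level = k / 10
        # Interpolated precision: the best precision at any recall >= level.
        total += max((p for p, r in zip(precisions, recalls) if r >= level), default=0.0)
    return total / 11


# Hypothetical detections for one class with 4 ground truth objects.
ap = eleven_point_ap([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_ground_truths=4)
print(round(ap, 3))  # 6.75 / 11, about 0.614
```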

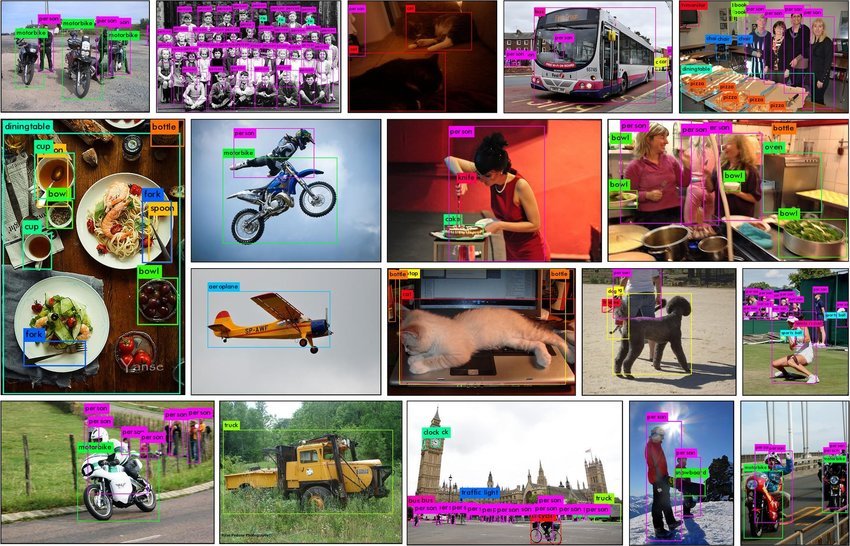

MS COCO

Microsoft's Common Objects in Context (COCO) dataset is renowned for its complexity and diverse set of object classes. IoU plays a central role in assessing the accuracy of the object detection and image segmentation algorithms competing in the COCO benchmark.

Cityscapes Dataset

Cityscapes focuses on the semantic understanding of urban street scenes. The benchmark centers on pixel-level semantic segmentation, where IoU measures the accuracy of pixel-wise predictions for different object categories. It aims to identify and segment objects within complex city environments, contributing to advances in autonomous driving and urban planning.
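For segmentation, IoU counts pixels rather than box areas: for each class, IoU = TP / (TP + FP + FN) over pixels, which is the same intersection-over-union ratio applied to the sets of pixels predicted and labeled as that class. A minimal pure-Python sketch, assuming label maps flattened into lists of integer class ids; the names and toy data are hypothetical, and the official Cityscapes evaluation scripts differ in details such as ignored labels.

```python
def per_class_iou(pred_labels, true_labels, num_classes):
    """Per-class IoU between two flattened label maps (one class id per pixel)."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, t in zip(pred_labels, true_labels):
        if p == t:
            tp[t] += 1
        else:
            fp[p] += 1  # pixel wrongly predicted as class p
            fn[t] += 1  # pixel of class t that was missed
    # IoU = TP / (TP + FP + FN) per class.
    # Classes absent from both maps get None instead of a score.
    return [tp[c] / (tp[c] + fp[c] + fn[c]) if tp[c] + fp[c] + fn[c] else None
            for c in range(num_classes)]


def mean_iou(ious):
    """Mean over the classes that actually received a score."""
    scored = [v for v in ious if v is not None]
    return sum(scored) / len(scored) if scored else 0.0


# Hypothetical 2x3 label maps flattened row by row (0 = road, 1 = car).
truth = [0, 0, 0, 0, 1, 1]
pred = [0, 0, 0, 1, 1, 0]
ious = per_class_iou(pred, truth, num_classes=2)
print(ious, mean_iou(ious))  # road 3/5, car 1/3
```

Averaging per class, rather than pooling all pixels, keeps a small class such as "car" from being swamped by a large one such as "road".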

Real-World Applications of IoU

IoU has a wide range of applications in computer vision beyond benchmarking. Here are some real-world scenarios where IoU plays a crucial role:

Object Detection and Localization

IoU is widely used in object detection tasks to measure the accuracy of bounding box predictions. It helps identify where the model excels and where improvements are needed, contributing to the refinement of detection algorithms.

Segmentation

In image segmentation, IoU is used to evaluate the accuracy of pixel-wise predictions. It quantifies the degree of overlap between predicted and ground truth segmentation masks, guiding the development of more precise segmentation algorithms.
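For a single object, mask IoU is the same ratio computed over pixels. A minimal sketch, assuming two same-sized binary masks stored as nested lists of 0/1 values; `mask_iou` and the toy masks are hypothetical, and scoring two empty masks as 0.0 is a convention chosen here.

```python
def mask_iou(pred_mask, true_mask):
    """IoU of two same-sized binary masks given as nested lists of 0/1 values."""
    intersection = union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1  # pixel in both masks
            if p or t:
                union += 1  # pixel in at least one mask
    # Convention chosen here: two empty masks score 0.0.
    return intersection / union if union else 0.0


true_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred_mask = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(mask_iou(pred_mask, true_mask))  # 3 shared pixels / 5 covered pixels
```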

Information Retrieval

IoU is valuable in information retrieval scenarios where the goal is to locate and extract relevant information from images. By assessing the alignment between predicted and actual information regions, IoU facilitates the optimization of retrieval algorithms.

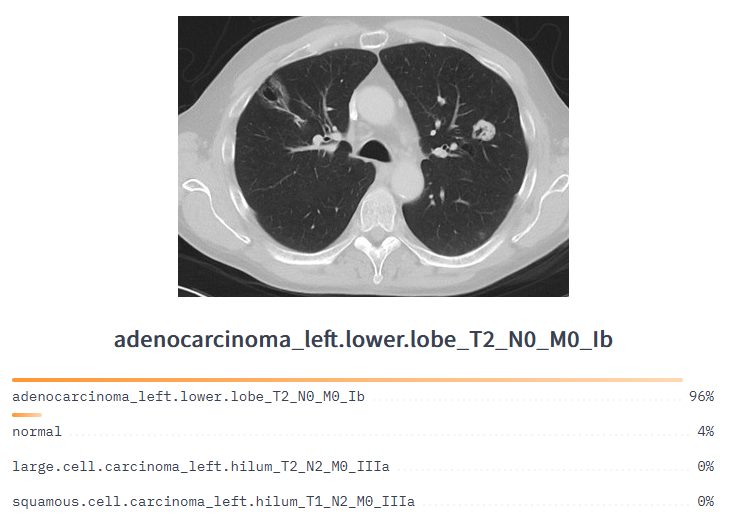

Medical Imaging

In medical imaging, accurate localization of structures such as tumors is crucial. IoU serves as a metric to evaluate the precision of segmentation algorithms, helping ensure reliable and precise identification of anatomical regions in medical images.

Robotics

IoU finds applications in robotics for tasks such as object manipulation and scene understanding. By assessing the accuracy of object localization, IoU contributes to the development of more robust and reliable robotic systems.

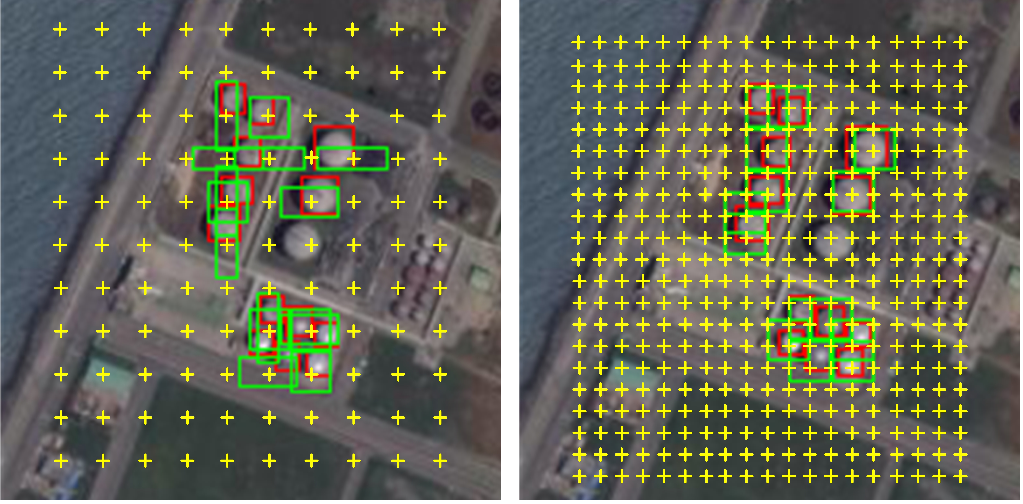

Remote Sensing

In remote sensing applications, IoU is used to evaluate the accuracy of algorithms that detect and classify objects in satellite or aerial imagery. It aids in the identification and classification of objects across large geographical areas. It measures how well the algorithm's predictions align with the ground truth objects, providing a measure of detection accuracy.

IoU Challenges and Limitations

While powerful, IoU has its limitations:

- Sensitive to box size: IoU can be sensitive to the size of bounding boxes. A small shift in a large box may have minimal impact on IoU, whereas the same shift in a small box can change the score significantly.

- Ignores shape and internal structure: It considers only the overlap area, neglecting objects' shape and internal structure. This can be problematic in tasks where fine feature details matter, for example, in medical image segmentation.

- Inability to handle overlapping objects: It struggles to distinguish between multiple overlapping objects within a single bounding box. This can lead to misinterpretations and inaccurate evaluations.

- Binary thresholding: It typically uses a binary threshold (e.g., 0.5) to determine whether a prediction is correct. As a result, the outcome can be overly simplistic and miss subtle differences in quality.

- Ignores confidence scores: It does not consider the model's confidence score for its predictions. This can lead to situations where a low-confidence prediction with a high IoU is considered better than a high-confidence prediction with a slightly lower IoU.
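The first limitation, sensitivity to box size, is easy to check numerically. The sketch below assumes `(x1, y1, x2, y2)` boxes and uses hypothetical `box_iou` and `shift_x` helpers to shift a 100-by-100 box and a 10-by-10 box by the same 5 pixels.

```python
def box_iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def shift_x(box, dx):
    """Move a box horizontally by dx pixels."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])


large = (0, 0, 100, 100)
small = (0, 0, 10, 10)
print(box_iou(large, shift_x(large, 5)))  # 9500 / 10500, about 0.905
print(box_iou(small, shift_x(small, 5)))  # 50 / 150, about 0.333
```

The same 5-pixel error keeps the large box well above a 0.5 threshold but pushes the small box far below it.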

Future Developments

As computer vision continues to advance, there is ongoing research and development to enhance the accuracy and reliability of IoU and related metrics. Some future developments in IoU include the incorporation of object shape information, the consideration of contextual information, and the development of more robust evaluation methodologies.

Advanced computer vision techniques, including neural networks such as CNNs and attention mechanisms, show promise in improving the accuracy and reliability of object detection and localization as measured by IoU.

What's Next?

IoU remains a fundamental metric in computer vision, and its role is expected to keep growing as the field advances. Researchers and developers will likely see IoU-based metrics refined and more sophisticated approaches emerge to address the limitations of current methodologies.

Here are some additional resources you might find helpful for gaining a deeper understanding of IoU and related concepts in computer vision: