Rick Korzekwa, 11 April 2023, updated 13 April 2023

At AI Impacts, we’ve been looking into how people, institutions, and society approach novel, powerful technologies. One part of this is our technological temptations project, in which we are looking into cases where some actors had a strong incentive to develop or deploy a technology, but chose not to or showed hesitation or caution in their approach. Our researcher Jeffrey Heninger has recently finished some case studies on this topic, covering geoengineering, nuclear power, and human challenge trials.

This document summarizes the lessons I think we can take from these case studies. Much of it is borrowed directly from Jeffrey’s written analysis or conversations I had with him, some of it is my independent take, and some of it is a mixture of the two, which Jeffrey may or may not agree with. All of it relies heavily on his research.

The writing is somewhat more confident than my beliefs. Some of this is very speculative, though I tried to flag the most speculative parts as such.

Jeffrey Heninger investigated three cases of technologies that create substantial value, but were not pursued or were pursued more slowly.

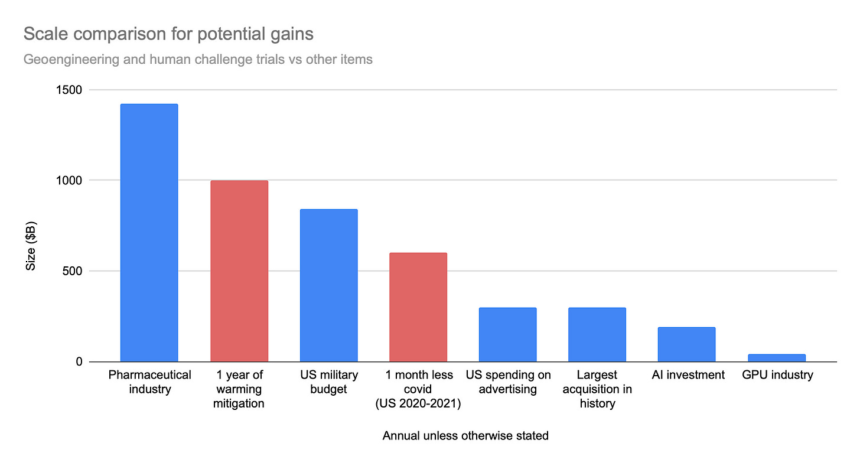

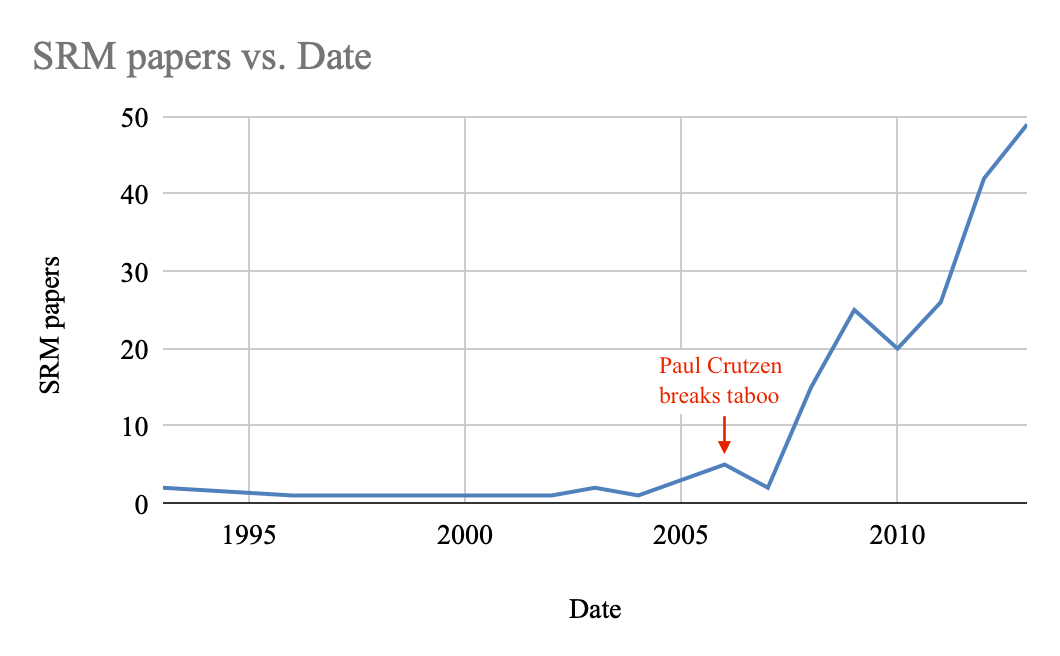

The overall scale of value at stake was very large for these cases, on the order of hundreds of billions to trillions of dollars. But it’s not clear who could capture that value, so it’s not clear whether the temptation was closer to $10B or $1T.

Social norms can generate strong disincentives for pursuing a technology, especially when combined with enforceable regulation.

Scientific communities and individuals within those communities seem to have particularly high leverage in steering technological development at early stages.

Inhibiting deployment can inhibit development of a technology over the long term, at least by slowing cost reductions.

Some of these lessons are transferable to AI, at least enough to be worth keeping in mind.

- Geoengineering could feasibly provide benefits of $1-10 trillion per year through global warming mitigation, at a cost of $1-10 billion per year, but the actors who stand to gain the most have not pursued it, citing a lack of research into its feasibility and safety. Research has been effectively prevented by climate scientists and social activist groups.

- Nuclear power has proliferated globally since the 1950s, but many countries have prevented or inhibited the construction of nuclear power plants, sometimes at an annual cost of tens of billions of dollars and thousands of lives. This is primarily accomplished through legislation, like Italy’s ban on all nuclear power, or through costly regulations, like safety oversight in the US that has increased the cost of plant construction by a factor of ten.

- Human challenge trials may have accelerated deployment of covid vaccines by more than a month, saving many thousands of lives and billions or trillions of dollars. Despite this, the first challenge trial for a covid vaccine was not performed until after several vaccines had been tested and approved using traditional methods. This is consistent with the historical rarity of challenge trials, which seems to be driven by ethical concerns and enforced by institutional review boards.
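To make the geoengineering figures concrete, here is the benefit-to-cost arithmetic as a short Python sketch. It takes the ranges above at face value and ignores who could actually capture the benefit; the function name `ratio_bounds` is hypothetical, chosen only for illustration.

```python
# Ranges from the geoengineering case above, in dollars per year.
benefit_range = (1e12, 10e12)  # $1-10 trillion in global warming mitigation
cost_range = (1e9, 10e9)       # $1-10 billion in deployment cost


def ratio_bounds(benefit, cost):
    """Return (pessimistic, optimistic) benefit-to-cost ratios for two ranges."""
    low = benefit[0] / cost[1]   # smallest benefit paired with the largest cost
    high = benefit[1] / cost[0]  # largest benefit paired with the smallest cost
    return low, high


low, high = ratio_bounds(benefit_range, cost_range)
print(f"benefit/cost between {low:,.0f}x and {high:,.0f}x")
```

Even pairing the smallest benefit with the largest cost gives a ratio of 100 to 1; the optimistic pairing gives 10,000 to 1.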

The first thing to notice about these cases is the scale of value at stake. Mitigating climate change could be worth hundreds of billions or trillions of dollars per year, and deploying covid vaccines a month sooner could have saved many thousands of lives. While these numbers do not represent a major fraction of the global economy or the overall burden of disease, they are large compared to many relevant scales for AI risk. The world’s most valuable companies have market caps of a few trillion dollars, and the entire world spends around two trillion dollars per year on defense. In comparison, annual funding for AI is on the order of $100B.

Setting aside for the moment who could capture the value from a technology, and whether the reasons for delaying or forgoing its development are rational or justified, I think it’s worth recognizing that the potential upsides are large enough to create strong incentives.

My read on these cases is that a strong determinant of whether a technology will be pursued is social attitudes toward the technology and its regulation. I’m not sure what would have happened if Pfizer had, in defiance of FDA standards and medical ethics norms, infected volunteers with covid as part of their vaccine testing, but I imagine the consequences would have been more severe than fines or difficulty obtaining FDA approval. They would have lost standing in the medical community and possibly been unable to continue existing as a company. The same goes for other technologies and actors. Building nuclear power plants without adhering to safety standards is so far outside the range of acceptable actions that even suggesting it as a strategy for running a business or addressing climate change is a serious reputational risk for a CEO or public official. An oil company executive who funds a project to disperse aerosols into the upper atmosphere to reduce global warming and protect his business sounds like a Bond movie villain.

This isn’t to suggest that social norms are infinitely strong or that they are always well-aligned with society’s interests. Governments and companies will do things that are widely seen as unethical if they think they can get away with it, for example, by doing it in secret. And I think that public support for our current nuclear safety regime is gravely mistaken. But strong social norms, either against a technology or against breaking regulations, do seem able, at least in some cases, to create incentives strong enough to constrain valuable technologies.

The public

The public plays a major role in defining and enforcing the range of acceptable paths for technology. Public backlash in response to early challenge trials set the stage for our current ethics standards, and nuclear power faces crippling safety regulations largely because of public outcry in response to a perceived lack of adequate safety standards. In both of these cases, the result was not just the creation of regulations, but strong buy-in and a souring of public opinion on a broad category of technologies.

Although public opposition can be a powerful force in expelling things from the Overton window, it does not seem easy to predict or steer. The Chernobyl disaster made a strong case for designing reactors in a responsible way, but it was instead seen by much of the public as a demonstration that nuclear power should be abolished entirely. I do not have a strong take on how hard this problem is in general, but I do think it is important and should be investigated further.

The scientific community

The precise boundaries of acceptable technology are defined in part by the scientific community, especially when technologies are very early in development. Policymakers and the public tend to defer to what they understand to be the official, legible scientific view when deciding what is or is not okay. This does not always match the actual views of scientists.

Geoengineering as an approach to reducing global warming has not been recommended by the IPCC, and only a minority of climate scientists support research into geoengineering. Presumably the advocacy groups opposing geoengineering experiments would have faced a harder battle if the official stance of the climate science community had been in favor of geoengineering.

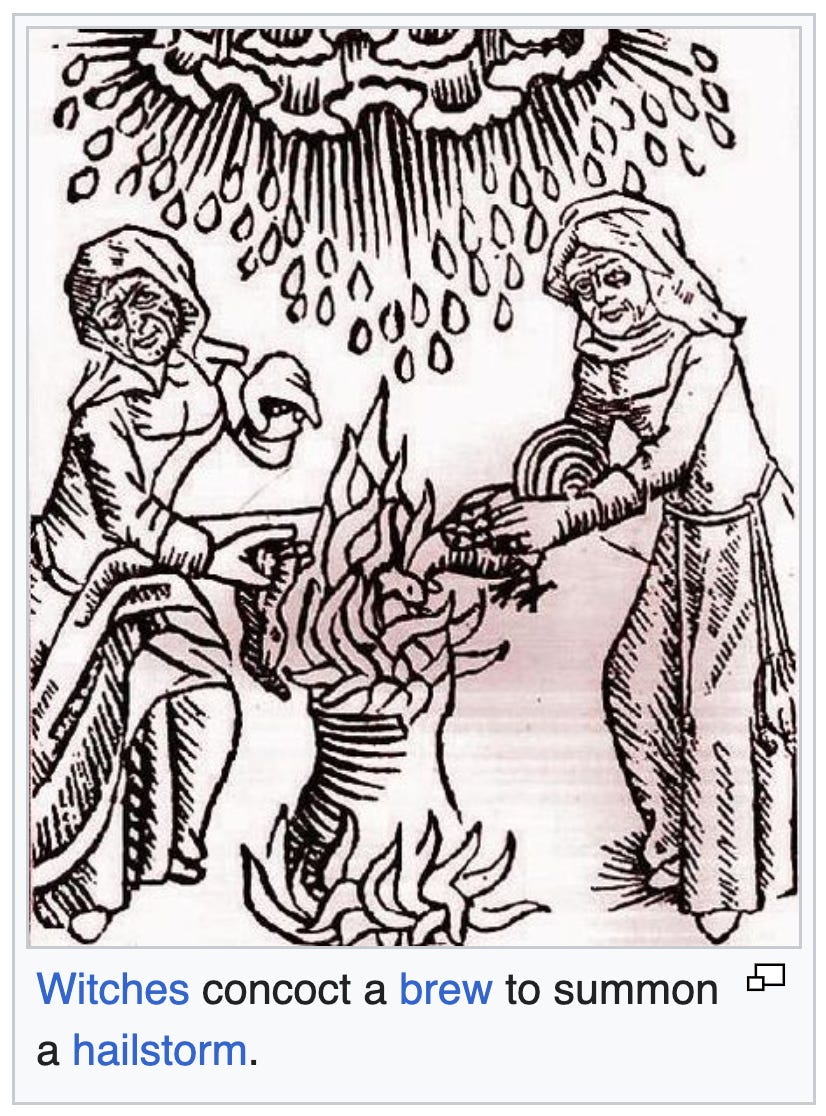

One interesting aspect of this is that scientific communities are small and heavily influenced by individual prestigious scientists. The taboo on geoengineering research was broken by the editor of a major climate journal, after which the number of papers on the topic increased by more than a factor of 20 within two years.

I suspect the public and policymakers are not always able to tell the difference between the official stance of regulatory bodies and the consensus of scientific communities. My impression is that scientific consensus is not in favor of the radiation health models used by the Nuclear Regulatory Commission, but many people still believe that such models are sound science.

Warning shots

Past incidents like the Fukushima disaster and the Tuskegee syphilis study are frequently cited by opponents of nuclear power and human challenge trials. I think this may be important, because it suggests that these “warning shots” have done a lot to shape perception of these technologies, even decades later. One interpretation of this is that, regardless of why someone is opposed to something, they benefit from citing memorable events when making their case. Another, non-competing interpretation is that these events are causally important in the trajectory of these technologies’ development and the public’s perception of them.

I’m not sure how to untangle the relative contributions of these effects, but either way, it suggests that such incidents are important for shaping and maintaining norms around the deployment of technology.

Locality

In general, social norms are local. Building nuclear power plants is much more acceptable in France than it is in Italy. Even if two countries allow the construction of nuclear power plants and have similarly strong norms against breaking nuclear safety regulations, those safety regulations may be different enough to create a large difference in plant construction between the countries, as seen with the US and France.

Because scientific communities have members and influence across international borders, they may have more sway over what happens globally (as we’ve seen with geoengineering), but this may be limited by local differences in the acceptability of going against scientific consensus.

A common feature of these cases is that preventing or limiting deployment of the technology inhibited its development. Because less developed technologies are less useful and harder to trust, this seems to have further reduced deployment.

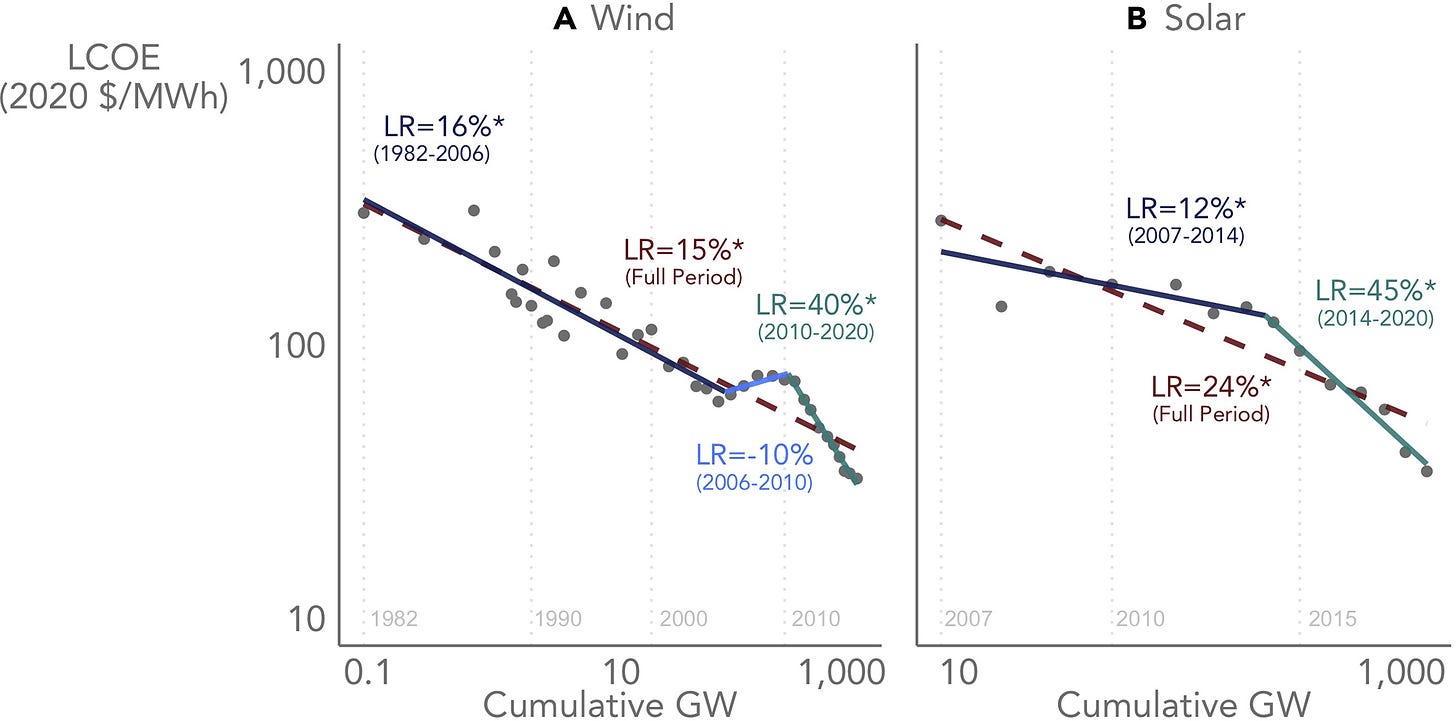

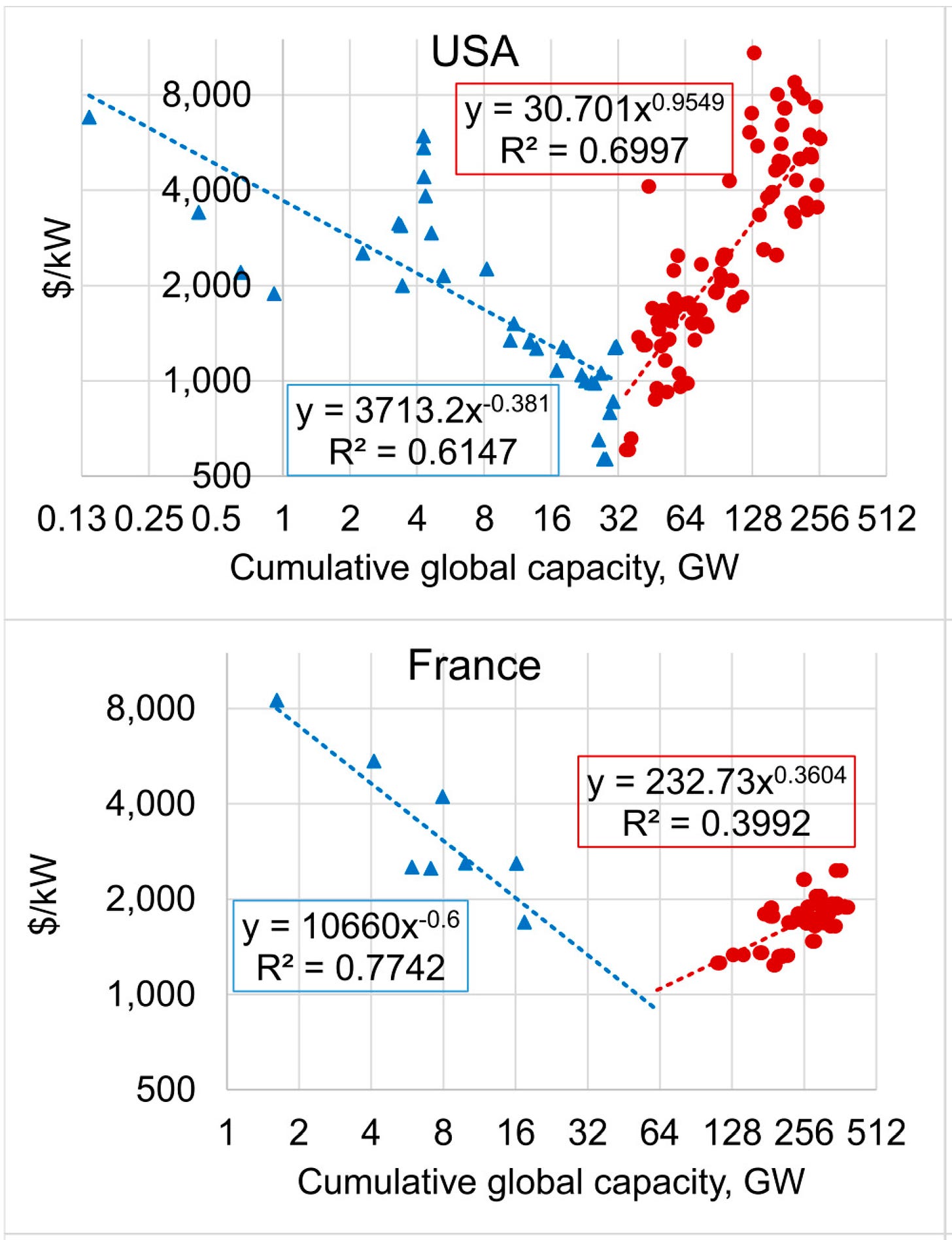

Normally, things become cheaper to make as we make more of them, in a somewhat predictable way: the cost goes down with the total amount that has been produced, following a power law. This is what has been happening with solar and wind power.

Initially, building nuclear power plants seems to have become cheaper in the usual way for new technology: doubling the total capacity of nuclear power plants reduced the cost per kilowatt by a constant fraction. Starting around 1970, regulations and public opposition to building plants did more than increase construction costs in the near term. By reducing the number of plants built and inhibiting small-scale design experiments, they slowed the development of the technology, and correspondingly reduced the rate at which we learned to build plants cheaply and safely. Without those cost reductions, nuclear plants continue to be uncompetitive with other power-generating technologies in many contexts.
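The power-law relationship described above (often called Wright’s law or the experience curve) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a model of actual reactor costs: the 20% learning rate per doubling and the normalized starting cost of 1.0 are hypothetical values picked for round numbers, and `experience_curve_cost` is a hypothetical name.

```python
import math


def experience_curve_cost(cumulative, initial_cumulative, initial_cost, learning_rate):
    """Unit cost under a power-law experience curve (Wright's law).

    Each doubling of cumulative production multiplies unit cost by
    (1 - learning_rate). Equivalently:
        cost = initial_cost * (cumulative / initial_cumulative) ** (-b)
    with b = -log2(1 - learning_rate).
    """
    b = -math.log2(1 - learning_rate)
    return initial_cost * (cumulative / initial_cumulative) ** (-b)


# Hypothetical comparison: the same technology after six doublings of
# cumulative capacity (unconstrained build-out) versus two doublings
# (build-out slowed by regulation and opposition).
for label, doublings in (("unconstrained", 6), ("constrained", 2)):
    cost = experience_curve_cost(2 ** doublings, 1, 1.0, 0.20)
    print(f"{label}: {cost:.2f} of initial unit cost")
```

With a 20% learning rate, six doublings bring unit cost to 0.8^6, about 26% of the starting cost, while two doublings leave it at 64%. The point is only that slowing the build-out slows the cost decline, not that these are the right numbers for nuclear power.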

Because solar radiation management acts on a timescale of months to years and the costs of global warming are not yet very high, I’m not surprised that we have still not deployed it. But this does not explain the lack of research. One of the reasons given for opposing experiments is that solar radiation management has not been shown to be safe, yet the reason we lack evidence on safety is that research has been opposed, even at small scales.

It’s less clear to me how much the relative lack of human challenge trials in the past has made us less able to do them well now. I’m also not sure how much a stronger past record of challenge trials would cause them to be seen more positively. Still, absent evidence that medical research methodology does not improve in the usual way with the quantity of research, I expect we are at least somewhat less effective at performing human challenge trials than we otherwise would be.

I think it’s impressive that regulatory bodies are able to prevent the use of technology even when the cost of doing so is on the scale of many billions, plausibly trillions, of dollars. One of the reasons this works seems to be that regulators will be blamed if they approve something and it goes poorly, but they will not receive much credit if things go well. Similarly, they will not be held accountable for failing to approve something good. This creates strong incentives for avoiding negative outcomes while creating little incentive to seek positive outcomes. I’m not sure whether this asymmetry was deliberately built into the system or whether it is a side effect of other incentive structures (e.g., at the level of politics, there is more benefit from placing blame than from giving credit), but it is a force to be reckoned with, especially in contexts where there is a strong social norm against disregarding the judgment of regulators.
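The asymmetry can be made concrete with a toy expected-value model. Everything here is hypothetical: the probabilities, payoffs, and the `Decision` fields are illustrative assumptions, not estimates for any real regulator. Rejecting is scored as zero for both the regulator and society, reflecting the point above that regulators are not held accountable for forgone benefits.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """A hypothetical approve/reject choice facing a regulator (all values illustrative)."""
    p_bad: float           # probability the approved technology goes poorly
    social_benefit: float  # value to society if it goes well
    social_harm: float     # cost to society if it goes poorly
    blame: float           # personal penalty to the regulator if it goes poorly
    credit: float          # personal reward to the regulator if it goes well


def social_value_of_approval(d: Decision) -> float:
    """Expected value to society of approving, relative to rejecting (scored as 0)."""
    return (1 - d.p_bad) * d.social_benefit - d.p_bad * d.social_harm


def regulator_value_of_approval(d: Decision) -> float:
    """Expected value to the regulator of approving, relative to rejecting.

    Rejecting is scored as 0 because the regulator bears no blame for forgone benefits.
    """
    return (1 - d.p_bad) * d.credit - d.p_bad * d.blame


# Illustrative numbers: approval is very good for society in expectation,
# but the regulator's blame for failure dwarfs the credit for success.
case = Decision(p_bad=0.05, social_benefit=100.0, social_harm=100.0, blame=10.0, credit=0.1)
print("society favors approval:", social_value_of_approval(case) > 0)
print("regulator favors approval:", regulator_value_of_approval(case) > 0)
```

With these numbers, approval is worth about 90 units to society in expectation but about -0.4 to the regulator, so a regulator acting on personal incentives rejects a technology that society would want approved.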

It’s hard to assess which actors are actually tempted by a technology. While society at large would benefit from building more nuclear power plants, much of the benefit would be dispersed as public health gains, and it is difficult for any particular actor to capture that value. Similarly, while many deaths could have been prevented if the covid vaccines had been available two months earlier, it isn’t clear that this value could have been captured by Pfizer or Moderna: demand for vaccines was not changing that quickly.

On the other hand, not all of the benefits are external: switching from coal to nuclear power in the US could save tens of billions of dollars a year, and drug companies pay billions of dollars per year for trials. Some government institutions and officials have the stated goal of creating benefits like public health, in addition to having economic and reputational stakes in outcomes like the rapid deployment of vaccines during a pandemic. These institutions pay costs and make decisions on the basis of economic and health gains from technology (for example, subsidizing photovoltaics and obesity research), suggesting they have an incentive to create that value.

Overall, I think this lack of clarity around incentives and capture of value is the biggest reason to doubt that these cases demonstrate strong resistance to technological temptation.

How well these cases generalize to AI will depend on facts about AI that are not yet known. For example, if powerful AI requires large facilities and easily trackable equipment, I think we can expect lessons from nuclear power to be more transferable than if it can be built at a smaller scale with commonly available materials. Still, I think some of what we’ve seen in these cases will transfer to AI, either because of similarity with AI or because they reflect more general principles.

Social norms

The main thing I expect to generalize is the power of social norms to constrain technological development. While norms are far from guaranteed to prevent irresponsible AI development, especially if building dangerous AI is not seen as a major transgression everywhere that AI is being developed, it does seem like the world is much safer if building AI in defiance of regulations is seen as similarly villainous to building nuclear reactors or infecting study participants without authorization. We are not at that point, but the public does seem prepared to support concrete limits on AI development.

I do think there are reasons for pessimism about norms constraining AI. For geoengineering, the norms worked by tabooing a particular topic in a research community, but I’m not sure this would work with a technology that is no longer at such an early stage. AI already has a large body of research and many people who have already invested their careers in it. For medical and nuclear technology, the norms are powerful because they enforce adherence to regulations, and those regulations define the constraints. But it can be hard to build regulations that create the right boundaries around a technology, especially one as imprecisely defined as AI. If someone starts building a nuclear power plant in the US, it will become clear relatively early on that this is what they are doing, but a datacenter training an AI and a datacenter updating a search engine may be difficult to tell apart.

Another reason for pessimism is tolerance for failure. Past technologies have mostly carried risks that scaled with how much of the technology was built. For example, if you’re worried about nuclear waste, you probably think two power plants are about twice as bad as one. While risk from AI may turn out this way, it may be that a single powerful system poses a global risk. If that turns out to be the case, then even if strong norms combine with strong regulation to achieve the same level of success as for nuclear power, it still will not be sufficient.
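A toy calculation shows why the difference between risk that scales with quantity and risk from a single system matters. It assumes, purely for illustration, that each system independently carries the same chance of catastrophe and that regulation works by stopping a fixed fraction of would-be projects; the project counts, probabilities, and function names are hypothetical.

```python
def expected_linear_harm(n_systems: int, harm_per_system: float) -> float:
    """Total harm when damage scales with how much is built (e.g. nuclear waste)."""
    return n_systems * harm_per_system


def prob_any_catastrophe(n_systems: int, p_per_system: float) -> float:
    """Chance that at least one of n independent systems causes a catastrophe."""
    return 1 - (1 - p_per_system) ** n_systems


# Hypothetical: regulation stops 90 of 100 would-be projects.
for n in (100, 10):
    print(n, expected_linear_harm(n, 1.0), round(prob_any_catastrophe(n, 0.05), 3))
```

Stopping 90% of projects cuts linear harm tenfold, which would count as a large success for a technology like nuclear power. But with a hypothetical 5% chance of catastrophe per system, the chance that at least one of the remaining 10 causes a catastrophe is still about 40%, down from over 99% with all 100.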

Development gains from deployment

I’m very uncertain how much development of dangerous AI will be hindered by constraints on deployment. I think approximately all technologies face some barriers like this, in some cases very severe ones, as we’ve seen with nuclear power. But we are mainly interested in gains to development toward dangerous systems, which may be possible to advance with little deployment. Adding to the uncertainty, it is ambiguous where the line is drawn between testing and deployment, and whether allowing the deployment of verifiably safe systems will provide the gains needed to create dangerous systems.

Separating safety decisions from gains

I don’t see any particular reason to think that asymmetric justice will operate differently with AI, but I’m uncertain whether regulatory systems around AI, if created, will have such incentives. I think it’s worth thinking about IRB-like models for AI safety.

Capture of value

Clearly there are actors who believe they can capture substantial value from AI (for example, Microsoft recently invested $10B in OpenAI), but I’m not sure how this will go as AI advances. By default, I expect the value created by AI to be more straightforwardly capturable than for nuclear power or geoengineering, but I’m not sure how it compares with drug development.

Social preview image: German anti-nuclear power protesters in 2012. Used under a Creative Commons license from Bündnis 90/Die Grünen Baden-Württemberg on Flickr.