
To scale up large language models (LLMs) in support of long-term AI strategies, enterprises are relying on retrieval-augmented generation (RAG) frameworks that need stronger contextual security to meet the skyrocketing demands for integration.

Protecting RAGs requires contextual intelligence

However, traditional RAG access control techniques aren’t designed to deliver contextual control. RAG’s lack of native access control poses a significant security risk to enterprises, as it could allow unauthorized users to access sensitive information.

Role-Based Access Control (RBAC) lacks the flexibility to adapt to contextual requests, and Attribute-Based Access Control (ABAC) is known for limited scalability and higher maintenance costs. What’s needed is a more contextually intelligent approach to protecting RAG frameworks that won’t hinder speed and scale.
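To make the contrast concrete, here is a minimal sketch of how RBAC and ABAC checks are commonly structured. The role table, attribute names and helper functions are hypothetical and are not taken from any product mentioned in this article. The point it illustrates is that both decisions rest on static data attached to the user, not on what is actually being asked.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission table: an RBAC decision depends only on
# this static mapping, never on the content of the request itself.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "hr_manager": {"read:reports", "read:salaries"},
}


@dataclass
class User:
    name: str
    role: str
    attributes: dict = field(default_factory=dict)


def rbac_allows(user: User, permission: str) -> bool:
    """RBAC: allow if the user's role carries the requested permission."""
    return permission in ROLE_PERMISSIONS.get(user.role, set())


def abac_allows(user: User, required_attrs: dict) -> bool:
    """ABAC: allow if every attribute the resource requires matches the user's."""
    return all(user.attributes.get(k) == v for k, v in required_attrs.items())


alice = User("alice", "analyst", {"department": "finance", "region": "EU"})
bob = User("bob", "hr_manager", {"department": "hr", "region": "US"})
```

Every new resource or exception means another row in the role table or another attribute to keep in sync, which is where the maintenance cost of these models tends to come from.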

Lasso Security began seeing these limitations with LLMs early and developed Context-Based Access Control (CBAC) in response to the challenges of improving contextual access. Lasso Security’s CBAC is noteworthy for its innovative approach to dynamically evaluating the context of all access requests to an LLM. The company told VentureBeat that CBAC evaluates access, response, interaction, behavioral and data modification requests to ensure comprehensive security, prevent unauthorized access, and maintain high security standards in LLM and RAG frameworks. The goal is to ensure that only authorized users can access specific information.

Contextual intelligence helps ensure chatbots don’t divulge sensitive information from LLMs, where sensitive information is at risk of exposure.

“We’re trying to base our solutions on context. The place where role-based access or attribute-based access fails is that it really looks on something very static, something that is inherited from somewhere else, and something that is by design not managed,” Ophir Dror, co-founder and CPO at Lasso Security, told VentureBeat in a recent interview.

“By focusing on the knowledge level and not patterns or attributes, CBAC ensures that only the right information reaches the right users, providing a level of precision and security that traditional methods can’t match,” says Dror. “This innovative approach allows organizations to harness the full power of RAG while maintaining stringent access controls, truly revolutionizing how we manage and protect data,” he continued.

What is Retrieval-Augmented Generation (RAG)?

In 2020, researchers from Facebook AI Research, University College London and New York University authored the paper titled Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks, defining Retrieval-Augmented Generation (RAG) as “We endow pre-trained, parametric-memory generation models with a non-parametric memory through a general-purpose fine-tuning approach which we refer to as retrieval-augmented generation (RAG). We build RAG models where the parametric memory is a pre-trained seq2seq transformer, and the non-parametric memory is a dense vector index of Wikipedia, accessed with a pre-trained neural retriever.”
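The retrieve-then-generate flow described in that definition can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper’s method: a bag-of-words cosine similarity stands in for the pre-trained dense neural retriever, the generator is left out entirely, and the names `embed`, `retrieve` and `build_prompt` are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a stand-in for a dense vector)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (non-parametric memory)."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble the augmented prompt that would be handed to the generator."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "vacation policy allows twenty days per year",
    "quarterly revenue grew in europe",
    "the office cafeteria opens at nine",
]
prompt = build_prompt("what is the vacation policy", docs, k=1)
```

Note that nothing in `retrieve` asks who is issuing the query: whatever ranks highest goes into the prompt, which is the gap in native access control the article describes.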

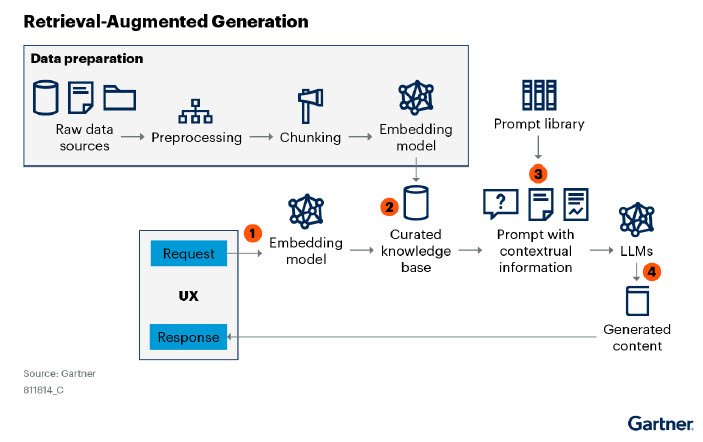

“Retrieval-augmented technology (RAG) is a sensible solution to overcome the restrictions of basic massive language fashions (LLMs) by making enterprise knowledge and data accessible for LLM processing,” writes Gartner of their latest report, Getting Started With Retrieval-Augmented Generation. The next graphic from Gartner explains how a RAG works:

How Lasso Security designed CBAC with RAG

“We built CBAC to work as a standalone or connected to our products. It can be integrated with Active Directory or used independently with minimal setup. This flexibility ensures that organizations can adopt CBAC without extensive modifications to their LLM infrastructure,” Dror said.

While CBAC works as a standalone solution, Lasso Security has also designed it to integrate with its gen AI security suite, which offers protection for employees’ use of gen AI-based chatbots, applications, agents, code assistants, and integrated models in production environments. Regardless of how you deploy LLMs, Lasso Security monitors every interaction involving data transfer to or from the LLM. It also swiftly identifies any anomalies or violations of organizational policies, ensuring a secure and compliant environment at all times.

Dror explained that CBAC is designed to continually monitor and evaluate a wide variety of contextual cues to determine access control policies, ensuring that only authorized users have access privileges to specific information, even in documents and reports that contain both currently relevant and out-of-scope data.

“There are many different heuristics that we use to determine if it’s an anomaly or if it’s a legit request. And also response, we’ll look at both ways. But basically, if you think about it, it all comes to the question if this person should be asking this question and if this person should be getting an answer to this question from the variety of data that this model is connected to,” Dror said.

Core to CBAC is a series of supervised machine learning (ML) algorithms that continuously learn and adapt based on the contextual insights gained from user behavior patterns and historical data. “The core of our approach is context. Who is the person? What is their role? Should they be asking this question? Should they be getting this answer? By evaluating these factors, we prevent unauthorized access and ensure data security in LLM environments,” Dror told VentureBeat.
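The two questions Dror raises, whether a person should be asking a question and whether they should be getting the answer, can be pictured as a check on both sides of a RAG call. The sketch below is purely illustrative and is not Lasso Security’s implementation: a hand-written keyword lookup stands in for the supervised ML models the company describes, and every name in it (`TOPIC_KEYWORDS`, `ROLE_TOPICS`, `guarded_answer`) is hypothetical.

```python
from dataclasses import dataclass

# Hypothetical topic keywords: a crude stand-in for a learned classifier.
TOPIC_KEYWORDS = {
    "compensation": {"salary", "salaries", "bonus", "payroll"},
    "engineering": {"deploy", "build", "latency", "api"},
}

# Hypothetical mapping of which sensitive topics fit each role's context.
ROLE_TOPICS = {
    "hr_manager": {"compensation"},
    "engineer": {"engineering"},
}


@dataclass(frozen=True)
class Requester:
    name: str
    role: str


def topics_of(text: str) -> set:
    """Return the sensitive topics whose keywords appear in the text."""
    cleaned = text.lower().replace("?", " ").replace(".", " ").replace(",", " ")
    words = set(cleaned.split())
    return {topic for topic, kws in TOPIC_KEYWORDS.items() if words & kws}


def in_context(requester: Requester, text: str) -> bool:
    """True when every sensitive topic in the text fits the requester's role."""
    return topics_of(text) <= ROLE_TOPICS.get(requester.role, set())


def guarded_answer(requester: Requester, question: str, retrieved_chunks: list):
    """Check the request first, then filter the retrieved context."""
    # Request side: should this person be asking this question?
    if not in_context(requester, question):
        return None
    # Response side: keep only the chunks this person should receive.
    return [c for c in retrieved_chunks if in_context(requester, c)]


eng = Requester("dana", "engineer")
hr = Requester("sam", "hr_manager")
chunks = ["Deploy with the build script.", "Payroll runs on Friday."]
```

The design choice worth noting is that the filter runs per chunk, so a single document that mixes in-scope and out-of-scope passages can still contribute the passages the requester is entitled to see.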

CBAC takes on security challenges

“We see now a lot of companies who already went the distance and built a RAG, including architecting a RAG chatbot, and they’re now encountering the problems of who can ask what, who can see what, who can get what,” Dror said.

Dror says RAG’s soaring adoption is also making the limitations of LLMs, and the problems they cause, more urgent. Hallucinations and the difficulty of training LLMs with new data have also surfaced, further illustrating how challenging it is to solve RAG’s permissions problem. CBAC was invented to take on these challenges and provide the contextual insights needed for a more dynamic approach to access control.

With RAG being the cornerstone of organizations’ current and future LLM and broader AI strategies, contextual intelligence will prove to be an inflection point in how they’re protected and scaled without impacting performance.
