Because of its huge potential and commercialization alternatives, significantly in gaming, broadcasting, and video streaming, the Metaverse is presently one of many fastest-growing applied sciences. Fashionable Metaverse purposes make the most of AI frameworks, together with pc imaginative and prescient and diffusion fashions, to reinforce their realism. A big problem for Metaverse purposes is integrating numerous diffusion pipelines that present low latency and excessive throughput, making certain efficient interplay between people and these purposes.

Immediately’s diffusion-based AI frameworks excel in creating photographs from textual or picture prompts however fall brief in real-time interactions. This limitation is especially evident in duties that require steady enter and excessive throughput, similar to online game graphics, Metaverse purposes, broadcasting, and stay video streaming.

On this article, we’ll talk about StreamDiffusion, a real-time diffusion pipeline developed to generate interactive and sensible photographs, addressing the present limitations of diffusion-based frameworks in duties involving steady enter. StreamDiffusion is an modern method that transforms the sequential noising of the unique picture into batch denoising, aiming to allow excessive throughput and fluid streams. This method strikes away from the normal wait-and-interact methodology utilized by present diffusion-based frameworks. Within the upcoming sections, we’ll delve into the StreamDiffusion framework intimately, exploring its working, structure, and comparative outcomes in opposition to present state-of-the-art frameworks. Let’s get began.

Metaverse are efficiency intensive purposes as they course of a considerable amount of information together with texts, animations, movies, and pictures in real-time to offer its customers with its trademark interactive interfaces and expertise. Fashionable Metaverse purposes depend on AI-based frameworks together with pc imaginative and prescient, picture processing, and diffusion fashions to realize low latency and a excessive throughput to make sure a seamless consumer expertise. At present, a majority of Metaverse purposes depend on decreasing the incidence of denoising iterations to make sure excessive throughput and improve the appliance’s interactive capabilities in real-time. These frameworks go for a typical technique that both includes re-framing the diffusion course of with neural ODEs (Extraordinary Differential Equations) or decreasing multi-step diffusion fashions into a number of steps or perhaps a single step. Though the method delivers passable outcomes, it has sure limitations together with restricted flexibility, and excessive computational prices.

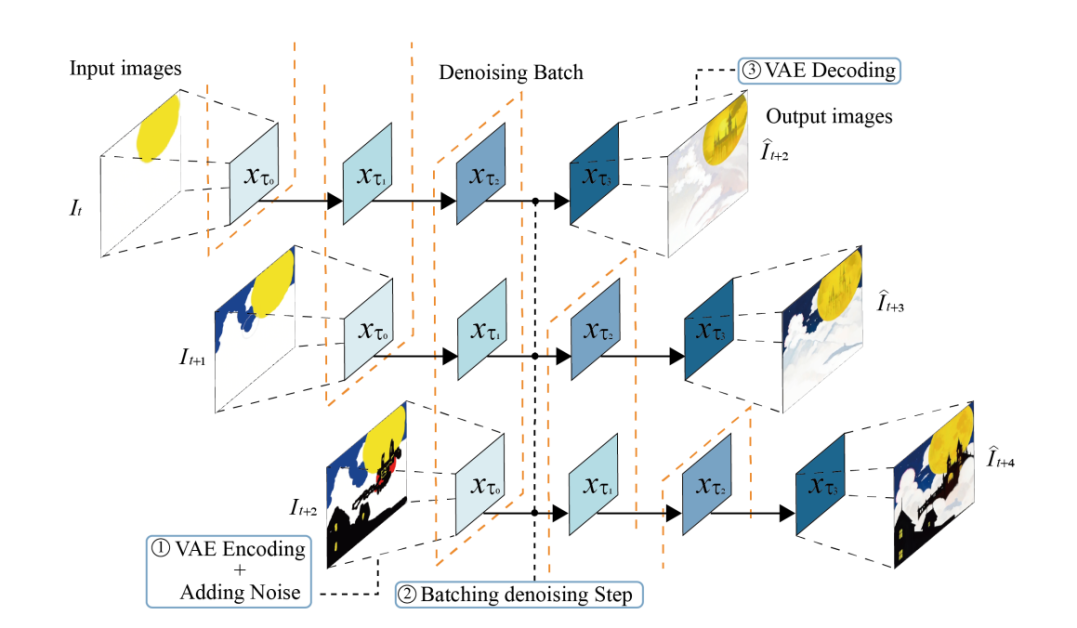

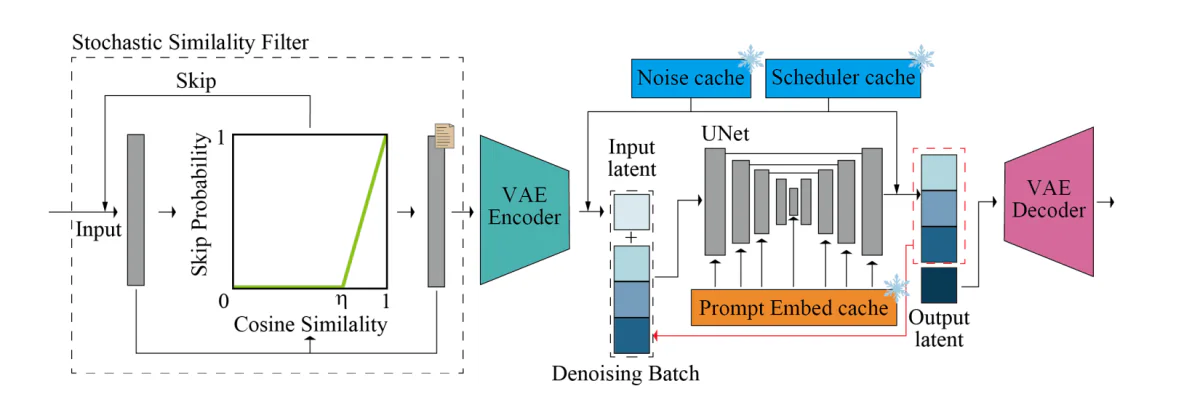

However, the StreamDiffusion is a pipeline stage answer that begins from an orthogonal route and enhances the framework’s capabilities to generate interactive photographs in real-time whereas making certain a excessive throughput. StreamDiffusion makes use of a easy technique by which as a substitute of denoising the unique enter, the framework batches the denoising step. The technique takes inspiration from asynchronous processing because the framework doesn’t have to attend for the primary denoising stage to finish earlier than it may well transfer on to the second stage, as demonstrated within the following picture. To deal with the difficulty of U-Web processing frequency and enter frequency synchronously, the StreamDiffusion framework implements a queue technique to cache the enter and the outputs.

Though the StreamDiffusion pipeline seeks inspiration from asynchronous processing, it’s distinctive in its personal means because it implements GPU parallelism that permits the framework to make the most of a single UNet element to denoise a batched noise latent characteristic. Moreover, present diffusion-based pipelines emphasize on the given prompts within the generated photographs by incorporating classifier-free steering, on account of which the present pipelines are rigged with redundant and extreme computational overheads. To make sure the StreamDiffusion pipeline don’t encounter the identical points, it implements an modern RCFG or Residual Classifier-Free Steerage method that makes use of a digital residual noise to approximate the destructive circumstances, thus permitting the framework to calculate the destructive noise circumstances within the preliminary phases of the method itself. Moreover, the StreamDiffusion pipeline additionally reduces the computational necessities of a conventional diffusion-pipeline by implementing a stochastic similarity filtering technique that determines whether or not the pipeline ought to course of the enter photographs by computing the similarities between steady inputs.

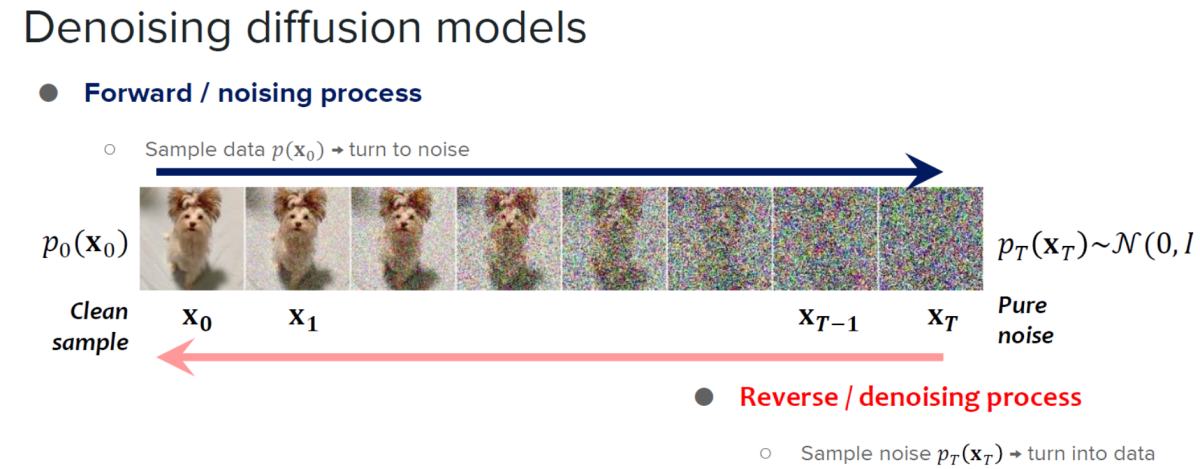

The StreamDiffusion framework is constructed on the learnings of diffusion fashions, and acceleration diffusion fashions.

Diffusion fashions are recognized for his or her distinctive picture technology capabilities and the quantity of management they provide. Owing to their capabilities, diffusion fashions have discovered their purposes in picture modifying, textual content to picture technology, and video technology. Moreover, improvement of constant fashions have demonstrated the potential to reinforce the pattern processing effectivity with out compromising on the standard of the photographs generated by the mannequin that has opened new doorways to develop the applicability and effectivity of diffusion fashions by decreasing the variety of sampling steps. Though extraordinarily succesful, diffusion fashions are likely to have a significant limitation: gradual picture technology. To deal with this limitation, builders launched accelerated diffusion fashions, diffusion-based frameworks that don’t require extra coaching steps or implement predictor-corrector methods and adaptive step-size solvers to extend the output speeds.

The distinguishing issue between StreamDiffusion and conventional diffusion-based frameworks is that whereas the latter focuses totally on low latency of particular person fashions, the previous introduces a pipeline-level method designed for attaining excessive throughputs enabling environment friendly interactive diffusion.

StreamDiffusion : Working and Structure

The StreamDiffusion pipeline is a real-time diffusion pipeline developed for producing interactive and sensible photographs, and it employs 6 key parts specifically: RCFG or Residual Classifier Free Steerage, Stream Batch technique, Stochastic Similarity Filter, an input-output queue, mannequin acceleration instruments with autoencoder, and a pre-computation process. Let’s discuss these parts intimately.

Stream Batch Technique

Historically, the denoising steps in a diffusion mannequin are carried out sequentially, leading to a big improve within the U-Web processing time to the variety of processing steps. Nonetheless, it’s important to extend the variety of processing steps to generate high-fidelity photographs, and the StreamDiffusion framework introduces the Stream Batch technique to beat high-latency decision in interactive diffusion frameworks.

Within the Stream Batch technique, the sequential denoising operations are restructured into batched processes with every batch equivalent to a predetermined variety of denoising steps, and the variety of these denoising steps is set by the scale of every batch. Due to the method, every aspect within the batch can proceed one step additional utilizing the only passthrough UNet within the denoising sequence. By implementing the stream batch technique iteratively, the enter photographs encoded at timestep “t” could be reworked into their respective picture to picture outcomes at timestep “t+n”, thus streamlining the denoising course of.

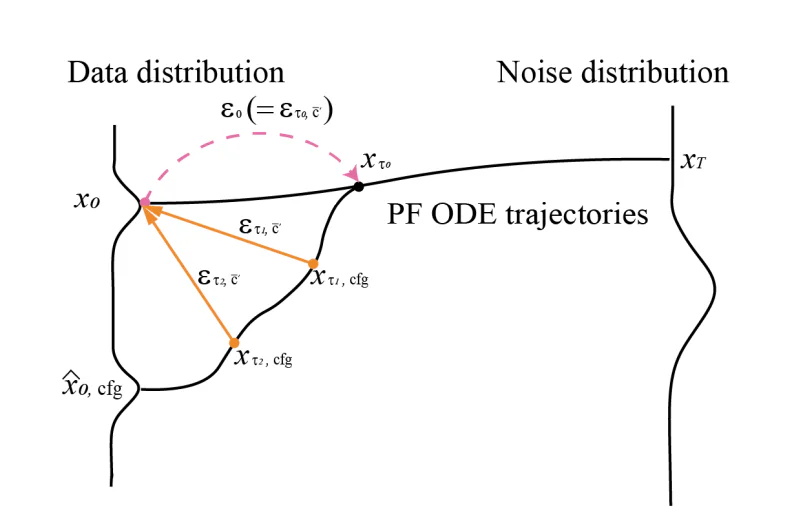

Residual Classifier Free Steerage

CFG or Classifier Free Steerage is an AI algorithm that performs a bunch of vector calculations between the unique conditioning time period and a destructive conditioning or unconditioning time period to reinforce the impact of authentic conditioning. The algorithm strengthens the impact of the immediate although to compute the destructive conditioning residual noise, it’s essential to pair particular person enter latent variables with destructive conditioning embedding adopted up by passing the embeddings via the UNet at reference time.

To deal with this difficulty posed by Classifier Free Steerage algorithm, the StreamDiffusion framework introduces Residual Classifier Free Steerage algorithm with the intention to cut back computational prices for added UNet interference for destructive conditioning embedding. First, the encoded latent enter is transferred to the noise distribution through the use of values decided by the noise scheduler. As soon as the latent consistency mannequin has been applied, the algorithm can predict information distribution, and use the CFG residual noise to generate the following step noise distribution.

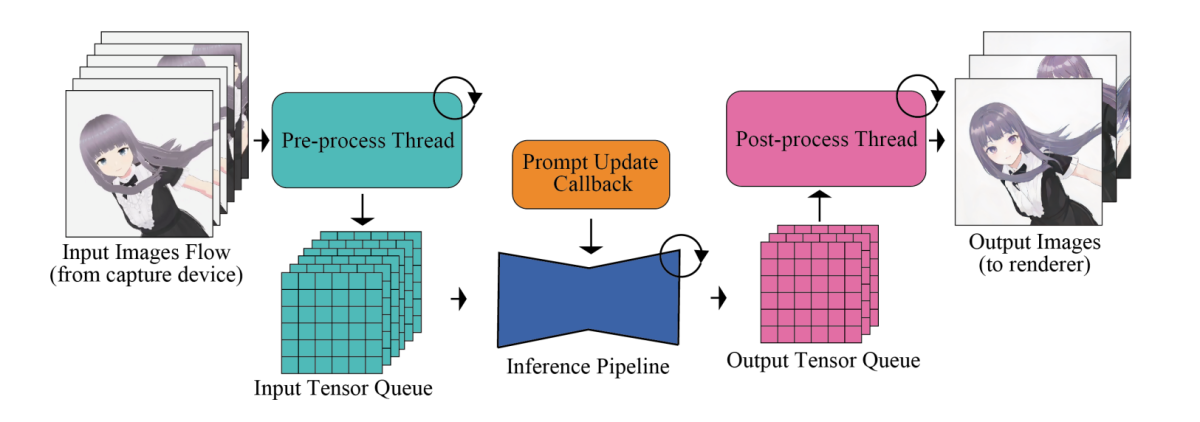

Enter Output Queue

The key difficulty with high-speed picture technology frameworks is their neural community modules together with the UNet and VAE parts. To maximise the effectivity and total output pace, picture technology frameworks transfer processes like pre and put up processing photographs that don’t require extra dealing with by the neural community modules outdoors of the pipeline, put up which they’re processed in parallel. Moreover, when it comes to dealing with the enter picture, particular operations together with conversion of tensor format, resizing enter photographs, and normalization are executed by the pipeline meticulously.

To deal with the disparity in processing frequencies between the mannequin throughput and the human enter, the pipeline integrates an input-output queuing system that permits environment friendly parallelization as demonstrated within the following picture.

The processed enter tensors are first queued methodically for Diffusion fashions, and through every body, the mannequin retrieves the latest tensor from the enter queue, and forwards the tensor to the VAE encoder, thus initiating the picture technology course of. On the similar time, the tensor output from the VAE decoder is fed into the output queue. Lastly, the processed picture information is transmitted to the rendering shopper.

Stochastic Similarity Filter

In eventualities the place the photographs both stay unchanged or present minimal modifications and not using a static atmosphere or with out energetic consumer interplay, enter photographs resembling one another are fed repeatedly into UNet and VAE parts. The repeated feeding results in technology of close to an identical photographs and extra consumption of GPU sources. Moreover, in eventualities involving steady inputs, unmodified enter photographs would possibly floor sometimes. To beat this difficulty and forestall pointless utilization of sources, the StreamDiffusion pipeline employs a Stochastic Similarity Filter element in its pipeline. The Stochastic Similarity Filter first calculates the cosine similarity between the reference picture and the enter picture, and makes use of the cosine similarity rating to calculate the chance of skipping the following UNet and VAE processes.

On the idea of the chance rating, the pipeline decides whether or not subsequent processes like VAE Encoding, VAE Decoding, and U-Web needs to be skipped or not. If these processes aren’t skipped, the pipeline saves the enter picture at the moment, and concurrently updates the reference picture for use sooner or later. This probability-based skipping mechanism permits the StreamDiffusion pipeline to totally function in dynamic eventualities with low inter-frame similarity whereas in static eventualities, the pipeline operates with increased inter-frame similarity. The method helps in conserving the computational sources and likewise ensures optimum GPU utilization primarily based on the similarity of the enter photographs.

Pre-Computation

The UNet structure wants each conditioning embeddings in addition to enter latent variables. Historically, the conditioning embeddings are derived from immediate embeddings that stay fixed throughout frames. To optimize the derivation from immediate embeddings, the StreamDiffusion pipeline pre-computed these immediate embeddings and shops them in a cache, that are then known as in streaming or interactive mode. Inside the UNet framework, the Key-Worth pair is computed on the idea of every body’s pre-computed immediate embedding, and with slight modifications within the U-Web, these Key-Worth pairs could be reused.

Mannequin Acceleration and Tiny AutoEncoder

The StreamDiffusion pipeline employs TensorRT, an optimization toolkit from Nvidia for deep studying interfaces, to assemble the VAE and UNet engines, to speed up the inference pace. To attain this, the TensorRT element performs quite a few optimizations on neural networks which might be designed to spice up effectivity and improve throughput for deep studying frameworks and purposes.

To optimize pace, the StreamDiffusion configures the framework to make use of mounted enter dimensions and static batch sizes to make sure optimum reminiscence allocation and computational graphs for a selected enter dimension in an try to realize quicker processing occasions.

The above determine offers an summary of the inference pipeline. The core diffusion pipeline homes the UNet and VAE parts. The pipeline incorporates a denoising batch, sampled noise cache, pre-computed immediate embedding cache, and scheduler values cache to reinforce the pace, and the flexibility of the pipeline to generate photographs in real-time. The Stochastic Similarity Filter or SSF is deployed to optimize GPU utilization, and likewise to gate the move of the diffusion mannequin dynamically.

StreamDiffusion : Experiments and Outcomes

To guage its capabilities, the StreamDiffusion pipeline is applied on LCM and SD-turbo frameworks. The TensorRT by NVIDIA is used because the mannequin accelerator, and to allow light-weight effectivity VAE, the pipeline employs the TAESD element. Let’s now take a look at how the StreamDiffusion pipeline performs compared in opposition to present cutting-edge frameworks.

Quantitative Analysis

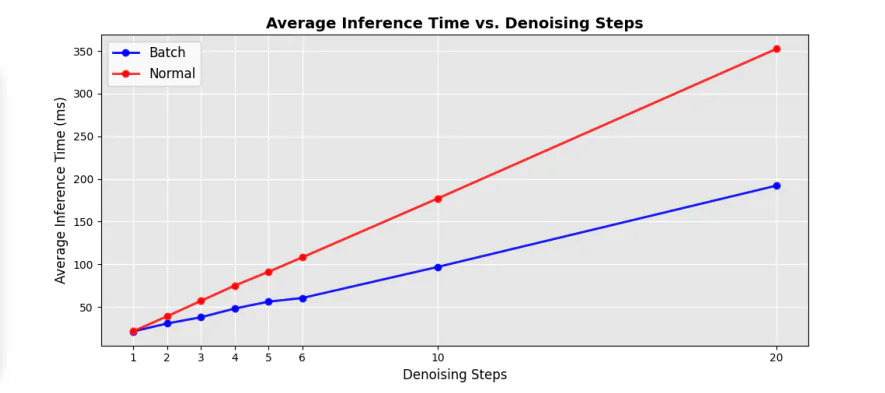

The next determine demonstrates the effectivity comparability between the unique sequential UNet and the denoising batch parts within the pipeline, and as it may be seen, implementing the denoising batch method helps in decreasing the processing time considerably by virtually 50% when in comparison with the normal UNet loops at sequential denoising steps.

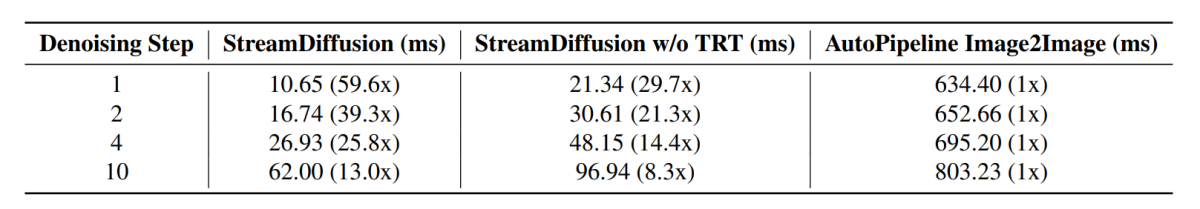

Moreover, the typical inference time at completely different denoising steps additionally witnesses a considerable increase with completely different speedup elements compared in opposition to present cutting-edge pipelines, and the outcomes are demonstrated within the following picture.

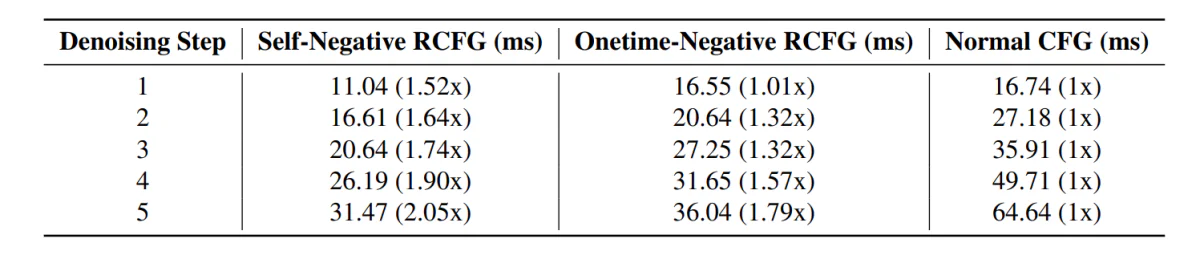

Transferring alongside, the StreamDiffusion pipeline with the RCFG element demonstrates much less inference time compared in opposition to pipelines together with the normal CFG element.

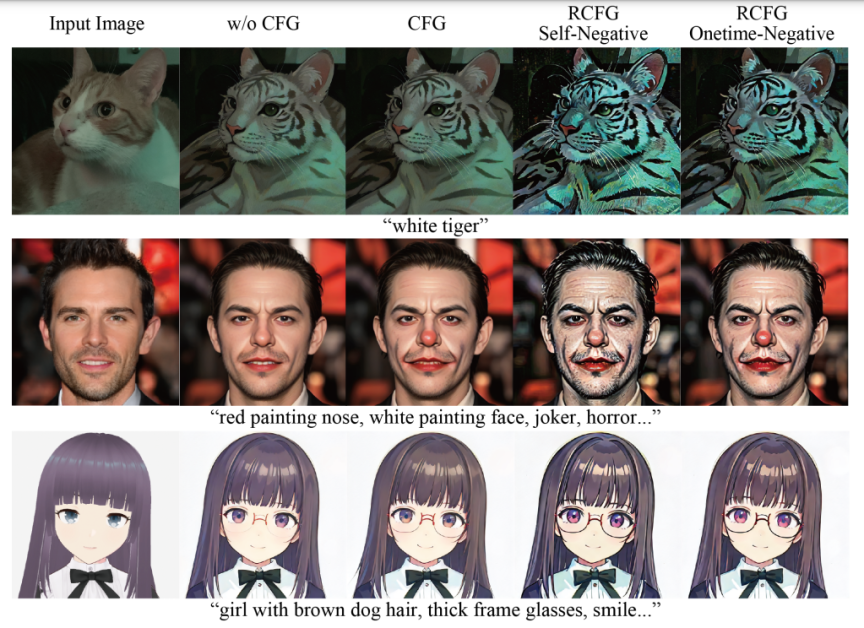

Moreover, the impression of utilizing the RCFG element its evident within the following photographs when in comparison with utilizing the CFG element.

As it may be seen, the usage of CFG intesifies the impression of the textual immediate in picture technology, and the picture resembles the enter prompts much more when in comparison with the photographs generated by the pipeline with out utilizing the CFG element. The outcomes enhance additional with the usage of the RCFG element because the affect of the prompts on the generated photographs is kind of important when in comparison with the unique CFG element.

Last Ideas

On this article, we’ve got talked about StreamDiffusion, a real-time diffusion pipeline developed for producing interactive and sensible photographs, and deal with the present limitations posed by diffusion-based frameworks on duties involving steady enter. StreamDiffusion is an easy and novel method that goals to remodel the sequential noising of the unique picture into batch denoising. StreamDiffusion goals to allow excessive throughput and fluid streams by eliminating the normal wait and work together method opted by present diffusion-based frameworks. The potential effectivity beneficial properties highlights the potential of StreamDiffusion pipeline for industrial purposes providing high-performance computing and compelling options for generative AI.